End-to-end (E2E) testing is a crucial practice for ensuring the quality and reliability of web applications. It simulates real user scenarios, allowing developers to validate the entire application flow from start to finish. This comprehensive guide explores the intricacies of E2E testing, providing a structured approach to help you create robust and maintainable tests that catch potential issues before they impact your users.

We’ll cover the essential aspects of E2E testing, from selecting the right tools and setting up your testing environment to writing effective test cases and analyzing results. This includes understanding the benefits of E2E testing, choosing appropriate frameworks like Selenium, Cypress, or Playwright, and implementing best practices for test maintenance and debugging. Whether you’re a seasoned developer or new to the concept, this guide will equip you with the knowledge and skills needed to master E2E testing.

Introduction to End-to-End Testing for Web Applications

End-to-end (E2E) testing is a crucial phase in the software development lifecycle, simulating real user scenarios to validate an application’s functionality from start to finish. This comprehensive approach ensures that all components of a web application, from the user interface (UI) to the database, work together seamlessly. E2E tests provide confidence in the overall system’s behavior and help to identify potential issues before they impact end users.

E2E testing plays a vital role in verifying the complete workflow of a web application. By mimicking user interactions, it validates that the application behaves as expected across all integrated components. This testing method focuses on the entire system, not just individual parts.

Purpose of End-to-End Testing

The primary purpose of E2E testing is to verify the entire application flow. This includes:

- Validating the complete user journey: E2E tests ensure that users can successfully navigate through all critical paths of the application, from login to logout, and everything in between.

- Confirming the integration of all system components: E2E testing validates that different parts of the application, such as the front-end, back-end, database, and third-party services, work together correctly.

- Identifying system-level defects: E2E tests are designed to detect defects that may not be found in unit or integration tests, such as performance bottlenecks or data inconsistencies.

- Ensuring the application meets business requirements: By simulating real-world user scenarios, E2E tests help confirm that the application meets the specified business requirements and provides the intended functionality.

Benefits of End-to-End Testing Compared to Other Testing Types

Compared to other testing types, E2E testing offers several distinct advantages.

- Comprehensive System Validation: Unlike unit tests, which focus on individual components, and integration tests, which validate interactions between components, E2E tests validate the entire system.

- Real-World Scenario Simulation: E2E tests simulate real-world user scenarios, providing a more accurate assessment of the application’s behavior than tests that only focus on isolated components.

- Early Defect Detection: E2E tests can detect system-level defects early in the development cycle, reducing the cost and effort required to fix them.

- Increased Confidence in Application Quality: By validating the complete workflow, E2E tests increase confidence in the application’s overall quality and reliability.

Scenarios Where End-to-End Testing is Most Crucial for Web Applications

E2E testing is particularly crucial in several scenarios:

- Complex Workflows: For web applications with complex workflows, such as e-commerce platforms or financial applications, E2E testing is essential to ensure that all steps in the workflow function correctly.

- Integration with Third-Party Services: When a web application integrates with third-party services, such as payment gateways or social media platforms, E2E testing is crucial to validate the integration and ensure that data is exchanged correctly.

- Critical User Journeys: For critical user journeys, such as user registration, login, and checkout, E2E testing is essential to ensure that these core functionalities work flawlessly.

- Applications with High User Volume: For web applications with a high volume of users, E2E tests can surface slow pages and timeouts on critical paths, although dedicated load tests are still needed to confirm that the application can handle the load.

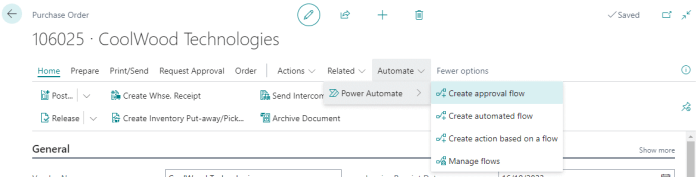

Selecting the Right Tools and Technologies

Choosing the right tools and technologies is crucial for successful end-to-end (E2E) testing of web applications. The selection impacts test execution speed, maintainability, and the overall effectiveness of the testing process. A well-chosen tool can streamline test creation, debugging, and reporting, while a poorly chosen one can lead to frustration, wasted time, and unreliable tests.

This section covers popular E2E testing tools, compares their features, and provides guidance on selecting the best tool for your specific project needs.

Popular Tools for E2E Testing

Several tools have gained popularity in the realm of E2E testing, each with its own strengths and weaknesses. Understanding these characteristics is key to making an informed decision.

- Selenium: Selenium is a widely adopted, open-source framework for automating web browsers. It supports multiple programming languages, including Java, Python, C#, and JavaScript. Its flexibility and large community make it a versatile choice. However, Selenium tests can be slower and require more setup and maintenance compared to some newer tools.

- Cypress: Cypress is a modern, JavaScript-based E2E testing framework designed for ease of use and developer-friendly features. It offers fast test execution, automatic waiting, and excellent debugging capabilities. Cypress has limited language support (JavaScript and TypeScript only) and is primarily focused on front-end testing.

- Playwright: Developed by Microsoft, Playwright is a cross-browser E2E testing framework that supports multiple languages, including JavaScript, TypeScript, Python, Java, and .NET. It offers fast and reliable test execution, auto-waiting, and built-in features for handling common testing scenarios. Playwright is known for its robustness and ability to handle complex web applications.

- TestCafe: TestCafe is a free and open-source Node.js end-to-end testing framework. It offers a simple API, cross-browser testing, and no external dependencies. It supports JavaScript and TypeScript and is easy to set up and use, making it a good option for beginners.

Comparing Testing Frameworks

A comparative analysis of popular testing frameworks highlights their key differences, helping in selecting the best fit for your project. The following table provides a structured comparison of Selenium, Cypress, and Playwright.

| Tool | Language Support | Key Features |
|---|---|---|
| Selenium | Java, Python, C#, JavaScript, Ruby, etc. | Mature framework, large community, supports multiple browsers, supports testing on mobile devices, requires more setup and maintenance. |
| Cypress | JavaScript, TypeScript | Fast test execution, excellent debugging tools, automatic waiting, time travel feature, narrower browser support (Chrome-family browsers and Firefox, with experimental WebKit support). |
| Playwright | JavaScript, TypeScript, Python, Java, .NET | Fast and reliable test execution, cross-browser support, auto-waiting, built-in assertions, easy to use, robust. |

Factors for Choosing a Testing Tool

Several factors should be considered when choosing an E2E testing tool. These factors will influence the effectiveness, maintainability, and overall success of your testing efforts.

- Programming Language Proficiency: The primary programming languages used by your development team significantly impact the choice. Selecting a tool that supports the team’s existing skill set can reduce the learning curve and accelerate test creation. For example, if your team primarily uses JavaScript, Cypress or Playwright (with JavaScript support) would be suitable choices.

- Browser and Platform Compatibility: Consider the browsers and operating systems your application needs to support. Ensure the testing tool supports these platforms. Playwright, with its cross-browser capabilities, excels in this area.

- Test Execution Speed: Faster test execution allows for quicker feedback and reduces the time spent on debugging. Cypress and Playwright are known for their speed, making them ideal for projects with frequent releases.

- Debugging Capabilities: Effective debugging tools are essential for identifying and resolving test failures. Look for tools that provide detailed error messages, screenshots, and video recordings. Cypress offers excellent debugging features.

- Ease of Use and Learning Curve: The simplicity of the tool’s API and the availability of documentation and community support can impact the learning curve and the speed at which tests can be written. TestCafe and Cypress are known for their ease of use.

- Community Support and Documentation: A strong community and comprehensive documentation are invaluable resources for troubleshooting issues and learning best practices. Selenium has a large and active community.

- Project Requirements: Consider the specific needs of your project. For instance, if you need to test complex interactions or cross-browser compatibility, Playwright or Selenium might be better choices.

Setting Up Your Testing Environment

Setting up a robust testing environment is crucial for the success of end-to-end (E2E) tests. A well-configured environment ensures tests run consistently and accurately, providing reliable feedback on the application’s functionality. This section outlines the necessary steps, tool configuration, and CI/CD pipeline setup to create a comprehensive testing environment.

Preparing the Environment for End-to-End Testing

Before diving into specific tools, several foundational elements must be considered to create a suitable testing environment. These elements contribute to test stability, maintainability, and overall efficiency.

- Selecting a Dedicated Testing Environment: Avoid running E2E tests against production environments. Use dedicated environments like staging or pre-production environments that closely mirror production. This minimizes the risk of data corruption and ensures tests reflect real-world conditions.

- Database Setup and Management: Ensure the testing environment has a database mirroring the production database schema. Implement data seeding and cleanup strategies to maintain a consistent state between test runs. Consider using database migration tools to manage schema changes.

- Environment Configuration Management: Define and manage environment-specific configurations (URLs, API keys, database connection strings) separately from the test code. Use environment variables or configuration files to switch between environments easily.

- Network Considerations: Ensure the testing environment has reliable network connectivity. Consider the impact of network latency and bandwidth on test execution. In some cases, you may need to simulate network conditions to test application behavior under various network constraints.

- Browser and Device Compatibility: Define the browsers and devices to be tested. Install the necessary browsers and their corresponding drivers (e.g., ChromeDriver for Chrome, GeckoDriver for Firefox). If testing on mobile devices, set up emulators or simulators, or use cloud-based device farms.
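The configuration-management point above can be sketched in Python as follows. This is a minimal illustration, not a prescribed layout: the variable names `E2E_BASE_URL`, `E2E_API_KEY`, and `E2E_BROWSER` and the staging URL are assumptions made for the example.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class E2EConfig:
    """Environment-specific settings, kept out of the test code itself."""
    base_url: str
    api_key: str
    browser: str


def load_config(env=None):
    """Build the config from environment variables (names are hypothetical)."""
    env = os.environ if env is None else env
    # Fail fast when the target is missing instead of testing the wrong system.
    base_url = env.get("E2E_BASE_URL")
    if not base_url:
        raise RuntimeError("E2E_BASE_URL is not set")
    return E2EConfig(
        base_url=base_url.rstrip("/"),
        api_key=env.get("E2E_API_KEY", ""),
        browser=env.get("E2E_BROWSER", "chrome"),
    )


# Passing a dict stands in for the real process environment in this demo.
config = load_config({"E2E_BASE_URL": "https://staging.example.com/"})
print(config.base_url, config.browser)
```

Switching from staging to another environment then only requires changing the variables, not the tests.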

Installing and Configuring the Chosen Testing Tool

Once the environment is prepared, installing and configuring the chosen testing tool is the next step. The process varies depending on the tool selected (e.g., Selenium, Cypress, Playwright). This section will provide a general example using Selenium with Python.

For example, to install Selenium in Python, use pip:

pip install selenium

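Next, make a browser driver (e.g., ChromeDriver) available. Selenium 4.6 and later can resolve a matching driver automatically through Selenium Manager; if you manage the driver yourself, download it and place it in a location accessible by your system’s PATH environment variable. Then, initialize the WebDriver in your test code: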

    from selenium import webdriver
    from selenium.webdriver.chrome.service import Service

    # Initialize the ChromeDriver service
    service = Service(executable_path='/path/to/chromedriver')

    # Initialize the WebDriver
    driver = webdriver.Chrome(service=service)

    # Navigate to a website
    driver.get("https://www.example.com")

    # Perform actions and assertions
    print(driver.title)

    # Close the browser
    driver.quit()

For other tools like Cypress, installation is typically done via npm (Node Package Manager):

npm install cypress --save-dev

Cypress will create a `cypress.config.js` file where you can configure your test environment. For Playwright, the installation also utilizes npm:

npm install -D @playwright/test

npx playwright install

The second command downloads the browser binaries Playwright needs, which keeps setup straightforward.

Configuring the testing tool involves setting up test frameworks, specifying browser configurations, and defining test execution parameters. These configurations depend on the chosen testing tool and its test runner (e.g., pytest for Python with Selenium, the bundled Mocha-based runner in Cypress, or the Playwright Test runner).

Creating a CI/CD Pipeline for Automated E2E Testing

Automating E2E tests as part of a Continuous Integration/Continuous Deployment (CI/CD) pipeline is essential for rapid feedback and efficient software development. The following steps outline how to set up a basic CI/CD pipeline.

- Choose a CI/CD Tool: Select a CI/CD platform like Jenkins, GitLab CI, GitHub Actions, or CircleCI. The choice depends on your existing infrastructure and team preferences.

- Define the Build and Test Steps: Create a configuration file (e.g., `Jenkinsfile`, `.gitlab-ci.yml`, `.github/workflows/*.yml`) that defines the steps of your CI/CD pipeline. This typically includes:

  - Checkout Code: Clone the repository from your version control system (e.g., Git).

  - Install Dependencies: Install the necessary dependencies for your testing tool and application. For Python projects, this involves using `pip install -r requirements.txt`. For JavaScript projects, this means running `npm install` or `yarn install`.

  - Run Tests: Execute the E2E tests using the testing tool’s command-line interface (e.g., `pytest`, `cypress run`, `npx playwright test`).

  - Collect Test Results: Collect the test results in a format that can be parsed by the CI/CD tool (e.g., JUnit XML).

  - Report Results: Display the test results in the CI/CD platform’s dashboard.

- Integrate with Version Control: Configure the CI/CD pipeline to trigger automatically on code changes (e.g., pull requests, commits to specific branches). This ensures tests are run every time code is updated.

- Configure Environment Variables: Set up environment variables within the CI/CD platform to manage secrets, API keys, and environment-specific configurations. Avoid hardcoding sensitive information in your test code.

- Implement Notifications: Configure the CI/CD pipeline to send notifications (e.g., email, Slack) upon test completion, including test results and any failures.

- Example: GitHub Actions Workflow (YAML):

    name: E2E Tests

    on:
      push:
        branches:
          - main
      pull_request:
        branches:
          - main

    jobs:
      e2e-tests:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v4

          - name: Set up Python
            uses: actions/setup-python@v5
            with:
              python-version: '3.x'

          - name: Install dependencies
            run: |
              python -m pip install --upgrade pip
              pip install -r requirements.txt

          - name: Run E2E tests
            run: pytest --junitxml=reports/junit.xml

          - name: Upload test results
            uses: actions/upload-artifact@v4
            if: always()
            with:
              name: pytest-results
              path: ./reports/

This GitHub Actions workflow checks out the code, sets up Python, installs dependencies, runs the tests using pytest (writing a JUnit XML report to `reports/`), and uploads the test results as an artifact even when tests fail.
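The "Collect Test Results" step usually comes down to reading a JUnit XML report. The sketch below shows one way to summarize such a report with Python's standard library. The sample report and the function name `summarize_junit` are illustrative assumptions, and real reports may carry extra attributes that this sketch ignores.

```python
import xml.etree.ElementTree as ET


def summarize_junit(xml_text):
    """Return total, failed, and skipped test counts from a JUnit XML report."""
    root = ET.fromstring(xml_text.strip())
    # Reports use either <testsuites> as the root or a single <testsuite>.
    suites = [root] if root.tag == "testsuite" else root.findall("testsuite")
    summary = {"tests": 0, "failed": 0, "skipped": 0}
    for suite in suites:
        for case in suite.iter("testcase"):
            summary["tests"] += 1
            if case.find("failure") is not None or case.find("error") is not None:
                summary["failed"] += 1
            elif case.find("skipped") is not None:
                summary["skipped"] += 1
    return summary


# Hypothetical report content, shaped like what `pytest --junitxml` produces.
SAMPLE_REPORT = """
<testsuites>
  <testsuite name="e2e">
    <testcase classname="checkout" name="test_add_to_cart"/>
    <testcase classname="checkout" name="test_apply_discount">
      <failure message="total mismatch">expected 90, got 100</failure>
    </testcase>
    <testcase classname="login" name="test_sso"><skipped/></testcase>
  </testsuite>
</testsuites>
"""

print(summarize_junit(SAMPLE_REPORT))
# {'tests': 3, 'failed': 1, 'skipped': 1}
```

Most CI/CD platforms parse this format natively, so a script like this is mainly useful for custom notifications or quality gates.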

Writing Effective Test Cases

Effective test cases are the cornerstone of reliable end-to-end (E2E) testing. They provide a structured way to verify that a web application functions as expected, ensuring a seamless user experience and preventing critical bugs from reaching production. Designing well-crafted test cases involves a systematic approach, focusing on key user flows, functionalities, and potential edge cases. This section delves into the art of writing effective test cases, covering design principles, organization strategies, and considerations for different user roles.

Designing Test Cases for Key User Flows and Functionalities

The primary goal of E2E tests is to validate the application’s core functionalities from the user’s perspective. This involves identifying the most critical user flows and designing test cases that simulate real-world interactions. This approach ensures that the most important aspects of the application are thoroughly tested.

To illustrate, consider a shopping cart feature. Effective test cases should cover various scenarios, ensuring the cart functions correctly under different circumstances.

The following bullet points showcase example test cases for a shopping cart feature:

- Adding Items to the Cart: Verify that users can successfully add different products to their shopping cart. This includes checking that the product quantity is correctly reflected, the price is accurate, and the cart total updates accordingly.

- Removing Items from the Cart: Test the ability to remove individual items from the cart. Ensure the cart total and item count are updated correctly after removal. Also, test removing the last item and verifying the cart is then empty.

- Updating Item Quantities: Check that users can update the quantity of items in their cart. Confirm that the cart total and individual item prices are recalculated accurately when the quantity changes.

- Applying Discounts and Promotions: Test the application of discounts and promotional codes. Verify that the correct discount is applied to the cart total and that the discounted price is displayed accurately.

- Proceeding to Checkout: Validate the process of proceeding to checkout. Ensure the user is correctly redirected to the checkout page and that the cart data is accurately transferred.

- Empty Cart Behavior: Test the application’s behavior when the cart is empty. Verify that an appropriate message or display is shown to the user, and that attempting to proceed to checkout with an empty cart is handled gracefully.

- Cart Persistence: Test that the shopping cart persists across different sessions (e.g., after the user closes and reopens the browser). This involves verifying that the items and quantities in the cart are preserved.
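A few of the cart scenarios above can be written as independent test cases, as in the sketch below. To stay runnable without a browser, it uses `FakeCart`, a hypothetical in-memory stand-in. In a real E2E suite each method call would instead drive the application's UI, but the structure (fresh state per test, one behavior per test, explicit expected totals) would look much the same.

```python
import unittest


class FakeCart:
    """In-memory stand-in for the cart UI; a real suite would drive a browser."""

    def __init__(self):
        self.items = {}  # product name -> (unit price, quantity)

    def add(self, name, price, quantity=1):
        _, current = self.items.get(name, (price, 0))
        self.items[name] = (price, current + quantity)

    def remove(self, name):
        self.items.pop(name, None)

    def total(self, discount_percent=0):
        subtotal = sum(price * qty for price, qty in self.items.values())
        return subtotal * (100 - discount_percent) / 100


class ShoppingCartFlow(unittest.TestCase):
    def setUp(self):
        # Each test starts from an empty cart so cases stay independent.
        self.cart = FakeCart()

    def test_adding_items_updates_total(self):
        self.cart.add("Laptop", 1200)
        self.cart.add("Mouse", 25, quantity=2)
        self.assertEqual(self.cart.total(), 1250)

    def test_removing_last_item_empties_cart(self):
        self.cart.add("Mouse", 25)
        self.cart.remove("Mouse")
        self.assertEqual(self.cart.items, {})
        self.assertEqual(self.cart.total(), 0)

    def test_discount_is_applied_to_total(self):
        self.cart.add("Laptop", 1200)
        self.assertEqual(self.cart.total(discount_percent=10), 1080)
```

Running `python -m unittest` in the directory containing this file would execute the three cases.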

Organizing Test Cases Based on User Stories or Features

Organizing test cases effectively is crucial for maintaining testability and readability. Grouping test cases based on user stories or features allows for better traceability and easier identification of the areas of the application that are being tested. This approach promotes a structured and maintainable testing process.

Using user stories as a basis for test case organization offers several benefits. Each user story describes a specific feature or functionality from the user’s point of view. By creating test cases that directly correspond to these user stories, the testing process becomes more focused and aligned with the application’s requirements. This organization makes it easier to track test coverage and identify any gaps in testing.

Writing Test Cases for Different User Roles and Permissions

Web applications often have multiple user roles, each with different permissions and access levels. It is essential to design test cases that cover these different roles to ensure that users can only access the functionalities and data they are authorized to use. This includes testing scenarios where users try to access features or data they should not have access to.

To illustrate, consider a web application with the following user roles:

- Administrator: Has full access to all features and data.

- Editor: Can create, edit, and delete content.

- Viewer: Can only view content.

Test cases should be designed to verify the following:

- Administrator Tests: Verify that the administrator can access all features, including user management, content creation, and system settings.

- Editor Tests: Confirm that the editor can create, edit, and delete content, but cannot access administrator-only features. Test the editor’s ability to publish content and manage their own profile.

- Viewer Tests: Validate that the viewer can only view content and cannot access any editing or administrative features. Test their ability to browse content and search for information.

- Permission Enforcement Tests: Create test cases that attempt to access restricted features as different user roles. For example, a viewer attempting to edit content should be denied access. This confirms that the application’s permission system is functioning correctly.
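One way to keep role coverage complete is to generate the test cases from a permission matrix, so every role is checked against every action, including the ones it must be denied. The sketch below assumes the three roles described above. The action names and the `attempt(role, action)` callback are hypothetical; in a real suite the callback would log in as the role and try the action through the UI.

```python
# Expected permissions per role, derived from the role descriptions.
PERMISSIONS = {
    "administrator": {"view", "create", "edit", "delete", "manage_users"},
    "editor": {"view", "create", "edit", "delete"},
    "viewer": {"view"},
}
ALL_ACTIONS = sorted(PERMISSIONS["administrator"])


def permission_cases():
    """Yield (role, action, should_be_allowed) for every role/action pair."""
    for role, allowed in PERMISSIONS.items():
        for action in ALL_ACTIONS:
            yield role, action, action in allowed


def check_permissions(attempt):
    """Run every case through attempt(role, action) -> bool; return mismatches."""
    failures = []
    for role, action, expected in permission_cases():
        actual = attempt(role, action)
        if actual != expected:
            failures.append((role, action, expected, actual))
    return failures


def buggy_app(role, action):
    # Hypothetical defect for the demo: viewers are wrongly allowed to edit.
    return action in PERMISSIONS[role] or (role, action) == ("viewer", "edit")


print(check_permissions(buggy_app))
# [('viewer', 'edit', False, True)]
```

Because denied actions are generated alongside allowed ones, a new role or action added to the matrix is automatically covered in both directions.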

Locating Elements and Interacting with the Web Application

Effectively locating and interacting with elements is crucial for writing robust end-to-end (E2E) tests. These interactions simulate user behavior, ensuring the application functions as expected. This section will cover various methods for element location, along with techniques for interacting with elements and handling dynamic content.

Locating Elements on a Web Page

Accurately identifying elements on a web page is the foundation of any E2E test. Various methods are available, each with its strengths and weaknesses. The choice of method often depends on the structure of the HTML and the specific requirements of the test.

- CSS Selectors: CSS selectors are a powerful and versatile way to locate elements. They leverage the same syntax used for styling web pages with CSS. They are generally preferred for their readability and performance.

- Example: To locate a button with the class “submit-button,” you could use the selector `.submit-button`.

- Example: To locate an input field with the ID “username,” you could use the selector `#username`.

- CSS selectors offer a wide range of capabilities, including selecting elements by class, ID, tag name, attributes, and their relationships to other elements (e.g., parent, child, sibling).

- XPath: XPath (XML Path Language) is a query language for selecting nodes from an XML document, which can also be applied to HTML documents. It provides a more flexible way to locate elements, especially when the HTML structure is complex or when CSS selectors are insufficient. However, XPath expressions can sometimes be less readable and potentially slower than CSS selectors.

- Example: To locate a button with the text “Submit,” you could use the XPath expression `//button[text()='Submit']`.

- Example: To locate the first paragraph within a div with the ID “content,” you could use the XPath expression `//div[@id='content']/p[1]`.

- XPath uses a path-based syntax to navigate the HTML structure, allowing you to specify the exact location of an element within the document.

- Other Methods: Some testing frameworks offer additional methods for locating elements, such as by text content, link text, or accessibility attributes (e.g., “aria-label”). These methods can simplify test writing in specific scenarios.
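To see how such expressions resolve against markup, the sketch below evaluates XPath-style locators with Python's standard-library `xml.etree.ElementTree`. This is an illustration rather than a browser test: ElementTree supports only a subset of XPath, needs well-formed markup, and writes the text predicate as `.='Submit'` instead of `text()='Submit'`. The sample page is an assumption made for the example.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed page fragment used only for this demonstration.
PAGE = """
<html>
  <body>
    <div id="content">
      <p>First paragraph</p>
      <p>Second paragraph</p>
    </div>
    <form>
      <input id="username" type="text"/>
      <button class="submit-button">Submit</button>
    </form>
  </body>
</html>
"""

root = ET.fromstring(PAGE.strip())

# Attribute match: the XPath counterpart of the CSS selector #username.
username = root.find(".//input[@id='username']")

# Exact class-attribute match; unlike CSS .submit-button, this would miss
# an element that carries additional classes.
button_by_class = root.find(".//button[@class='submit-button']")

# Text match: ElementTree's spelling of //button[text()='Submit'].
button_by_text = root.find(".//button[.='Submit']")

# Positional match: the first <p> directly inside the div with id "content".
first_paragraph = root.find(".//div[@id='content']/p[1]")

print(username.get("type"), button_by_text.get("class"), first_paragraph.text)
# text submit-button First paragraph
```

In a browser-based framework the same expressions would be passed to the framework's locator API, which supports the full selector syntax.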

Interacting with Elements

Once elements are located, the next step is to interact with them to simulate user actions. This includes clicking buttons, filling out forms, and navigating between pages. The specific actions available depend on the testing framework being used.

- Clicking Buttons: Clicking buttons is a common interaction. This typically involves locating the button element and then triggering a “click” event.

- Example (using a hypothetical framework):

// Locate the button using a CSS selector

const submitButton = await page.$('.submit-button');

// Click the button

await submitButton.click();

- Filling Forms: Filling out forms involves locating input fields (text boxes, text areas, etc.) and entering text into them.

- Example (using a hypothetical framework):

// Locate the username input field

const usernameInput = await page.$('#username');

// Enter the username

await usernameInput.type('testuser');

- Navigating Between Pages: Navigating between pages often involves clicking links or submitting forms that trigger page loads. The test needs to wait for the new page to load before proceeding.

- Example (using a hypothetical framework):

// Locate the "Sign Up" link

const signUpLink = await page.$('a[href="/signup"]');

// Click the link

await signUpLink.click();

// Wait for the new page to load (e.g., by checking for a specific element)

await page.waitForSelector('#signup-form');

Handling Dynamic Content and Asynchronous Operations

Web applications often use dynamic content and asynchronous operations (e.g., AJAX calls) that load data in the background. E2E tests must handle these situations to ensure accurate and reliable results.

- Waiting for Elements: Before interacting with an element, the test needs to ensure that the element is present in the DOM (Document Object Model). This is often achieved by using “wait” or “expect” commands provided by the testing framework.

- Example (using a hypothetical framework):

// Wait for the success message to appear

await page.waitForSelector('.success-message');

- Handling AJAX Calls: AJAX calls can update the page content without a full page reload. Tests may need to wait for these calls to complete before verifying the results.

- Techniques: Use techniques like waiting for specific network requests to complete (e.g., using `page.waitForResponse()`), or waiting for specific elements to appear after the AJAX call has completed.

- Example (using a hypothetical framework):

// Wait for a response with a specific URL

await page.waitForResponse(response => response.url().includes('/api/data') && response.status() === 200);

- Dealing with Timeouts: Set appropriate timeouts to prevent tests from failing due to slow-loading content or network issues. Adjust the timeout values based on the application’s performance characteristics.

- Mocking and Stubbing: In some cases, it might be necessary to mock or stub external services or APIs to control the data returned and make tests more predictable and isolated. This can be particularly useful when testing edge cases or when external dependencies are unreliable.
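The waiting and timeout techniques above share one mechanism: poll a condition until it holds or a deadline passes. Frameworks ship their own versions (for example, Selenium's `WebDriverWait`), so the helper below is only a sketch of the idea. The names `wait_until` and `WaitTimeout` and the simulated delayed message are assumptions made for the example.

```python
import time


class WaitTimeout(Exception):
    """Raised when a condition does not become truthy before the deadline."""


def wait_until(condition, timeout=5.0, interval=0.1):
    """Poll condition() until it returns a truthy value or timeout seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise WaitTimeout(f"condition not met within {timeout} seconds")
        time.sleep(interval)


# Stand-in for content that appears asynchronously: ready on the third poll.
polls = {"count": 0}


def success_message():
    polls["count"] += 1
    return "Saved" if polls["count"] >= 3 else None


print(wait_until(success_message, timeout=2.0, interval=0.01))
# Saved
```

Polling with a deadline is generally more reliable than a fixed `sleep`, because the test proceeds as soon as the content is ready and fails with a clear error when it never arrives.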

Handling Test Data and Test Data Management

Effective end-to-end (E2E) tests rely heavily on well-managed test data. The quality and availability of this data directly impact the reliability and efficiency of your testing process. Poorly managed data can lead to flaky tests, wasted time, and inaccurate results. Therefore, understanding how to handle and manage test data is crucial for successful E2E testing.

The Importance of Using Test Data in E2E Tests

Test data forms the foundation upon which E2E tests are built. It represents the various states and inputs that your web application will encounter in a real-world scenario. Using appropriate test data ensures that your tests accurately reflect how users interact with your application and that you can validate its functionality under different conditions.

- Test Coverage: Comprehensive test data allows you to cover a wider range of scenarios, including positive and negative test cases. This helps you identify potential bugs and vulnerabilities more effectively. For example, testing a registration form requires data for valid and invalid email addresses, strong and weak passwords, and required and optional fields.

- Realistic Scenarios: Test data should mimic real-world user data as closely as possible. This ensures that your tests accurately reflect how users will interact with your application. For example, if your application deals with financial transactions, your test data should include realistic transaction amounts, dates, and account balances.

- Data-Driven Testing: Using test data allows you to run the same test with different sets of data, significantly increasing the efficiency of your testing process. This is particularly useful for testing data input validation, data processing, and reporting features.

- Reduced Test Flakiness: When tests rely on consistent and predictable data, they are less likely to fail due to unexpected changes in the application’s state. Data management strategies help to minimize these issues.
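Data-driven testing, mentioned above, can be sketched as a single check that runs once per data row. The example keeps the rows in an inline CSV string so it is self-contained. `validate_registration` is a hypothetical stand-in for submitting the real form and reading the result, and the column names are assumptions.

```python
import csv
import io

# Inline CSV keeps the sketch self-contained; a real suite would read a file.
REGISTRATION_CASES = """email,password,expected
user@example.com,Str0ng!Pass,success
user@example.com,,password required
not-an-email,Str0ng!Pass,invalid email
"""


def validate_registration(email, password):
    """Hypothetical stand-in for submitting the form and reading the outcome."""
    if "@" not in email:
        return "invalid email"
    if not password:
        return "password required"
    return "success"


def run_data_driven(cases_csv):
    """Run the same check once per CSV row and return the rows that failed."""
    failures = []
    for row in csv.DictReader(io.StringIO(cases_csv)):
        actual = validate_registration(row["email"], row["password"])
        if actual != row["expected"]:
            failures.append((row, actual))
    return failures


print(run_data_driven(REGISTRATION_CASES))
# []
```

Adding a new scenario then means adding a row of data rather than writing another test function.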

Strategies for Managing Test Data

Managing test data involves several key activities, including creating, updating, and cleaning up data. Implementing robust data management strategies ensures that your tests are reliable, efficient, and maintainable.

- Data Creation: The process of generating the initial test data. This can involve creating data manually, programmatically, or by importing data from external sources.

- Manual Creation: Suitable for small-scale tests or when specific data is required. This can be time-consuming for large datasets.

- Programmatic Creation: Involves writing scripts to generate data dynamically. This is ideal for creating large datasets or when data needs to be varied.

- Data Import: Importing data from CSV files, databases, or other sources. This is useful for using existing data or seeding your test environment with specific data.

- Data Updates: Modifying existing test data to reflect changes in the application or to test specific scenarios.

- In-Test Updates: Updating data within the test script itself. Suitable for simple data modifications.

- Data Refreshing: Regularly refreshing data to ensure its consistency and relevance. This is particularly important in environments where data changes frequently.

- Data Cleanup: Removing or resetting test data after tests have completed. This prevents data pollution and ensures that subsequent tests start with a clean slate.

- Database Cleanup: Deleting or resetting data in databases used by the application.

- File Cleanup: Removing temporary files or directories created during tests.
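The create-then-clean-up cycle can be sketched with a context manager that seeds known rows before a test and removes them afterwards. The example uses an in-memory SQLite database so it runs anywhere. The `users` table and the helper names are assumptions, and a real suite would point at the test environment's database instead.

```python
import sqlite3
from contextlib import contextmanager

SCHEMA = "CREATE TABLE IF NOT EXISTS users (id INTEGER PRIMARY KEY, username TEXT UNIQUE)"


@contextmanager
def seeded_users(conn, usernames):
    """Insert known users before a test and delete them afterwards."""
    conn.execute(SCHEMA)
    conn.executemany("INSERT INTO users (username) VALUES (?)", [(u,) for u in usernames])
    conn.commit()
    try:
        yield
    finally:
        # Cleanup runs even when the test body raises, so later tests start clean.
        conn.executemany("DELETE FROM users WHERE username = ?", [(u,) for u in usernames])
        conn.commit()


def count_users(conn):
    return conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]


conn = sqlite3.connect(":memory:")
with seeded_users(conn, ["john.doe", "jane.doe"]):
    print(count_users(conn))  # 2
print(count_users(conn))  # 0
```

Test runners such as pytest offer fixtures that serve the same purpose. The important property is that cleanup is tied to setup and cannot be skipped by a failing test.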

Examples of Using Different Data Sources in Tests

Test data can be sourced from various locations. The choice of data source depends on the complexity of the data, the frequency of updates, and the overall test environment.

- CSV Files: Simple and easy to use for storing tabular data. Suitable for small to medium-sized datasets.

For example, consider a test for a product catalog. The CSV file might look like this:

product_id,product_name,price,description

101,Laptop,1200,"High-performance laptop"

102,Mouse,25,"Wireless mouse"

Your test script would read this data and use it to verify the product details displayed on the web page.

- Databases: Provide a more robust and scalable solution for managing test data, especially when dealing with complex relationships and large datasets.

For instance, to test a user login feature, you could store user credentials in a database table:

user_id,username,password,email

1,john.doe,password123,john.doe@example.com

2,jane.doe,securePass,jane.doe@example.com

Your test script would query the database to retrieve user credentials and use them to log in.

After the test, the test can also delete the user or reset the database to a known state.

- JSON Files: JSON (JavaScript Object Notation) files are useful for storing structured data in a human-readable format.

Imagine testing an API that returns a list of blog posts. You could store the expected JSON response in a file:

    [
      {
        "id": 1,
        "title": "First Post",
        "author": "John Doe"
      },
      {
        "id": 2,
        "title": "Second Post",
        "author": "Jane Doe"
      }
    ]

Your test would then compare the actual API response with the data in the JSON file to ensure that the API returns the correct data.

- Test Data Builders/Factories: Test data builders or factories are designed to create test data dynamically and consistently. These can simplify test data creation, ensuring that all necessary fields are populated and that data relationships are maintained.

For example, a test data builder for a “User” object might create a user with a random username, a generated password, and a valid email address, ready for use in a registration test.
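
A builder like the one described above might look roughly like this. It is a sketch under assumptions: the class name `UserBuilder`, the field names, and the `example.test` domain are all hypothetical, and a real project would match its own user model.

```javascript
const crypto = require('node:crypto');

// Hypothetical builder for a "User" test object; field names are assumptions.
class UserBuilder {
  constructor() {
    const suffix = crypto.randomBytes(4).toString('hex'); // 8 hex characters
    this.user = {
      username: `user_${suffix}`,
      password: crypto.randomBytes(12).toString('base64url'),
      email: `user_${suffix}@example.test`,
    };
  }

  // Overrides let a test pin only the fields it cares about.
  withUsername(username) { this.user.username = username; return this; }
  withEmail(email) { this.user.email = email; return this; }

  // Return a copy so later builder calls cannot mutate a built user.
  build() { return { ...this.user }; }
}

const randomUser = new UserBuilder().build();
const namedUser = new UserBuilder().withUsername('john.doe').build();
console.log(randomUser.username, namedUser.username);
```

Random defaults keep registration tests from colliding on usernames, while the `with...` methods make the one field under test explicit.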

Implementing Assertions and Verifying Results

Assertions are fundamental to end-to-end (E2E) testing, acting as the critical checkpoints that validate the expected behavior of a web application. They are the mechanisms that allow tests to confirm whether the application functions as designed. Without robust assertions, tests merely perform actions without verifying their correctness, rendering the entire testing process ineffective. This section delves into the purpose of assertions, explores various assertion types, and provides practical examples of how to verify test results.

The Purpose of Assertions in E2E Tests

Assertions serve the crucial purpose of validating the state of a web application at specific points during a test. They are essentially truth statements that must be met for a test to be considered successful. If an assertion fails, the test fails, immediately highlighting a discrepancy between the expected and actual behavior. This failure indicates a potential bug or a problem with the application’s implementation.

The use of assertions allows for:

- Verification of Application State: Assertions confirm whether the application’s elements, data, and functionalities are in the expected state after a series of actions.

- Early Bug Detection: By validating behavior at different stages, assertions help identify defects early in the development lifecycle.

- Test Result Reporting: Assertions provide clear and concise results, indicating whether each test passed or failed, which is crucial for debugging and issue tracking.

- Increased Confidence in Application Stability: Thoroughly implemented assertions increase confidence that the application is functioning as intended.

Different Assertion Types and Their Usage

Various assertion types are available, each designed to validate different aspects of the application’s behavior. Choosing the correct assertion type depends on what needs to be verified. Here are some common assertion types:

- Equality Assertions: These assertions check if two values are equal. They are used to compare expected and actual values, such as text, numbers, or boolean states. For example, you can check that the text of a confirmation message matches the expected text.

- Inequality Assertions: These assertions check if two values are not equal. They are useful for verifying that a value has changed or differs from a disallowed one. For example, you can verify that a user’s input does not match a forbidden word.

- Boolean Assertions: These assertions check if a condition is true or false. They are commonly used to verify the visibility of elements, the enabled/disabled state of buttons, or the presence of certain conditions.

- Existence Assertions: These assertions check if an element exists on the page. They are used to confirm that expected elements are present after specific actions.

- Containment Assertions: These assertions check if a value contains another value, like a string. Useful for verifying text content, or the presence of specific elements within a list.

- Comparison Assertions: These assertions check the relationship between two values (greater than, less than, etc.). Useful for validating numerical data or ranges. For instance, checking if the total price is greater than zero.

Examples of how to use assertions:

Using JavaScript and a hypothetical testing framework (the calls follow Playwright-style syntax and assume an async test in which `page` and `expect` are available):

// Example: Equality Assertion (checking text content)
const messageText = await page.locator('selector').textContent();
expect(messageText).toBe('Expected Text');

// Example: Boolean Assertion (checking element visibility)
const elementIsVisible = await page.locator('selector').isVisible();
expect(elementIsVisible).toBe(true);

// Example: Containment Assertion (checking partial text content)
const descriptionText = await page.locator('selector').textContent();
expect(descriptionText).toContain('Partial Text');

// Example: Comparison Assertion (checking a numerical value)
const totalValue = await page.locator('selector').textContent();
expect(parseFloat(totalValue)).toBeGreaterThan(0);

Verifying Test Results: Expected Behavior and Error Messages

Verifying the results of E2E tests involves more than just asserting the presence or absence of elements. It requires a thorough understanding of the application’s expected behavior and the potential error scenarios. This involves:

- Checking for Expected Behavior: This is the primary goal of E2E testing. Test cases are designed to simulate user interactions and verify that the application responds as expected. This includes checking for successful form submissions, correct data display, and proper navigation between pages. For example, after a successful login, verifying that the user is redirected to the expected dashboard page and that their username is displayed correctly.

- Verifying Error Messages: It is important to test how the application handles errors and validates user inputs. Assertions should verify the presence and content of error messages. This includes verifying that the correct error message is displayed when the user enters invalid credentials or attempts to submit a form with missing required fields. For example, if the user enters an incorrect password, verify that the displayed error message says “Incorrect password.”

- Validating Data Integrity: Ensure that the data displayed and processed by the application is accurate and consistent. This can involve verifying that the data saved in the database matches the data displayed on the front end. For example, after updating a user profile, verify that the updated information is saved in the database.

Illustrative Example: Consider an e-commerce website where a user adds an item to their cart. The following steps illustrate the verification process:

- Action: User clicks the “Add to Cart” button.

- Assertion 1: Verify that a success message (“Item added to cart”) appears.

- Assertion 2: Verify that the cart icon in the header displays the correct number of items (e.g., “1”).

- Assertion 3: Navigate to the cart page and verify that the added item is displayed with the correct details (name, price, quantity).

- Error Scenario: If the user tries to add the same item again and there is a limit, verify that the appropriate error message is displayed (e.g., “Maximum quantity reached”).

By combining these assertions, the E2E test confirms that the “Add to Cart” functionality works as expected, and the application handles errors appropriately.

Debugging and Troubleshooting E2E Tests

Debugging and troubleshooting are crucial aspects of end-to-end (E2E) testing. Failed tests are inevitable, and the ability to quickly identify the root cause and implement a fix is essential for maintaining a robust and reliable testing process. This section provides practical guidance on common issues, troubleshooting techniques, and the effective use of debugging tools.

Common Issues in E2E Testing

Several common issues can lead to test failures in E2E testing. Understanding these issues is the first step towards effective troubleshooting.

- Element Locators: Incorrect or unstable element locators (e.g., XPath expressions, CSS selectors) are a frequent cause of test failures. Changes in the application’s HTML structure can break locators.

- Timing Issues: Asynchronous operations, slow-loading resources, and network latency can cause tests to fail due to timing issues. Tests may attempt to interact with elements before they are fully loaded or available.

- Test Data Problems: Incorrect or inconsistent test data can lead to unexpected behavior and test failures. This includes data validation errors, data dependencies, and incorrect initial states.

- Environment-Related Issues: Differences between testing and production environments (e.g., database configurations, API endpoints) can cause tests to fail. Inconsistent environment configurations are a common source of errors.

- Browser Compatibility: E2E tests may fail due to browser-specific rendering differences or inconsistencies in JavaScript execution. Tests should be run across multiple browsers to ensure compatibility.

- Application Bugs: Underlying bugs in the application code can directly lead to test failures. E2E tests can expose these bugs by simulating user interactions.

- Flaky Tests: Tests that intermittently pass or fail without any code changes are considered flaky. Flaky tests are often caused by timing issues, race conditions, or external dependencies.
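
Timing issues are usually handled by waiting for a condition rather than sleeping for a fixed time. Frameworks such as Playwright and Cypress build this kind of waiting in; the sketch below only shows the underlying idea. The helper name `waitFor` and its default timings are assumptions.

```javascript
// Polls `condition` until it returns a truthy value or the timeout elapses.
// The default `timeoutMs` and `intervalMs` values are illustrative assumptions.
async function waitFor(condition, { timeoutMs = 2000, intervalMs = 50 } = {}) {
  const deadline = Date.now() + timeoutMs;
  while (true) {
    const result = await condition();
    if (result) return result;
    if (Date.now() >= deadline) {
      throw new Error(`Condition not met within ${timeoutMs} ms`);
    }
    await new Promise((resolve) => setTimeout(resolve, intervalMs));
  }
}

// Usage: simulate an element that becomes available after about 150 ms.
const readyAt = Date.now() + 150;
const elementIsReady = () => Date.now() >= readyAt;

waitFor(elementIsReady, { timeoutMs: 1000, intervalMs: 20 })
  .then(() => console.log('element ready'));
```

Unlike a fixed sleep, the wait ends as soon as the condition holds, and a timeout produces a clear error instead of a confusing failure several steps later.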

Troubleshooting Failed E2E Tests

Effective troubleshooting involves a systematic approach to identify the cause of test failures. The following steps provide a structured process for debugging:

- Review the Test Failure Details: Examine the error messages, stack traces, and screenshots (if available). The error messages often provide clues about the problem.

- Reproduce the Issue Locally: Attempt to reproduce the test failure in a local development environment. This allows for easier debugging and isolation of the problem.

- Inspect the Application State: Use browser developer tools to inspect the application’s HTML structure, network requests, and JavaScript console. This can help identify issues with element locators, timing, and data.

- Check the Test Environment: Verify that the test environment (e.g., database, API endpoints) is configured correctly and matches the expected setup.

- Analyze the Test Logs: Review the test logs for any relevant information, such as error messages, warnings, and timing details.

- Simplify the Test Case: Temporarily simplify the test case by removing unnecessary steps or assertions. This can help isolate the root cause of the failure.

- Use Debugging Tools: Utilize debugging tools (described below) to step through the test execution and inspect variables, element states, and other relevant data.

- Consult with Developers: If the issue is related to application code or infrastructure, collaborate with developers to identify and resolve the problem.

- Consider Test Flakiness: If the test intermittently fails, investigate potential causes of flakiness, such as timing issues or race conditions. Implement strategies to mitigate flakiness, such as retries or waiting mechanisms.
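
When retries are used to mitigate flakiness, it helps to record how many attempts a step needed so that the instability stays visible. This is a minimal sketch; `runWithRetries` and the simulated step are hypothetical names, and many test runners provide their own retry settings.

```javascript
// Runs an async step up to `maxAttempts` times and reports how many attempts
// were needed. A pass that needed more than one attempt is a flakiness signal.
async function runWithRetries(step, maxAttempts = 3) {
  const errors = [];
  for (let attempt = 1; attempt <= maxAttempts; attempt += 1) {
    try {
      const value = await step(attempt);
      return { value, attempts: attempt, flaky: attempt > 1, errors };
    } catch (error) {
      errors.push(error.message);
    }
  }
  throw new Error(`Failed after ${maxAttempts} attempts: ${errors.join('; ')}`);
}

// Usage: a hypothetical step that fails on its first attempt only.
const unstableStep = async (attempt) => {
  if (attempt === 1) throw new Error('element not found');
  return 'clicked';
};

runWithRetries(unstableStep).then((outcome) => {
  console.log(outcome.attempts, outcome.flaky); // 2 true
});
```

Retries hide a problem rather than fix it, so the `flaky` flag is worth logging and reviewing alongside the pass/fail result.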

Using Debugging Tools in Your Chosen Testing Framework

Most E2E testing frameworks provide built-in debugging tools or integrations with external debugging tools. These tools enable developers to step through test execution, inspect variables, and analyze the application’s state during test runs.

The following examples illustrate common setups; the exact steps depend on the framework and version you use:

- Using Debugging Tools in Selenium with Java:

When using Selenium with Java and an IDE like IntelliJ IDEA or Eclipse, you can set breakpoints in your test code. The IDE will pause the test execution at the breakpoint, allowing you to inspect variables, step through the code, and examine the browser’s state.

Example: In IntelliJ IDEA, you can set a breakpoint by clicking in the gutter next to the line of code. When the test runs, the execution will pause at the breakpoint, and you can then use the debugger’s controls (e.g., step over, step into, resume) to analyze the test execution. You can inspect variables by hovering over them or using the “Variables” window in the debugger.

You can also evaluate expressions in the debugger console to check values or call methods.

- Debugging with Cypress:

Cypress provides a powerful debugging experience directly within the browser. The Cypress Test Runner allows you to inspect the application’s state at each step of the test.

Example: You can hover over commands in the Cypress Test Runner to see a snapshot of the application’s state at that point in the test. This Time Travel feature lets you move through the test execution step by step, inspecting the application’s state at each command. When tests run through `cypress run`, Cypress also captures screenshots on failure and can record videos when video recording is enabled.

You can also use the `cy.pause()` command to pause the test execution so that you can inspect the page with the browser’s developer tools. Cypress Cloud (formerly the Cypress Dashboard) provides detailed information about recorded test runs, including error messages, screenshots, and videos, to aid in debugging.

- Debugging with Playwright:

Playwright offers excellent debugging capabilities. It allows you to step through your tests, inspect variables, and interact with the browser during test execution.

Example: You can call `await page.pause()` to pause the test execution at a specific point. When the test runs in headed mode, this opens the Playwright Inspector alongside the browser window showing the current state of the page. You can then step through the remaining actions or use the browser’s developer tools to inspect the DOM, network requests, and console logs. Playwright also integrates with IDEs like VS Code, allowing you to set breakpoints in your test code and step through the execution.

The Playwright Inspector provides a graphical interface for stepping through the test, highlighting elements, and trying out locators. Network requests from a recorded run can be reviewed in the Playwright Trace Viewer.

Reporting and Analyzing Test Results

Generating and analyzing reports from end-to-end (E2E) tests is crucial for understanding the health of a web application, identifying areas for improvement, and making informed decisions about the development process. Comprehensive reporting provides valuable insights into test execution, failure rates, and performance metrics, allowing teams to quickly address issues and maintain a high-quality user experience.

Importance of Generating Reports

Generating reports from E2E tests offers numerous benefits. These reports provide a centralized view of the testing process, making it easier to track progress, identify trends, and pinpoint problem areas.

- Provides a Summary of Test Execution: Reports offer a clear overview of which tests passed, failed, or were skipped. This summary allows for a quick assessment of the application’s stability and functionality.

- Facilitates Issue Identification: By highlighting failed tests and associated error messages, reports help developers and testers quickly identify and address bugs. They provide crucial information needed for debugging and troubleshooting.

- Enables Trend Analysis: Tracking test results over time allows teams to identify trends, such as a decreasing number of passing tests, which could indicate a regression or a growing problem area within the application.

- Supports Stakeholder Communication: Reports provide a clear and concise way to communicate testing progress and application health to stakeholders, including project managers, product owners, and business analysts.

- Drives Continuous Improvement: Analyzing reports helps identify areas for improvement in both the application code and the testing process itself. This includes optimizing test cases, improving test coverage, and refining the development workflow.

Generating Reports that Provide Insights

Several tools and techniques can be used to generate insightful reports from E2E tests. The goal is to create reports that are easy to understand, visually appealing, and provide actionable information.

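- Test Framework-Specific Reporting: Most E2E tooling offers reporting capabilities, either built in or through the test runner. These often include detailed logs, screenshots, and videos of test executions. For example, Playwright Test includes an HTML reporter, Cypress captures screenshots on failure during `cypress run` and can produce HTML reports through a reporter plugin such as Mochawesome, and Selenium suites typically rely on the reports of their test runner (for example, JUnit or TestNG).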

- Custom Reporting with Libraries: Many programming languages offer libraries for generating custom reports. For instance, in Python, the `pytest-html` plugin can be used to generate HTML reports with detailed test results.

- Integration with CI/CD Systems: Integrating test reporting with Continuous Integration/Continuous Deployment (CI/CD) systems, like Jenkins or GitLab CI, allows for automated report generation after each test run. These reports can be easily accessed and reviewed within the CI/CD pipeline.

- Reporting Formats:

- HTML Reports: Provide a user-friendly, interactive view of test results, often including detailed information, screenshots, and videos.

- JSON Reports: Offer a machine-readable format suitable for parsing and integrating with other tools.

- CSV Reports: Useful for data analysis and exporting test results to spreadsheets.

- Report Content:

- Test Summary: Overall pass/fail rate, total tests executed, and execution time.

- Detailed Test Results: For each test, include the test name, status (pass/fail/skipped), execution time, error messages, screenshots, and videos.

- Test Execution Environment: Information about the browser, operating system, and testing environment used.

- Trend Charts: Visualizations of test results over time to identify patterns and trends.
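
As a rough illustration of the report content listed above, the following sketch turns raw results into a summary and a CSV export. The result shape (`name`, `status`, `durationMs`, `error`) is an assumption; real reporters emit their own formats.

```javascript
// Hypothetical raw results, shaped like the detailed test results above.
const results = [
  { name: 'login succeeds', status: 'pass', durationMs: 1200 },
  { name: 'login rejects bad password', status: 'pass', durationMs: 900 },
  { name: 'add to cart', status: 'fail', durationMs: 3100, error: 'element not found' },
  { name: 'checkout', status: 'skipped', durationMs: 0 },
];

function summarize(results) {
  const count = (status) => results.filter((r) => r.status === status).length;
  const executed = count('pass') + count('fail');
  return {
    total: results.length,
    passed: count('pass'),
    failed: count('fail'),
    skipped: count('skipped'),
    // Pass rate over executed tests only, as a percentage with one decimal.
    passRate: executed === 0 ? 0 : Math.round((count('pass') / executed) * 1000) / 10,
    durationMs: results.reduce((sum, r) => sum + r.durationMs, 0),
  };
}

// CSV export for spreadsheets; embedded quotes are doubled.
function toCsv(results) {
  const quote = (value) => `"${String(value ?? '').replace(/"/g, '""')}"`;
  const header = 'name,status,durationMs,error';
  const rows = results.map((r) =>
    [r.name, r.status, r.durationMs, r.error].map(quote).join(','));
  return [header, ...rows].join('\n');
}

const report = { summary: summarize(results), results };
console.log(JSON.stringify(report.summary));
console.log(toCsv(results));
```

Skipped tests are excluded from the pass rate here; whether to count them is a reporting decision each team should make explicitly.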

Analyzing Test Results

Analyzing test results involves a systematic approach to identify areas for improvement and potential issues within the application and the testing process. The goal is to extract meaningful insights from the data and use them to drive positive changes.

- Reviewing the Test Summary: Start by examining the overall pass/fail rate and execution time. A low pass rate or long execution times can indicate significant issues.

- Analyzing Failed Tests: Carefully review the error messages, screenshots, and videos associated with failed tests. This will help pinpoint the root cause of the failures. For example, a test failure due to an “element not found” error suggests an issue with the element locator or the application’s user interface.

- Identifying Recurring Failures: Track which tests fail repeatedly. Recurring failures may indicate a bug in the application or a flaky test that needs to be improved.

- Examining Test Execution Time: Analyze the execution time of each test. Slow-running tests may indicate performance bottlenecks or inefficient test code.

- Identifying Flaky Tests: Flaky tests are those that pass sometimes and fail other times without any changes to the application code or the test itself. Their results are misleading: spurious failures waste investigation time, and teams that learn to ignore them may miss real defects. Address them by improving the test code or the application’s stability.

- Assessing Test Coverage: Evaluate the test coverage to ensure that all critical areas of the application are adequately tested. Identify any gaps in test coverage that need to be addressed. For instance, if the testing team notices that a specific feature is not covered by tests, it can create new tests to cover it.

- Using Data Visualization: Employ charts and graphs to visualize test results and identify trends. For example, a line chart can be used to track the pass rate over time, while a bar chart can be used to compare the performance of different tests.

- Prioritizing Issues: Prioritize identified issues based on their severity and impact on the application. High-priority issues should be addressed first.

- Collaboration and Communication: Share test results and findings with the development team and other stakeholders. This promotes collaboration and ensures that everyone is aware of the application’s health.

- Continuous Improvement: Use the analysis of test results to continuously improve the testing process and the application code. This includes updating test cases, improving test coverage, and addressing performance bottlenecks.
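
Recurring failures and flaky tests can be separated mechanically once results from several runs are available. The sketch below assumes a hypothetical `history` array with one object per run, and it assumes neither the application nor the tests changed between those runs.

```javascript
// Hypothetical history: one entry per run, mapping test name -> status.
// Assumes neither the application nor the tests changed between these runs.
const history = [
  { login: 'pass', search: 'fail', checkout: 'pass' },
  { login: 'pass', search: 'fail', checkout: 'fail' },
  { login: 'pass', search: 'fail', checkout: 'pass' },
];

function classify(history) {
  const names = new Set(history.flatMap((run) => Object.keys(run)));
  const report = {};
  for (const name of names) {
    // Ignore runs in which this test did not execute.
    const statuses = history.map((run) => run[name]).filter(Boolean);
    const failures = statuses.filter((status) => status === 'fail').length;
    let verdict = 'stable';
    if (failures === statuses.length) verdict = 'recurring failure';
    else if (failures > 0) verdict = 'flaky';
    report[name] = { verdict, failureRate: failures / statuses.length };
  }
  return report;
}

const analysis = classify(history);
for (const [name, { verdict, failureRate }] of Object.entries(analysis)) {
  console.log(`${name}: ${verdict} (${(failureRate * 100).toFixed(0)}% failed)`);
}
```

A test that fails in every run points to a real defect or a broken test, while a mixed record points to flakiness; the two call for different follow-up.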

Best Practices for Writing Maintainable E2E Tests

Writing maintainable end-to-end (E2E) tests is crucial for the long-term success of any web application. As applications evolve, tests must adapt to reflect these changes. Well-written tests are easier to understand, modify, and debug, ultimately saving time and resources. Conversely, poorly maintained tests can become a significant liability, leading to false positives, wasted development time, and decreased confidence in the testing process.

This section provides practical tips and strategies to ensure E2E tests remain robust and effective throughout the application lifecycle.

Code Organization and Modularity in E2E Tests

Effective code organization and modularity are fundamental to creating maintainable E2E tests. This involves structuring test code in a logical and reusable manner, reducing redundancy, and improving readability. A well-organized test suite is easier to navigate, understand, and update, making it more resilient to application changes.

- Structure Tests by Feature or Component: Organize tests based on the features or components they validate. This approach makes it easier to locate and modify tests related to specific functionalities. For example, if your application has a user registration feature, group all related tests (successful registration, invalid email format, password requirements) together in a dedicated file or directory. This structure allows for quick identification of tests impacted by changes to a particular feature.

- Use Page Object Model (POM): Implement the Page Object Model (POM) design pattern. POM promotes code reusability and maintainability by creating classes that represent web pages. Each class encapsulates the elements and actions associated with a specific page. When the UI changes, you only need to update the corresponding page object, not every test that uses that page.

For example:

A `LoginPage` class might contain:

- Locators for username and password input fields.

- Methods for entering username and password.

- A method for clicking the login button.

- Create Reusable Helper Functions: Develop helper functions for common tasks, such as logging in, navigating to specific pages, or interacting with UI elements. This reduces code duplication and makes tests more concise and readable. Helper functions can be stored in a separate file and imported into your test files.

- Employ Data-Driven Testing: Utilize data-driven testing to execute the same test with multiple sets of data. This approach reduces the number of tests required and improves test coverage. Data can be stored in external files (e.g., CSV, JSON) or within the test code.

- Implement a Consistent Naming Convention: Establish a consistent naming convention for test files, functions, and variables. This enhances readability and makes it easier for team members to understand the test code. For instance, use descriptive names that clearly indicate the purpose of the test (e.g., `test_user_registration_successful`).

Refactoring and Improving Existing E2E Tests

Refactoring existing E2E tests is an ongoing process that helps improve their quality, maintainability, and efficiency. Regular refactoring ensures tests remain aligned with the evolving application and reduce the risk of test flakiness.

- Identify and Eliminate Code Duplication: Look for repeated code blocks within your tests. Extract these blocks into reusable functions or methods to avoid redundancy. Code duplication makes it harder to maintain tests and increases the risk of errors.

- Simplify Complex Tests: Break down long and complex tests into smaller, more manageable units. This improves readability and makes it easier to identify the root cause of failures. Consider using the “Arrange, Act, Assert” pattern within each test to clearly define the test’s purpose and steps.

The “Arrange, Act, Assert” pattern suggests structuring tests as follows:

- Arrange: Set up the test environment and prepare the necessary data.

- Act: Perform the action or interaction being tested.

- Assert: Verify the expected outcome.

- Update Obsolete Locators: Web applications frequently undergo UI changes. Regularly review and update element locators (e.g., CSS selectors, XPath expressions) to ensure they accurately identify the elements being tested. Obsolete locators are a common cause of test failures.

- Improve Test Coverage: Identify areas of the application that are not adequately covered by E2E tests. Add new tests to address these gaps and increase the overall test coverage. A higher test coverage helps ensure that changes to the application do not introduce regressions.

- Review and Refactor Test Data: Ensure that the test data used in E2E tests is relevant, realistic, and well-managed. Avoid hardcoding test data directly in the test code. Instead, use external data sources or test data factories to generate test data dynamically.

- Automate Test Execution and Analysis: Integrate your E2E tests into a continuous integration/continuous delivery (CI/CD) pipeline to automate test execution. Analyze test results regularly to identify trends, patterns, and areas for improvement. Automated execution provides fast feedback on every change and ensures tests run consistently.

Outcome Summary

In conclusion, mastering end-to-end testing is vital for delivering high-quality web applications. By understanding the tools, techniques, and best practices outlined in this guide, you can create a robust testing strategy that ensures your application functions as expected. Remember to prioritize clear test case design, efficient element location, and comprehensive result analysis to maximize the value of your E2E tests.

Embrace these principles, and you’ll be well-equipped to build reliable and user-friendly web applications.

FAQ Insights

What is the difference between E2E testing and unit testing?

Unit testing focuses on individual components or functions, while E2E testing verifies the entire application flow, simulating real user interactions across multiple components and systems.

How often should I run E2E tests?

E2E tests should be run frequently, ideally as part of your continuous integration and continuous deployment (CI/CD) pipeline. This allows you to catch issues early and prevent them from reaching production.

What are some common challenges in E2E testing?

Common challenges include flaky tests, long test execution times, and the difficulty of debugging complex scenarios. Proper test design, environment management, and debugging tools are essential to mitigate these challenges.

How do I handle dynamic content in E2E tests?

Use techniques like explicit waits, which pause test execution until a specific element appears or a condition is met. Also, consider using unique identifiers or data attributes for elements that change frequently.

What is test data management, and why is it important?

Test data management involves creating, updating, and cleaning up data used in your tests. It’s crucial for ensuring test isolation, preventing data conflicts, and maintaining consistent test results. Using different data sources, like CSV files or databases, allows you to simulate various scenarios.