Navigating the cloud requires a strategic approach, particularly when it comes to security. Understanding the security best practices for cloud migration is essential, because the shift to the cloud introduces new attack surfaces and requires a re-evaluation of traditional security paradigms. This document examines these practices in detail, equipping organizations with the knowledge to transition to and operate in the cloud securely.

The journey to the cloud, while offering numerous benefits, presents unique security challenges. These include the need for robust data protection, stringent access controls, and proactive monitoring to mitigate risks. This comprehensive analysis delves into each critical aspect of cloud security, offering actionable insights and practical guidance to ensure a secure and compliant cloud migration process.

Planning and Preparation for Cloud Migration

The planning phase is paramount for a secure and successful cloud migration. A well-defined strategy, encompassing thorough security assessments and meticulous preparation, mitigates risks and ensures data integrity throughout the transition. Neglecting this crucial stage can lead to vulnerabilities, data breaches, and operational disruptions.

Importance of a Comprehensive Security Assessment Before Migration

A comprehensive security assessment before migrating to the cloud is a critical step. This assessment proactively identifies potential vulnerabilities and risks inherent in the existing on-premises infrastructure and the planned cloud environment. The process allows for the development of targeted security controls and mitigation strategies, reducing the attack surface and protecting sensitive data. Without a thorough assessment, organizations risk migrating vulnerable systems, which could be exploited by malicious actors.

The assessment provides a baseline for security posture and enables informed decision-making throughout the migration process.

Pre-Migration Security Considerations Checklist

Prior to migrating to the cloud, several security considerations require careful attention. The following checklist provides a structured approach to address these critical aspects. This table organizes the key areas, actions, responsibilities, and status tracking for effective pre-migration security planning.

| Area | Action | Responsibility | Status |
|---|---|---|---|
| Network Security | Analyze network architecture, identify ingress/egress points, and plan for virtual network segmentation in the cloud. | Network Administrator, Security Architect | To Do / In Progress / Completed |
| Identity and Access Management (IAM) | Review existing IAM policies, map users and roles, and define cloud-specific access controls (least privilege). | IAM Administrator, Security Engineer | To Do / In Progress / Completed |
| Data Security | Identify and classify sensitive data, determine encryption requirements, and plan for data loss prevention (DLP) implementation. | Data Security Officer, Compliance Officer | To Do / In Progress / Completed |
| Vulnerability Management | Conduct vulnerability scans of on-premises systems, identify vulnerabilities, and plan remediation steps prior to migration. | Security Analyst, System Administrator | To Do / In Progress / Completed |
| Compliance and Governance | Assess compliance requirements (e.g., GDPR, HIPAA), map controls to cloud services, and define governance policies. | Compliance Officer, Legal Counsel | To Do / In Progress / Completed |
| Incident Response | Review and update incident response plan to include cloud-specific scenarios, and define communication protocols. | Security Manager, Incident Response Team | To Do / In Progress / Completed |
| Logging and Monitoring | Define logging requirements, select monitoring tools, and plan for centralized log aggregation and analysis. | Security Engineer, System Administrator | To Do / In Progress / Completed |
| Security Training | Provide security awareness training to all users and specialized training for IT staff on cloud security best practices. | Security Awareness Officer, HR | To Do / In Progress / Completed |

Identifying and Classifying Sensitive Data Prior to Cloud Migration

Identifying and classifying sensitive data is a foundational step in securing data within the cloud environment. This process involves understanding the types of data stored, the regulatory requirements applicable to that data, and the potential impact of a data breach. The classification process informs the selection of appropriate security controls, such as encryption, access controls, and data loss prevention measures.

This proactive approach minimizes the risk of data exposure and supports compliance with relevant regulations.

The process of identifying and classifying sensitive data involves several key steps:

- Data Discovery: Employing data discovery tools to scan on-premises systems and identify where sensitive data resides. This can involve searching for specific data patterns (e.g., social security numbers, credit card numbers), keywords, or file types.

- Data Inventory: Creating a detailed inventory of all data assets, including their location, owner, and purpose. This inventory serves as a central repository for data-related information.

- Data Classification: Categorizing data based on its sensitivity level (e.g., public, internal, confidential, highly confidential). This classification should align with organizational policies and regulatory requirements. For example, data containing Protected Health Information (PHI) must adhere to HIPAA regulations.

- Data Labeling: Applying labels to data assets to indicate their classification. This can involve tagging files, databases, or other data repositories with appropriate metadata.

- Data Handling Policies: Establishing and enforcing policies that dictate how each data classification level is handled. These policies should address access controls, storage, transmission, and disposal.

By following these steps, organizations can gain a comprehensive understanding of their sensitive data and implement appropriate security measures to protect it during and after cloud migration. For instance, if a company processes credit card information, it is imperative to implement encryption at rest and in transit, and to adhere to PCI DSS compliance requirements.
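The discovery and classification steps can be sketched in a few lines of Python. This is a minimal illustration rather than a replacement for a dedicated discovery tool: the two regular expressions, the `classify_text` helper, and the choice of "internal" as the default label are all assumptions made for the example, and the labels reuse the sample sensitivity levels listed above.

```python
import re

# Illustrative patterns only; real discovery tools use far richer rule sets.
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
CARD_RE = re.compile(r"\b(?:\d[ -]?){13,19}\b")


def luhn_valid(number: str) -> bool:
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(c) for c in number if c.isdigit()]
    checksum = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        checksum += d
    return len(digits) >= 13 and checksum % 10 == 0


def classify_text(text: str) -> str:
    """Assign a sensitivity label based on the patterns found in the text."""
    if any(luhn_valid(m.group()) for m in CARD_RE.finditer(text)):
        return "highly confidential"
    if SSN_RE.search(text):
        return "highly confidential"
    return "internal"  # assumed default label for this sketch


print(classify_text("card 4111 1111 1111 1111 on file"))
print(classify_text("weekly meeting notes"))
```

The Luhn check reduces false positives from arbitrary long digit strings, which matters when a scan covers large volumes of unstructured files.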

Data Security in Transit and at Rest

Securing data during cloud migration is paramount. This involves safeguarding information both while it’s moving to the cloud (in transit) and once it resides within the cloud environment (at rest). Robust security measures are crucial to protect against unauthorized access, data breaches, and compliance violations. This section details methods for encrypting data during transit, strategies for securing data at rest, and a comparison of various encryption algorithms.

Data Encryption in Transit

Data in transit, meaning data actively moving between locations (e.g., from an on-premises environment to a cloud provider), is particularly vulnerable. Interception during transmission can lead to significant security breaches. Employing encryption is a fundamental method to protect data confidentiality and integrity during transit.

Methods for encrypting data during transit include:

- Transport Layer Security (TLS): TLS, the successor to the now-deprecated Secure Sockets Layer (SSL), provides encrypted communication channels. It establishes a secure connection between the client (e.g., a user’s browser) and the server (e.g., a cloud service), using cryptographic algorithms to encrypt the data exchanged. For example, when a user accesses a website using HTTPS (HTTP Secure), TLS encrypts the communication.

- Virtual Private Network (VPN): VPNs create an encrypted tunnel over a public network (like the internet). All data transmitted through the VPN tunnel is encrypted, protecting it from eavesdropping. VPNs are commonly used to connect on-premises networks to cloud environments securely. A company might use a VPN to allow employees to securely access cloud-based applications from remote locations.

- Secure Shell (SSH): SSH provides a secure channel for remote access and data transfer. It encrypts all data transmitted between the client and the server, including commands, files, and other sensitive information. SSH is frequently used for managing servers and transferring files securely.

- Encryption at the Application Layer: Some applications offer built-in encryption capabilities. For example, a database application might encrypt data before sending it over the network. This adds an extra layer of security, even if the underlying transport layer is not fully secure. This can involve encrypting data before sending it to a cloud storage service using the service’s specific encryption APIs.
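As a small illustration of enforcing encrypted transport from client code, the following sketch uses Python's standard `ssl` module to build a context that verifies certificates and refuses protocol versions older than TLS 1.2. The function name `make_client_context` and the choice of TLS 1.2 as the floor are assumptions for the example; an organization's own policy may require a stricter minimum.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """Build a client-side TLS context that verifies certificates and hostnames."""
    # create_default_context() turns on certificate and hostname verification.
    ctx = ssl.create_default_context()
    # Refuse SSLv3, TLS 1.0, and TLS 1.1 connections.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


ctx = make_client_context()
print(ctx.minimum_version, ctx.verify_mode, ctx.check_hostname)
```

The resulting context can be passed to standard-library clients, for example `urllib.request.urlopen(url, context=ctx)`, so that every migration script shares one transport policy.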

Securing Data at Rest

Data at rest refers to data that is stored on a storage medium, such as hard drives, solid-state drives, or cloud storage services. Protecting data at rest is critical to prevent unauthorized access if the storage medium is compromised or stolen.

Strategies for securing data at rest include:

- Encryption: Encryption is the most common method for securing data at rest. Data is transformed into an unreadable format, and only authorized users with the decryption key can access it. Cloud providers offer various encryption options, including server-side encryption (SSE) and client-side encryption. Server-side encryption is where the cloud provider handles the encryption. Client-side encryption is where the user encrypts the data before uploading it to the cloud.

- Access Controls and Identity and Access Management (IAM): Implementing strong access controls limits who can access data. IAM systems manage user identities and permissions, ensuring that only authorized individuals have access to sensitive data. Role-Based Access Control (RBAC) is a common approach, where users are assigned roles with specific permissions.

- Data Masking and Tokenization: Data masking replaces sensitive data with fictitious but realistic values, while tokenization replaces sensitive data with a non-sensitive token. These techniques are used to protect sensitive information while still allowing for data analysis and processing. For example, a credit card number can be masked or tokenized.

- Data Loss Prevention (DLP): DLP systems monitor data usage and prevent unauthorized data leakage. They can detect sensitive data and enforce security policies. DLP systems can be configured to prevent sensitive data from being copied, printed, or transmitted outside the organization.

- Regular Backups and Disaster Recovery: Regular backups ensure data availability in case of data loss or corruption. Disaster recovery plans outline procedures for restoring data and systems in the event of a disaster. Data backups should also be encrypted.
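The difference between masking and tokenization can be made concrete with a short sketch. Everything here is hypothetical and intentionally simplified: `mask_card` performs redaction-style masking (keeping only the last four digits) rather than substituting realistic fictitious values, and `TokenVault` is an in-memory stand-in for what would, in practice, be a hardened and access-controlled service.

```python
import secrets


def mask_card(card_number: str) -> str:
    """Replace all but the last four digits with asterisks."""
    digits = [c for c in card_number if c.isdigit()]
    return "*" * (len(digits) - 4) + "".join(digits[-4:])


class TokenVault:
    """In-memory token vault, for illustration only."""

    def __init__(self):
        self._by_token = {}
        self._by_value = {}

    def tokenize(self, value: str) -> str:
        """Return a random token that has no mathematical relation to the value."""
        if value in self._by_value:
            return self._by_value[value]
        token = "tok_" + secrets.token_hex(8)
        self._by_token[token] = value
        self._by_value[value] = token
        return token

    def detokenize(self, token: str) -> str:
        """Look up the original value; only the vault can reverse a token."""
        return self._by_token[token]


vault = TokenVault()
token = vault.tokenize("4111 1111 1111 1111")
print(mask_card("4111 1111 1111 1111"), token)
```

Masking is one-way, so it suits displays and test data, whereas tokenization stays reversible for systems that are authorized to query the vault.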

Comparison of Encryption Algorithms

Different encryption algorithms offer varying levels of security and performance characteristics. Choosing the appropriate algorithm depends on the specific security requirements and the resources available.

Here’s a comparison of common encryption algorithms:

- Advanced Encryption Standard (AES):

  - Pros: Strong security, fast performance, widely adopted, hardware-accelerated. AES is considered highly secure and is used by governments and organizations worldwide. It is also very efficient.

  - Cons: Requires a key management system to securely store and manage encryption keys.

- Rivest-Shamir-Adleman (RSA):

  - Pros: Used for public-key cryptography, supports both encryption and digital signatures, widely supported. RSA is used for secure key exchange.

  - Cons: Slower than symmetric algorithms like AES, requires larger key sizes for equivalent security.

- Triple DES (3DES):

  - Pros: Relatively strong security compared to its predecessor DES, widely supported in legacy systems.

  - Cons: Slower than AES, becoming outdated, potential vulnerabilities due to the 64-bit block size. 3DES is considered less secure than AES and is being phased out.

- Blowfish:

  - Pros: Fast and efficient, free for commercial use, good performance on 32-bit processors.

  - Cons: Not as widely adopted as AES, key schedule is complex.

Identity and Access Management (IAM) Best Practices

Effective Identity and Access Management (IAM) is paramount in cloud migration, serving as the cornerstone for securing resources and controlling user access. A well-defined IAM strategy minimizes the attack surface, enforces compliance, and streamlines operational efficiency. Neglecting IAM can lead to unauthorized access, data breaches, and significant financial and reputational damage. The following sections detail key components and best practices for implementing a robust IAM strategy within cloud environments.

Key Components of a Robust IAM Strategy for Cloud Environments

A comprehensive IAM strategy necessitates several critical components working in concert to ensure secure and controlled access to cloud resources. The following components are fundamental to a successful implementation.

- Identity Providers (IdPs): These are the entities responsible for authenticating users and providing identity information. They can be cloud-native services (e.g., AWS IAM, Azure Active Directory, Google Cloud IAM) or external systems (e.g., on-premises Active Directory, third-party identity providers). Choosing the right IdP depends on factors such as existing infrastructure, compliance requirements, and user base size. Integration between the IdP and the cloud environment is crucial for centralized identity management.

- Authentication Mechanisms: Authentication verifies a user’s identity. Strong authentication mechanisms are essential. This involves multi-factor authentication (MFA), which requires users to provide multiple forms of verification (e.g., password and a one-time code from a mobile app). Implementations should also support password policies (complexity, length, expiration), and consider biometric authentication where applicable for enhanced security.

- Authorization and Access Control: Authorization determines what a user is permitted to do after they have been authenticated. This typically involves defining roles, permissions, and policies that govern access to resources. Granular access control is vital, allowing administrators to specify precisely which actions users can perform on which resources.

- Role-Based Access Control (RBAC): RBAC simplifies access management by assigning users to roles that define their responsibilities and associated permissions. This approach reduces the complexity of managing individual user permissions and makes it easier to adapt to changes in user roles or responsibilities.

- Monitoring and Auditing: Continuous monitoring and auditing of access activities are crucial for detecting and responding to security incidents. Implement logging of all access attempts, both successful and unsuccessful, and regularly review logs for suspicious activity. Utilize security information and event management (SIEM) systems to aggregate and analyze logs from various sources.

- Privileged Access Management (PAM): PAM solutions provide secure access to privileged accounts (e.g., administrators). PAM involves controlling, monitoring, and auditing access to these accounts, including password management, session recording, and approval workflows. This helps to prevent unauthorized access and misuse of privileged credentials.
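The monitoring and auditing component can be illustrated with a minimal log-review sketch. The record layout (`user`, `action`, `success`) and the threshold of three failures are assumptions chosen for the example; real audit logs come from the cloud provider's logging service or a SIEM and carry many more fields.

```python
from collections import Counter


def flag_repeated_failures(events, threshold=3):
    """Return users whose number of failed attempts meets or exceeds the threshold."""
    failures = Counter(e["user"] for e in events if not e["success"])
    return sorted(user for user, count in failures.items() if count >= threshold)


# Hypothetical access-log records.
events = [
    {"user": "alice", "action": "login", "success": False},
    {"user": "alice", "action": "login", "success": False},
    {"user": "alice", "action": "login", "success": False},
    {"user": "bob", "action": "login", "success": False},
    {"user": "bob", "action": "login", "success": True},
]

print(flag_repeated_failures(events))
```

A rule this simple would normally be one of many correlated signals, but it shows why both successful and unsuccessful attempts need to be logged.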

Implementing the Principle of Least Privilege

The principle of least privilege (PoLP) is a fundamental security concept stating that users and processes should only have the minimum necessary access rights to perform their tasks. Implementing PoLP significantly reduces the attack surface by limiting the potential damage from compromised accounts or malicious insiders.

- Define Roles and Permissions: Carefully define roles based on job functions and responsibilities. Assign permissions to each role that are strictly necessary for the tasks associated with that role. Avoid assigning broad permissions that grant unnecessary access.

- Regularly Review Permissions: Periodically review user permissions to ensure they remain appropriate. Remove unnecessary permissions or adjust them as job roles evolve. Conduct access reviews at least annually, or more frequently in high-risk environments.

- Use Automation: Automate the process of granting and revoking access to streamline access management and ensure consistency. Use Infrastructure as Code (IaC) to define and manage IAM configurations, ensuring consistent application of PoLP across environments.

- Implement Just-in-Time (JIT) Access: JIT access grants temporary access to resources only when needed. This reduces the risk associated with long-lived privileged accounts. For example, a developer might require access to production servers only during specific maintenance windows.

- Employ Access Request Workflows: Implement workflows that require users to request access to resources and have their requests approved by the appropriate authorities. This provides an additional layer of control and accountability.
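Permission reviews are easier to run regularly when part of the check is automated. The sketch below scans a policy document for wildcard grants. The policy layout is an assumption loosely modeled on the JSON statement structure used by AWS IAM policies (`Statement`, `Effect`, `Action`, `Resource`), and `find_broad_statements` is a hypothetical helper, not a provider API.

```python
def _as_list(value):
    """Normalize a single string or a list of strings to a list."""
    return value if isinstance(value, list) else [value]


def find_broad_statements(policy):
    """Return Allow statements whose actions or resources contain a wildcard."""
    broad = []
    for stmt in policy.get("Statement", []):
        if stmt.get("Effect") != "Allow":
            continue
        actions = _as_list(stmt.get("Action", []))
        resources = _as_list(stmt.get("Resource", []))
        if any("*" in a for a in actions) or any(r == "*" for r in resources):
            broad.append(stmt)
    return broad


# Hypothetical policy: the first statement is scoped, the second is overly broad.
policy = {
    "Statement": [
        {"Effect": "Allow", "Action": "storage:GetObject", "Resource": "bucket/reports"},
        {"Effect": "Allow", "Action": "*", "Resource": "*"},
    ]
}

print(len(find_broad_statements(policy)))
```

A check like this could run in a deployment pipeline alongside Infrastructure as Code definitions, so that broad grants are flagged before they reach an environment.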

Designing a Role-Based Access Control (RBAC) Model for a Sample Cloud Application

A well-designed RBAC model simplifies access management and enhances security. This example illustrates an RBAC model for a hypothetical e-commerce application deployed in a cloud environment.

Application: E-commerce Platform

Roles:

- Administrator: Full access to all application resources and administrative functions (e.g., user management, configuration, deployments).

- Developer: Access to development environments, code repositories, and deployment pipelines.

- Operations: Access to production environments, monitoring tools, and incident response procedures.

- Customer Service Representative: Access to customer data, order management, and communication tools.

- Auditor: Read-only access to logs and audit trails for compliance purposes.

Permissions (Examples):

- Administrator: Create/modify users, manage application configuration, deploy code, access all data.

- Developer: Access code repositories (read/write), deploy code to development environments, view logs.

- Operations: Monitor production environments, restart servers, respond to incidents, access production logs.

- Customer Service Representative: View customer data, manage orders, communicate with customers.

- Auditor: Read logs, view audit trails, access reports.

Implementation Considerations:

- Cloud Provider Specifics: The specific implementation will depend on the cloud provider (e.g., AWS IAM, Azure RBAC, Google Cloud IAM). Each provider has its own set of tools and features for managing roles, permissions, and policies.

- Principle of Least Privilege: Ensure that each role only has the minimum permissions necessary to perform its duties.

- Regular Reviews: Regularly review and update the RBAC model to reflect changes in the application, user roles, and security requirements.

- Automated Provisioning: Automate the process of assigning users to roles and provisioning the necessary permissions.

Example Scenario: A developer needs to deploy a code change to the staging environment. The developer, assigned to the “Developer” role, would have the necessary permissions to access the code repository, build the code, and deploy it to the staging environment. The Operations team would have separate permissions to deploy to production. This separation of duties is critical for maintaining the integrity of the production environment.
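The role model above can be expressed as a simple lookup table. This is a sketch under stated assumptions: the permission strings (such as `deploy:staging`) are invented for the example, and a real deployment would map these roles onto the cloud provider's own IAM constructs rather than an in-process dictionary.

```python
# Hypothetical permission names for the roles described in this section.
ROLE_PERMISSIONS = {
    "administrator": {"users:manage", "config:manage", "deploy:production", "data:read"},
    "developer": {"repo:read", "repo:write", "deploy:development", "deploy:staging", "logs:read"},
    "operations": {"monitoring:read", "servers:restart", "deploy:production", "logs:read"},
    "customer_service": {"customers:read", "orders:manage"},
    "auditor": {"logs:read", "audit:read", "reports:read"},
}


def is_allowed(role: str, permission: str) -> bool:
    """Default-deny check: unknown roles and unlisted permissions are refused."""
    return permission in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("developer", "deploy:staging"))
print(is_allowed("developer", "deploy:production"))
```

Keeping production deployment out of the developer role is what enforces the separation of duties described in the example scenario.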

Network Security Configuration

Network security configuration is a critical component of a secure cloud migration strategy. It encompasses the design and implementation of network controls to protect cloud resources from unauthorized access, data breaches, and other threats. This section delves into the essential aspects of network security, focusing on firewalls, intrusion detection/prevention systems, VPNs, network segmentation, and secure VPC configuration. The goal is to provide a robust and defensible network architecture within the cloud environment.

Firewalls, Intrusion Detection/Prevention Systems (IDS/IPS), and VPNs in Cloud Environments

Cloud environments necessitate a layered approach to network security. This approach leverages a combination of technologies to protect data and resources. These technologies include firewalls, intrusion detection/prevention systems, and virtual private networks (VPNs).

Firewalls are fundamental security tools that act as a barrier between a trusted network and an untrusted network (e.g., the internet). They control network traffic based on predefined rules, allowing or denying traffic based on source and destination IP addresses, ports, and protocols. Cloud providers offer both network firewalls and web application firewalls (WAFs). Network firewalls typically operate at the network layer (Layer 3) and transport layer (Layer 4) of the OSI model, while WAFs operate at the application layer (Layer 7) and are designed to protect web applications from common attacks such as SQL injection and cross-site scripting (XSS).

Intrusion Detection Systems (IDS) monitor network traffic for suspicious activity, alerting administrators to potential security breaches. Intrusion Prevention Systems (IPS) build upon IDS capabilities by actively blocking malicious traffic based on predefined rules or signatures. Both IDS and IPS are crucial for detecting and mitigating threats in real-time. They analyze network traffic for known attack patterns and anomalies.

VPNs create secure, encrypted connections over public networks, such as the internet. They are used to establish secure communication channels between on-premises networks and cloud environments, or between cloud resources themselves. VPNs are essential for remote access, site-to-site connectivity, and securing sensitive data in transit. They provide confidentiality and integrity by encrypting data and authenticating users.

Network Segmentation

Network segmentation is the practice of dividing a network into smaller, isolated segments. This approach significantly enhances security by limiting the impact of a security breach. If one segment is compromised, the attacker’s access is restricted to that segment, preventing lateral movement to other critical areas of the network.

- Benefits of Network Segmentation:

  - Reduced Attack Surface: By isolating critical assets, segmentation reduces the area vulnerable to attack.

  - Improved Threat Containment: Segmentation limits the spread of malware and other threats.

  - Enhanced Compliance: Segmentation facilitates compliance with regulatory requirements by isolating sensitive data.

  - Simplified Security Management: Segmentation makes it easier to apply and manage security policies.

  - Increased Performance: Segmentation can improve network performance by reducing broadcast traffic and optimizing routing.

- Implementation Strategies:

  - Virtual LANs (VLANs): VLANs logically segment a network at the data link layer (Layer 2) by grouping devices into broadcast domains.

  - Subnetting: Subnetting divides a network into smaller, more manageable IP address ranges.

  - Firewalls: Firewalls are used to control traffic flow between segments, enforcing security policies.

  - Micro-segmentation: Micro-segmentation is a more granular approach that isolates individual workloads or applications.

Network segmentation can be illustrated by comparing it to a physical building. Each floor could represent a different segment, with access controlled by security personnel (firewalls) and access cards (authentication mechanisms). If one floor is breached, the attacker is contained within that floor, and other floors remain protected.

Secure Virtual Private Cloud (VPC) Configuration

A Virtual Private Cloud (VPC) provides an isolated section of the cloud provider’s network where users can launch cloud resources. Configuring a secure VPC is paramount for protecting cloud-based applications and data. This involves setting up the VPC, subnets, security groups, and network access control lists (NACLs).

The configuration process involves several steps:

- VPC Creation: Define the VPC’s CIDR block, which determines the IP address range for the VPC. Example: A VPC with CIDR 10.0.0.0/16 provides a range of 65,536 IP addresses.

- Subnet Creation: Create subnets within the VPC. Each subnet belongs to a specific availability zone and has its own CIDR block. Example: Two subnets with CIDR 10.0.1.0/24 and 10.0.2.0/24.

- Security Group Configuration: Security groups act as virtual firewalls for instances within a VPC. They control inbound and outbound traffic based on rules. Example: A security group for web servers might allow inbound traffic on port 80 (HTTP) and port 443 (HTTPS) from the internet, while allowing outbound traffic to all destinations.

- Network Access Control List (NACL) Configuration: NACLs are another layer of security that acts as a firewall at the subnet level. On AWS, for example, security groups are stateful and attached to individual instances, whereas NACLs are stateless, apply to every resource in the subnet, and can include explicit deny rules. Example: A NACL might deny all inbound traffic to a subnet except for specific ports used by authorized applications.

- Routing Configuration: Configure routing tables to direct traffic between subnets and to the internet.
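The CIDR arithmetic in the steps above can be checked with Python's standard `ipaddress` module. The `validate_subnets` helper is a hypothetical name introduced for this sketch; it confirms that each planned subnet falls inside the VPC range and that no two subnets overlap, which is worth verifying before any resources are created.

```python
import ipaddress


def validate_subnets(vpc_cidr, subnet_cidrs):
    """Check that every subnet sits inside the VPC and that no two subnets overlap."""
    vpc = ipaddress.ip_network(vpc_cidr)
    subnets = [ipaddress.ip_network(c) for c in subnet_cidrs]
    for net in subnets:
        if not net.subnet_of(vpc):
            raise ValueError(f"{net} is outside {vpc}")
    for i, a in enumerate(subnets):
        for b in subnets[i + 1:]:
            if a.overlaps(b):
                raise ValueError(f"{a} overlaps {b}")
    return subnets


vpc = ipaddress.ip_network("10.0.0.0/16")
print(vpc.num_addresses)  # 65536, the size of a /16 range

subnets = validate_subnets("10.0.0.0/16", ["10.0.1.0/24", "10.0.2.0/24"])
print([s.num_addresses for s in subnets])  # [256, 256]
```

Note that cloud providers typically reserve a few addresses in each subnet, so the usable count is slightly lower than the raw figures printed here.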

Example of a simplified secure VPC configuration:

| Component | Configuration | Description |
|---|---|---|
| VPC | CIDR: 10.0.0.0/16 | Defines the overall IP address space for the VPC. |
| Subnet 1 | CIDR: 10.0.1.0/24, Availability Zone: us-east-1a | A subnet for web servers. |
| Subnet 2 | CIDR: 10.0.2.0/24, Availability Zone: us-east-1b | A subnet for database servers. |
| Security Group (Web Servers) | Inbound: Allow HTTP (port 80), HTTPS (port 443) from 0.0.0.0/0; Outbound: Allow all. | Controls traffic to web servers. |
| Security Group (Database Servers) | Inbound: Allow MySQL (port 3306) from Web Servers SG; Outbound: Allow all. | Controls traffic to database servers. |
| NACL (Subnet 1) | Inbound: Allow HTTP (port 80), HTTPS (port 443) from 0.0.0.0/0; Outbound: Allow all. | Additional security layer for the web server subnet. |
| NACL (Subnet 2) | Inbound: Allow MySQL (port 3306) from Web Server Subnet; Outbound: Allow all. | Additional security layer for the database server subnet. |

In this example, the web servers are in one subnet, and the database servers are in another. Security groups and NACLs are configured to allow only necessary traffic between the subnets, minimizing the attack surface and enhancing security. This configuration demonstrates the implementation of the principle of least privilege.
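To make the default-deny behavior of the security groups in this example concrete, the following sketch models the two inbound rule sets and evaluates sample traffic against them. It is a simplified model, not a provider API: `InboundRule`, `SECURITY_GROUPS`, and `inbound_allowed` are hypothetical names, and outbound rules and NACLs are left out.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class InboundRule:
    port: int
    source: str  # a CIDR block, or the name of another security group


# Inbound rules taken from the example configuration in this section.
SECURITY_GROUPS = {
    "web": [InboundRule(80, "0.0.0.0/0"), InboundRule(443, "0.0.0.0/0")],
    "db": [InboundRule(3306, "web")],
}


def inbound_allowed(group, port, source_ip, source_group=None):
    """Default-deny check: traffic passes only if some inbound rule matches."""
    for rule in SECURITY_GROUPS[group]:
        if rule.port != port:
            continue
        if rule.source in SECURITY_GROUPS:
            # Rule references another security group rather than an address range.
            if rule.source == source_group:
                return True
        elif ipaddress.ip_address(source_ip) in ipaddress.ip_network(rule.source):
            return True
    return False


print(inbound_allowed("web", 443, "203.0.113.7"))
print(inbound_allowed("db", 3306, "203.0.113.7"))
print(inbound_allowed("db", 3306, "10.0.1.15", source_group="web"))
```

Referencing the web servers' security group as the source for the database rule, rather than a fixed address range, means the rule keeps working as web servers are added or replaced.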

Compliance and Governance

In cloud migration, establishing robust compliance and governance is crucial for maintaining data security, meeting regulatory requirements, and ensuring operational efficiency. Cloud environments introduce unique challenges in these areas, necessitating a proactive approach to ensure that data and systems remain protected throughout the migration process and beyond. A comprehensive strategy integrates compliance frameworks, well-defined security policies, and rigorous procedures to mitigate risks and uphold the integrity of cloud resources.

Common Cloud Security Compliance Frameworks

Organizations must navigate various compliance frameworks depending on their industry, geographic location, and the nature of the data they handle. Understanding and adhering to these frameworks are essential for avoiding penalties, maintaining customer trust, and ensuring business continuity.

- Health Insurance Portability and Accountability Act (HIPAA): Primarily applicable to healthcare providers, health plans, and their business associates in the United States, HIPAA mandates the protection of protected health information (PHI). It sets standards for the security and privacy of electronic health records. Compliance requires stringent access controls, data encryption, and audit trails.

- Payment Card Industry Data Security Standard (PCI DSS): This standard is relevant for any organization that processes, stores, or transmits credit card information. PCI DSS compliance involves implementing security controls to protect cardholder data, including firewalls, encryption, and access restrictions. Regular security assessments and vulnerability scans are also required.

- General Data Protection Regulation (GDPR): The GDPR, enacted by the European Union, governs the processing of personal data of individuals within the EU. It sets broad requirements for data protection, including obtaining consent for data processing, providing data subject rights, and implementing appropriate security measures. Failure to comply can result in significant fines.

- ISO 27001: An internationally recognized standard for information security management systems (ISMS). It provides a framework for establishing, implementing, maintaining, and continually improving an ISMS. Certification to ISO 27001 demonstrates an organization’s commitment to information security best practices.

- Federal Risk and Authorization Management Program (FedRAMP): This U.S. government program provides a standardized approach to security assessment, authorization, and continuous monitoring for cloud products and services. FedRAMP compliance is required for cloud service providers (CSPs) that serve federal agencies.

Establishing Security Policies and Procedures for Cloud Environments

Developing and implementing well-defined security policies and procedures are fundamental to a secure cloud environment. These policies should cover various aspects of cloud security, from access management and data encryption to incident response and disaster recovery. They should be regularly reviewed and updated to address evolving threats and changes in the cloud environment.

- Policy Development: Begin by creating clear and concise security policies. These policies should define the organization’s security objectives, acceptable use of cloud resources, and responsibilities of all stakeholders. Policies should be aligned with relevant compliance frameworks.

- Procedure Implementation: Develop detailed procedures to operationalize the security policies. Procedures should outline specific steps for implementing and maintaining security controls. This includes procedures for access provisioning, incident response, vulnerability management, and data backup.

- Role-Based Access Control (RBAC): Implement RBAC to grant users only the necessary access to cloud resources based on their roles and responsibilities. This minimizes the attack surface and reduces the risk of unauthorized access.

- Data Encryption: Encrypt sensitive data both in transit and at rest. Use strong encryption algorithms and manage encryption keys securely. This protects data from unauthorized access even if the cloud infrastructure is compromised.

- Monitoring and Logging: Implement comprehensive monitoring and logging to track all activities in the cloud environment. This includes logging access attempts, security events, and system changes. Regular review of logs helps detect and respond to security incidents.

- Incident Response Plan: Develop and maintain an incident response plan to address security incidents effectively. The plan should define roles, responsibilities, and procedures for identifying, containing, eradicating, and recovering from security incidents.

- Regular Audits and Assessments: Conduct regular security audits and assessments to evaluate the effectiveness of security controls and identify vulnerabilities. These audits should be performed by qualified personnel and should cover all aspects of the cloud environment.

- Training and Awareness: Provide regular security training and awareness programs to all employees and contractors. This helps to educate users about security threats and best practices, reducing the risk of human error.

Comparison of Compliance Frameworks

The following table provides a comparison of several common compliance frameworks, highlighting their scope, key requirements, and the impact they have on cloud migration.

| Framework | Scope | Key Requirements | Impact on Cloud Migration |
|---|---|---|---|
| HIPAA | Protected Health Information (PHI) | | |
| PCI DSS | Cardholder Data (CHD) | | |
| GDPR | Personal Data of EU Residents | | |
| ISO 27001 | Information Security Management Systems (ISMS) | | |
| FedRAMP | Cloud Services for U.S. Federal Agencies | | |

Incident Response and Disaster Recovery

A robust incident response and disaster recovery plan is paramount for maintaining the security and availability of cloud-based systems. Cloud environments, while offering scalability and resilience, are still susceptible to security incidents and unforeseen outages. Without proactive measures, organizations risk data loss, service disruptions, and reputational damage. A well-defined plan ensures a swift and effective response to security breaches and enables rapid restoration of critical services in the event of a disaster.

Incident Response Plan Components

An effective incident response plan outlines the procedures and responsibilities for handling security incidents. This includes clear steps for detection, containment, eradication, recovery, and post-incident activities.

- Preparation: This phase involves establishing a dedicated incident response team, defining roles and responsibilities, and ensuring the availability of necessary tools and resources. This includes conducting regular training exercises, such as tabletop exercises or simulated attacks, to familiarize the team with the plan and improve their response capabilities. For example, a company might simulate a ransomware attack to test its response plan and identify any weaknesses.

- Detection and Analysis: This stage focuses on identifying potential security incidents through continuous monitoring of security logs, network traffic, and system activity. Security Information and Event Management (SIEM) systems play a crucial role in this process, aggregating and analyzing data from various sources to detect anomalies and potential threats. Analyzing the data to determine the scope and impact of the incident is essential.

- Containment: The goal here is to isolate the affected systems or network segments to prevent further damage or spread of the incident. This may involve disconnecting compromised systems from the network, changing passwords, or implementing firewall rules. The containment strategy should balance the need to protect the environment with the need to minimize disruption to business operations.

- Eradication: This phase involves removing the cause of the incident. This could include removing malware, patching vulnerabilities, or restoring systems from backups. A thorough understanding of the root cause is essential to ensure that the incident is fully resolved and that similar incidents are prevented in the future.

- Recovery: The recovery phase focuses on restoring affected systems and services to their normal operational state. This may involve restoring data from backups, re-imaging servers, or implementing failover mechanisms. Thorough testing is necessary to ensure that the systems are functioning correctly and that data integrity is maintained.

- Post-Incident Activity: This involves analyzing the incident to identify lessons learned and to improve the incident response plan. This may include conducting a post-mortem analysis, updating security policies and procedures, and implementing additional security controls to prevent similar incidents from occurring in the future.
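
One way to keep an incident moving through these phases in order is to track them explicitly. The sketch below is illustrative only: the `Phase` and `Incident` names are assumptions, and it models the simplest case in which phases are never skipped or revisited.

```python
from enum import IntEnum


class Phase(IntEnum):
    PREPARATION = 0
    DETECTION_AND_ANALYSIS = 1
    CONTAINMENT = 2
    ERADICATION = 3
    RECOVERY = 4
    POST_INCIDENT = 5


class Incident:
    """Tracks which response phase an incident is in (hypothetical helper)."""

    def __init__(self, title: str):
        self.title = title
        # Preparation happens before any incident, so tracking starts at detection.
        self.phase = Phase.DETECTION_AND_ANALYSIS
        self.history = [self.phase]

    def advance(self) -> Phase:
        """Move to the next phase; refuse to go past post-incident activity."""
        if self.phase is Phase.POST_INCIDENT:
            raise ValueError("incident is already in its final phase")
        self.phase = Phase(self.phase + 1)
        self.history.append(self.phase)
        return self.phase
```

The recorded `history` gives the post-incident review a simple timeline to start from.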

Data Backup and Recovery Procedures

Data backup and recovery are critical components of any cloud security strategy. Regular backups ensure that data can be restored in the event of data loss or corruption, while effective recovery procedures minimize downtime and business disruption.

- Backup Strategies: Various backup strategies are available, including full backups, incremental backups, and differential backups. Full backups create a complete copy of all data, while incremental backups only back up data that has changed since the last backup. Differential backups back up all data that has changed since the last full backup. The choice of backup strategy depends on factors such as the frequency of data changes, the required recovery time objective (RTO), and the available storage capacity.

- Backup Location and Redundancy: Backups should be stored in a separate, secure location, preferably offsite. This ensures that data is protected even if the primary data center is unavailable. Cloud providers offer various options for data redundancy, such as replicating data across multiple availability zones or regions.

- Backup Testing: Regular testing of backup and recovery procedures is essential to ensure that data can be successfully restored. This involves simulating data loss scenarios and verifying that the recovery process works as expected. The frequency of testing should be determined based on the criticality of the data and the RTO.

- Data Encryption: Encrypting data at rest and in transit is crucial for protecting the confidentiality of backups. Encryption ensures that even if backups are compromised, the data remains unreadable without the appropriate decryption keys.
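
The difference between the three backup strategies comes down to which cut-off time is used when selecting changed data. The following sketch makes that concrete; the `FileInfo` type and `select_for_backup` function are hypothetical, and real backup tools usually track changes with snapshots or change journals rather than modification times.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileInfo:
    path: str
    modified_at: float  # Unix timestamp of the last modification


def select_for_backup(files, strategy, last_full_at, last_backup_at):
    """Return the paths that each strategy would copy.

    last_full_at:   time of the most recent full backup
    last_backup_at: time of the most recent backup of any kind
    """
    if strategy == "full":
        return [f.path for f in files]
    if strategy == "incremental":
        cutoff = last_backup_at  # changes since the last backup of any kind
    elif strategy == "differential":
        cutoff = last_full_at    # changes since the last full backup
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return [f.path for f in files if f.modified_at > cutoff]
```

A differential backup grows until the next full backup but needs only two sets to restore, while an incremental backup stays small but needs every set since the last full backup.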

Disaster Recovery Strategies and Implementation

Disaster recovery (DR) strategies aim to ensure business continuity in the event of a major outage or disaster. These strategies involve replicating data and applications to a secondary site or environment, enabling rapid failover and recovery. The choice of DR strategy depends on the organization’s RTO and recovery point objective (RPO).

- Recovery Time Objective (RTO): RTO defines the maximum acceptable downtime after a disaster. A lower RTO indicates a greater need for rapid recovery. For example, a mission-critical application might have an RTO of a few minutes, while a less critical application might have an RTO of several hours or even days.

- Recovery Point Objective (RPO): RPO defines the maximum acceptable data loss after a disaster. A lower RPO indicates a greater need for frequent backups and data replication. For example, a financial institution might have an RPO of a few seconds to ensure minimal data loss, while a non-critical application might have an RPO of several hours.

- Disaster Recovery Strategies:

    - Backup and Restore: This is the simplest and most cost-effective DR strategy, involving restoring data from backups. However, it typically has a higher RTO.

    - Pilot Light: This involves maintaining a minimal set of resources in the secondary site, which can be quickly scaled up in the event of a disaster. This strategy offers a faster RTO than backup and restore.

    - Warm Standby: This involves maintaining a scaled-down but fully functional copy of the production environment in the secondary site. Data is replicated to the secondary site, and the applications run at reduced capacity so they can be scaled up on failover. This provides a faster RTO than pilot light.

    - Hot Standby: This involves maintaining a fully functional, real-time environment in the secondary site. Data is replicated continuously, and applications are running. This offers the lowest RTO but is the most expensive strategy.

- Implementation Considerations:

    - Automated Failover: Automating the failover process is crucial for minimizing downtime. This can involve using DNS failover, load balancers, or other automation tools.

    - Regular Testing: Regularly testing the DR plan is essential to ensure that it works as expected. This involves simulating disaster scenarios and verifying that the failover process is successful.

    - Documentation: Maintaining comprehensive documentation of the DR plan, including procedures, roles, and responsibilities, is essential for ensuring a smooth recovery.
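
How RTO and RPO drive the choice of strategy can be sketched in a few lines. The thresholds below are purely illustrative assumptions, not industry standards, and the function names are hypothetical; actual cut-offs depend on the workload, the budget, and how quickly the secondary environment can scale.

```python
from datetime import timedelta


def suggest_dr_strategy(rto: timedelta) -> str:
    """Map a recovery time objective to a DR strategy (illustrative thresholds)."""
    if rto <= timedelta(minutes=5):
        return "hot standby"
    if rto <= timedelta(hours=1):
        return "warm standby"
    if rto <= timedelta(hours=8):
        return "pilot light"
    return "backup and restore"


def meets_rpo(backup_interval: timedelta, rpo: timedelta) -> bool:
    """Worst-case data loss is the gap between two backups or replication points."""
    return backup_interval <= rpo
```

For example, nightly backups cannot satisfy a four-hour RPO, because up to a full day of changes could be lost between two backups.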

Monitoring and Logging

Effective monitoring and logging are cornerstones of robust cloud security, providing critical visibility into system behavior and enabling proactive threat detection and incident response. These practices are not merely administrative overhead; they are essential for maintaining the confidentiality, integrity, and availability of cloud-based resources. Comprehensive monitoring and logging strategies allow organizations to identify and mitigate security threats, optimize resource utilization, and ensure compliance with regulatory requirements.

Significance of Comprehensive Monitoring and Logging

The dynamic and distributed nature of cloud environments necessitates a proactive and comprehensive approach to monitoring and logging. Without adequate visibility, organizations are essentially operating blind, unable to detect or respond to security incidents effectively. The importance of these practices stems from several key factors:

- Real-time Threat Detection: Monitoring tools analyze real-time data streams, identifying suspicious activities such as unauthorized access attempts, unusual network traffic patterns, or anomalous user behavior.

- Incident Response and Forensics: Detailed logs provide invaluable data for incident investigation, enabling security teams to understand the scope and impact of security breaches, identify the root cause, and take appropriate remediation steps.

- Compliance and Auditing: Comprehensive logging facilitates compliance with regulatory requirements, such as GDPR, HIPAA, and PCI DSS, by providing an audit trail of system activities and demonstrating adherence to security policies.

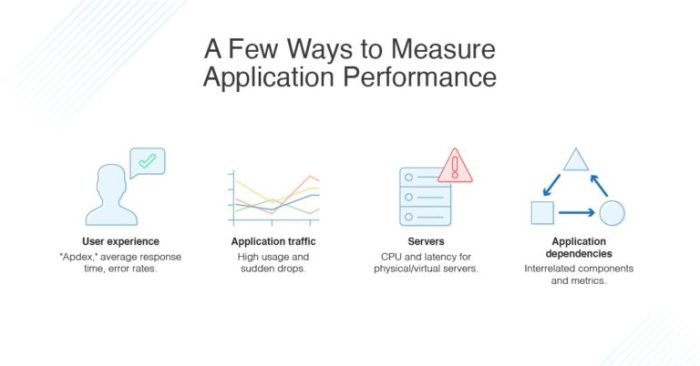

- Performance Optimization: Monitoring tools provide insights into system performance, enabling organizations to identify bottlenecks, optimize resource allocation, and improve overall application performance.

- Security Posture Improvement: By analyzing historical log data, organizations can identify trends, assess the effectiveness of security controls, and proactively improve their security posture.

Designing a Monitoring System for Security Breaches

Designing a robust monitoring system involves careful consideration of data sources, alerting mechanisms, and response strategies. The goal is to create a system that proactively identifies and alerts on unusual activities or potential security breaches, enabling timely intervention and mitigation. A well-designed monitoring system typically includes the following components:

- Data Sources: The monitoring system should collect data from various sources, including:

    - Virtual Machine (VM) Logs: System logs, application logs, and security logs generated by individual VMs.

    - Network Logs: Network traffic logs, firewall logs, and intrusion detection/prevention system (IDS/IPS) logs.

    - Cloud Provider Logs: Logs generated by the cloud provider, such as AWS CloudTrail, Azure Activity Log, and Google Cloud Audit Logs, providing information on API calls, resource creation/deletion, and user activity.

    - Application Logs: Logs generated by applications, including authentication attempts, error messages, and user activity.

- Centralized Logging: All logs should be centralized in a secure and scalable logging platform, such as the ELK stack (Elasticsearch, Logstash, Kibana), Splunk, or cloud-native logging services. This facilitates aggregation, analysis, and correlation of data from different sources.

- Alerting Rules: Define clear and specific alerting rules based on known threats, security policies, and industry best practices. Alerting rules should trigger notifications based on predefined thresholds or patterns. For example:

    - Failed Login Attempts: Alert when a user fails to log in multiple times within a short period, which could indicate a brute-force attack.

    - Unusual Network Traffic: Alert when there is a significant increase in network traffic to or from a specific IP address or port, which could indicate a denial-of-service (DoS) attack or data exfiltration.

    - Unauthorized API Calls: Alert when a user or application makes an API call that is not authorized or that deviates from normal behavior.

    - Resource Changes: Alert when resources are created, modified, or deleted in an unusual manner, such as the creation of a new virtual machine in a specific region.

- Alerting Mechanisms: Configure alerting mechanisms to notify security teams promptly when alerts are triggered. This could include email notifications, SMS messages, or integration with incident response platforms.

- Automated Response: Implement automated response actions for specific alerts, such as blocking malicious IP addresses, isolating compromised VMs, or disabling compromised user accounts.

- Dashboard and Reporting: Create dashboards and reports to visualize security events, track key metrics, and provide insights into security trends.
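
As an illustration of a threshold-based alerting rule, the failed-login rule described above can be sketched as a sliding-window counter. The class name, the default threshold of five failures, and the 60-second window are assumptions for the example; it also assumes events arrive in timestamp order.

```python
from collections import defaultdict, deque


class FailedLoginDetector:
    """Flags a user once `threshold` failures fall inside a sliding time window."""

    def __init__(self, threshold: int = 5, window_seconds: float = 60.0):
        self.threshold = threshold
        self.window_seconds = window_seconds
        self._failures = defaultdict(deque)  # user -> timestamps of recent failures

    def record_failure(self, user: str, timestamp: float) -> bool:
        """Record one failed login; return True if an alert should fire."""
        recent = self._failures[user]
        recent.append(timestamp)
        # Drop failures that have aged out of the window.
        while recent and timestamp - recent[0] > self.window_seconds:
            recent.popleft()
        return len(recent) >= self.threshold
```

In practice a rule like this would usually be expressed in the SIEM or logging platform's own query language; the sketch only shows the logic such a rule encodes.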

Example of Log Analysis and Threat Identification

Log analysis plays a crucial role in identifying and responding to security threats. By examining log data, security teams can uncover malicious activities, understand attack vectors, and mitigate potential damage. Consider the following example:

Scenario: A security team detects a spike in network traffic to an unusual external IP address. The team investigates using log analysis.

Analysis Steps:

- Log Aggregation: The team collects relevant logs from various sources, including firewall logs, network traffic logs, and web server access logs.

- Filtering and Correlation: The team filters the logs to focus on traffic related to the suspicious IP address. They correlate events across different log sources to identify patterns and relationships.

- Pattern Identification: The team identifies a pattern of activity:

    - Multiple requests originating from a compromised internal server to the external IP address.

    - The requests involve attempts to upload malicious files to the external IP address.

- Threat Assessment: Based on the analysis, the team determines that the internal server has likely been compromised and is attempting to exfiltrate data or establish a command-and-control channel.

- Remediation: The team takes immediate action to contain the threat:

    - Isolating the compromised server from the network.

    - Identifying and removing malicious files.

    - Investigating the root cause of the compromise to prevent future incidents.
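
The filtering and correlation step in this scenario can be sketched as follows. The event structure (dictionaries with `src`, `dst`, and `action` keys) and the function name are hypothetical; real logs would first need to be parsed into a common schema.

```python
from collections import Counter


def correlate_by_destination(log_sources, suspicious_ip):
    """Count events per internal source host that target the suspicious IP.

    log_sources maps a source name (for example "firewall") to a list of
    event dicts with hypothetical keys "src", "dst", and "action".
    """
    hits = Counter()
    matched = []
    for source_name, events in log_sources.items():
        for event in events:
            if event.get("dst") == suspicious_ip:
                hits[event.get("src", "unknown")] += 1
                # Remember which log each matching event came from.
                matched.append({**event, "log": source_name})
    return hits, matched
```

A single internal host dominating the counts across several log sources is the kind of pattern that points the team toward the compromised server.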

Example: In 2020, a major cloud provider experienced a significant data breach due to a misconfigured storage bucket. The attackers were able to access sensitive data stored in the bucket. Detailed log analysis, including API call logs and access logs, revealed the unauthorized access patterns, allowing the cloud provider to identify the vulnerability and implement necessary remediation steps. The incident underscored the importance of meticulous logging and proactive analysis in detecting and responding to security threats in cloud environments.

Vulnerability Management and Patching

Effectively managing vulnerabilities and implementing a robust patching strategy are critical for maintaining the security posture of cloud environments. These practices proactively mitigate risks associated with known vulnerabilities, reducing the attack surface and protecting against potential exploits. Neglecting these areas can expose cloud resources to significant threats, including data breaches, service disruptions, and compliance violations.

Vulnerability Scanning and Penetration Testing in the Cloud

Vulnerability scanning and penetration testing are integral components of a comprehensive security strategy in cloud environments. They provide a proactive approach to identifying and addressing security weaknesses before they can be exploited by malicious actors.

Vulnerability scanning involves the automated assessment of systems and applications for known vulnerabilities. The process typically includes:

- Automated Scanning: Utilizing vulnerability scanners to identify weaknesses based on a database of known vulnerabilities. These scanners check for misconfigurations, outdated software, missing patches, and other potential security flaws. Tools like Nessus, OpenVAS, and Amazon Inspector are commonly used for this purpose.

- Configuration Review: Evaluating the configuration of cloud services and resources against security best practices. This includes verifying settings related to access control, encryption, and network segmentation.

- Reporting and Analysis: Generating detailed reports that highlight identified vulnerabilities, their severity levels, and recommended remediation steps. Analysis helps prioritize remediation efforts based on risk.

Penetration testing, on the other hand, simulates real-world attacks to assess the effectiveness of security controls. This process involves:

- Information Gathering: Collecting information about the target system, including its infrastructure, services, and potential vulnerabilities.

- Vulnerability Exploitation: Attempting to exploit identified vulnerabilities to gain unauthorized access or compromise the system. This may involve techniques such as exploiting software flaws, misconfigurations, or weak credentials.

- Post-Exploitation: After successful exploitation, the penetration tester attempts to maintain access, escalate privileges, and gather sensitive information to simulate the actions of a real attacker.

- Reporting and Remediation: Documenting the findings, including the vulnerabilities exploited, the impact of the attacks, and recommendations for remediation.

The frequency of vulnerability scanning and penetration testing should be determined based on the sensitivity of the data, the complexity of the cloud environment, and regulatory requirements. Regular scanning, coupled with periodic penetration testing, provides a proactive and ongoing approach to identifying and mitigating security risks.

Patching Strategy for Cloud-Based Systems and Applications

Implementing a well-defined patching strategy is essential for mitigating vulnerabilities and maintaining the security of cloud-based systems and applications. This strategy should encompass the following key elements:

- Patch Management Policy: A documented policy outlining the organization’s approach to patching, including roles and responsibilities, patch prioritization, and timelines for deployment. This policy should align with the organization’s risk tolerance and compliance requirements.

- Patch Identification and Assessment: Establishing a process for identifying and assessing available patches. This involves monitoring vendor announcements, security advisories, and industry news for new vulnerabilities and patch releases. Prioritization should be based on the severity of the vulnerability, the criticality of the affected system, and the potential impact of an exploit.

- Patch Testing and Deployment: Implementing a testing process to ensure patches do not disrupt critical services or introduce new vulnerabilities. This typically involves testing patches in a non-production environment before deploying them to production systems. The deployment process should be automated as much as possible to ensure consistency and reduce the risk of human error.

- Automation and Orchestration: Leveraging automation tools to streamline the patching process. This includes automating patch deployment, validation, and rollback procedures. Cloud providers offer various services for automating patching, such as AWS Systems Manager, Azure Update Management, and Google Cloud OS Patch Management.

- Rollback Plan: Creating a rollback plan in case a patch causes unexpected issues. This plan should include steps to quickly revert to the previous version of the software and minimize the impact of the failure.

The frequency of patching depends on the nature of the system and the severity of the vulnerabilities. Critical security patches should be deployed as quickly as possible, often within days or even hours of release. Other patches can be deployed on a more regular schedule, such as monthly or quarterly.
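
Patch prioritization based on severity, system criticality, and exploit potential can be sketched as a simple scoring function. The `Patch` fields, the 1 to 3 criticality scale, and the 1.5 multiplier for known exploits are illustrative assumptions rather than an established scoring standard.

```python
from dataclasses import dataclass


@dataclass
class Patch:
    patch_id: str
    cvss: float             # vulnerability severity, 0.0 to 10.0
    asset_criticality: int  # hypothetical scale: 1 (low) to 3 (mission-critical)
    exploit_known: bool     # True if exploitation in the wild has been reported


def priority_score(patch: Patch) -> float:
    """Combine severity and asset criticality; boost actively exploited flaws."""
    score = patch.cvss * patch.asset_criticality
    if patch.exploit_known:
        score *= 1.5  # illustrative weighting, not a standard
    return score


def prioritize(patches):
    """Return patches ordered from most to least urgent."""
    return sorted(patches, key=priority_score, reverse=True)
```

Under this weighting, a moderately severe flaw on a mission-critical system with a known exploit can outrank a more severe flaw on a low-value system.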

Remediating Common Vulnerabilities Identified in Cloud Environments

Remediating vulnerabilities is a crucial step in strengthening the security posture of cloud environments. The specific remediation steps will vary depending on the nature of the vulnerability, but some common vulnerabilities and their remediation strategies include:

- Unpatched Software: Vulnerabilities often arise from outdated software with known flaws.

    - Remediation: Implement a robust patching strategy, including automated patch deployment, and regularly update all systems and applications.

- Misconfigured Security Groups/Network ACLs: Inadequate network security controls can expose cloud resources.

    - Remediation: Review and tighten security group and network ACL configurations to restrict access to only necessary ports and protocols. Implement the principle of least privilege.

- Weak or Default Credentials: Weak or default credentials provide easy entry points for attackers.

    - Remediation: Enforce strong password policies, require multi-factor authentication (MFA), and regularly review and rotate credentials.

- Insecure Storage Configuration: Incorrectly configured storage can lead to data exposure.

    - Remediation: Configure storage buckets and other storage services with appropriate access controls, encryption, and versioning. Regularly audit storage configurations for misconfigurations.

- Lack of Encryption: Data transmitted without encryption is vulnerable to interception.

    - Remediation: Enable encryption in transit (e.g., TLS/SSL) and at rest (e.g., using KMS or similar services) for all sensitive data.
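
As an example of auditing one of these misconfigurations, the sketch below flags security group rules that expose sensitive ports to the entire internet. The rule format (dictionaries with `group`, `cidr`, `from_port`, and `to_port` keys) and the list of sensitive ports are assumptions for illustration; in practice the rules would be exported from the cloud provider's API.

```python
SENSITIVE_PORTS = {22, 3389, 3306, 5432}  # SSH, RDP, MySQL, PostgreSQL
OPEN_CIDRS = {"0.0.0.0/0", "::/0"}        # "anywhere" for IPv4 and IPv6


def find_open_rules(rules):
    """Return rules that expose a sensitive port to the whole internet.

    Each rule is a dict with hypothetical keys "group", "cidr",
    "from_port", and "to_port" (inclusive port range).
    """
    findings = []
    for rule in rules:
        if rule["cidr"] not in OPEN_CIDRS:
            continue
        exposed = sorted(
            port for port in SENSITIVE_PORTS
            if rule["from_port"] <= port <= rule["to_port"]
        )
        if exposed:
            findings.append(
                {"group": rule["group"], "cidr": rule["cidr"], "ports": exposed}
            )
    return findings
```

A rule that opens HTTPS (port 443) to the internet is not flagged, while one that opens the full port range is reported with every sensitive port it covers.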

Regularly reviewing and addressing identified vulnerabilities, combined with a proactive patching strategy, significantly reduces the risk of successful attacks and helps maintain a strong security posture in the cloud. This approach protects against data breaches, service disruptions, and compliance violations.

Security Awareness and Training

Security awareness and training are critical components of a robust cloud security posture. The shared responsibility model inherent in cloud computing places a significant portion of security responsibility on the cloud user. Educating users on security best practices, common threats, and their role in protecting data and resources is paramount to minimizing risks and maintaining a secure cloud environment. Without adequate training, even the most sophisticated technical controls can be circumvented by human error.

Importance of Security Awareness Training for Cloud Users

Effective security awareness training empowers users to become the first line of defense against cyber threats. Cloud environments, due to their accessibility and distributed nature, are particularly vulnerable to attacks targeting human weaknesses. Users who are not adequately trained are more likely to fall victim to phishing, social engineering, and other attacks that can compromise cloud resources.

- Reduced Risk of Data Breaches: Properly trained users are better equipped to identify and avoid phishing emails, malicious links, and other attack vectors that could lead to data breaches. Data breaches can result in significant financial losses, reputational damage, and legal consequences.

- Improved Compliance: Many regulatory frameworks, such as HIPAA, GDPR, and PCI DSS, mandate security awareness training for employees who handle sensitive data. Providing this training helps organizations meet compliance requirements and avoid penalties.

- Enhanced Security Culture: Consistent security awareness training fosters a culture of security within an organization. This culture encourages employees to be vigilant about security, report suspicious activities, and follow security best practices.

- Protection of Cloud Resources: By understanding the threats they face and the security measures in place, users can help protect cloud resources from unauthorized access, data loss, and other security incidents.

- Cost Savings: Preventing security incidents through proactive training is far more cost-effective than responding to and recovering from breaches. The costs associated with data breaches, including investigation, remediation, legal fees, and lost business, can be substantial.

Best Practices for Educating Users on Cloud Security Threats

Effective security awareness training should be comprehensive, engaging, and tailored to the specific threats and risks faced by an organization. Training should be regularly updated to reflect the evolving threat landscape and changes in cloud environments.

- Comprehensive Curriculum: The training program should cover a wide range of topics, including:

    - Phishing and social engineering attacks.

    - Password security and multi-factor authentication (MFA).

    - Malware and ransomware threats.

    - Data privacy and data loss prevention (DLP).

    - Cloud-specific threats, such as misconfigured storage buckets and compromised API keys.

    - Incident reporting procedures.

- Engaging Content: Use interactive training modules, videos, quizzes, and real-world examples to keep users engaged and reinforce key concepts. Avoid dry, text-heavy presentations.

- Regular Training: Conduct training sessions at least annually, and consider more frequent training for high-risk roles or departments. Reinforce training with regular phishing simulations and security reminders.

- Role-Based Training: Tailor training content to the specific roles and responsibilities of users. For example, administrators require more in-depth training on cloud security controls than general users.

- Phishing Simulations: Regularly conduct phishing simulations to test user awareness and identify areas for improvement. Provide feedback and training to users who fail the simulations.

- Policy Acknowledgement: Require users to acknowledge their understanding of security policies and procedures. This can be done through quizzes, sign-off forms, or other methods.

- Cloud-Specific Training: Ensure the training covers the specific cloud platforms and services used by the organization, including AWS, Azure, and Google Cloud.

- Metrics and Evaluation: Track training completion rates, phishing simulation results, and other metrics to measure the effectiveness of the training program. Use this data to identify areas for improvement and refine the training content.
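
The phishing simulation metrics mentioned above can be computed with a few lines of code. This is a minimal sketch; the result format (one dictionary per recipient with `clicked` and `reported` flags) is an assumption, since simulation platforms export results in their own formats.

```python
def simulation_metrics(results):
    """Summarize one phishing simulation.

    results: list of dicts with hypothetical boolean keys "clicked" and "reported".
    """
    total = len(results)
    if total == 0:
        return {"click_rate": 0.0, "report_rate": 0.0}
    clicked = sum(1 for r in results if r["clicked"])
    reported = sum(1 for r in results if r["reported"])
    return {"click_rate": clicked / total, "report_rate": reported / total}
```

Tracking both rates over successive simulations shows whether training reduces clicks and also whether it increases reporting.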

Sample Training Module on Phishing and Social Engineering Attacks

This module provides an overview of phishing and social engineering attacks, including how they work, how to identify them, and how to avoid becoming a victim.

Module Objectives:

- Define phishing and social engineering.

- Identify common phishing techniques.

- Recognize the signs of a phishing email or website.

- Understand the importance of strong passwords and MFA.

- Learn how to report phishing attempts.

Module Content:

Introduction to Phishing and Social Engineering:

Phishing and social engineering are tactics used by attackers to trick individuals into revealing sensitive information, such as usernames, passwords, credit card details, or other personal data. Attackers often impersonate trusted entities, such as banks, tech companies, or colleagues, to gain the victim’s trust.

Phishing Techniques:

- Email Phishing: Attackers send deceptive emails that appear to be from legitimate sources. These emails often contain malicious links or attachments.

- Spear Phishing: Targeted phishing attacks that focus on specific individuals or groups. Attackers gather information about their targets to make the attacks more convincing.

- Whaling: Spear phishing attacks that target high-profile individuals, such as executives or celebrities.

- Smishing (SMS Phishing): Phishing attacks conducted via text messages.

- Vishing (Voice Phishing): Phishing attacks conducted via phone calls.

Recognizing Phishing Attempts:

- Suspicious Sender: Be wary of emails from unknown or unexpected senders. Check the sender’s email address carefully.

- Urgency and Threats: Phishing emails often create a sense of urgency or threaten negative consequences if the recipient doesn’t take immediate action.

- Poor Grammar and Spelling: Phishing emails may contain grammatical errors, spelling mistakes, and awkward phrasing.

- Generic Greetings: Phishing emails often use generic greetings, such as “Dear Customer” or “Dear Sir/Madam.”

- Suspicious Links and Attachments: Hover over links before clicking them to see where they lead. Do not open attachments from unknown senders.

- Requests for Personal Information: Legitimate organizations rarely ask for sensitive information, such as passwords or credit card details, via email.

Protecting Yourself from Phishing:

- Be Skeptical: Always be skeptical of unsolicited emails, text messages, and phone calls.

- Verify Requests: If you receive a suspicious request, contact the organization directly using a verified phone number or website.

- Use Strong Passwords: Create strong, unique passwords for all your accounts.

- Enable Multi-Factor Authentication (MFA): Use MFA whenever possible to add an extra layer of security.

- Keep Software Updated: Regularly update your operating system, web browser, and other software to patch security vulnerabilities.

- Report Phishing Attempts: Report suspicious emails and websites to your IT department or security team.

Reporting Phishing Attempts:

If you suspect you have received a phishing email or encountered a phishing website, report it to your IT department or security team immediately. They can investigate the attack and take steps to protect other users.

Quiz/Assessment:

Include a quiz or assessment to test user understanding of the material. The quiz should include questions that test the user’s ability to identify phishing attempts and take appropriate action.

Example Scenario:

Imagine you receive an email that appears to be from your bank. The email states that your account has been compromised and asks you to click on a link to reset your password. What should you do?

Answer: Do not click on the link. Instead, go directly to your bank’s website by typing the address in your browser or use a bookmarked link. Contact your bank’s customer service to verify the email’s authenticity.

Cloud-Specific Security Services

Cloud providers offer a suite of security services designed to enhance the security posture of migrated workloads. These services automate security tasks, provide centralized visibility, and offer advanced threat detection capabilities. Leveraging these services is crucial for maintaining a robust security posture in the cloud environment.

Identifying Cloud Provider Security Services

Cloud providers offer various security services that address different aspects of security, including security posture management, threat detection, incident response, and compliance. Understanding these services and their capabilities is essential for designing and implementing a comprehensive security strategy.

- AWS Security Hub: A centralized security service that provides a comprehensive view of an organization’s security posture across its AWS accounts. It aggregates security findings from various AWS services and third-party tools, enabling security teams to prioritize and remediate security issues.

- Azure Security Center (since renamed Microsoft Defender for Cloud): A unified security management platform for Azure resources. It provides security recommendations, threat protection, and vulnerability management. Azure Security Center helps organizations improve their security posture by identifying and addressing security gaps.

- Google Cloud Security Command Center (SCC): A centralized security and risk management platform for Google Cloud. It offers visibility into data, threats, and vulnerabilities. SCC helps organizations to identify, analyze, and respond to security threats across their Google Cloud environment.

Leveraging Cloud-Specific Security Services for Enhanced Security

These cloud-specific services provide several advantages for enhanced security, including automated security assessments, centralized security management, and integrated threat detection. Effective utilization of these services can significantly improve an organization’s ability to protect its cloud resources.

- Automated Security Assessments: Services like AWS Security Hub, Azure Security Center, and Google Cloud Security Command Center automate security assessments, continuously monitoring cloud resources for vulnerabilities and misconfigurations. They provide regular reports and recommendations for improving the security posture. For instance, Azure Security Center can automatically identify and recommend remediation for misconfigured network security groups, which helps to prevent unauthorized access to resources.

- Centralized Security Management: These services offer a centralized platform for managing security across multiple cloud accounts and services. This centralized view simplifies security operations, allowing security teams to monitor, analyze, and respond to security events more efficiently. AWS Security Hub, for example, allows organizations to view and manage security findings from various AWS services in a single dashboard.

- Integrated Threat Detection: Cloud providers integrate threat detection capabilities into their security services. These services analyze logs and other telemetry data to identify suspicious activities and potential threats. Google Cloud Security Command Center, for instance, integrates with Chronicle Security, providing advanced threat detection capabilities based on threat intelligence and behavioral analysis.

- Compliance and Governance: Many cloud security services also support compliance and governance initiatives. They provide tools and features to help organizations meet regulatory requirements and maintain a secure cloud environment. For example, Azure Security Center offers built-in compliance assessments for various industry standards, such as PCI DSS and HIPAA.
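The misconfigured network security group example above can be illustrated with a minimal sketch of the kind of check such a service automates. The rule format (dictionaries with `direction`, `access`, `port`, and `source` keys) and the list of sensitive ports are assumptions made for illustration; they are not any provider's actual schema or API.

```python
import ipaddress

# Hypothetical set of ports that should not be reachable from the whole internet.
SENSITIVE_PORTS = {22, 3389, 1433, 3306}


def is_open_to_world(source: str) -> bool:
    """Return True if the CIDR block covers every address (0.0.0.0/0 or ::/0)."""
    return ipaddress.ip_network(source, strict=False).prefixlen == 0


def find_risky_rules(rules):
    """Return inbound allow rules that expose a sensitive port to any address."""
    return [
        rule
        for rule in rules
        if rule["direction"] == "inbound"
        and rule["access"] == "allow"
        and rule["port"] in SENSITIVE_PORTS
        and is_open_to_world(rule["source"])
    ]


# Illustrative rules only; the names and address ranges are made up.
rules = [
    {"name": "ssh-anywhere", "direction": "inbound", "access": "allow",
     "port": 22, "source": "0.0.0.0/0"},
    {"name": "ssh-office", "direction": "inbound", "access": "allow",
     "port": 22, "source": "203.0.113.0/24"},
    {"name": "https-anywhere", "direction": "inbound", "access": "allow",
     "port": 443, "source": "0.0.0.0/0"},
]

risky = find_risky_rules(rules)
print([rule["name"] for rule in risky])
```

A managed service evaluates far more conditions than this, but the principle is the same: compare each rule against a policy and report the ones that violate it.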

Integrating Cloud-Specific Security Services into a Comprehensive Security Strategy

Integrating cloud-specific security services requires careful planning and execution. A well-defined strategy should consider the specific security needs of the organization and the capabilities of the cloud provider’s security services. Effective integration ensures that these services work together to provide a robust and comprehensive security posture.

- Establish a Baseline Security Posture: Define a baseline security posture that outlines the organization’s security requirements and goals. This baseline should include security policies, standards, and best practices, and it serves as the foundation for implementing and configuring cloud-specific security services.

- Configure and Customize Security Services: Configure the cloud provider’s security services to align with the established baseline security posture. This involves setting up security alerts, defining security rules, and integrating with other security tools. For instance, in AWS Security Hub, you can enable or disable individual controls and define custom insights so that the reported findings match the specific security requirements of your organization.

- Implement Security Automation: Automate security tasks using the cloud provider’s automation tools. This includes automating security assessments, incident response, and vulnerability remediation. Automating security tasks reduces manual effort and improves the speed and efficiency of security operations. For example, you can use AWS Lambda functions to automatically remediate security findings identified by AWS Security Hub.

- Monitor and Analyze Security Data: Continuously monitor security data from cloud-specific security services and other security tools. Analyze the data to identify security threats, vulnerabilities, and trends. Use the insights gained from data analysis to improve the security posture and refine the security strategy.

- Regularly Review and Update the Security Strategy: Regularly review and update the security strategy to adapt to changes in the cloud environment, emerging threats, and evolving security requirements. This includes reviewing the configuration of cloud-specific security services and making necessary adjustments.
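The automation step above, in which a Lambda function reacts to Security Hub findings, might be sketched as follows. This is a simplified illustration, not a production remediation: it assumes the function is triggered by an event whose `detail.findings` list holds findings with `Severity.Label` and `Resources` fields, and the `REMEDIATIONS` mapping and action names are hypothetical. The sketch only plans actions; a real function would call the provider SDK to carry them out.

```python
# Hypothetical mapping from resource type to the name of a remediation action.
REMEDIATIONS = {
    "AwsEc2SecurityGroup": "revoke_open_ingress",
    "AwsS3Bucket": "block_public_access",
}

# Assumption: only findings at these severity levels are remediated automatically.
AUTO_REMEDIATE_SEVERITIES = {"HIGH", "CRITICAL"}


def plan_remediations(event):
    """Turn a findings event into a list of planned remediation actions."""
    plans = []
    for finding in event.get("detail", {}).get("findings", []):
        severity = finding.get("Severity", {}).get("Label", "")
        if severity not in AUTO_REMEDIATE_SEVERITIES:
            continue  # lower-severity findings are left for manual review
        for resource in finding.get("Resources", []):
            action = REMEDIATIONS.get(resource.get("Type"))
            if action:
                plans.append({
                    "finding_id": finding.get("Id"),
                    "resource_id": resource.get("Id"),
                    "action": action,
                })
    return plans


def lambda_handler(event, context):
    """Entry point; reports what would be remediated without changing anything."""
    return {"planned": plan_remediations(event)}


# Illustrative event with made-up identifiers.
sample_event = {
    "detail": {
        "findings": [
            {"Id": "finding-1", "Severity": {"Label": "HIGH"},
             "Resources": [{"Type": "AwsEc2SecurityGroup", "Id": "sg-example"}]},
            {"Id": "finding-2", "Severity": {"Label": "LOW"},
             "Resources": [{"Type": "AwsS3Bucket", "Id": "bucket-example"}]},
        ]
    }
}

result = lambda_handler(sample_event, None)
print(result)
```

Keeping the planning logic separate from the handler makes it straightforward to test the decision rules before granting the function permission to modify resources.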

Outcome Summary

In conclusion, securing a cloud migration requires a multi-faceted approach. From pre-migration planning to ongoing monitoring and incident response, each step is crucial. By implementing the security best practices for cloud migration outlined in this document, organizations can confidently embrace the cloud’s potential while minimizing their exposure to security threats, ensuring data integrity, and maintaining compliance.

FAQ Insights

What is the most critical step in securing a cloud migration?

A thorough security assessment conducted before migration is the most critical step. This assessment helps identify vulnerabilities, classify sensitive data, and establish a baseline for security controls.

How does the principle of least privilege apply to cloud environments?

The principle of least privilege dictates that users and applications should only be granted the minimum necessary access to perform their tasks. In cloud environments, this translates to carefully defining roles and permissions to limit potential damage from compromised accounts.
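As a rough illustration of reviewing permissions against this principle, the sketch below flags statements in an IAM-style JSON policy that allow wildcard actions or all resources. The policy content is made up, and the check is a simple heuristic for prompting review rather than a complete policy analyzer; some actions legitimately require a `*` resource.

```python
def as_list(value):
    """Policy fields may hold a single value or a list; normalize to a list."""
    return value if isinstance(value, list) else [value]


def overly_broad_statements(policy):
    """Return Allow statements that use wildcard actions or a '*' resource."""
    broad = []
    for statement in as_list(policy.get("Statement", [])):
        if statement.get("Effect") != "Allow":
            continue
        actions = as_list(statement.get("Action", []))
        resources = as_list(statement.get("Resource", []))
        wildcard_action = any(a == "*" or a.endswith(":*") for a in actions)
        if wildcard_action or "*" in resources:
            broad.append(statement)
    return broad


# Illustrative policy; the bucket name and statement IDs are hypothetical.
policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Sid": "ReadOneBucket", "Effect": "Allow",
         "Action": "s3:GetObject",
         "Resource": "arn:aws:s3:::example-bucket/*"},
        {"Sid": "TooBroad", "Effect": "Allow",
         "Action": "s3:*", "Resource": "*"},
    ],
}

flagged = overly_broad_statements(policy)
print([statement["Sid"] for statement in flagged])
```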

What is the difference between RTO and RPO in disaster recovery?

RTO (Recovery Time Objective) is the maximum acceptable downtime after a disaster, while RPO (Recovery Point Objective) is the maximum acceptable data loss measured in time. Both are critical considerations in disaster recovery planning.
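A small worked example may make the distinction concrete. The sketch below assumes the worst case for data loss is a failure just before the next scheduled backup, so the potential loss equals the backup interval; this simplification ignores how long a backup takes to complete. The intervals and objectives are illustrative values, not recommendations.

```python
from datetime import timedelta


def meets_rpo(backup_interval: timedelta, rpo: timedelta) -> bool:
    """Worst-case data loss is one full backup interval; compare it to the RPO."""
    return backup_interval <= rpo


def meets_rto(estimated_restore_time: timedelta, rto: timedelta) -> bool:
    """The time needed to restore service must not exceed the RTO."""
    return estimated_restore_time <= rto


# Illustrative objectives and measurements.
rpo = timedelta(hours=1)
rto = timedelta(hours=2)
backup_interval = timedelta(hours=4)
estimated_restore_time = timedelta(minutes=90)

rpo_ok = meets_rpo(backup_interval, rpo)
rto_ok = meets_rto(estimated_restore_time, rto)
print(f"RPO met: {rpo_ok}, RTO met: {rto_ok}")
```

In this example the restore time fits within the RTO, but backups every four hours cannot satisfy a one-hour RPO, so the backup frequency would need to increase.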

What are the benefits of network segmentation in the cloud?

Network segmentation isolates different parts of your cloud environment, limiting the impact of a security breach. If one segment is compromised, the attacker’s access is restricted, preventing lateral movement and protecting sensitive data.