Data migration, a critical undertaking for any organization undergoing system upgrades, consolidation, or cloud adoption, demands a structured approach. The cornerstone of a successful data migration strategy is a well-defined data migration plan template. This document serves as a blueprint, guiding the entire process from initial planning to post-migration activities, ensuring data integrity, minimizing downtime, and mitigating potential risks. This detailed analysis will delve into the core components, methodologies, and best practices associated with these indispensable templates.

This exploration will systematically dissect the elements of a data migration plan template, highlighting the significance of each component. We’ll examine the essential pre-migration planning activities, including data profiling, cleansing, and tool selection. Furthermore, we’ll explore data mapping and transformation techniques, illustrating how to document these processes effectively. The execution and testing phases will be scrutinized, emphasizing the importance of validation, rollback strategies, and quality assurance.

Post-migration considerations, template customization, and illustrative examples will further enhance understanding, alongside discussions on future trends and advanced topics like cloud migration.

Understanding Data Migration Plan Templates

Data migration plan templates serve as structured frameworks for the complex process of transferring data from one system or storage location to another. They provide a standardized approach, ensuring consistency and minimizing the potential for errors and disruptions. These templates are crucial for managing the multifaceted challenges inherent in data migration projects, encompassing aspects such as data mapping, transformation, validation, and reconciliation.

Core Purpose of a Data Migration Plan Template

The primary function of a data migration plan template is to guide the migration process, ensuring a systematic and controlled transfer of data. It outlines the scope, objectives, and procedures, providing a roadmap for the entire project lifecycle. This roadmap encompasses pre-migration activities, the migration itself, and post-migration validation and optimization.

The template aims to achieve the following objectives:

- Define the Scope: Clearly delineates the data to be migrated, the source and target systems, and the project’s boundaries. This includes identifying the data types, volume, and criticality.

- Establish Objectives: Sets specific, measurable, achievable, relevant, and time-bound (SMART) goals for the migration, such as data accuracy, minimal downtime, and cost-effectiveness.

- Outline Methodology: Specifies the step-by-step procedures for extracting, transforming, and loading (ETL) the data, including data cleansing, validation, and mapping rules.

- Allocate Resources: Identifies the necessary personnel, tools, and infrastructure required for the migration, including budget allocation and timelines.

- Mitigate Risks: Proactively addresses potential risks and challenges, such as data loss, downtime, and security breaches, with contingency plans and mitigation strategies.

- Ensure Compliance: Addresses data governance, privacy, and regulatory requirements, ensuring compliance with relevant standards and regulations.

Benefits of Using a Data Migration Plan Template Over Ad-Hoc Approaches

Employing a data migration plan template offers significant advantages over ad-hoc, unstructured approaches. This structured methodology enhances efficiency, reduces risks, and improves the overall success rate of data migration projects.

- Increased Efficiency: Templates streamline the migration process by providing a pre-defined framework, reducing the time and effort required for planning and execution. They eliminate the need to start from scratch, allowing teams to focus on specific project requirements.

- Reduced Risk: A well-defined template incorporates best practices and risk mitigation strategies, minimizing the potential for data loss, corruption, and downtime. This structured approach also facilitates the identification and resolution of potential issues before they impact the migration process.

- Improved Data Quality: Templates often include data validation and cleansing steps, ensuring data accuracy and consistency throughout the migration. This results in a higher quality of data in the target system, improving the reliability of business intelligence and decision-making.

- Enhanced Consistency: Templates promote consistency across multiple migration projects, ensuring that the same standards and procedures are applied. This simplifies project management, reduces training requirements, and improves the overall predictability of migration outcomes.

- Better Communication: Templates serve as a central point of reference for all stakeholders, facilitating communication and collaboration. They provide a clear understanding of the project’s scope, objectives, and timelines, ensuring that everyone is aligned on the project’s goals.

Potential Risks of Not Utilizing a Data Migration Plan Template

Failing to utilize a data migration plan template exposes projects to a range of significant risks, potentially leading to costly delays, data loss, and business disruptions. The absence of a structured plan increases the likelihood of errors and inefficiencies, jeopardizing the success of the migration.

- Data Loss or Corruption: Without a well-defined plan, data may be lost or corrupted during the migration process. This can occur due to improper data mapping, inadequate validation, or insufficient testing.

- Extended Downtime: Ad-hoc approaches often lead to prolonged downtime, disrupting business operations and impacting productivity. This is especially critical for systems that support critical business functions.

- Increased Costs: Poorly planned migrations can result in unexpected costs, including additional resources, extended timelines, and remediation efforts.

- Non-Compliance: Failure to address data governance and regulatory requirements can lead to non-compliance issues, resulting in penalties and legal ramifications.

- Project Failure: In the worst-case scenario, a poorly planned migration can lead to project failure, resulting in significant financial losses and reputational damage.

- Inefficient Resource Allocation: Without a clear plan, resources may be misallocated, leading to delays and inefficiencies. The lack of a defined budget and timeline can also lead to cost overruns.

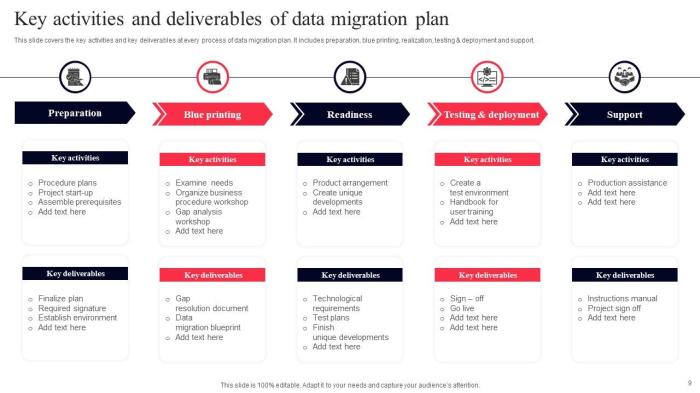

Key Components of a Data Migration Plan Template

A robust data migration plan template is not merely a checklist; it’s a structured framework designed to guide the entire migration process, from initial assessment to post-migration validation. The effectiveness of such a template hinges on the inclusion of several critical components, each playing a vital role in ensuring a successful and efficient data transfer. These components, when meticulously defined and executed, minimize risks, reduce downtime, and ultimately, contribute to the integrity and usability of the migrated data.

Scope Definition

Defining the scope is the foundational element of any data migration plan. This section outlines what data will be migrated, the specific systems involved, and the overall objectives of the migration. A well-defined scope provides clarity and prevents scope creep, which can significantly impact the project’s timeline, budget, and overall success.

A comprehensive scope definition covers the following:

- Data Inventory and Profiling: This involves a detailed cataloging of all data sources, data types, and data volumes. It also includes data profiling, which assesses data quality, identifies potential inconsistencies, and helps to determine data cleansing requirements. For example, if migrating customer data, the scope must specify which customer attributes (e.g., name, address, contact information, purchase history) are included and how data quality will be assessed (e.g., using data validation rules to identify invalid email addresses or missing postal codes).

- System Identification: Clearly identify the source and target systems. Specify the version, architecture, and relevant interfaces of each system. Consider the impact of system interdependencies. For example, migrating data from an older CRM system to a new cloud-based CRM system requires specifying both systems’ characteristics and how they will interact during and after the migration.

- Data Transformation Rules: Defining how data will be transformed during the migration process is crucial. This involves specifying the mapping of data elements from the source to the target system, as well as any data cleansing, standardization, and enrichment rules. For example, mapping the “customer_id” field from the source system to the “account_number” field in the target system, and defining the rules for handling duplicate records.

- Migration Timeline and Deliverables: Establishing a realistic timeline with clearly defined milestones and deliverables is essential for tracking progress and managing expectations. This includes setting deadlines for each phase of the migration, from data extraction to validation. For example, the migration plan might define milestones for data extraction, data transformation, data loading, and data validation, with specific deadlines for each.

Risk Assessment and Mitigation Strategy

Data migration projects inherently involve risks, ranging from data loss and corruption to project delays and budget overruns. A robust risk assessment and mitigation strategy section proactively identifies potential risks, assesses their likelihood and impact, and defines strategies to minimize their occurrence or mitigate their effects.

This section should include:

- Risk Identification: This involves identifying potential risks associated with the data migration. These risks can be categorized as technical, operational, or business-related. For example, technical risks might include data loss during the transfer, data corruption due to incompatibility issues, or system downtime. Operational risks might involve resource constraints or lack of expertise. Business risks could include regulatory compliance issues or impact on business operations.

- Risk Assessment: For each identified risk, assess its likelihood of occurrence and the potential impact on the project. This can be done using a risk matrix, which plots the likelihood of a risk against its potential impact. Risks can be ranked based on their severity.

- Mitigation Strategies: For each identified risk, develop specific mitigation strategies. These strategies should aim to reduce the likelihood of the risk occurring or minimize its impact. For example:

  - Data Loss: Implement robust backup and recovery procedures, conduct thorough data validation before and after migration, and perform multiple test migrations.

  - Data Corruption: Ensure data compatibility between source and target systems, implement data cleansing and transformation rules, and perform thorough data validation.

  - System Downtime: Plan for downtime during off-peak hours, conduct extensive testing to minimize downtime, and have a rollback plan in place.

- Contingency Plans: Develop contingency plans for high-impact risks. These plans outline the steps to be taken if a risk occurs, including how to recover from data loss or system failures. For example, a contingency plan for data loss might involve restoring data from backups.

- Communication Plan: Define a communication plan to keep stakeholders informed about potential risks and mitigation efforts. This includes identifying key stakeholders, defining communication channels, and establishing a regular reporting schedule.
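The risk matrix described above can be sketched in a few lines of Python. This is a minimal illustration, not a standard: the 1 to 5 scales, the severity thresholds, and the `Risk` class are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    """One row of a risk register (hypothetical structure)."""
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)

    @property
    def score(self) -> int:
        # Classic risk-matrix score: likelihood multiplied by impact.
        return self.likelihood * self.impact

    @property
    def severity(self) -> str:
        # Illustrative thresholds; a real project would agree on its own.
        if self.score >= 15:
            return "high"
        if self.score >= 6:
            return "medium"
        return "low"


def rank_risks(risks):
    """Return the risks sorted from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


risks = [
    Risk("System downtime", likelihood=3, impact=4),
    Risk("Resource constraints", likelihood=2, impact=2),
    Risk("Data loss during transfer", likelihood=3, impact=5),
]

for risk in rank_risks(risks):
    print(f"{risk.name}: score={risk.score}, severity={risk.severity}")
```

Ranking by score gives the team a defensible order in which to write mitigation and contingency plans, starting with the high-severity entries.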

Pre-Migration Planning & Activities

Prior to the commencement of any data migration, meticulous planning is paramount. This phase establishes the foundation for a successful migration, mitigating risks and ensuring a smooth transition. It involves a comprehensive assessment of the existing data landscape, defining migration objectives, and selecting appropriate tools and strategies. Thorough pre-migration planning minimizes disruption, reduces the likelihood of data loss or corruption, and optimizes the overall efficiency of the migration process.

Data Profiling and Cleansing Checklist

Data profiling and cleansing are crucial steps in pre-migration planning, aimed at understanding the quality, structure, and content of the source data. This process identifies and rectifies data quality issues, ensuring data accuracy and consistency in the target system. The following checklist outlines the key tasks involved in data profiling and cleansing.

- Data Discovery and Analysis: The initial phase involves comprehensive data discovery and analysis to understand the current data landscape. This includes identifying all data sources, data types, data volumes, and data relationships.

- Data Profiling: This step assesses data quality dimensions, such as completeness, accuracy, consistency, validity, and timeliness. Tools are employed to generate statistical summaries, identify anomalies, and detect data quality issues. Examples include:

  - Completeness checks: Identifying missing values in critical fields. For example, checking for null values in a ‘customer name’ field.

  - Accuracy checks: Validating data against predefined rules and business rules. For instance, verifying that email addresses conform to a standard format (e.g., using regular expressions).

  - Consistency checks: Comparing data across different sources to identify discrepancies. For example, ensuring that customer addresses match in both CRM and billing systems.

  - Validity checks: Ensuring data values fall within acceptable ranges. For instance, verifying that ages are within a reasonable range (e.g., 0-120 years).

- Data Cleansing Strategy Development: Based on the profiling results, a data cleansing strategy is developed. This involves defining the rules and processes for correcting data quality issues. This includes defining data standardization rules (e.g., address standardization), data de-duplication rules, and data enrichment rules.

- Data Cleansing Execution: The data cleansing strategy is executed using data cleansing tools or custom scripts. This step involves applying the defined rules to correct, standardize, and transform the data. This may involve:

  - Data Standardization: Converting data to a consistent format. For example, standardizing date formats (e.g., MM/DD/YYYY).

  - Data De-duplication: Identifying and removing duplicate records. For example, using fuzzy matching algorithms to identify similar customer records.

  - Data Enrichment: Adding missing or incomplete information. For instance, appending postal codes based on addresses.

- Data Validation and Testing: After cleansing, the data is validated and tested to ensure the cleansing processes were effective and that data quality has improved. This involves sampling data and verifying that the cleansed data meets predefined quality metrics.

- Data Quality Monitoring: Establishing ongoing data quality monitoring processes to detect and prevent future data quality issues. This includes implementing automated data quality checks and establishing regular data quality reporting.
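As a rough illustration of the profiling checks in this checklist, the sketch below counts completeness, format, and range problems in a handful of customer records. The field names (`customer_name`, `email`, `age`) and the simple email pattern are assumptions for the example; real profiling is usually done with a dedicated tool or directly in the source database.

```python
import re

# Simple, deliberately permissive email pattern (an assumption, not a full RFC check).
EMAIL_RE = re.compile(r"^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$")


def profile(records):
    """Count basic data quality issues in a list of customer dicts."""
    issues = {"missing_name": 0, "invalid_email": 0, "age_out_of_range": 0}
    for rec in records:
        # Completeness check: the name must be present and non-blank.
        name = rec.get("customer_name")
        if name is None or not str(name).strip():
            issues["missing_name"] += 1
        # Accuracy/format check: the email must match the pattern.
        if not EMAIL_RE.match(rec.get("email") or ""):
            issues["invalid_email"] += 1
        # Validity check: age must be within 0-120; a missing age also counts.
        age = rec.get("age")
        if age is None or not 0 <= age <= 120:
            issues["age_out_of_range"] += 1
    return issues


sample = [
    {"customer_name": "Ada Lovelace", "email": "ada@example.com", "age": 36},
    {"customer_name": "", "email": "not-an-email", "age": 150},
    {"customer_name": None, "email": "bob@example.org", "age": 41},
]

print(profile(sample))
```

The resulting counts feed directly into the cleansing strategy: a field with many failures needs a rule, an owner, and a decision on whether bad records are fixed, defaulted, or excluded.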

Choosing the Right Migration Tools

Selecting the appropriate migration tools is a critical decision that significantly impacts the efficiency, cost, and success of a data migration project. The choice of tools depends on various factors, including the complexity of the migration, the volume of data, the data sources and target systems, and the budget.

Several categories of migration tools are available, each offering distinct capabilities:

- Extract, Transform, Load (ETL) Tools: These tools are widely used for data integration and migration. They extract data from various sources, transform it according to the target system’s requirements, and load it into the target system. Examples include Informatica PowerCenter, Talend, and Apache NiFi. They offer:

  - Data Connectors: Pre-built connectors for a wide range of data sources and targets (databases, cloud storage, etc.).

  - Data Transformation Capabilities: Support for data cleansing, data mapping, data aggregation, and data enrichment.

  - Workflow Management: Automation of data migration processes through workflow design and scheduling.

- Data Replication Tools: These tools focus on replicating data from source to target systems in real-time or near real-time. They are suitable for migrations that require minimal downtime or continuous data synchronization. Examples include Oracle GoldenGate, IBM InfoSphere Data Replication, and AWS Database Migration Service. They offer:

  - Change Data Capture (CDC): Capture and replicate only the changes made to the source data, minimizing the impact on the source system.

  - High Availability and Disaster Recovery: Support for setting up replicated databases for failover and disaster recovery scenarios.

  - Real-time Synchronization: Ensuring data consistency between source and target systems with minimal latency.

- Database Migration Tools: These tools are specifically designed for migrating databases between different platforms or versions. They automate many aspects of the migration process, including schema conversion, data transfer, and validation. Examples include AWS Schema Conversion Tool, Microsoft SQL Server Migration Assistant, and Ispirer Migration Toolkit. They provide:

  - Schema Conversion: Automatically converting database schemas from source to target platforms.

  - Data Transfer: Efficient data transfer mechanisms optimized for database environments.

  - Validation and Testing: Capabilities for validating the migrated data and ensuring data integrity.

- Custom Scripting: For specific and unique migration requirements, custom scripts may be developed using programming languages such as Python, Java, or SQL. This approach offers flexibility and control but requires specialized skills and can be time-consuming.

The selection process involves several key considerations:

- Data Volume and Complexity: Large data volumes and complex data structures often necessitate more robust and scalable tools.

- Data Source and Target Systems: The compatibility of the tool with the source and target systems is crucial.

- Data Transformation Requirements: The tool should offer the necessary data transformation capabilities to meet the project’s requirements.

- Downtime Requirements: Real-time replication tools are ideal for migrations requiring minimal downtime.

- Budget and Resources: The cost of the tool, as well as the required skills and resources, must be considered.

- Vendor Support and Documentation: Adequate vendor support and comprehensive documentation are essential for successful tool implementation and troubleshooting.

Example: A company migrating from an on-premises Oracle database to Amazon Aurora PostgreSQL might use AWS Schema Conversion Tool (SCT) for schema conversion and AWS Database Migration Service (DMS) for data transfer and replication. Another company migrating a large data warehouse might opt for an ETL tool like Informatica PowerCenter, leveraging its robust transformation capabilities.

Data Mapping & Transformation Strategies

Data mapping and transformation are crucial steps in a successful data migration, ensuring data from the source system is accurately translated and adapted to fit the structure and requirements of the target system. This phase directly impacts data integrity and usability post-migration. It involves understanding the source data, defining the target data structure, and establishing the rules for converting data from one format to another.

Data Mapping Process

Data mapping is the process of defining the relationships between data elements in the source system and the corresponding elements in the target system. It is a fundamental step in data migration, acting as a blueprint for how data will be transformed and moved.

The data mapping process typically involves these steps:

- Data Discovery and Analysis: This initial step involves a comprehensive understanding of the source data. Analyze data sources, including data types, formats, and relationships. Identify any data quality issues, such as missing values, inconsistencies, and duplicates. Understanding the data’s current state is crucial for planning the transformation process.

- Target System Definition: Define the structure of the target database or system, including data types, data constraints, and data relationships. This step requires a clear understanding of the target system’s data model and business requirements.

- Mapping Design: Create a detailed mapping document or spreadsheet. This document outlines the relationship between source and target data elements, specifying how data will be transformed. This involves mapping each source field to its corresponding target field and defining any necessary transformation rules.

- Rule Definition: Specify the transformation rules required to convert data from the source format to the target format. These rules can involve simple data type conversions, complex calculations, data cleansing, or data enrichment.

- Mapping Validation: Validate the mapping rules to ensure accuracy and completeness. This involves testing the mappings with sample data to verify that the data is transformed correctly and meets the target system’s requirements.

- Documentation: Document the mapping process, including the source and target data elements, transformation rules, and validation results. This documentation serves as a reference for future migrations and troubleshooting.
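One way to make the mapping document executable is to keep it as data and apply it mechanically. The sketch below assumes a hypothetical `MAPPING` list and hypothetical field names (only the `customer_id` to `account_number` pairing comes from the earlier example); it shows the mapping design, rule definition, and mapping validation steps in miniature.

```python
# Hypothetical mapping specification: one entry per target field.
# "transform" is a callable applied to the source value, or None for a straight copy.
MAPPING = [
    {"source": "customer_id", "target": "account_number", "transform": str},
    {"source": "cust_name", "target": "full_name", "transform": str.strip},
    {"source": "signup", "target": "signup_date", "transform": None},
]


def apply_mapping(source_row, mapping):
    """Build a target row from a source row by applying each mapping rule."""
    target_row = {}
    for rule in mapping:
        value = source_row[rule["source"]]
        transform = rule["transform"]
        target_row[rule["target"]] = transform(value) if transform else value
    return target_row


def validate_mapping(mapping, source_fields, target_fields):
    """Return a list of problems: unknown fields and unmapped target fields."""
    problems = []
    for rule in mapping:
        if rule["source"] not in source_fields:
            problems.append(f"unknown source field: {rule['source']}")
        if rule["target"] not in target_fields:
            problems.append(f"unknown target field: {rule['target']}")
    mapped_targets = {rule["target"] for rule in mapping}
    for field in sorted(set(target_fields) - mapped_targets):
        problems.append(f"unmapped target field: {field}")
    return problems


row = {"customer_id": 42, "cust_name": "  Ada Lovelace ", "signup": "2020-01-01"}
print(apply_mapping(row, MAPPING))
print(validate_mapping(
    MAPPING,
    source_fields={"customer_id", "cust_name", "signup"},
    target_fields={"account_number", "full_name", "signup_date", "region"},
))
```

Keeping the specification as data means the same structure can drive the migration scripts, the validation step, and the generated documentation, so the three cannot drift apart.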

Data Transformation Techniques

Data transformation involves converting data from its source format to the format required by the target system. Several techniques are commonly used to transform data effectively:

- Data Cleansing: This involves correcting or removing errors, inconsistencies, and inaccuracies in the data. Examples include standardizing address formats, correcting spelling errors, and removing duplicate records.

- Data Conversion: This involves converting data from one format or data type to another. For instance, converting dates from one format to another (e.g., MM/DD/YYYY to YYYY-MM-DD), or converting text to numeric values.

- Data Aggregation: This involves summarizing or combining data from multiple sources. For example, calculating the total sales for a particular product category or region.

- Data Enrichment: This involves adding additional information to the data to improve its value and completeness. Examples include adding geographic coordinates to addresses or appending product descriptions to product codes.

- Data Filtering: This involves selecting only specific data based on certain criteria. For example, filtering out records that do not meet certain conditions, such as only including customers from a specific country.

- Data Standardization: This involves applying consistent formatting and values across all data elements. Examples include standardizing units of measurement (e.g., using metric units) or using consistent naming conventions.
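Three of these techniques (conversion, filtering, and aggregation) are easy to show on a small in-memory data set. The following is a minimal sketch; the `orders` rows and their field names are invented for the example, and a real migration would typically run equivalent logic in an ETL tool or in SQL.

```python
from collections import defaultdict
from datetime import datetime


def convert_date(value):
    """Data conversion: MM/DD/YYYY -> YYYY-MM-DD."""
    return datetime.strptime(value, "%m/%d/%Y").strftime("%Y-%m-%d")


def filter_by_country(rows, country):
    """Data filtering: keep copies of the rows for one country."""
    return [dict(row) for row in rows if row["country"] == country]


def total_sales_by_category(rows):
    """Data aggregation: sum the amount per product category."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["category"]] += row["amount"]
    return dict(totals)


orders = [
    {"order_date": "03/15/2024", "country": "DE", "category": "books", "amount": 20.0},
    {"order_date": "03/16/2024", "country": "US", "category": "books", "amount": 15.0},
    {"order_date": "04/01/2024", "country": "DE", "category": "games", "amount": 60.0},
    {"order_date": "04/02/2024", "country": "DE", "category": "books", "amount": 5.0},
]

german_orders = filter_by_country(orders, "DE")
for row in german_orders:
    row["order_date"] = convert_date(row["order_date"])

print(german_orders[0]["order_date"])
print(total_sales_by_category(german_orders))
```

Because `filter_by_country` returns copies, the source rows stay untouched, which makes it possible to compare source and transformed values later during validation.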

Documenting Data Transformation Rules

Documenting data transformation rules clearly and concisely is crucial for ensuring the accuracy and maintainability of the data migration process. A well-documented set of rules allows for easy review, modification, and troubleshooting. A common way to document these rules is using a table format. The following table illustrates how to document data transformation rules.

| Source Column | Target Column | Transformation Rule | Validation |
|---|---|---|---|
| Source_Customer_Name | Target_Customer_Name | Trim leading/trailing spaces and convert to title case. | Verify the target name matches the source name after transformation for a sample of 100 records. |
| Source_Order_Date | Target_Order_Date | Convert date format from MM/DD/YYYY to YYYY-MM-DD. | Validate that the target date format is consistent and valid after the conversion. |
| Source_Product_Price | Target_Product_Price | Multiply by 1.1 (10% increase). | Verify that the transformed price is calculated correctly, within an acceptable margin of error. |
| Source_City | Target_City | Lookup in a city standardization table to ensure consistency. | Verify that the standardized city name is accurate by cross-referencing with a geographical database. |
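The four rules in the table translate almost directly into code. The sketch below is one possible reading of them: `CITY_LOOKUP` stands in for the city standardization table and its entries are invented, and rounding prices to two decimals is an assumption the table does not state.

```python
from datetime import datetime
from decimal import Decimal, ROUND_HALF_UP

# Stand-in for the city standardization table (hypothetical entries).
CITY_LOOKUP = {"nyc": "New York", "new york city": "New York", "sf": "San Francisco"}


def transform_row(src):
    """Apply the documented transformation rules to one source row."""
    # Decimal avoids binary floating-point drift on currency values.
    price = (Decimal(src["Source_Product_Price"]) * Decimal("1.1")).quantize(
        Decimal("0.01"), rounding=ROUND_HALF_UP
    )
    city = src["Source_City"].strip()
    return {
        # Trim leading/trailing spaces and convert to title case.
        "Target_Customer_Name": src["Source_Customer_Name"].strip().title(),
        # MM/DD/YYYY -> YYYY-MM-DD; raises ValueError on an invalid date.
        "Target_Order_Date": datetime.strptime(
            src["Source_Order_Date"], "%m/%d/%Y"
        ).strftime("%Y-%m-%d"),
        # Multiply by 1.1 (10% increase).
        "Target_Product_Price": price,
        # Fall back to the trimmed source value when no standard name is known.
        "Target_City": CITY_LOOKUP.get(city.lower(), city),
    }


source_row = {
    "Source_Customer_Name": "  jane DOE ",
    "Source_Order_Date": "07/04/2023",
    "Source_Product_Price": "19.99",
    "Source_City": "NYC",
}
print(transform_row(source_row))
```

Note that `str.title()` capitalizes naively (for example, it produces "Jane'S" from "jane's"), so names with apostrophes or prefixes may need a dedicated rule.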

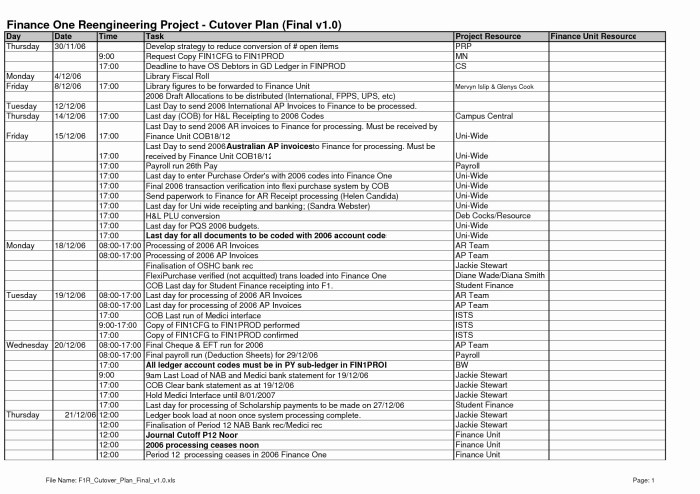

Migration Execution and Testing Phase

The execution and testing phase represents the culmination of all prior planning and preparation in a data migration project. This phase involves the actual transfer of data, the implementation of transformations, and rigorous validation to ensure data integrity and accuracy. A well-defined execution plan and a comprehensive testing strategy are critical to minimizing risks and guaranteeing a successful migration.

Execution Phase Implementation

The execution phase in a data migration plan template outlines the specific steps and procedures required to move data from the source system to the target system. This section details the operational aspects, including scheduling, resource allocation, and monitoring.

- Detailed Timeline and Schedule: A precise timeline, often visualized using Gantt charts, is essential. The timeline should delineate each task, its duration, dependencies, and assigned resources. The schedule must account for potential delays and include buffer time for unforeseen issues. For instance, a migration involving a large database might schedule the bulk data transfer during off-peak hours to minimize disruption to business operations.

- Resource Allocation and Responsibilities: Clearly define the roles and responsibilities of each team member involved in the migration process. This includes data migration specialists, database administrators, application developers, and business users. The template should specify who is responsible for executing each task, validating data, and handling any issues that arise. An example would be assigning a dedicated data migration specialist to oversee the execution of data transformation scripts and a database administrator to monitor the server performance during the migration.

- Data Transfer Methods and Tools: Specify the data transfer methods and tools to be used. This includes identifying the technologies employed for extracting, transforming, and loading (ETL) data. Common tools include commercial ETL software (e.g., Informatica PowerCenter, Talend), custom scripts, or database-specific utilities. The template should document the configuration settings, version numbers, and any specific parameters required for each tool.

- Monitoring and Reporting: Establish a robust monitoring system to track the progress of the migration. This involves setting up automated alerts for critical events, such as errors, performance bottlenecks, or data validation failures. The template should define the key performance indicators (KPIs) to be monitored, such as data transfer rate, error rates, and data quality metrics. Regular reporting, including status updates and issue logs, is crucial for keeping stakeholders informed.

- Communication Plan: A well-defined communication plan is essential for keeping all stakeholders informed throughout the execution phase. This plan should specify the frequency and format of communication, the recipients, and the escalation procedures for critical issues. For instance, daily status meetings might be scheduled during the active migration period, with regular email updates and issue logs.
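The monitoring KPIs mentioned above (transfer rate and error rate) can be collected by a thin wrapper around whatever performs the load. In this sketch, `migrate_in_batches`, the `load_batch` callback, and the 1% error threshold are all hypothetical; a real setup would send alerts to a monitoring system rather than print them.

```python
import time


def migrate_in_batches(rows, load_batch, batch_size=1000, max_error_rate=0.01):
    """Load rows batch by batch and return simple KPIs for reporting."""
    loaded = failed = 0
    started = time.perf_counter()
    for start in range(0, len(rows), batch_size):
        batch = rows[start:start + batch_size]
        try:
            load_batch(batch)  # caller-supplied function that writes one batch
            loaded += len(batch)
        except Exception as exc:
            # A failed batch is counted and reported, then the run continues.
            failed += len(batch)
            print(f"ALERT: batch at offset {start} failed: {exc}")
    elapsed = time.perf_counter() - started
    total = loaded + failed
    error_rate = failed / total if total else 0.0
    return {
        "loaded": loaded,
        "failed": failed,
        "error_rate": error_rate,
        "rows_per_second": loaded / elapsed if elapsed > 0 else 0.0,
        "within_threshold": error_rate <= max_error_rate,
    }


def demo_loader(batch):
    """Pretend loader that rejects any batch containing a None row."""
    if any(row is None for row in batch):
        raise ValueError("batch contains an empty row")


demo_rows = list(range(10))
demo_rows[7] = None
report = migrate_in_batches(demo_rows, demo_loader, batch_size=5)
print(report["loaded"], report["failed"], report["within_threshold"])
```

The returned dictionary maps onto the status report: the error rate and threshold flag drive escalation, while rows per second helps check whether the migration will finish inside the planned window.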

Testing and Validation Procedures

Testing and validation are crucial for ensuring data accuracy, completeness, and consistency in the target system. This section outlines the procedures to be followed to verify the migrated data.

- Test Data Selection and Preparation: The testing phase requires the selection of representative test data sets. This data should encompass various scenarios, including positive and negative test cases, boundary conditions, and edge cases. Test data should be carefully prepared to ensure that it reflects the complexity and diversity of the production data. For example, selecting a subset of customer records, transaction data, and product information to test the migration process.

- Data Validation Checks: Define a comprehensive set of data validation checks to verify the integrity of the migrated data. These checks can include:

  - Data Completeness: Ensure that all required data fields have been populated in the target system.

  - Data Accuracy: Verify that the data values are correct and consistent with the source system.

  - Data Consistency: Check that data relationships and dependencies are maintained.

  - Data Integrity: Validate that the data meets predefined business rules and constraints.

- Testing Levels and Types: Implement different levels of testing, including:

  - Unit Testing: Testing individual data transformation scripts or components.

  - Integration Testing: Testing the interaction between different components or systems.

  - System Testing: Testing the entire migration process from end-to-end.

  - User Acceptance Testing (UAT): Involving business users to validate the data and functionality in the target system.

- Defect Tracking and Resolution: Establish a system for tracking and resolving any defects or issues identified during the testing phase. This system should include a defect tracking tool, clear escalation procedures, and a process for verifying that defects have been successfully resolved.

- Performance Testing: Conduct performance testing to ensure that the target system can handle the volume of data and the anticipated workload. This involves measuring response times, throughput, and resource utilization.
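A common way to automate the completeness and accuracy checks is to compare row counts and a content hash between source and target. The sketch below uses two in-memory SQLite databases as stand-ins for the real systems; `table_fingerprint`, `reconcile`, and the `customers` table are invented for the example. It only suits tables migrated without transformation, and it assumes both drivers return values as the same Python types.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table, key_column):
    """Return (row_count, sha256 hex digest) over rows ordered by key_column.

    Table and column names are interpolated into the SQL, so they must come
    from trusted configuration, never from user input.
    """
    cursor = conn.execute(f"SELECT * FROM {table} ORDER BY {key_column}")
    digest = hashlib.sha256()
    count = 0
    for row in cursor:
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def reconcile(source, target, table, key_column):
    """Compare row counts and content hashes of one table in two databases."""
    src_count, src_hash = table_fingerprint(source, table, key_column)
    tgt_count, tgt_hash = table_fingerprint(target, table, key_column)
    return {"counts_match": src_count == tgt_count, "content_match": src_hash == tgt_hash}


def make_demo_db(rows):
    """Create an in-memory database with a small customers table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)", rows)
    return conn


source_db = make_demo_db([(1, "Ada"), (2, "Bob")])
target_db = make_demo_db([(1, "Ada"), (2, "Bob")])
print(reconcile(source_db, target_db, "customers", "id"))
```

A matching count with a mismatched hash points to altered values rather than missing rows, which narrows down where to look when a check fails.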

Rollback Plan Incorporation

A rollback plan is a critical component of a data migration plan, providing a contingency strategy in case the migration fails or encounters significant issues. It outlines the steps to revert to the pre-migration state, minimizing downtime and data loss.

- Rollback Triggers and Conditions: Define the specific conditions that would trigger a rollback. These might include:

  - Critical data validation failures.

  - Unexpected performance issues.

  - Major system errors or outages.

- Rollback Procedures: Detail the steps required to restore the source system to its pre-migration state. This might involve:

  - Restoring data from backups.

  - Reverting any changes made to the target system.

  - Deactivating the migrated data.

- Data Backup and Recovery: Establish a robust data backup and recovery strategy to ensure that data can be restored in the event of a failure. This includes:

  - Creating backups of the source and target systems before the migration.

  - Testing the backup and recovery procedures.

  - Documenting the backup schedule and retention policies.

- Communication and Notification: Define the communication plan for a rollback scenario. This includes:

  - Notifying all stakeholders of the rollback.

  - Providing regular updates on the progress of the rollback.

  - Coordinating the rollback activities.

- Testing the Rollback Plan: The rollback plan should be tested before the actual migration to ensure that it functions correctly. This involves simulating a rollback scenario and verifying that the data can be restored to its original state.
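Rollback triggers are easier to apply under pressure when they are written down as explicit, testable conditions. The sketch below encodes the three trigger types listed above; the `MigrationStatus` fields and both thresholds are assumptions for illustration, and each project would set its own values.

```python
from dataclasses import dataclass

# Hypothetical thresholds; agree on real values before the migration starts.
MAX_VALIDATION_FAILURE_RATE = 0.001  # fraction of records failing critical checks
MAX_P95_RESPONSE_MS = 2000.0         # 95th percentile response time on the target


@dataclass
class MigrationStatus:
    """Snapshot of the measurements taken after a migration step (hypothetical)."""
    validation_failure_rate: float
    p95_response_ms: float
    system_outage: bool


def rollback_reasons(status):
    """Return the list of triggered rollback conditions (empty means proceed)."""
    reasons = []
    if status.validation_failure_rate > MAX_VALIDATION_FAILURE_RATE:
        reasons.append("critical data validation failures")
    if status.p95_response_ms > MAX_P95_RESPONSE_MS:
        reasons.append("unexpected performance issues")
    if status.system_outage:
        reasons.append("major system error or outage")
    return reasons


status = MigrationStatus(validation_failure_rate=0.02, p95_response_ms=3500.0, system_outage=False)
reasons = rollback_reasons(status)
if reasons:
    print("Rollback triggered:", "; ".join(reasons))
else:
    print("No rollback trigger met")
```

Returning the reasons rather than a bare yes/no gives the communication plan something concrete to pass on when stakeholders are notified.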

Data Validation & Quality Assurance

Data validation and quality assurance (QA) are critical components of a data migration plan, ensuring the integrity and reliability of the data throughout the migration process. Rigorous validation and QA activities are essential to minimize data loss, inconsistencies, and errors, ultimately contributing to a successful migration and a trustworthy data environment. The goal is to verify that the migrated data accurately reflects the source data and meets predefined quality standards.

Data Validation Techniques

Data validation encompasses a range of techniques used to verify the accuracy, completeness, and consistency of data. These techniques are applied at various stages of the migration process, from pre-migration data profiling to post-migration data reconciliation.

- Data Profiling: Data profiling involves analyzing the source data to understand its structure, content, and quality. This includes identifying data types, formats, ranges, null values, and potential anomalies. The information gathered during data profiling informs the design of validation rules and transformation strategies.

- Data Type Validation: This validation verifies that data conforms to the expected data types (e.g., integer, string, date). It ensures that data in each field adheres to the specified format. For example, a ‘Date of Birth’ field can be validated to ensure it contains a valid date in the expected format (YYYY-MM-DD).

- Format Validation: Format validation checks that data adheres to specific formatting rules, such as email addresses, phone numbers, or postal codes. Regular expressions are often used to define and enforce these formats. For instance, validating an email address using the regular expression:

^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$

- Range Validation: This checks that data values fall within predefined acceptable ranges. For example, validating a ‘Salary’ field to ensure the values are within a minimum and maximum salary range.

- Constraint Validation: This verifies that data conforms to business rules and constraints, such as referential integrity (ensuring foreign keys reference existing primary keys), uniqueness constraints (ensuring that a field contains unique values), and mandatory fields (ensuring that required fields are populated).

- Completeness Validation: This assesses whether all required data fields are populated. It identifies missing or null values in critical fields.

- Cross-Field Validation: This involves validating the relationship between different data fields. For instance, verifying that the ‘Order Date’ is earlier than the ‘Ship Date’.

- Data Comparison: This involves comparing data between the source and target systems to identify discrepancies. This can be done through sampling, reconciliation reports, or full data comparisons.

- Business Rule Validation: Applying specific business rules to data to ensure its accuracy and adherence to business logic. This could involve calculating derived values or checking for inconsistencies based on complex business rules.
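Several of the techniques above (completeness, format, data type, range, and cross-field validation) can be combined into a single per-record check. This is a minimal sketch rather than a complete validation framework; the field names, the required-field list, and the salary bounds are assumptions chosen to mirror the examples in the list.

```python
import re
from datetime import datetime

# Email pattern from the format validation example above.
EMAIL_RE = re.compile(r"^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$")

# Hypothetical schema details for illustration only.
REQUIRED_FIELDS = ("email", "date_of_birth", "salary", "order_date", "ship_date")
SALARY_MIN, SALARY_MAX = 20_000, 500_000


def parse_date(value):
    """Parse a YYYY-MM-DD string; return None if it cannot be parsed."""
    try:
        return datetime.strptime(value, "%Y-%m-%d").date()
    except (TypeError, ValueError):
        return None


def validate_record(record: dict) -> list[str]:
    """Return a list of validation errors; an empty list means the record passed."""
    errors = []

    # Completeness: required fields must be present and non-empty.
    for field in REQUIRED_FIELDS:
        if record.get(field) in (None, ""):
            errors.append(f"{field}: missing")

    # Format validation: email must match the pattern.
    email = record.get("email")
    if email and not EMAIL_RE.match(email):
        errors.append("email: bad format")

    # Data type validation: date of birth must parse as a date.
    dob = record.get("date_of_birth")
    if dob and parse_date(dob) is None:
        errors.append("date_of_birth: not a valid YYYY-MM-DD date")

    # Range validation: salary must be numeric and within bounds.
    salary = record.get("salary")
    if salary not in (None, ""):
        if not isinstance(salary, (int, float)):
            errors.append("salary: not a number")
        elif not SALARY_MIN <= salary <= SALARY_MAX:
            errors.append("salary: out of range")

    # Cross-field validation: order date must be earlier than ship date.
    order = parse_date(record.get("order_date"))
    ship = parse_date(record.get("ship_date"))
    if order and ship and order >= ship:
        errors.append("order_date: not earlier than ship_date")

    return errors
```

Collecting all errors for a record, instead of stopping at the first, tends to make reconciliation reports more useful because each record needs to be inspected only once.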

Importance of Data Quality Assurance

Data Quality Assurance (DQA) is crucial for ensuring that the migrated data meets the required standards of accuracy, completeness, consistency, and timeliness. Implementing a robust DQA process is essential for maintaining data integrity and preventing the propagation of errors.

- Data Accuracy: DQA ensures that the migrated data accurately reflects the source data, minimizing errors and discrepancies. This includes verifying data values, formats, and relationships.

- Data Completeness: DQA ensures that all required data fields are populated and that no data is lost during the migration process.

- Data Consistency: DQA ensures that data is consistent across different systems and applications, reducing the risk of conflicting information. This includes standardizing data formats, applying consistent business rules, and resolving data conflicts.

- Data Validity: DQA validates data against predefined rules and constraints to ensure its integrity and reliability. This includes checking data types, formats, ranges, and relationships.

- Data Integrity: DQA helps to maintain the integrity of the data by identifying and correcting errors, inconsistencies, and anomalies. This includes enforcing data validation rules, implementing data cleansing procedures, and performing data reconciliation.

- Data Reliability: DQA contributes to the reliability of the data by ensuring its accuracy, completeness, and consistency. This is crucial for making informed business decisions.

- Regulatory Compliance: DQA helps to ensure that data complies with relevant regulations and standards, such as GDPR, HIPAA, or industry-specific requirements.

Common Data Quality Issues

Data quality issues can arise from various sources, including errors in data entry, system limitations, data integration challenges, and changes in business processes. Identifying and addressing these issues is critical for ensuring data integrity.

- Inaccurate Data: This includes data that is incorrect or contains errors. Examples include incorrect names, addresses, or financial figures.

- Incomplete Data: This refers to missing data in required fields. Examples include missing contact information or product descriptions.

- Inconsistent Data: This involves conflicting or contradictory data across different systems or within the same system. Examples include different address formats or conflicting product descriptions.

- Invalid Data: This includes data that does not conform to predefined rules or constraints. Examples include invalid dates, incorrect data types, or values outside of acceptable ranges.

- Duplicate Data: This refers to multiple instances of the same data within a system. Examples include duplicate customer records or product entries.

- Outdated Data: This involves data that is no longer current or relevant. Examples include obsolete contact information or outdated product pricing.

- Incorrect Formatting: This includes data that does not adhere to the required formatting rules. Examples include inconsistent date formats or incorrect use of capitalization.

- Data Entry Errors: These are errors introduced during data entry, such as typos, transposed numbers, or incorrect codes.

- System Errors: These are errors caused by system limitations or bugs, such as data corruption or incorrect data transformations.

- Data Integration Issues: These are issues that arise during the integration of data from different sources, such as data mapping errors or data transformation failures.
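Two of the issues above, incomplete data and duplicate data, are straightforward to measure before migration starts. The sketch below assumes the source rows have already been read into a list of dictionaries; the function name and the choice of key fields are illustrative, not part of any particular tool.

```python
from collections import Counter


def profile_quality(rows, key_fields, required):
    """Count missing required values and duplicate keys in a list of row dicts."""
    missing = Counter()
    keys = Counter()
    for row in rows:
        # Incomplete data: required field absent, None, or blank.
        for field in required:
            value = row.get(field)
            if value is None or str(value).strip() == "":
                missing[field] += 1
        # Duplicate data: normalise case and whitespace so trivially
        # different spellings of the same key are counted together.
        key = tuple(str(row.get(f, "")).strip().lower() for f in key_fields)
        keys[key] += 1
    duplicates = {k: n for k, n in keys.items() if n > 1}
    return {"rows": len(rows), "missing": dict(missing), "duplicates": duplicates}


if __name__ == "__main__":
    sample = [
        {"name": "Ann Lee", "email": "ann@example.com"},
        {"name": "ann lee ", "email": "ANN@example.com"},
        {"name": "Bob", "email": ""},
    ]
    print(profile_quality(sample, key_fields=("email",), required=("name", "email")))
```

Counts like these give the cleansing effort a baseline, so the same profile can be rerun after cleansing to confirm the numbers actually dropped.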

Post-Migration Activities & Considerations

The completion of data migration is not the endpoint; it signifies a transition to a new operational state. Post-migration activities are critical for ensuring data integrity, system stability, and user acceptance. These activities focus on validating the migration’s success, establishing data governance practices, and continuously monitoring the environment for potential issues. Thorough planning and execution of these steps are vital for realizing the full benefits of the data migration project.

Data Reconciliation and Auditing

Data reconciliation and auditing are essential post-migration processes designed to verify the accuracy and completeness of the migrated data. This involves comparing data sets between the source and target systems to identify discrepancies and ensure that all data has been successfully transferred. Regular audits help maintain data quality and identify potential issues that could compromise data integrity.

- Data Reconciliation Techniques: Various techniques can be employed for data reconciliation. These include:

  - Record Counts: Comparing the total number of records in each table or data set between the source and target systems. This provides a high-level check of completeness.

  - Checksum Comparisons: Calculating checksums (e.g., MD5, SHA-256) for data files or records in both systems and comparing the results. This detects any changes in the data during migration.

  - Field-Level Validation: Comparing the values of specific fields in individual records between the source and target systems. This identifies data transformation errors or data quality issues.

  - Data Profiling: Using data profiling tools to analyze the data in both systems and identify any inconsistencies in data types, formats, or ranges.

- Auditing Processes: Implementing robust auditing processes is crucial for maintaining data integrity and ensuring accountability.

  - Audit Logs: Maintaining detailed audit logs that record all data access, modifications, and deletions. These logs should include information such as the user, timestamp, action performed, and the data affected.

  - Regular Audits: Conducting regular audits of the migrated data to identify and address any data quality issues. These audits should be performed at predetermined intervals, such as daily, weekly, or monthly, depending on the criticality of the data.

  - Automated Monitoring: Implementing automated monitoring tools to detect anomalies or inconsistencies in the data. These tools can alert data stewards to potential issues that require investigation.
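The record count and checksum techniques described above can be sketched in a few lines. This example assumes both systems can export rows as dictionaries that share a primary key and that values have already been converted to the same Python types on both sides; the function names are illustrative.

```python
import hashlib


def row_checksum(row: dict) -> str:
    """SHA-256 over the row's fields in sorted-key order, so column order is irrelevant."""
    canonical = "|".join(f"{k}={row[k]!r}" for k in sorted(row))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def reconcile(source: list, target: list, key: str) -> dict:
    """Compare record counts and per-row checksums between source and target."""
    src = {r[key]: row_checksum(r) for r in source}
    tgt = {r[key]: row_checksum(r) for r in target}
    return {
        "source_count": len(source),
        "target_count": len(target),
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


if __name__ == "__main__":
    source_rows = [{"id": 1, "name": "Ann"}, {"id": 2, "name": "Bob"}, {"id": 3, "name": "Cy"}]
    target_rows = [{"id": 1, "name": "Ann"}, {"id": 2, "name": "Bobby"}]
    print(reconcile(source_rows, target_rows, key="id"))
```

Because the checksum is built from `repr` of each value, `1` and `"1"` hash differently. Where the migration intentionally changes types or formats, the comparison would need to run on the transformed source rows instead of the raw ones.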

Data Governance and Security Post-Migration

Data governance and security are paramount considerations following a data migration. Establishing and enforcing robust data governance policies and security measures ensures that the migrated data is protected from unauthorized access, misuse, and loss. This involves defining roles and responsibilities, implementing access controls, and establishing procedures for data management and protection.

- Data Governance Framework: Implementing a comprehensive data governance framework is essential for managing and protecting the migrated data. This framework should include:

  - Data Ownership: Defining clear data ownership roles and responsibilities. This ensures that there is a designated individual or team responsible for the quality, integrity, and security of the data.

  - Data Policies: Establishing clear data policies that define how data should be managed, accessed, and used. These policies should cover data quality, data security, data privacy, and data retention.

  - Data Standards: Defining data standards that specify how data should be formatted, stored, and processed. This ensures consistency and interoperability across different systems.

- Security Measures: Implementing appropriate security measures is critical for protecting the migrated data from unauthorized access, misuse, and loss.

  - Access Controls: Implementing robust access controls that restrict access to the data based on the principle of least privilege. This means that users should only have access to the data they need to perform their job functions.

  - Encryption: Encrypting sensitive data both in transit and at rest. This protects the data from unauthorized access even if the storage media or network is compromised.

  - Data Loss Prevention (DLP): Implementing DLP measures to prevent sensitive data from leaving the organization’s control. This includes monitoring network traffic, email, and other communication channels for potential data breaches.

  - Regular Security Audits: Conducting regular security audits to identify and address any vulnerabilities in the data security infrastructure.

Template Customization and Adaptation

Data migration plan templates provide a robust foundation for managing complex data transfer projects. However, their inherent generic nature necessitates customization to align with the specific requirements of each migration initiative. Effective adaptation ensures the template remains a valuable asset, streamlining processes and mitigating potential risks. This section outlines strategies for tailoring a data migration plan template, focusing on adapting to diverse project needs, data types, and the scalability necessary for large-scale migrations.

Adapting to Different Project Needs

Adapting a data migration plan template involves modifying its components to reflect the unique characteristics of the project. This ensures the plan is relevant, practical, and effectively guides the migration process.

- Project Scope Definition: The initial step involves clearly defining the project’s scope. This includes identifying the source and target systems, the data to be migrated, and the overall objectives. For example, a migration from a legacy system to a cloud-based CRM requires a different focus than migrating data between two relational databases. The template should be modified to reflect these differences in scope.

- Risk Assessment: Each project presents unique risks. The template should be customized to include a detailed risk assessment section, identifying potential issues (data loss, downtime, security breaches) and their corresponding mitigation strategies. This section is vital for anticipating and addressing potential challenges.

- Resource Allocation: The template needs to be adapted to reflect the resources available, including personnel, budget, and infrastructure. This may involve adjusting timelines, defining roles and responsibilities, and outlining the required tools and technologies. For example, a project with limited IT staff requires a more streamlined approach, possibly leveraging automated migration tools.

- Timeline and Milestones: Customizing the timeline is critical. The template should be adjusted to incorporate project-specific milestones, deadlines, and dependencies. A Gantt chart, embedded within the template, visually represents the project’s schedule and allows for easy tracking of progress. The level of detail should align with the project’s complexity; more complex projects require a more granular timeline.

- Communication Plan: A robust communication plan is essential. The template needs to be tailored to specify communication channels, frequency, and stakeholders. This includes defining who needs to be informed about project progress, issues, and decisions. For instance, in a migration involving sensitive customer data, communication protocols must adhere to strict data privacy regulations.

Tailoring to Specific Data Types

Data migration projects often involve diverse data types, each requiring specific handling and transformation strategies. The template should be adapted to accommodate these nuances.

- Structured Data: Relational databases (e.g., SQL Server, Oracle) contain structured data organized in tables with predefined schemas. The template should incorporate detailed data mapping specifications, transformation rules (e.g., data type conversions, value lookups), and validation criteria to ensure data integrity. The use of ETL (Extract, Transform, Load) tools should be explicitly mentioned.

- Semi-Structured Data: Formats like JSON and XML represent semi-structured data. The template should include instructions for parsing and transforming these data formats. This might involve defining XSLT transformations for XML or using JSON processing libraries. Consider the data’s hierarchical structure and the need for schema validation.

- Unstructured Data: Files, images, and documents constitute unstructured data. The template must address data migration strategies such as content indexing, metadata extraction, and the preservation of file formats. Consider the use of Optical Character Recognition (OCR) for text extraction from images or documents.

- Data Volume and Velocity: The template should account for the volume and velocity of data. For large datasets, consider techniques like parallel processing, data partitioning, and incremental migration to optimize performance. The chosen migration tools should be capable of handling the expected data throughput.

- Data Security and Compliance: The template should incorporate specific sections related to data security, including data encryption, access controls, and compliance with relevant regulations (e.g., GDPR, HIPAA). This might involve defining data masking techniques or specifying security audits.
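For the semi-structured case above, a common first step is to flatten a hierarchical JSON document into column-like keys that can be mapped to a relational target. The following is a minimal sketch using only the standard library; the dotted-key naming scheme is an assumption, and empty objects or arrays are simply dropped.

```python
import json


def flatten(obj, prefix=""):
    """Flatten nested dicts and lists into one dict with dotted keys."""
    flat = {}
    if isinstance(obj, dict):
        for key, value in obj.items():
            flat.update(flatten(value, f"{prefix}{key}."))
    elif isinstance(obj, list):
        for index, value in enumerate(obj):
            flat.update(flatten(value, f"{prefix}{index}."))
    else:
        # Leaf value: strip the trailing dot from the accumulated prefix.
        flat[prefix[:-1]] = obj
    return flat


if __name__ == "__main__":
    doc = json.loads(
        '{"id": 7, "contact": {"email": "a@example.com", "phones": ["111", "222"]}}'
    )
    for column, value in flatten(doc).items():
        print(column, "=", value)
```

Array elements become numbered keys here. If the target schema models them as child rows instead, the list branch would need to emit separate records rather than extra columns.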

Scaling the Template for Large-Scale Migrations

Large-scale data migrations require a template that can handle increased complexity and volume. Scalability is achieved by incorporating specific features and approaches.

- Modular Design: The template should be designed in a modular fashion, allowing for the breakdown of the migration process into manageable components. This facilitates parallel processing, team collaboration, and easier management of individual tasks. Each module should be well-defined and have clear dependencies.

- Automated Processes: The template should emphasize the automation of key tasks, such as data validation, data transformation, and testing. Scripting languages (e.g., Python, PowerShell) can automate repetitive tasks and improve efficiency. Consider using automation tools like Ansible or Chef for infrastructure provisioning and configuration.

- Version Control: Implement version control for the data migration plan template itself, along with all associated scripts, configurations, and documentation. This allows for tracking changes, reverting to previous versions, and collaborating effectively. Tools like Git are essential.

- Performance Monitoring: The template should include sections for monitoring the performance of the migration process. This involves defining key performance indicators (KPIs) like data throughput, error rates, and system resource utilization. Real-time monitoring dashboards can provide valuable insights into the migration’s progress.

- Phased Rollout: For large-scale migrations, a phased rollout approach is often beneficial. The template should include detailed plans for staging data migration, testing in a pilot environment, and gradually migrating data in batches. This minimizes risks and allows for adjustments based on early results.

- Disaster Recovery Plan: A comprehensive disaster recovery plan is essential for large-scale migrations. The template should incorporate strategies for data backup, failover mechanisms, and procedures for recovering from unexpected events. Regular testing of the disaster recovery plan is crucial.
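Batch-wise migration and the KPIs mentioned above (throughput and error rate) fit together naturally. The sketch below is one way to structure it, assuming a caller-supplied `load_batch` function (hypothetical) that writes one batch to the target and returns the number of rows that failed.

```python
import time
from itertools import islice


def batches(iterable, size):
    """Yield successive lists of at most `size` items from any iterable."""
    iterator = iter(iterable)
    while True:
        chunk = list(islice(iterator, size))
        if not chunk:
            return
        yield chunk


def migrate_in_batches(rows, load_batch, batch_size=1000):
    """Load rows batch by batch and report simple migration KPIs."""
    started = time.perf_counter()
    total = failed = 0
    for chunk in batches(rows, batch_size):
        failed += load_batch(chunk)  # load_batch returns the failed-row count
        total += len(chunk)
    elapsed = time.perf_counter() - started
    return {
        "rows": total,
        "failed": failed,
        "error_rate": failed / total if total else 0.0,
        "rows_per_second": total / elapsed if elapsed > 0 else float("inf"),
    }


if __name__ == "__main__":
    def fake_load(chunk):
        # Stand-in loader: pretend every row divisible by 10 fails.
        return sum(1 for row in chunk if row % 10 == 0)

    print(migrate_in_batches(range(1, 26), fake_load, batch_size=10))
```

Keeping the loader as a parameter means the same loop can be pointed at a pilot environment first and at production later, which matches the phased rollout approach described above.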

Choosing the Right Template Format

The selection of an appropriate template format is crucial for the successful execution of a data migration plan. The format chosen significantly impacts the plan’s clarity, organization, and ease of collaboration. The ideal format should align with the project’s complexity, team size, and reporting requirements.

Template Format Options

Several template formats are commonly employed for data migration plans, each offering distinct advantages and disadvantages. Understanding these differences is fundamental to making an informed decision.

- Word Documents: Word documents are suitable for creating comprehensive, narrative-driven plans. They are excellent for detailing the context, objectives, and strategic aspects of the migration.

  - Advantages: They offer robust formatting options, enabling the creation of well-structured documents with clear headings, subheadings, and sections. They support extensive text and descriptions, allowing for detailed explanations of migration processes, risks, and mitigation strategies. Word documents are also easily shared and reviewed, facilitating collaboration among team members.

  - Disadvantages: They can become cumbersome for managing large datasets or complex project timelines. Data visualization capabilities are limited compared to other formats, and version control can be challenging if not managed carefully. Tracking changes and revisions can be time-consuming, especially in large collaborative projects.

- Excel Spreadsheets: Excel is well-suited for data-centric aspects of the migration plan, such as data mapping, transformation rules, and data validation checks.

  - Advantages: They excel at organizing and manipulating data through formulas, functions, and pivot tables. Excel provides excellent data visualization capabilities through charts and graphs, aiding in presenting complex data relationships and trends. They are highly flexible, allowing for customization of data layouts and the inclusion of formulas for automated calculations and analysis.

  - Disadvantages: They can become unwieldy for documenting the overall narrative and strategic elements of the plan. Collaboration can be difficult, especially with multiple users editing the same spreadsheet simultaneously. Complex spreadsheets can be prone to errors if not designed and maintained meticulously.

- Project Management Software (e.g., Microsoft Project, Asana, Jira): Project management software is ideal for creating and managing project timelines, task dependencies, and resource allocation.

  - Advantages: They provide powerful features for scheduling tasks, assigning resources, tracking progress, and managing dependencies. They offer Gantt charts and other visual tools for visualizing project timelines and identifying potential bottlenecks. These tools facilitate collaboration through shared task assignments, progress updates, and communication features.

  - Disadvantages: They might lack the flexibility for detailed data mapping or comprehensive documentation of data transformation rules. They can be more complex to set up and use than simpler formats like Word or Excel, especially for smaller projects. The cost of project management software can be a factor, especially for projects with limited budgets.

- Specialized Data Migration Planning Tools: Some specialized tools combine features of project management, data mapping, and documentation, providing a more integrated approach.

  - Advantages: They offer features tailored to the specific needs of data migration, streamlining the planning process. They often automate data mapping, transformation, and validation tasks. They provide centralized dashboards for monitoring progress and managing risks.

  - Disadvantages: They can be expensive and may require specialized training to use effectively. They may not be as flexible as general-purpose tools for tasks outside of data migration planning. Integration with existing systems might be limited.

Selecting the Appropriate Format

The selection of the most appropriate template format hinges on a careful assessment of the project’s specific requirements. Several factors should be considered during this evaluation process.

- Project Scope and Complexity: For simple migrations with a limited scope, a Word document or a basic Excel spreadsheet might suffice. Complex projects involving multiple data sources, intricate transformations, and a large team might necessitate a combination of formats, such as Word for documentation, Excel for data mapping, and project management software for scheduling.

- Team Size and Collaboration Requirements: If the project involves a large team, project management software or a collaborative document platform (like Google Docs) is essential. This allows for efficient task assignment, progress tracking, and communication. Excel can be used for data mapping if the team is comfortable with collaborative editing.

- Data Volume and Complexity: For migrations involving large volumes of data and complex transformations, Excel is a good option for documenting mapping and transformation rules, while specialized tools can add automation and enhanced capabilities. Word remains suitable for documenting the overall data migration plan.

- Reporting Requirements: If detailed progress reports and visual representations of project timelines are required, project management software is crucial. Excel can generate charts and graphs for data analysis and presentation.

- Budget and Resource Constraints: The cost of software licenses and the availability of trained personnel should be considered. Free or low-cost options might be sufficient for smaller projects, while larger projects may require investment in specialized tools.

Illustrative Examples & Case Studies

Data migration projects, regardless of their scale, are inherently complex endeavors. Success hinges on meticulous planning, robust execution, and comprehensive testing. Examining real-world case studies provides valuable insights into the application of data migration plan templates and their impact on project outcomes. These examples illustrate how the templates adapt to diverse scenarios and address common challenges.

Sample Data Migration Project Overview

The case study focuses on the migration of a legacy customer relationship management (CRM) system to a modern, cloud-based CRM platform. This project involved migrating customer data, sales records, and marketing campaign information. The legacy system, developed in-house, suffered from data inconsistencies, limited reporting capabilities, and scalability issues. The new platform promised improved data accuracy, enhanced analytics, and greater accessibility.

The project’s scope included:

- Data Extraction from the Legacy System: Retrieving data from various database tables and file formats.

- Data Transformation: Cleaning, standardizing, and mapping data to align with the new CRM platform’s schema.

- Data Loading: Uploading the transformed data into the target system.

- Testing and Validation: Verifying data integrity and functionality in the new system.

Key Sections of the Template Used in the Project

The data migration plan template employed in this project was adapted from a standard template, focusing on several critical sections:

- Project Scope and Objectives: This section defined the project’s boundaries, outlining which data elements were to be migrated and the desired outcomes, such as improved data accuracy and enhanced reporting capabilities.

- Data Inventory and Analysis: A detailed assessment of the source data was conducted, identifying data types, formats, and potential quality issues. This analysis formed the basis for the transformation strategy.

- Data Mapping and Transformation Rules: This section documented the rules for transforming data from the source to the target system, including data cleansing, standardization, and field mapping specifications. This involved a detailed table that mapped source fields to their corresponding target fields.

- Migration Strategy: The chosen approach was a “big bang” migration, with a phased approach for testing and validation. This involved detailed schedules and timelines.

- Testing and Validation Plan: Comprehensive testing procedures were established to ensure data integrity and application functionality, including unit testing, integration testing, and user acceptance testing (UAT).

- Rollback Plan: A contingency plan was developed in case of migration failures, detailing procedures for reverting to the legacy system.
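The mapping table described under "Data Mapping and Transformation Rules" can also be expressed as data, which keeps the documented rules and the executed rules in one place. This is an illustrative sketch only: the source and target field names and the date format are invented for the example and do not come from the case study.

```python
from datetime import datetime

# Hypothetical mapping: target field -> (source field, transformation).
FIELD_MAP = {
    "full_name": ("CUST_NM", str.strip),
    "email": ("EMAIL_ADDR", lambda v: v.strip().lower()),
    "created_on": (
        "CRT_DT",
        # Assumed legacy format DD/MM/YYYY, converted to ISO 8601.
        lambda v: datetime.strptime(v, "%d/%m/%Y").date().isoformat(),
    ),
}


def transform(source_row: dict) -> dict:
    """Apply every mapping rule to one source row and return the target row."""
    return {
        target: convert(source_row[source])
        for target, (source, convert) in FIELD_MAP.items()
    }


if __name__ == "__main__":
    legacy = {"CUST_NM": "  Ann Lee ", "EMAIL_ADDR": " Ann@Example.COM", "CRT_DT": "05/03/2019"}
    print(transform(legacy))
```

A missing source field raises `KeyError` here, which is deliberate in this sketch: a silent default would hide exactly the kind of mapping gap the template is meant to surface.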

Challenges Faced and Solutions Implemented

The project encountered several significant challenges:

- Data Quality Issues: The legacy system contained numerous data quality problems, including missing values, inconsistent formatting, and duplicate records. The template’s “Data Inventory and Analysis” section guided the team to identify and address these issues. Solutions implemented included data cleansing scripts, data standardization rules, and deduplication processes.

- Data Mapping Complexity: The differences in data schemas between the legacy and target systems necessitated complex data mapping rules. The template’s “Data Mapping and Transformation Rules” section facilitated the creation of detailed mapping specifications, which were implemented using data transformation tools. The use of these tools reduced the number of errors during the transformation process.

- Downtime Minimization: The “big bang” migration approach required minimizing downtime. The “Migration Strategy” section provided detailed procedures for minimizing downtime during the migration process, including the use of pre-migration data validation, parallel runs, and data synchronization strategies.

- Unexpected Data Anomalies: During testing, unexpected data anomalies were identified that were not initially apparent during data analysis. The template’s “Testing and Validation Plan” section was used to incorporate additional testing rounds and refine the data transformation rules. These additional rounds uncovered further errors that the initial analysis had missed.

The template’s structured approach and detailed sections were instrumental in mitigating these challenges. The template served as a central repository for all project-related information, facilitating communication and collaboration among the project team. The template also helped to ensure consistency in processes and documentation, contributing significantly to the project’s successful completion.
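The case study does not show the cleansing scripts themselves, so the following is only a sketch of what standardization followed by deduplication might look like. The record fields and the choice of email as the matching key are assumptions.

```python
import re


def standardize(record: dict) -> dict:
    """Normalise whitespace, casing, and phone formatting for one record."""
    return {
        "name": " ".join(record.get("name", "").split()).title(),
        "email": record.get("email", "").strip().lower(),
        "phone": re.sub(r"\D", "", record.get("phone", "")),  # keep digits only
    }


def deduplicate(records: list, key: str = "email") -> list:
    """Keep the first record seen for each key value, preserving input order."""
    seen = {}
    for record in records:
        seen.setdefault(record[key], record)
    return list(seen.values())


if __name__ == "__main__":
    raw = [
        {"name": " ann  lee", "email": "Ann@Example.com ", "phone": "(555) 010-1234"},
        {"name": "Ann Lee", "email": "ann@example.com", "phone": "555 010 1234"},
        {"name": "bob ray", "email": "bob@example.com", "phone": ""},
    ]
    for row in deduplicate([standardize(r) for r in raw]):
        print(row)
```

Standardizing before deduplicating matters: the first two sample records only match once casing and whitespace have been normalised. Records with an empty key would all collapse into one under this scheme, so they should be routed to manual review instead.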

Advanced Topics & Future Trends

Data migration is constantly evolving, driven by technological advancements and changing business needs. This section delves into advanced aspects, specifically cloud migration, and explores emerging technologies and future trends shaping data migration plan templates. Understanding these elements is crucial for developing robust and adaptable migration strategies.

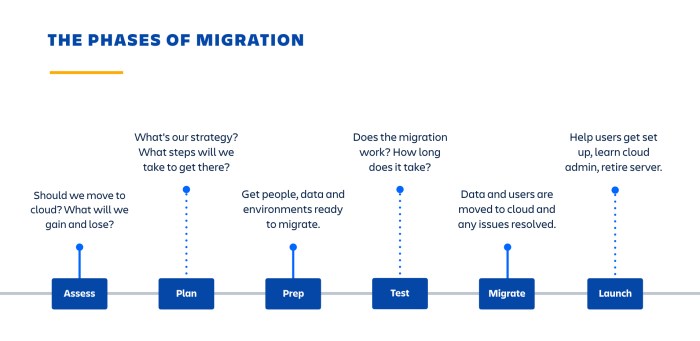

Cloud Migration Strategies

Cloud migration represents a significant shift in data management, offering scalability, cost-efficiency, and enhanced accessibility. Successfully navigating cloud migration necessitates a well-defined strategy.

Cloud migration strategies typically involve several approaches:

- Rehosting (Lift and Shift): This involves moving applications and data to the cloud with minimal changes. It’s a rapid approach, suitable for immediate cost savings and infrastructure simplification, but may not fully leverage cloud-native features.

- Replatforming: This involves making some modifications to applications to optimize them for the cloud, such as changing the database or using a managed service. This approach offers better performance and cost optimization than rehosting.

- Refactoring/Re-architecting: This involves redesigning and rebuilding applications to fully leverage cloud-native services and architectures. This approach provides the most significant benefits in terms of scalability, agility, and cost optimization but requires the most time and resources.

- Repurchasing: This involves replacing existing applications with cloud-based Software-as-a-Service (SaaS) solutions. This approach can be a cost-effective and efficient way to migrate applications but requires careful evaluation of SaaS provider capabilities and data integration requirements.

- Retiring: This involves decommissioning applications that are no longer needed. This approach can free up resources and reduce costs.

A well-defined cloud migration plan template should incorporate the following considerations:

- Assessment and Planning: Thoroughly assess existing infrastructure, applications, and data. Define migration goals, select the appropriate cloud migration strategy, and create a detailed migration plan.

- Data Migration Tools and Techniques: Utilize specialized tools and techniques for data transfer, transformation, and validation. Cloud providers offer native tools, and third-party solutions provide additional capabilities.

- Security and Compliance: Implement robust security measures to protect data during migration and in the cloud environment. Address compliance requirements, such as GDPR or HIPAA, throughout the process.

- Cost Optimization: Optimize cloud resource usage to minimize costs. Implement cost monitoring and management tools.

- Testing and Validation: Conduct thorough testing and validation to ensure data integrity and application functionality in the cloud environment.

- Monitoring and Management: Establish comprehensive monitoring and management practices to ensure optimal performance and availability in the cloud.

Cloud migration necessitates a data migration plan template that is highly adaptable and allows for iterative refinements based on experience and evolving business requirements.

Emerging Technologies in Data Migration

Several emerging technologies are transforming data migration processes, improving efficiency, accuracy, and speed.

- Artificial Intelligence (AI) and Machine Learning (ML): AI and ML are used to automate data mapping, transformation, and validation processes. ML algorithms can identify patterns in data and recommend optimal transformation strategies, reducing manual effort and improving accuracy. They can also assist in identifying data quality issues and anomalies.

- Data Virtualization: Data virtualization provides a unified view of data across multiple sources without physically moving the data. This approach can simplify data migration by reducing the need for complex data transformations and enabling access to data during migration.

- Blockchain: Blockchain technology can be used to enhance data integrity and security during migration. It can provide an immutable audit trail of data changes, supporting data provenance and making unauthorized modifications detectable.

- Serverless Computing: Serverless computing allows for the execution of code without managing servers. This can streamline data migration processes by automating tasks such as data transformation and validation.

- Low-Code/No-Code Platforms: Low-code/no-code platforms empower citizen developers to participate in data migration tasks. They provide user-friendly interfaces for building data pipelines, simplifying data transformation, and reducing the reliance on specialized coding skills.

These technologies are not mutually exclusive and can be combined to create more powerful and efficient data migration solutions.
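To illustrate the audit-trail idea from the blockchain item above, the sketch below chains migration events together with SHA-256 hashes so that any later edit to a recorded event becomes detectable. This is only the hash-chaining building block, not a blockchain: there is no distribution or consensus, and the event fields and the function names `append_event` and `verify_log` are assumptions made for the example.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash, event):
    """Hash an event together with the hash of the entry before it."""
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_event(log, event):
    """Append a migration event, linking it to the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append(
        {"event": event, "prev": prev_hash, "hash": _entry_hash(prev_hash, event)}
    )
    return log


def verify_log(log):
    """Return True only if every entry still matches its recorded hash chain."""
    prev_hash = GENESIS_HASH
    for entry in log:
        expected = _entry_hash(prev_hash, entry["event"])
        if entry["prev"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    audit_log = []
    append_event(audit_log, {"table": "customers", "action": "copied", "rows": 120})
    append_event(audit_log, {"table": "orders", "action": "copied", "rows": 450})
    print(verify_log(audit_log))

    audit_log[0]["event"]["rows"] = 121  # simulate tampering with a record
    print(verify_log(audit_log))
```

Because each hash covers the previous one, changing an early entry invalidates that entry and everything after it. A single-process log like this only detects tampering; it does not prevent someone with write access from rebuilding the whole chain, which is the gap that distributed ledgers aim to close.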

Future Trends in Data Migration Plan Templates

The future of data migration plan templates will be shaped by several trends, aiming for greater automation, adaptability, and integration.

- Automation and Orchestration: Data migration plans will increasingly incorporate automation and orchestration tools to streamline processes. This includes automated data mapping, transformation, validation, and testing. The goal is to minimize manual intervention and reduce the time and cost of migration.

- Data Governance Integration: Data migration plans will be tightly integrated with data governance frameworks. This ensures that data quality, security, and compliance are maintained throughout the migration process. Data lineage and metadata management will become increasingly important.

- Cloud-Native Architectures: Data migration plans will be designed specifically for cloud-native architectures. This involves leveraging cloud-specific services and features, such as serverless computing, containerization, and managed databases.

- Real-Time Data Migration: Real-time data migration will become more prevalent, enabling continuous data synchronization between source and target systems. This matters for applications that require up-to-date data, and it reduces downtime because the final cutover only has to transfer the most recent changes.

- Data Migration as a Service (DMaaS): DMaaS will offer a managed service approach to data migration, where providers handle the entire migration process, including planning, execution, and support. This approach can simplify data migration and reduce the burden on internal IT teams.

- Template Customization and Version Control: Templates will become more customizable and include robust version control features. This enables organizations to tailor templates to their specific needs and track changes over time.

These trends highlight the ongoing evolution of data migration plan templates, emphasizing adaptability, automation, and integration with broader data management strategies. As data volumes continue to grow and business requirements become more complex, these advancements will be crucial for successful data migration initiatives.
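The continuous synchronization described under real-time data migration can be approximated with a watermark-based incremental copy. The sketch below is a simplified, polling-based stand-in rather than true change data capture: it assumes every row carries an `updated_at` value that increases on each change, it does not propagate deletes, and the `customers` schema and the names `sync_changes` and `make_db` are hypothetical.

```python
import sqlite3


def sync_changes(source, target, last_watermark):
    """Copy rows changed after last_watermark and return the new watermark."""
    rows = source.execute(
        "SELECT id, email, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    for row in rows:
        # INSERT OR REPLACE overwrites an existing row with the same primary
        # key, so re-running the same batch leaves the target unchanged.
        target.execute(
            "INSERT OR REPLACE INTO customers (id, email, updated_at) "
            "VALUES (?, ?, ?)",
            row,
        )
    target.commit()
    # If nothing changed, keep the previous watermark.
    return rows[-1][2] if rows else last_watermark


def make_db():
    """Create an in-memory database with the hypothetical demo schema."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE customers "
        "(id INTEGER PRIMARY KEY, email TEXT, updated_at INTEGER)"
    )
    return conn


if __name__ == "__main__":
    source, target = make_db(), make_db()
    source.executemany(
        "INSERT INTO customers VALUES (?, ?, ?)",
        [(1, "a@example.com", 100), (2, "b@example.com", 110)],
    )
    watermark = sync_changes(source, target, 0)
    print(watermark, target.execute("SELECT COUNT(*) FROM customers").fetchone()[0])
```

Running `sync_changes` on a schedule keeps the target close to the source until cutover. One known weakness of this design is that a row written later with the same `updated_at` value as the current watermark would be skipped, which is one reason log-based change capture is often preferred for production use.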

Conclusion

The data migration plan template is a vital instrument for ensuring the smooth and efficient transfer of data across systems. By adhering to a structured framework, organizations can minimize disruptions, maintain data integrity, and ultimately achieve their strategic objectives. A comprehensive template, tailored to specific project requirements, not only streamlines the migration process but also encourages proactive planning and risk management.

As data landscapes continue to evolve, the data migration plan template remains an indispensable tool for navigating the complexities of data management and transformation.

FAQ Section

What is the primary difference between a data migration plan and a data backup?

A data migration plan focuses on moving data from one system to another, including transformation and validation. A data backup, conversely, is primarily for data recovery in case of loss or corruption, creating a copy of existing data.

How much time should be allocated to data migration planning?

As a rough guideline, planning should consume between 10% and 30% of the total project time, with the share rising as the complexity and volume of the data increase. Thorough planning significantly reduces risks and ensures a smoother execution.

What are the common challenges encountered during data migration?

Common challenges include data quality issues, data mapping complexities, downtime constraints, compatibility problems between systems, and unforeseen data transformation requirements.

What level of technical expertise is required to use a data migration plan template?

While a template provides structure, successful implementation necessitates a moderate level of technical expertise. This includes knowledge of data structures, database systems, data transformation techniques, and project management principles.

How can a data migration plan template be customized to accommodate specific industry regulations (e.g., GDPR, HIPAA)?

Customization involves incorporating sections that address compliance requirements, such as data masking, encryption, access controls, and audit trails. The plan should also include validation procedures to ensure compliance throughout the migration process.
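As one example of the data masking mentioned above, sensitive fields can be replaced with keyed hashes before the data reaches a test or staging target. The sketch below is an assumption-laden illustration: the field names, the `mask_record` and `pseudonymize` helpers, and the demo key are all hypothetical, and whether keyed hashing is sufficient for a particular regulation depends on that regulation and on how the key is protected.

```python
import hashlib
import hmac


def pseudonymize(value, secret_key):
    """Return a stable, keyed SHA-256 token for a sensitive string value."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_record(record, sensitive_fields, secret_key):
    """Return a copy of the record with the named fields replaced by tokens."""
    masked = dict(record)  # copy so the original record is left untouched
    for field in sensitive_fields:
        # Skip fields that are absent or empty in this record.
        if masked.get(field) is not None:
            masked[field] = pseudonymize(str(masked[field]), secret_key)
    return masked


if __name__ == "__main__":
    # Demo key only; a real key would come from a secrets manager.
    key = b"demo-key-not-for-production"
    customer = {"id": 7, "email": "pat@example.com", "plan": "basic"}
    print(mask_record(customer, ["email", "ssn"], key))
```

Because the same input and key always produce the same token, masked values can still be used to join tables and to run validation checks after migration, while the original values are not exposed in the target system.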