Serverless Step Functions, provided on AWS by the Step Functions service, offer a scalable way to orchestrate complex, multi-step processes. You define a state machine that specifies the sequence of tasks, the transitions between them, and the error handling needed to automate an operation. From simple data processing pipelines to intricate business workflows, Step Functions provide a framework for building resilient and cost-effective applications.

This exploration will delve into the core concepts of Step Functions, dissecting their components and illustrating their application in real-world scenarios. We will examine the advantages of adopting a serverless approach, including scalability, cost optimization, and simplified management. Furthermore, we will explore the integration capabilities of Step Functions with other AWS services and third-party APIs, providing a comprehensive understanding of their versatility and potential.

Defining Serverless Step Functions

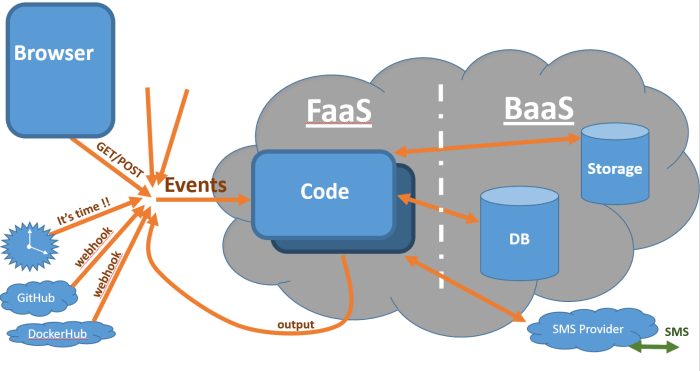

Serverless Step Functions orchestrate workflows within a cloud-native environment. They enable the creation of complex, stateful processes without the need to manage underlying infrastructure, so developers can focus on the logic of their applications rather than the operational overhead of servers.

Core Concept of Serverless Step Functions in Workflow Orchestration

Serverless Step Functions are fundamentally state machines that manage the execution of discrete tasks, or “steps,” in a predefined sequence. These steps can be any action that can be invoked via an API, such as calling a Lambda function, interacting with databases, or integrating with third-party services. The orchestration is handled by the Step Function service, which ensures that each step is executed in the correct order, handles failures, and manages state transitions.

- State Management: Step Functions maintain the state of the workflow, allowing it to track progress and resume from where it left off in case of interruptions or failures. This is achieved through a persistent state engine.

- Task Coordination: The service coordinates the execution of tasks, including their dependencies and the passing of data between them.

- Error Handling: Step Functions provide built-in mechanisms for handling errors, such as retries with backoff (`Retry`) and fallback branches that route a failure to a recovery state (`Catch`).

- Scalability and Reliability: The underlying infrastructure is fully managed, providing automatic scaling and high availability. This ensures that workflows can handle fluctuating workloads without manual intervention.

Simplified Analogy of Step Function Operation

Consider a simplified scenario: processing an online order. A Step Function could orchestrate the following steps:

- Receive Order: The system receives the order details.

- Validate Payment: The payment information is verified.

- Check Inventory: The system checks if the ordered items are in stock.

- Process Order: If the items are in stock and the payment is validated, the order is processed.

- Ship Order: The order is shipped to the customer.

- Send Confirmation: A confirmation email is sent.

Each step can be a separate function or service. If the “Check Inventory” step fails (e.g., items are out of stock), the Step Function can automatically branch to a “Notify Customer” step and/or retry the “Check Inventory” step a defined number of times. This is analogous to a real-world assembly line, where each station performs a specific task and passes the partially completed product to the next station.

Benefits of Serverless Step Functions over Traditional Workflow Systems

Compared to traditional workflow systems, Serverless Step Functions offer several significant advantages.

- Reduced Operational Overhead: Developers are freed from managing servers, scaling, and patching infrastructure, allowing them to focus on application logic.

- Cost-Effectiveness: Pay-per-use pricing models eliminate the need to provision and pay for idle resources.

- Increased Agility: Rapid development and deployment cycles are enabled by the ability to quickly build, test, and iterate on workflows.

- Scalability and Reliability: Serverless platforms automatically scale to handle varying workloads, ensuring high availability and fault tolerance.

- Improved Visibility: Step Functions provide detailed execution logs and monitoring capabilities, enabling better debugging and performance optimization.

For instance, in a scenario where a large e-commerce platform experiences a surge in orders during a holiday sale, traditional workflow systems might struggle to scale quickly enough to handle the increased load, potentially leading to delays and customer dissatisfaction. Serverless Step Functions, however, can automatically scale to meet the demand, ensuring that orders are processed efficiently and reliably. This scalability advantage is especially crucial in unpredictable environments.

Core Components of a Step Function

Step Functions, at their core, are designed to orchestrate complex workflows by breaking them down into a series of discrete, manageable steps. These steps, or states, are linked together to form a directed graph, allowing for conditional branching, parallel execution, loops back to earlier states, and error handling. Understanding the fundamental components is crucial for designing and implementing effective serverless workflows.

States and State Machines

A Step Function workflow is defined as a state machine. A state machine comprises a series of states, each representing a specific task or decision within the workflow. The overall state machine definition is typically described using the Amazon States Language (ASL), a JSON-based language. The ASL definition specifies the states, their type, and the transitions between them. The execution of a state machine begins at a designated start state and progresses through the states based on the defined transitions until it reaches a terminal state (e.g., `Succeed` or `Fail`).
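As a minimal sketch of what such a definition looks like, the snippet below builds a two-state machine as a Python dictionary and serializes it to ASL JSON. The state names and the Lambda ARN are placeholders chosen for illustration, not real resources.

```python
import json

# Hypothetical two-state machine; the ARN below is a placeholder.
definition = {
    "Comment": "Minimal example: run one task, then succeed",
    "StartAt": "DoWork",  # designated start state
    "States": {
        "DoWork": {
            "Type": "Task",
            "Resource": "arn:aws:lambda:us-east-1:123456789012:function:do-work",
            "Next": "Done",  # transition to the terminal state
        },
        "Done": {"Type": "Succeed"},  # terminal state
    },
}

# ASL is plain JSON, so the dictionary serializes directly.
asl_json = json.dumps(definition, indent=2)
print(asl_json)
```

Building the definition as a dictionary keeps it easy to generate or validate in code before it is handed to the service.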

Task States

Task states are the fundamental building blocks of a Step Function workflow, representing the execution of a specific unit of work. This unit of work can be a call to another AWS service (e.g., Lambda function, ECS task), an API call to a third-party service, or a direct integration with various AWS services. The `Type` field in the ASL definition is set to `Task` for these states.

- Invocation: Task states are invoked by specifying the `Resource` field, which identifies the service or function to be executed. For example, to invoke a Lambda function, the `Resource` field would contain the ARN (Amazon Resource Name) of the function.

- Input and Output Processing: Task states often involve processing input data and transforming output data. The `InputPath` and `ResultPath` fields are used to control which parts of the input are passed to the invoked service and how the service’s result is incorporated into the state’s output. The `OutputPath` field then controls how the state’s final output is structured.

- Error Handling: Robust error handling is a critical feature of task states. The `Retry` and `Catch` fields enable the definition of retry strategies for transient errors and the handling of specific error types by transitioning to alternative states. This ensures resilience and fault tolerance in the workflow.

- Example: Consider a workflow that processes image uploads. A task state might invoke a Lambda function to resize an image. The input to the Lambda function could be the image’s S3 object key, and the output could be the resized image’s S3 object key.
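The fields described above might be combined as in the following sketch of the image-resize task. The function ARN, the state names `UploadImage` and `HandleFailure`, and the input layout are assumptions made for illustration. The helper `retry_delays` is not part of any AWS API; it only shows how the retry interval grows under exponential backoff.

```python
# Hypothetical Task state for the image-resize example; ARN and state names are placeholders.
resize_task = {
    "Type": "Task",
    "Resource": "arn:aws:lambda:us-east-1:123456789012:function:resize-image",
    "InputPath": "$.image",     # pass only the "image" part of the state input
    "ResultPath": "$.resized",  # store the task result alongside the original input
    "OutputPath": "$",          # forward the whole combined document
    "Retry": [
        {
            "ErrorEquals": ["States.Timeout", "States.TaskFailed"],
            "IntervalSeconds": 2,
            "MaxAttempts": 3,
            "BackoffRate": 2.0,
        }
    ],
    "Catch": [
        # Anything still failing after the retries is routed to a recovery state.
        {"ErrorEquals": ["States.ALL"], "ResultPath": "$.error", "Next": "HandleFailure"}
    ],
    "Next": "UploadImage",
}


def retry_delays(retrier):
    """Seconds waited before each retry attempt under exponential backoff."""
    return [
        retrier["IntervalSeconds"] * retrier["BackoffRate"] ** attempt
        for attempt in range(retrier["MaxAttempts"])
    ]


print(retry_delays(resize_task["Retry"][0]))
```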

Choice States

Choice states introduce conditional logic into a Step Function workflow, enabling branching based on the evaluation of conditions. These conditions are evaluated against the state’s input, allowing the workflow to take different paths depending on the data. The `Type` field in the ASL definition is set to `Choice` for these states.

- Conditions: Choice states use a series of conditions to determine the next state to transition to. These conditions are defined using operators such as `StringEquals`, `NumericGreaterThan`, `BooleanEquals`, and others.

- Branching: Based on the evaluation of the conditions, the workflow transitions to a specific state. The `Next` field specifies the next state if a condition is met, and the `Default` field specifies the next state if no conditions are met.

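- Combined Conditions: Individual comparisons can be combined with the `And`, `Or`, and `Not` operators, and Choice states can be chained one after another, to create complex decision trees.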

- Example: In an order processing workflow, a choice state could evaluate the order total. If the total is greater than a certain threshold, the workflow might transition to a state that requires manager approval; otherwise, it might transition to a state that processes the order directly.
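The order-total example could be expressed roughly as follows. The threshold of 1000, the variable path, and the state names are illustrative assumptions. `next_state` is a tiny local evaluator written for this sketch; it supports only `NumericGreaterThan` on simple `$.a.b` paths and is not how the service itself evaluates rules.

```python
# Hypothetical Choice state: orders above an assumed threshold need approval.
approval_choice = {
    "Type": "Choice",
    "Choices": [
        {
            "Variable": "$.order.total",
            "NumericGreaterThan": 1000,
            "Next": "ManagerApproval",
        }
    ],
    "Default": "ProcessOrder",  # taken when no rule matches
}


def next_state(choice_state, data):
    """Return the next state name for `data` (NumericGreaterThan rules only)."""
    for rule in choice_state["Choices"]:
        value = data
        # Resolve a simple path such as "$.order.total" one key at a time.
        for key in rule["Variable"][2:].split("."):
            value = value[key]
        if value > rule["NumericGreaterThan"]:
            return rule["Next"]
    return choice_state["Default"]


print(next_state(approval_choice, {"order": {"total": 2500}}))
```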

Parallel States

Parallel states enable the concurrent execution of multiple branches of a workflow. This is useful for tasks that can be performed independently, significantly reducing the overall execution time. The `Type` field in the ASL definition is set to `Parallel` for these states.

- Branches: A parallel state contains a list of branches, each representing a separate sub-workflow. These branches are executed concurrently.

- Synchronization: The parallel state waits for all branches to complete before transitioning to the next state. The results from each branch are combined into a single output.

- Error Handling: If any branch fails, the whole Parallel state fails and the remaining branches are stopped. `Retry` and `Catch` defined on the Parallel state then apply to the state as a whole, while states inside a branch can define their own `Retry` and `Catch` to handle errors before they escape the branch.

- Example: In a video processing workflow, a parallel state could be used to transcode a video into multiple formats concurrently. Each branch would be responsible for transcoding the video into a specific format. Once all transcoding branches are complete, the workflow could proceed to a state that publishes the video.
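A sketch of the video transcoding example is shown below. The three formats, the function ARNs, and the state names `PublishVideo` and `TranscodeFailed` are assumptions chosen for illustration. Each branch is a small state machine of its own with a `StartAt` and a `States` map.

```python
def transcode_branch(fmt):
    """Build one branch: a single hypothetical transcoding task for `fmt`."""
    name = f"Transcode{fmt.upper()}"
    return {
        "StartAt": name,
        "States": {
            name: {
                "Type": "Task",
                # Placeholder ARN, one function per output format.
                "Resource": f"arn:aws:lambda:us-east-1:123456789012:function:transcode-{fmt}",
                "End": True,  # last state of this branch
            }
        },
    }


transcode_all = {
    "Type": "Parallel",
    "Branches": [transcode_branch(fmt) for fmt in ("mp4", "webm", "hls")],
    "ResultPath": "$.outputs",  # array with one result per branch
    "Catch": [{"ErrorEquals": ["States.ALL"], "Next": "TranscodeFailed"}],
    "Next": "PublishVideo",     # runs only after every branch has finished
}

print([branch["StartAt"] for branch in transcode_all["Branches"]])
```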

Pass States

Pass states are simple states that pass their input to their output without performing any other action. They are often used for injecting data, adding comments, or creating intermediate steps in a workflow. The `Type` field in the ASL definition is set to `Pass` for these states.

- Input and Output: Pass states can modify their input data using the `Parameters` field. The `Result` field can be used to inject a fixed value into the output.

- Purpose: Pass states can be used for a variety of purposes, such as creating a constant value, inserting a timestamp, or adding metadata to the workflow’s execution history.

- Example: A pass state could be used to add a unique identifier to the workflow’s input data before passing it to the next state.
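As a small illustration, the Pass state below injects a fixed metadata object into the workflow data. The field values and the `ProcessOrder` state name are made up for the example. `apply_pass` is a hypothetical local helper that mimics how `Result` and `ResultPath` combine for a top-level path; it is a simplification, not the service's implementation.

```python
import copy

# Hypothetical Pass state that attaches constant metadata to the input.
add_metadata = {
    "Type": "Pass",
    "Result": {"source": "web", "schemaVersion": 1},
    "ResultPath": "$.metadata",  # where the fixed value lands in the output
    "Next": "ProcessOrder",
}


def apply_pass(state, state_input):
    """Mimic Result + ResultPath for a top-level path such as "$.metadata"."""
    output = copy.deepcopy(state_input)  # leave the caller's input untouched
    key = state["ResultPath"][2:]        # strip the leading "$."
    output[key] = state["Result"]
    return output


print(apply_pass(add_metadata, {"orderId": "A1"}))
```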

Wait States

Wait states pause the execution of a workflow for a specified duration or until a specific time. The `Type` field in the ASL definition is set to `Wait` for these states.

- Duration: Wait states can specify a relative delay in seconds (`Seconds`) or an absolute ISO 8601 timestamp (`Timestamp`). `SecondsPath` and `TimestampPath` read the same kinds of value from the state input at run time instead of hard-coding them in the definition.

- Use Cases: Wait states are commonly used for tasks such as polling for a resource to become available, implementing time-based triggers, or delaying the execution of a specific step.

- Example: A wait state could be used to pause the workflow for a specified amount of time before sending a notification.
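The four ways of expressing a wait could look like the sketch below. The delay values, input paths, and the `SendNotification` state name are illustrative. A Wait state should carry exactly one of these fields, which the hypothetical helper `has_single_wait_field` checks locally.

```python
# Four hypothetical Wait states, one per timing field.
wait_fixed = {"Type": "Wait", "Seconds": 300, "Next": "SendNotification"}
wait_until = {"Type": "Wait", "Timestamp": "2030-01-01T00:00:00Z", "Next": "SendNotification"}
wait_seconds_from_input = {"Type": "Wait", "SecondsPath": "$.delaySeconds", "Next": "SendNotification"}
wait_time_from_input = {"Type": "Wait", "TimestampPath": "$.notifyAt", "Next": "SendNotification"}

WAIT_FIELDS = {"Seconds", "Timestamp", "SecondsPath", "TimestampPath"}


def has_single_wait_field(state):
    """True when the state defines exactly one of the four timing fields."""
    return len(WAIT_FIELDS.intersection(state)) == 1


for state in (wait_fixed, wait_until, wait_seconds_from_input, wait_time_from_input):
    print(has_single_wait_field(state))
```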

Succeed and Fail States

Succeed and Fail states are terminal states that signal the successful or unsuccessful completion of a workflow, respectively. The `Type` field in the ASL definition is set to `Succeed` or `Fail`.

- Succeed State: The `Succeed` state marks the successful completion of the workflow. It does not perform any actions.

- Fail State: The `Fail` state marks the unsuccessful completion of the workflow. It can include an `Error` and `Cause` field to provide information about the failure.

- Workflow Termination: These states terminate the execution of the state machine.

- Example: A workflow might end with a `Succeed` state if all steps complete successfully or with a `Fail` state if an error occurs.

Connections and Workflow Formation

These components are interconnected to create a complete workflow. Transitions between states are defined using the `Next` field in the state definition. Every state except `Succeed` and `Fail` must either name its successor with `Next` or mark itself as the last state with `"End": true`. Choice states are the exception: they set `Next` inside each rule, with `Default` as the fallback. Parallel states execute multiple branches concurrently before moving on.

- Example: Image Processing Workflow:

1. Start State (Task)

Receives the image file’s S3 object key.

2. Resize Image (Task)

Invokes a Lambda function to resize the image.

3. Check Resize Status (Choice)

Checks the status of the resize operation. If successful, transitions to the next step; if not, transitions to a retry state.

4. Upload Image (Task)

Uploads the resized image to S3.

5. Notify User (Task)

Sends a notification to the user.

6. Succeed (Terminal)

Marks the successful completion of the workflow.

This example illustrates how different state types (task, choice) can be combined to orchestrate a complex process. The choice state introduces conditional logic, allowing the workflow to handle potential errors and ensure the image processing is completed successfully.
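The workflow above might be sketched as the following definition. The ARNs, the `$.resize.status` field, and the error name are assumptions, and for brevity a failed resize ends in a `Fail` state rather than the retry state described in step 3. `undefined_targets` is a hypothetical local check that every transition points at a state that exists.

```python
LAMBDA = "arn:aws:lambda:us-east-1:123456789012:function:"  # placeholder prefix

image_workflow = {
    "StartAt": "ResizeImage",
    "States": {
        "ResizeImage": {
            "Type": "Task",
            "Resource": LAMBDA + "resize-image",
            "ResultPath": "$.resize",
            "Next": "CheckResizeStatus",
        },
        "CheckResizeStatus": {
            "Type": "Choice",
            "Choices": [
                {"Variable": "$.resize.status", "StringEquals": "OK", "Next": "UploadImage"}
            ],
            "Default": "ResizeFailed",
        },
        "UploadImage": {"Type": "Task", "Resource": LAMBDA + "upload-image", "Next": "NotifyUser"},
        "NotifyUser": {"Type": "Task", "Resource": LAMBDA + "notify-user", "Next": "Done"},
        "Done": {"Type": "Succeed"},
        "ResizeFailed": {"Type": "Fail", "Error": "ResizeError", "Cause": "Resize did not report OK"},
    },
}


def undefined_targets(machine):
    """Return transition targets that are not defined in the States map."""
    targets = {machine["StartAt"]}
    for state in machine["States"].values():
        for field in ("Next", "Default"):
            if field in state:
                targets.add(state[field])
        for rule in state.get("Choices", []):
            targets.add(rule["Next"])
    return targets - set(machine["States"])


print(undefined_targets(image_workflow))
```

A check like this catches misspelled state names locally, before the definition is submitted to the service.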

Workflow Orchestration with Step Functions

Serverless Step Functions excel at orchestrating complex workflows by managing the execution of multiple AWS services in a coordinated manner. They provide a robust mechanism for defining and executing state machines, allowing developers to model intricate business processes as a series of steps. This approach offers several advantages, including improved reliability, scalability, and observability, ultimately simplifying the development and maintenance of distributed applications.

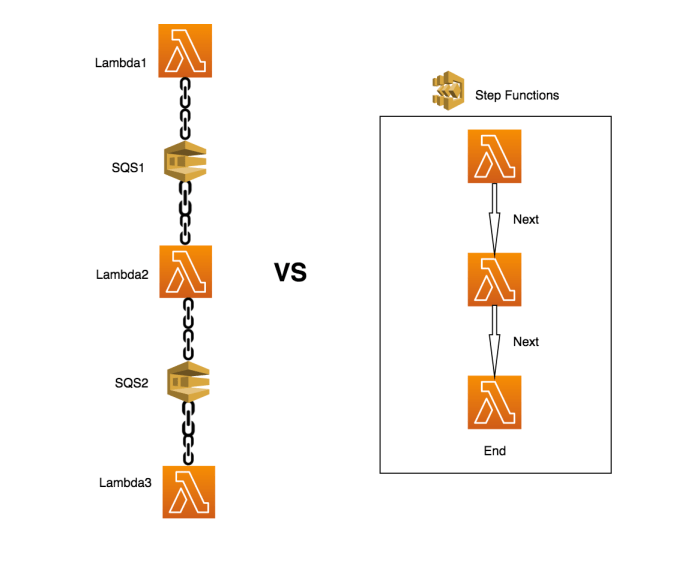

Orchestration Patterns Comparison

Different orchestration patterns are available for structuring workflows, each suited to different use cases. The choice of pattern depends on the specific requirements of the application, considering factors such as the dependencies between tasks, the need for parallel execution, and the complexity of the control flow. The following table provides a comparison of common orchestration patterns.

| Pattern | Description | Use Cases | Advantages & Disadvantages |
|---|---|---|---|
| Sequential | Tasks are executed one after another in a predefined order. Each task completes before the next begins. | Simple, linear processes; tasks with strict dependencies. Examples: order processing, user onboarding. | Advantages: Easy to understand and implement. Predictable execution flow. Disadvantages: No concurrency, so total duration is the sum of all steps; an unhandled failure in one step stops the whole process. |
| Parallel | Multiple tasks are executed concurrently. Useful for tasks that do not depend on each other. | Tasks that can run independently; data processing, batch jobs. Examples: generating thumbnails, sending notifications. | Advantages: Improved speed; efficient use of resources. Disadvantages: Requires careful management of concurrency; potential for resource contention. |
| Conditional (Branching) | The workflow’s path is determined based on the outcome of a task. Uses `Choice` states to evaluate conditions and branch accordingly. | Decision-making processes; workflows that require conditional logic. Examples: error handling, A/B testing. | Advantages: Allows for flexible and adaptive workflows. Disadvantages: Can increase complexity; requires careful design to avoid infinite loops. |
| Iterative (Loops) | A set of tasks is repeated for each item in a collection or until a condition is met. `Map` states run the same steps for every item in an array; condition-based loops are built from a `Choice` state that transitions back to an earlier state. | Processing data in batches; performing actions on a list of items. Examples: processing a list of files, data transformations. | Advantages: Efficient for repetitive tasks. Disadvantages: Can be complex to manage; potential for performance bottlenecks if not optimized. |
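To make the iterative pattern more concrete, here is a sketch of a `Map` state that processes a list of files. The ARN, the input layout, the concurrency limit, and the `Summarize` state name are assumptions. `simulate_map` is a hypothetical local stand-in that applies a worker to each selected item; it ignores concurrency and exists only to show the data flow.

```python
# Hypothetical Map state: run the same sub-workflow for every entry in $.files.
process_files = {
    "Type": "Map",
    "ItemsPath": "$.files",   # array in the state input to iterate over
    "MaxConcurrency": 5,      # assumed cap on items processed at once
    "ItemProcessor": {
        "ProcessorConfig": {"Mode": "INLINE"},
        "StartAt": "ProcessFile",
        "States": {
            "ProcessFile": {
                "Type": "Task",
                "Resource": "arn:aws:lambda:us-east-1:123456789012:function:process-file",
                "End": True,
            }
        },
    },
    "ResultPath": "$.results",  # one result per input item, in input order
    "Next": "Summarize",
}


def simulate_map(state, state_input, worker):
    """Apply `worker` to each item selected by a top-level ItemsPath."""
    items = state_input[state["ItemsPath"][2:]]
    return [worker(item) for item in items]


print(simulate_map(process_files, {"files": ["a.csv", "b.csv"]}, str.upper))
```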

Designing an Image Processing Workflow

A Step Function can be designed to orchestrate an image processing workflow, automating several tasks from image upload to final delivery. This workflow can include tasks such as image resizing, format conversion, and watermarking. The state machine’s design involves defining the states, transitions, and inputs/outputs for each step. The workflow could be structured as follows:

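- Image Upload: A user uploads an image to an Amazon S3 bucket. The upload event starts the Step Function, typically routed through an Amazon EventBridge rule or a small Lambda function, because S3 event notifications cannot target Step Functions directly.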

- Image Retrieval: The Step Function retrieves the image from S3.

- Image Processing: The image is processed using AWS Lambda functions. This might include:

  - Resizing the image to different dimensions.

  - Converting the image to different formats (e.g., JPEG, PNG).

  - Adding a watermark to the image.

- Image Storage: The processed images are stored back in S3, potentially in a different bucket or folder.

- Notification: A notification (e.g., an email) is sent to the user indicating that the image processing is complete, perhaps including links to the processed images. This could be implemented using Amazon SNS.

The state machine would use different state types to achieve this:

- Task States: Invoke Lambda functions for image processing operations (resize, convert, watermark).

- Pass States: To pass data between states, such as the image’s S3 key.

- Choice States: To handle potential errors or conditional processing (e.g., if the original image format is already the desired output format, skip conversion).

- Wait States: (Optional) To introduce delays, such as waiting for an image processing task to complete before proceeding.

The workflow’s efficiency and cost-effectiveness depend on several factors, including the size of the images, the complexity of the processing tasks, and the choice of AWS services. For example, parallelizing the resizing and format conversion steps can significantly reduce processing time. Monitoring the execution of the state machine using CloudWatch allows for performance optimization and cost management. Typical candidates for this kind of workflow include image-sharing platforms and e-commerce sites that need to resize images for product listings.

Advantages of Serverless Approach

The adoption of serverless architecture for workflow orchestration, specifically utilizing Step Functions, presents significant advantages over traditional, on-premise solutions. These advantages primarily revolve around scalability, cost-effectiveness, and simplified management, contributing to increased agility and reduced operational overhead.

Scalability and Elasticity of Serverless Step Functions

Serverless Step Functions inherently possess the ability to scale automatically. This dynamic adjustment to workload demands eliminates the need for manual provisioning and management of infrastructure resources.

- Step Functions automatically scale to handle fluctuating workloads. The service provisions and manages the underlying compute resources, ensuring the workflow can handle increased loads without requiring manual intervention. This is in stark contrast to on-premise solutions, which require pre-provisioning resources, often leading to over-provisioning to handle peak loads.

- Elasticity is a key characteristic. Serverless Step Functions can rapidly scale up or down based on real-time demand. This ensures that workflows can handle unexpected spikes in traffic or data processing requirements without performance degradation. The service adapts to the workload, minimizing the risk of bottlenecks or delays.

- This scalability translates directly into improved performance and responsiveness. Users experience faster execution times, especially during periods of high demand. The architecture is designed to distribute workloads across multiple compute units, improving overall throughput.

Cost-Effectiveness of Serverless Step Functions

The pay-per-use pricing model of serverless Step Functions dramatically impacts the cost structure compared to on-premise solutions. This model charges users only for the resources consumed during workflow execution.

- Serverless Step Functions eliminate the need for upfront capital expenditures associated with on-premise infrastructure. Businesses are no longer required to invest in hardware, software licenses, and the associated maintenance costs.

- The pay-per-use pricing model optimizes resource utilization. Standard workflows are billed per state transition, and Express workflows are billed by the number of requests and their duration; the services a workflow invokes, such as Lambda, are billed separately under their own pricing. This contrasts with on-premise solutions, where resources are often underutilized, leading to wasted expenditure.

- The cost structure is predictable and transparent. Users can forecast their workflow costs from their usage patterns, and AWS billing tools such as Cost Explorer allow for granular analysis and optimization of workflow expenses.

- Consider a hypothetical scenario: a company processes 1 million transactions per month. An on-premise solution might require a fixed infrastructure cost of $10,000 per month. Using Step Functions, the cost could be a fraction of that, potentially as low as $1,000-$2,000, depending on the complexity of the workflow and the execution time. This difference can be significant.
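The orchestration part of such an estimate can be worked out with simple arithmetic. The sketch below assumes a Standard workflow billed at $0.025 per 1,000 state transitions and ten transitions per transaction; both figures are assumptions for illustration and should be checked against the current AWS pricing page. It covers state transitions only, so Lambda execution, data transfer, and other service charges would come on top.

```python
def standard_workflow_cost(executions, transitions_per_execution,
                           price_per_1000_transitions=0.025):
    """Estimate monthly state-transition charges in USD.

    The default price is an assumed figure, not an authoritative one, and the
    result excludes Lambda and any other services the workflow invokes.
    """
    total_transitions = executions * transitions_per_execution
    return total_transitions / 1000 * price_per_1000_transitions


# 1 million transactions per month, assuming 10 state transitions each.
monthly = standard_workflow_cost(1_000_000, 10)
print(f"Estimated orchestration cost: ${monthly:,.2f}")
```

Under these assumptions the orchestration charge itself is small, which suggests that most of the total in the scenario above would come from the compute and data services the workflow calls.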

Simplified Management and Deployment of Serverless Workflows

Serverless Step Functions simplify the management and deployment processes compared to traditional workflow solutions. The service handles the underlying infrastructure, allowing developers to focus on the business logic of the workflow.

- Serverless Step Functions provide a fully managed service, eliminating the need for manual server provisioning, patching, and maintenance. The service automatically handles infrastructure management, reducing the operational burden on IT teams.

- Deployment is simplified through the use of declarative configurations. Developers define workflows using a JSON-based state machine definition, which is then deployed to the service. This approach simplifies the deployment process and reduces the risk of errors.

- Monitoring and debugging are streamlined. Step Functions provides built-in monitoring and logging capabilities, allowing users to track workflow executions, identify errors, and diagnose performance bottlenecks. This visibility is crucial for maintaining workflow health and optimizing performance.

- Updates and rollbacks are simplified. Because the service manages the infrastructure, updates can be deployed quickly and easily. The service supports versioning, allowing users to roll back to previous versions of their workflows if necessary.

Integration with Other Services

Serverless Step Functions derive significant power from their seamless integration with a wide array of AWS services. This capability allows for the construction of complex, distributed applications and workflows without the need for extensive infrastructure management. The orchestration engine itself acts as a central coordinator, managing the execution of tasks across these services, enabling developers to focus on application logic rather than underlying infrastructure.

Integration with AWS Services

Serverless Step Functions integrate with various AWS services to build and execute complex workflows. The integration is facilitated through the use of state machine states that invoke these services. This enables the execution of various tasks, such as processing data, interacting with databases, and triggering notifications. The following are key examples:

- AWS Lambda: Lambda functions are a core component of Step Function workflows.

  - Lambda functions provide the ability to execute custom code.

  - Lambda functions perform tasks such as data transformation, business logic execution, and API calls.

  - Step Functions trigger Lambda functions based on the state machine’s definition.

  - Lambda’s output is then passed to the next state, allowing for data flow and decision-making within the workflow.

  - Example: A Step Function might use a Lambda function to resize an image uploaded to S3.

- Amazon S3: S3 provides durable object storage.

  - Step Functions can interact with S3 to store and retrieve data.

  - Step Functions can initiate tasks such as uploading, downloading, and deleting objects.

  - S3 can act as a data source or a destination for data processing within a workflow.

  - Example: A new file uploaded to an S3 bucket could start a Step Function, for instance through an Amazon EventBridge rule, initiating a workflow to process that file.

- Amazon DynamoDB: DynamoDB is a fully managed NoSQL database service.

  - Step Functions can interact with DynamoDB to read and write data.

  - This allows for the storage and retrieval of state information, configuration data, or application data.

  - DynamoDB can be used to persist data between steps in a workflow, so that later steps can reuse it.

  - Example: A Step Function might update a DynamoDB table to track the progress of a workflow or store results.

- Amazon SNS and SQS: These services are used for messaging and queuing.

  - SNS (Simple Notification Service) allows Step Functions to send notifications.

  - SQS (Simple Queue Service) allows Step Functions to send messages to queues for asynchronous processing.

  - These services enable decoupling of workflow steps and handling asynchronous operations.

  - Example: A Step Function could send a notification via SNS upon the completion of a task or enqueue a task to SQS for later processing.

- AWS Glue: Glue is a serverless data integration service.

  - Step Functions can invoke Glue jobs to perform ETL (Extract, Transform, Load) operations.

  - Glue allows data to be transformed, cleaned, and loaded into data warehouses or data lakes.

  - Example: A Step Function might use Glue to process data from S3 and load it into Amazon Redshift.
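Several of these integrations can be called directly from a Task state, without a Lambda function in between, by using a service integration resource. The sketch below records progress in DynamoDB and then publishes an SNS notification. The table name, topic ARN, and input field `jobId` are hypothetical; the `Resource` strings follow the service integration naming used by Step Functions.

```python
import json

integration_workflow = {
    "StartAt": "RecordProgress",
    "States": {
        "RecordProgress": {
            "Type": "Task",
            "Resource": "arn:aws:states:::dynamodb:putItem",
            "Parameters": {
                "TableName": "WorkflowProgress",  # hypothetical table
                "Item": {
                    "jobId": {"S.$": "$.jobId"},  # value taken from the state input
                    "status": {"S": "PROCESSED"},
                },
            },
            "ResultPath": None,  # JSON null: discard the result, keep the input
            "Next": "NotifyCompletion",
        },
        "NotifyCompletion": {
            "Type": "Task",
            "Resource": "arn:aws:states:::sns:publish",
            "Parameters": {
                "TopicArn": "arn:aws:sns:us-east-1:123456789012:job-events",  # placeholder
                "Message.$": "$.jobId",
            },
            "End": True,
        },
    },
}

print(json.dumps(integration_workflow, indent=2))
```

Keys ending in `.$` tell Step Functions to read the value from the state input rather than treat it as a literal string.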

Integrating with Third-Party APIs

Step Functions are not limited to integrating only with AWS services; they can also interact with external APIs, expanding their capabilities beyond the AWS ecosystem. This is usually achieved through Lambda functions that make API calls using HTTP requests. The integration process typically involves the following steps:

- Create a Lambda function: The Lambda function acts as a proxy between the Step Function and the third-party API.

  - The Lambda function handles the API request and response.

  - The function is responsible for constructing the HTTP request, including the URL, headers (e.g., API keys, authentication tokens), and payload.

  - The Lambda function also handles errors and parses the response.

- Configure API Credentials: Securely store API keys or authentication tokens.

  - Use AWS Secrets Manager or environment variables within the Lambda function to store sensitive information.

  - Avoid hardcoding credentials in the Lambda function code.

- Define the State Machine: Create a Step Function state machine that invokes the Lambda function.

  - The state machine defines the workflow and the order of operations.

  - Pass input data to the Lambda function.

  - Handle the Lambda function’s output.

- Handle API Responses: Parse and process the API response within the Lambda function.

  - The Lambda function should parse the API response, extracting relevant data.

  - The function can transform the data as needed before passing it to the next state.

  - Handle any errors returned by the API.

- Error Handling: Implement robust error handling to manage potential API issues.

  - Use retry mechanisms in the Step Function state machine to handle transient API errors.

  - Log errors and implement error handling within the Lambda function.

Example: Integrating with a weather API.

- A Lambda function is created to call a weather API. This function receives the city name as input and makes an HTTP GET request to the weather API (e.g., OpenWeatherMap).

- The Lambda function then parses the JSON response from the API to extract the current temperature.

- The Lambda function returns the temperature to the Step Function.

- The Step Function receives the temperature and uses it in subsequent steps, such as sending a notification if the temperature is above a certain threshold.
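A Lambda handler for this example might look like the sketch below, written with only the Python standard library. The endpoint URL, the query parameters, the `main.temp` response field, and the `WEATHER_API_KEY` environment variable are assumptions about the weather API and should be checked against its documentation. The optional `fetch` argument is a design choice of this sketch so the handler can be exercised without network access.

```python
import json
import os
import urllib.parse
import urllib.request

# Assumed endpoint for the weather API; verify against the provider's docs.
API_URL = "https://api.openweathermap.org/data/2.5/weather"


def fetch_json(url):
    """Perform an HTTP GET and decode the JSON body."""
    with urllib.request.urlopen(url, timeout=5) as response:
        return json.load(response)


def lambda_handler(event, context, fetch=fetch_json):
    """Look up the current temperature for event["city"]."""
    query = urllib.parse.urlencode({
        "q": event["city"],
        # Key read from the environment rather than hardcoded in the code.
        "appid": os.environ.get("WEATHER_API_KEY", ""),
        "units": "metric",
    })
    payload = fetch(f"{API_URL}?{query}")
    # Return only what later states need, keeping the state data small.
    return {"city": event["city"], "temperature": payload["main"]["temp"]}
```

For a local dry run, a stub can stand in for the HTTP call, for example `lambda_handler({"city": "Berlin"}, None, fetch=lambda url: {"main": {"temp": 31.5}})`. A downstream Choice state could then compare `$.temperature` with a threshold using `NumericGreaterThan`.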

Step Function State Machines

State machines are the core of AWS Step Functions, representing a directed graph that defines the workflow of a serverless application. They orchestrate a series of discrete steps, or “states,” each performing a specific task. These states transition based on the outcome of the previous state, allowing for conditional branching, error handling, and parallel execution, thereby providing a robust and scalable mechanism for managing complex business processes.

State Machines in Serverless Step Functions

Step Functions utilize state machines to define and execute workflows. A state machine is a representation of a process, consisting of a finite number of states and transitions between those states. Each state performs a specific function, such as calling a Lambda function, passing data, or making a decision based on input. The transitions are governed by the state’s outcome, dictating the next state to be executed.

This structure enables the creation of complex workflows that are resilient to failures and easily manageable.

Designing a State Machine for Order Processing

Order processing is a common business process well-suited for orchestration with Step Functions. A state machine can automate the entire process, from order placement to shipment and delivery. This example demonstrates a state machine designed to manage the different stages of order processing. The following is a breakdown of the state machine designed for automating the order processing business process. This state machine utilizes several state types, including Task states (to execute Lambda functions), Choice states (for conditional logic), and Pass states (for data manipulation).

- Start: This is the initial state, triggering the workflow. It typically receives order details as input.

- Validate Order: A Task state that invokes a Lambda function to validate the order details (e.g., checking for sufficient inventory, valid shipping address, and payment information). The Lambda function returns a success or failure status.

- Check Inventory: This Task state calls a Lambda function to check if the items ordered are in stock. It then returns a success or failure result.

- Process Payment: This Task state invokes a Lambda function to process the payment using a payment gateway. It receives order and payment details and returns a payment confirmation or rejection.

- Allocate Inventory: A Task state that calls a Lambda function to allocate the inventory to the order. This step reduces the available stock levels in the database.

- Prepare Shipment: This Task state calls a Lambda function to generate a shipping label and prepare the order for shipment.

- Ship Order: This Task state invokes a Lambda function that triggers the shipment process with the shipping carrier.

- Notify Customer: This Task state calls a Lambda function to send a notification to the customer confirming the order status and shipment details.

- Order Completed: This is a final state, marking the successful completion of the order processing workflow.

- Order Failed: This is a final state indicating the order processing workflow has failed, usually due to an error in one of the previous steps.

- Choice States: Several Choice states are integrated to handle the conditional logic of the process. These states evaluate the results of previous Task states and transition to different states based on the outcome. For example:

- If the order validation fails, the workflow transitions to the “Order Failed” state.

- If inventory is unavailable, the workflow transitions to the “Order Failed” state.

- If payment fails, the workflow transitions to the “Order Failed” state.
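The breakdown above can be sketched as an Amazon States Language definition, built here as a Python dictionary and serialized to JSON. This is a minimal sketch under stated assumptions, not a definitive implementation: the Lambda function names, the `status` field, and the `SUCCESS` value are hypothetical, and each function is assumed to return the order together with that field. The “Start” entry point is expressed through `StartAt` rather than as a separate state.

```python
import json

LAMBDA_INVOKE = "arn:aws:states:::lambda:invoke"


def lambda_task(function_name, next_state):
    """Task state that invokes a Lambda function (function names are hypothetical)."""
    return {
        "Type": "Task",
        "Resource": LAMBDA_INVOKE,
        "Parameters": {"FunctionName": function_name, "Payload.$": "$"},
        "OutputPath": "$.Payload",  # pass only the function's return value onward
        "Next": next_state,
    }


def status_choice(next_on_success):
    """Choice state: continue on SUCCESS, otherwise fall through to OrderFailed.

    Assumes each Lambda returns a JSON object with a "status" field.
    """
    return {
        "Type": "Choice",
        "Choices": [
            {"Variable": "$.status", "StringEquals": "SUCCESS", "Next": next_on_success}
        ],
        "Default": "OrderFailed",
    }


ORDER_WORKFLOW = {
    "Comment": "Order processing sketch",
    "StartAt": "ValidateOrder",
    "States": {
        "ValidateOrder": lambda_task("validate-order", "IsOrderValid"),
        "IsOrderValid": status_choice("CheckInventory"),
        "CheckInventory": lambda_task("check-inventory", "IsInventoryAvailable"),
        "IsInventoryAvailable": status_choice("ProcessPayment"),
        "ProcessPayment": lambda_task("process-payment", "IsPaymentSuccessful"),
        "IsPaymentSuccessful": status_choice("AllocateInventory"),
        "AllocateInventory": lambda_task("allocate-inventory", "PrepareShipment"),
        "PrepareShipment": lambda_task("prepare-shipment", "ShipOrder"),
        "ShipOrder": lambda_task("ship-order", "NotifyCustomer"),
        "NotifyCustomer": lambda_task("notify-customer", "OrderCompleted"),
        "OrderCompleted": {"Type": "Succeed"},
        "OrderFailed": {
            "Type": "Fail",
            "Error": "OrderProcessingFailed",
            "Cause": "Validation, inventory, or payment check did not succeed",
        },
    },
}

if __name__ == "__main__":
    print(json.dumps(ORDER_WORKFLOW, indent=2))
```

Generating the definition from small helper functions keeps the repeated Task and Choice boilerplate in one place; the printed JSON is what would be supplied as the state machine definition.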

Diagram of the Order Processing State Machine

The following diagram illustrates the flow of the order processing state machine. The diagram visually represents the steps and transitions, making it easier to understand the workflow’s logic and how data flows between states.

The diagram is structured as follows:

1. Start State

Represented by a rounded rectangle labeled “Start”. This is the entry point of the workflow, receiving the initial order details.

2. Validate Order State

A rounded rectangle, connected to “Start” with an arrow, labeled “Validate Order”. This state calls a Lambda function to validate the order information.

3. Choice State

“Order Valid?”: A diamond-shaped state, connected to “Validate Order”. This is a Choice state. Two arrows emerge from this state, labeled “Yes” and “No.”

- The “Yes” arrow points to “Check Inventory”.

- The “No” arrow points to “Order Failed”.

4. Check Inventory State

A rounded rectangle, connected to “Order Valid?” (Yes) and labeled “Check Inventory”. This state invokes a Lambda function to check the inventory availability.

5. Choice State

“Inventory Available?”: A diamond-shaped state, connected to “Check Inventory”. Two arrows emerge from this state, labeled “Yes” and “No.”

- The “Yes” arrow points to “Process Payment”.

- The “No” arrow points to “Order Failed”.

6. Process Payment State

A rounded rectangle, connected to “Inventory Available?” (Yes) and labeled “Process Payment”. This state processes the payment.

7. Choice State

“Payment Successful?”: A diamond-shaped state, connected to “Process Payment”. Two arrows emerge from this state, labeled “Yes” and “No.”

- The “Yes” arrow points to “Allocate Inventory”.

- The “No” arrow points to “Order Failed”.

8. Allocate Inventory State

A rounded rectangle, connected to “Payment Successful?” (Yes) and labeled “Allocate Inventory”. This state allocates the inventory to the order.

9. Prepare Shipment State

A rounded rectangle, connected to “Allocate Inventory” and labeled “Prepare Shipment”.

10. Ship Order State

A rounded rectangle, connected to “Prepare Shipment” and labeled “Ship Order”.

11. Notify Customer State

A rounded rectangle, connected to “Ship Order” and labeled “Notify Customer”.

12. Order Completed State

A rounded rectangle, connected to “Notify Customer” and labeled “Order Completed”. This is a final state.

13. Order Failed State

A rounded rectangle, connected to “Order Valid?” (No), “Inventory Available?” (No), and “Payment Successful?” (No) and labeled “Order Failed”. This is a final state.

The diagram clearly illustrates the sequential execution of steps, the conditional branching based on outcomes, and the error handling paths that lead to the “Order Failed” state. This structure enables effective monitoring and management of the order processing workflow.

Error Handling and Retry Mechanisms

Error handling is a critical aspect of building robust and reliable serverless step functions. Due to the distributed nature of serverless architectures, failures can occur at any stage of a workflow. Implementing effective error handling and retry mechanisms ensures that workflows are resilient to transient issues and can recover gracefully from failures, minimizing data loss and maintaining service availability. This section details methods for managing exceptions and implementing retry strategies within Step Functions.

Importance of Error Handling in Serverless Step Functions

The significance of error handling stems from the inherent characteristics of serverless environments. Transient errors, such as temporary network outages or service throttling, are common. Without proper error handling, these issues can lead to workflow failures and data inconsistencies. Effective error handling ensures that these issues are addressed and mitigated, and the workflow is able to continue as intended.

Methods for Implementing Retry Mechanisms for Failed Tasks

Implementing retry mechanisms is essential for dealing with transient errors in Step Functions. Several strategies can be employed to automatically retry failed tasks, enhancing the resilience of the workflow.

- Built-in Retries: Step Functions provides built-in retry mechanisms that are configured through the `Retry` field of a Task, Parallel, or Map state. These retries are triggered automatically when the state fails with a matching error.

- Retry Parameters: When configuring a retry, several parameters can be defined, including:

- ErrorEquals: Specifies the error names that trigger a retry, such as `States.Timeout`, `States.TaskFailed`, or the wildcard `States.ALL`.

- IntervalSeconds: Defines the delay before the first retry attempt (default 1 second).

- MaxAttempts: Sets the maximum number of retry attempts (default 3).

- BackoffRate: The multiplier applied to the delay after each attempt, producing exponential backoff (default 2.0).

- Custom Retry Logic: For more complex scenarios, combine `Retry` with the `Catch` field of a state. `Catch` routes specific error types to dedicated recovery states, so some errors can be retried immediately while others pass through a Wait state that imposes a longer delay before the task is attempted again.
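The retry parameters above interact in a simple way: each retry waits `IntervalSeconds` multiplied by `BackoffRate` raised to the number of retries already made. The sketch below shows a `Retry` block as a Python dictionary and computes the resulting delays. The error names listed and the numeric values are illustrative assumptions, and the helper `retry_delays` is hypothetical; it ignores any optional caps or jitter settings.

```python
# A Retry block as it would appear inside a Task state definition.
RETRY_POLICY = [
    {
        "ErrorEquals": ["States.Timeout", "Lambda.TooManyRequestsException"],
        "IntervalSeconds": 2,   # delay before the first retry
        "MaxAttempts": 4,       # number of retries after the initial attempt
        "BackoffRate": 2.0,     # multiplier applied after each retry
    }
]


def retry_delays(retrier):
    """Return the wait (in seconds) before each retry for one retrier.

    Falls back to the documented defaults when a field is omitted:
    IntervalSeconds=1, MaxAttempts=3, BackoffRate=2.0.
    """
    interval = retrier.get("IntervalSeconds", 1)
    attempts = retrier.get("MaxAttempts", 3)
    rate = retrier.get("BackoffRate", 2.0)
    return [interval * rate ** n for n in range(attempts)]


if __name__ == "__main__":
    for retrier in RETRY_POLICY:
        print(retrier["ErrorEquals"], retry_delays(retrier))
```

With the values shown, the waits grow geometrically, which is what keeps a struggling downstream service from being hit at a constant rate.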

Examples of Handling Exceptions and Implementing Compensation Logic

Exception handling and compensation logic are crucial for managing errors and ensuring data consistency. Step Functions uses the `Catch` field of Task, Parallel, and Map states to handle exceptions and route the workflow to compensating transactions that undo any partially completed operations.

- Exception Handling with `Catch`: The `Catch` field specifies what to do when a state fails and its retries, if any, are exhausted. This can include:

- Error Filtering: The `ErrorEquals` parameter can be used to specify which errors to catch.

- State Transitions: When an error is caught, the workflow can transition to another state, such as a retry state or a compensation state.

- Implementing Compensation Logic: Compensation logic is essential when a workflow involves multiple steps that update resources. If a step fails, compensation logic ensures that any previous changes are undone. This is typically achieved by:

- Identifying Resources: Determine which resources were affected by the failed step.

- Reverting Changes: Implement compensating actions to revert changes to the resources. This might involve deleting records, updating data to previous states, or other undo operations.

- Using Parallel States: Parallel states can be used to execute compensation logic concurrently, speeding up the recovery process.
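The points above can be combined into one fragment: a task with a `Catch` clause that routes any error to a Parallel state running two compensating actions at once. This is a sketch under assumptions, written as a Python dictionary that serializes to Amazon States Language. The function names (`prepare-shipment`, `refund-payment`, `release-inventory`) and the scenario (shipment preparation failing after payment and allocation) are hypothetical.

```python
import json

LAMBDA_INVOKE = "arn:aws:states:::lambda:invoke"


def invoke(function_name):
    """Common fields for a Lambda-invoking Task state (names are hypothetical)."""
    return {
        "Type": "Task",
        "Resource": LAMBDA_INVOKE,
        "Parameters": {"FunctionName": function_name, "Payload.$": "$"},
    }


def branch(state_name, function_name):
    """A single-state branch for use inside a Parallel state."""
    return {"StartAt": state_name, "States": {state_name: {**invoke(function_name), "End": True}}}


COMPENSATION_WORKFLOW = {
    "StartAt": "PrepareShipment",
    "States": {
        "PrepareShipment": {
            **invoke("prepare-shipment"),
            # Retry transient failures first; Catch applies once retries are exhausted.
            "Retry": [{"ErrorEquals": ["States.Timeout"], "MaxAttempts": 2}],
            "Catch": [
                {
                    "ErrorEquals": ["States.ALL"],  # error filtering
                    "ResultPath": "$.error",        # keep the original input, add error info
                    "Next": "Compensate",           # state transition on failure
                }
            ],
            "End": True,
        },
        "Compensate": {
            "Type": "Parallel",  # run both undo actions concurrently
            "Branches": [
                branch("RefundPayment", "refund-payment"),
                branch("ReleaseInventory", "release-inventory"),
            ],
            "ResultPath": "$.compensation",
            "Next": "OrderFailed",
        },
        "OrderFailed": {
            "Type": "Fail",
            "Error": "ShipmentPreparationFailed",
            "Cause": "Shipment could not be prepared; payment refunded and inventory released",
        },
    },
}

if __name__ == "__main__":
    print(json.dumps(COMPENSATION_WORKFLOW, indent=2))
```

Setting `ResultPath` on the catcher preserves the original order data alongside the error details, so the compensating functions still know which order to refund and which items to release.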

Monitoring and Logging

Effective monitoring and logging are critical for maintaining the health, performance, and reliability of serverless Step Functions. They provide insights into workflow execution, enabling proactive identification and resolution of issues. Robust monitoring and logging capabilities are essential for troubleshooting, performance optimization, and ensuring compliance with service level agreements (SLAs).

Available Monitoring and Logging Capabilities

Serverless Step Functions offer several built-in monitoring and logging features. These tools allow for comprehensive tracking of workflow executions, facilitating a deeper understanding of system behavior.

- AWS CloudWatch Logs: When logging is enabled on a state machine, CloudWatch Logs records the execution history events of each execution. Depending on the configured log level and on whether execution data is included, these logs capture the input and output of each state, timestamps, execution status (e.g., success, failure, timeout), and any error messages. This granular logging is invaluable for diagnosing problems.

- AWS CloudWatch Metrics: CloudWatch Metrics automatically collects metrics for Step Functions, providing insights into performance and usage. These metrics include:

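- Executions: Counts of started, succeeded, failed, timed-out, and aborted executions (for example, the `ExecutionsStarted`, `ExecutionsSucceeded`, and `ExecutionsFailed` metrics).

- Execution Time: The elapsed time of executions (`ExecutionTime`), whose average and maximum statistics provide a measure of workflow performance.

- Throttled Executions: Number of throttled executions (`ExecutionThrottled`), indicating potential capacity issues.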

- Errors: Number of errors, categorized by type, allowing for rapid identification of recurring issues.

- AWS X-Ray: AWS X-Ray can be integrated with Step Functions to provide end-to-end tracing of requests as they flow through the workflow. X-Ray allows you to visualize the flow of requests across different services, identifying bottlenecks and performance issues. This is particularly useful for complex workflows that involve multiple services.

- AWS Step Functions Console: The AWS Step Functions console provides a visual representation of workflow executions, including a graphical view of state transitions and detailed execution history. This console allows for easy browsing of execution details and identification of problem areas.

Troubleshooting Workflow Issues Using Monitoring and Logging

Leveraging monitoring and logging effectively is key to troubleshooting issues within Step Functions. The combination of detailed logs, performance metrics, and tracing capabilities provides a comprehensive toolkit for diagnosing and resolving problems.

- Identifying Errors: Analyzing CloudWatch Logs allows you to pinpoint the exact state where an error occurred. The logs contain detailed error messages, including the error type, the error cause, and any stack trace the failing task reported. Reviewing these entries usually narrows down the root cause of a failure quickly.

- Performance Analysis: CloudWatch Metrics provide insights into workflow performance, such as average execution time and the number of throttled executions. If execution times are consistently high, or if there are frequent throttling errors, this may indicate a need for optimization, such as adjusting resource allocations or optimizing state configurations.

- Debugging with X-Ray: X-Ray helps visualize the flow of requests through a workflow, revealing bottlenecks and performance issues across different services. By tracing the execution path, developers can identify where a request is spending the most time, helping to pinpoint performance problems.

- Reviewing Execution History: The Step Functions console provides a graphical representation of workflow executions, enabling the review of individual execution steps. This allows for the visual inspection of state transitions, input/output data, and error details.
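The same execution history shown in the console can also be inspected programmatically. The sketch below scans a list of history events, shaped like the `events` list returned by the `GetExecutionHistory` API, and reports the state that was active when the first failure occurred. The helper `find_failure` and the sample events are hypothetical; the code assumes that the details key of a failure event is the event type in lower camel case followed by `EventDetails`, as in `taskFailedEventDetails`.

```python
def find_failure(events):
    """Return the first failure in an execution history, or None.

    The result names the most recently entered state plus the reported
    error and cause.
    """
    last_state = None
    for event in events:
        event_type = event["type"]
        if event_type.endswith("StateEntered"):
            last_state = event["stateEnteredEventDetails"]["name"]
        elif event_type.endswith("Failed"):
            # e.g. "TaskFailed" -> "taskFailedEventDetails" (assumed naming pattern)
            details_key = event_type[0].lower() + event_type[1:] + "EventDetails"
            details = event.get(details_key, {})
            return {
                "state": last_state,
                "event_type": event_type,
                "error": details.get("error"),
                "cause": details.get("cause"),
            }
    return None


# Hypothetical events for illustration only.
SAMPLE_EVENTS = [
    {"id": 1, "type": "ExecutionStarted"},
    {"id": 2, "type": "TaskStateEntered", "stateEnteredEventDetails": {"name": "ValidateOrder"}},
    {"id": 3, "type": "TaskStateExited", "stateExitedEventDetails": {"name": "ValidateOrder"}},
    {"id": 4, "type": "TaskStateEntered", "stateEnteredEventDetails": {"name": "ProcessPayment"}},
    {
        "id": 5,
        "type": "TaskFailed",
        "taskFailedEventDetails": {"error": "PaymentDeclined", "cause": "Card rejected"},
    },
    {
        "id": 6,
        "type": "ExecutionFailed",
        "executionFailedEventDetails": {"error": "PaymentDeclined", "cause": "Card rejected"},
    },
]

if __name__ == "__main__":
    print(find_failure(SAMPLE_EVENTS))
```

In practice the events would come from an SDK call rather than a literal list; keeping the parsing separate from the API call makes the logic easy to test offline.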

Setting Up Monitoring and Alerting for a Step Function

Implementing monitoring and alerting is a proactive measure to ensure the reliability and responsiveness of Step Functions. This process involves configuring CloudWatch alarms to detect specific conditions and trigger notifications.

- Creating CloudWatch Alarms: Define CloudWatch alarms based on relevant metrics. For example, you could create an alarm to trigger when the number of failed executions exceeds a specific threshold or when the average execution time exceeds a certain limit.

- Defining Alarm Thresholds: Set appropriate thresholds for each alarm. These thresholds should be based on expected performance and error rates. Regularly review and adjust these thresholds as needed.

- Configuring Notifications: Configure notifications for the alarms. These notifications can be sent via email, SMS, or other channels using Amazon Simple Notification Service (SNS). Notifications should be directed to the appropriate individuals or teams responsible for addressing issues.

- Setting up Dashboards: Create CloudWatch dashboards to visualize key metrics and alarms. These dashboards provide a centralized view of the Step Function’s health and performance. This allows for quick identification of potential problems.

- Automated Remediation (Optional): Integrate with AWS Lambda to create automated remediation actions. For example, if an alarm triggers due to a high error rate, a Lambda function could be invoked to automatically retry failed executions or take other corrective actions.
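As one possible way to script the first three steps, the sketch below assembles the parameters for a CloudWatch alarm on failed executions. It only builds the argument dictionary, so it runs without AWS credentials; the resulting dictionary is intended to be passed to the boto3 CloudWatch client as `put_metric_alarm(**params)`. The ARNs, the alarm name, and the chosen threshold and period are placeholder assumptions to be adapted.

```python
def failed_executions_alarm(state_machine_arn, sns_topic_arn, threshold=1, period_seconds=300):
    """Build put_metric_alarm parameters for the ExecutionsFailed metric.

    The alarm fires when the number of failed executions in one period
    reaches `threshold`, and notifies the given SNS topic.
    """
    machine_name = state_machine_arn.rsplit(":", 1)[-1]
    return {
        "AlarmName": f"{machine_name}-failed-executions",
        "AlarmDescription": "Failed Step Functions executions reached the threshold",
        "Namespace": "AWS/States",
        "MetricName": "ExecutionsFailed",
        "Dimensions": [{"Name": "StateMachineArn", "Value": state_machine_arn}],
        "Statistic": "Sum",
        "Period": period_seconds,
        "EvaluationPeriods": 1,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        "TreatMissingData": "notBreaching",  # no executions means no alarm
        "AlarmActions": [sns_topic_arn],
    }


if __name__ == "__main__":
    # Placeholder ARNs for illustration only.
    params = failed_executions_alarm(
        "arn:aws:states:us-east-1:123456789012:stateMachine:order-processing",
        "arn:aws:sns:us-east-1:123456789012:workflow-alerts",
    )
    for key, value in params.items():
        print(f"{key}: {value}")
```

Treating missing data as not breaching is a deliberate choice here: a quiet period with no executions should not page anyone.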

Use Cases and Applications

Serverless Step Functions offer a robust framework for orchestrating complex workflows across various domains. Their flexibility, scalability, and integration capabilities make them suitable for a wide array of real-world applications. This section will explore specific use cases, focusing on data processing pipelines and providing a detailed example of workflow implementation.

Real-World Use Cases

Step Functions find application in numerous industries and scenarios, demonstrating their versatility. They excel at coordinating disparate tasks and managing the flow of data, making them a powerful tool for building efficient and scalable applications.

- Data Processing Pipelines: Automating the ingestion, transformation, and loading (ETL) of data from various sources. This includes data cleansing, enrichment, and preparing data for analytics.

- Microservices Orchestration: Coordinating the execution of multiple microservices to build complex business applications. This enables the creation of modular and scalable systems.

- API Orchestration: Managing the execution of API calls, handling dependencies, and managing retries. This is crucial for integrating different services and ensuring reliability.

- Machine Learning Workflows: Automating the training, evaluation, and deployment of machine learning models. This streamlines the entire model lifecycle.

- Business Process Automation: Automating business processes such as order processing, customer onboarding, and approval workflows. This reduces manual effort and improves efficiency.

- IoT Device Management: Orchestrating the collection, processing, and analysis of data from IoT devices. This enables real-time monitoring and control of devices.

Data Processing Pipelines Examples

Data processing pipelines are a primary area where Step Functions shine. These pipelines typically involve a series of steps, such as data ingestion, transformation, cleansing, and loading into a data warehouse or data lake. The ability to handle errors, retry failed steps, and monitor the entire process makes Step Functions an ideal solution for building reliable and scalable data pipelines.

- ETL Pipelines: Extracting data from various sources (databases, APIs, files), transforming it (cleaning, filtering, aggregating), and loading it into a data warehouse.

- Log Processing: Ingesting log data, parsing it, performing analysis (e.g., identifying anomalies), and storing the results.

- Image and Video Processing: Processing images and videos, including tasks such as resizing, transcoding, and applying machine learning models for object detection or facial recognition.

Specific Use Case: Automated Image Processing Pipeline

This use case illustrates how Step Functions can be used to build an automated image processing pipeline. This pipeline takes an uploaded image, performs several transformations, and then stores the processed image.

The workflow begins with a ‘Receive Image’ step, triggered by an event such as an object upload to an Amazon S3 bucket. This step retrieves the image from S3. The workflow then proceeds to a ‘Resize Image’ step, which uses an AWS Lambda function to resize the image to a predefined size. Next, an ‘Apply Filters’ step applies various image filters using another Lambda function. This function might include operations such as contrast adjustments, color corrections, or the application of specific artistic filters.

After applying filters, the workflow moves to a ‘Detect Objects’ step, which uses Amazon Rekognition to identify objects within the image. Finally, the ‘Store Results’ step saves the processed image and the object detection results to a designated Amazon S3 bucket.

Each step is designed to handle potential errors and includes retry mechanisms to keep the workflow robust. The workflow also incorporates logging and monitoring, allowing detailed tracking of the pipeline’s progress and performance. The complete workflow, from image upload to the storage of processed results, is fully automated and scalable, and it shows how Step Functions combines with services such as Amazon S3, Lambda, and Rekognition.
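A possible definition for this pipeline is sketched below as a Python dictionary that serializes to Amazon States Language. Several details are assumptions rather than part of the use case as described: the execution input is taken to be an object with `bucket` and `key` fields, the Lambda function names are hypothetical, each Lambda is assumed to return the `bucket` and `key` of the image it produced, and the ‘Receive Image’ step is folded into the first Lambda task. The ‘Detect Objects’ step calls the Rekognition `DetectLabels` operation through the AWS SDK service integration.

```python
import json

# Retry policy shared by every task; the values are illustrative.
DEFAULT_RETRY = [
    {"ErrorEquals": ["States.TaskFailed"], "IntervalSeconds": 2, "MaxAttempts": 3, "BackoffRate": 2.0}
]


def lambda_step(function_name, next_state=None):
    """Lambda Task state; assumes the function returns {"bucket": ..., "key": ...}."""
    state = {
        "Type": "Task",
        "Resource": "arn:aws:states:::lambda:invoke",
        "Parameters": {"FunctionName": function_name, "Payload.$": "$"},
        "OutputPath": "$.Payload",
        "Retry": DEFAULT_RETRY,
    }
    if next_state:
        state["Next"] = next_state
    else:
        state["End"] = True
    return state


IMAGE_PIPELINE = {
    "Comment": "Image processing pipeline sketch",
    "StartAt": "ReceiveImage",
    "States": {
        "ReceiveImage": lambda_step("receive-image", "ResizeImage"),
        "ResizeImage": lambda_step("resize-image", "ApplyFilters"),
        "ApplyFilters": lambda_step("apply-filters", "DetectObjects"),
        "DetectObjects": {
            "Type": "Task",
            "Resource": "arn:aws:states:::aws-sdk:rekognition:detectLabels",
            "Parameters": {
                "Image": {"S3Object": {"Bucket.$": "$.bucket", "Name.$": "$.key"}},
                "MaxLabels": 10,
            },
            "ResultSelector": {"labels.$": "$.Labels"},  # keep only the label list
            "ResultPath": "$.detection",                 # attach it next to bucket/key
            "Retry": DEFAULT_RETRY,
            "Next": "StoreResults",
        },
        "StoreResults": lambda_step("store-results"),
    },
}

if __name__ == "__main__":
    print(json.dumps(IMAGE_PIPELINE, indent=2))
```

Calling Rekognition directly from the state machine avoids a Lambda function whose only job would be to forward the request.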

Best Practices for Implementation

Designing and implementing serverless Step Functions requires a strategic approach to ensure optimal performance, cost-effectiveness, and security. Following established best practices minimizes potential pitfalls and maximizes the benefits of serverless orchestration. This section details these practices, covering performance optimization, cost minimization, and security considerations.

Designing for Scalability and Resilience

Effective Step Function design emphasizes scalability and resilience to handle varying workloads and potential failures gracefully. This involves careful consideration of state transitions, error handling, and resource allocation.

- Define Clear State Boundaries: Each state within a Step Function should perform a single, well-defined task. This modularity enhances readability, maintainability, and facilitates independent scaling. Complex operations should be broken down into smaller, reusable states.

- Implement Idempotent Actions: Actions that can be executed multiple times without unintended side effects are crucial for resilience. This is especially important for retry mechanisms. If a task fails and is retried, an idempotent action ensures the system remains in a consistent state. For example, update a database record conditionally on a specific version number rather than overwriting it without version control.

- Utilize Parallelism Strategically: Step Functions allow for parallel execution of states. This can significantly reduce execution time for tasks that are not dependent on each other. For example, a Map state can process multiple image files concurrently rather than sequentially. However, overuse of parallelism can lead to increased costs if not managed carefully.

- Design for Error Handling: Implement robust error handling and retry mechanisms. Configure retries with exponential backoff to avoid overwhelming downstream services during transient failures. Define appropriate error handling within each state to gracefully manage exceptions. Use the `Catch` field on Task, Parallel, and Map states to handle specific errors and route to alternative paths.

- Employ Timeouts: Set appropriate timeouts for each state to prevent long-running tasks from blocking the workflow. Timeouts can also help prevent runaway costs.

- Version Control: Implement version control for your Step Function definitions. This enables tracking changes, rolling back to previous versions, and managing deployments.
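The idempotency guideline is the one most often broken by retries, so a small illustration may help. The sketch below uses an in-memory store with an idempotency key; the class and method names are hypothetical, and a real task would rely on a conditional write in its database rather than a Python set.

```python
class InventoryStore:
    """In-memory stand-in for an inventory table (illustrative only)."""

    def __init__(self, stock):
        self.stock = dict(stock)
        self._applied = set()  # idempotency keys of allocations already made

    def allocate(self, order_id, sku, quantity):
        """Reserve stock for an order exactly once, even if called repeatedly.

        A retried task invocation carries the same (order_id, sku) key, so the
        second call returns the current level without decrementing again.
        """
        key = (order_id, sku)
        if key in self._applied:
            return self.stock[sku]
        if self.stock[sku] < quantity:
            raise ValueError(f"insufficient stock for {sku}")
        self.stock[sku] -= quantity
        self._applied.add(key)
        return self.stock[sku]


if __name__ == "__main__":
    store = InventoryStore({"widget": 10})
    store.allocate("order-1", "widget", 3)
    store.allocate("order-1", "widget", 3)  # simulated retry of the same task
    print(store.stock["widget"])
```

Because the retry reuses the same key, stock is decremented once no matter how many times the task is re-run.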

Optimizing Performance

Optimizing Step Function performance is essential for achieving low latency and efficient resource utilization. This involves choosing the right AWS services and configuring them appropriately.

- Choose the Right Service Integrations: Step Functions integrates with various AWS services. Select the most appropriate service for each task based on its performance characteristics and cost. For example, using AWS Lambda for short-lived, compute-intensive tasks and AWS Batch for large-scale batch processing.

- Optimize Lambda Functions: Lambda functions are frequently used within Step Functions. Optimize Lambda function performance by:

- Reducing function code size.

- Optimizing code for execution speed.

- Configuring appropriate memory allocation.

- Using provisioned concurrency to reduce cold start times.

- Minimize Data Transfer: Reduce the amount of data passed between states to minimize latency and costs. Use `ResultPath` and `ResultSelector` to selectively process and filter data.

- Monitor Performance Metrics: Regularly monitor Step Function execution metrics using Amazon CloudWatch. Track execution times, error rates, and resource utilization to identify performance bottlenecks and areas for optimization.

- Use Distributed Tracing: Implement distributed tracing using AWS X-Ray to gain insights into the performance of individual states and service integrations. This helps identify slow-performing components within a workflow.
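For the data-transfer point, the sketch below shows a Task state that keeps only two fields of a Lambda response by means of `ResultSelector` and `ResultPath`, together with a helper that checks a payload against the 256 KiB limit on state input and output. The function name `validate-order`, the selected fields, and the helper `fits_payload_limit` are hypothetical.

```python
import json

MAX_PAYLOAD_BYTES = 256 * 1024  # limit on state input/output, as a UTF-8 encoded string

VALIDATE_ORDER_STATE = {
    "Type": "Task",
    "Resource": "arn:aws:states:::lambda:invoke",
    "Parameters": {"FunctionName": "validate-order", "Payload.$": "$"},
    # The raw result also carries invocation metadata; keep just what later states need.
    "ResultSelector": {
        "orderId.$": "$.Payload.orderId",
        "status.$": "$.Payload.status",
    },
    # Attach the trimmed result under one key instead of replacing the whole input.
    "ResultPath": "$.validation",
    "Next": "IsOrderValid",
}


def fits_payload_limit(payload):
    """Return True if the JSON-serialized payload is within the size limit."""
    return len(json.dumps(payload).encode("utf-8")) <= MAX_PAYLOAD_BYTES


if __name__ == "__main__":
    print(json.dumps(VALIDATE_ORDER_STATE, indent=2))
    print(fits_payload_limit({"orderId": "order-1", "status": "SUCCESS"}))
```

For data that cannot be trimmed below the limit, a common pattern is to store it in Amazon S3 and pass only the bucket and key between states.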

Minimizing Costs

Cost optimization is a critical aspect of serverless architecture. Step Functions, while cost-effective, can still incur significant costs if not managed carefully.

- Choose the Appropriate Workflow Type: Step Functions offers two workflow types: Standard and Express. Standard workflows suit long-running processes (up to one year) that need a durable, auditable execution history, and they are billed per state transition. Express workflows are designed for high-volume, short-duration workloads (up to five minutes) and are billed by the number of requests and by their duration and memory use. Choose the type that aligns with your workflow requirements to minimize costs.

- Optimize State Transitions: In Standard workflows, each state transition incurs a cost. Minimize the number of state transitions by designing workflows that efficiently chain tasks together.

- Right-Size Resources: Ensure that the resources used by your Step Functions, such as Lambda functions and other integrated services, are appropriately sized for the workload. Avoid over-provisioning resources, which can lead to unnecessary costs.

- Implement Cost Alerts: Set up cost alerts using AWS Budgets to monitor your Step Function costs and receive notifications when spending exceeds a predefined threshold.

- Review and Refactor Regularly: Periodically review your Step Function definitions and identify areas for cost optimization. Refactor workflows to improve efficiency and reduce resource consumption.
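Because Standard workflows are billed per state transition, a back-of-the-envelope estimate is straightforward. The helper below is a hypothetical sketch: the per-thousand-transitions price is a parameter, and the figure used in the example is illustrative only, so current regional pricing should be checked before relying on the result.

```python
def standard_workflow_cost(executions, transitions_per_execution, price_per_1000_transitions):
    """Estimate the state-transition charge for Standard workflows.

    Ignores any free tier and the cost of the services invoked by the
    workflow (Lambda, Rekognition, and so on).
    """
    total_transitions = executions * transitions_per_execution
    return total_transitions / 1000 * price_per_1000_transitions


if __name__ == "__main__":
    # Illustrative inputs: 100,000 executions per month, 14 transitions each,
    # at an assumed price of 0.025 USD per 1,000 transitions.
    before = standard_workflow_cost(100_000, 14, 0.025)
    # The same workload after merging steps so each execution needs 9 transitions.
    after = standard_workflow_cost(100_000, 9, 0.025)
    print(f"before: {before:.2f} USD, after: {after:.2f} USD")
```

Comparing the two figures shows why merging trivially small steps can matter at volume, although fewer, larger steps also make retries coarser.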

Securing Serverless Step Functions

Security is paramount in serverless environments. Securing Step Functions involves implementing various security best practices to protect data, prevent unauthorized access, and ensure the integrity of workflows.

- Follow the Principle of Least Privilege: Grant Step Functions and their associated resources only the necessary permissions to perform their tasks. Avoid granting overly permissive permissions, which can increase the risk of security breaches. Use IAM roles to manage permissions effectively.

- Encrypt Sensitive Data: Encrypt sensitive data stored in Step Functions, such as data passed between states and data stored in integrated services. Utilize AWS Key Management Service (KMS) for key management.

- Securely Store Credentials: Avoid hardcoding credentials within your Step Function definitions. Store credentials securely using AWS Secrets Manager or other secure credential management solutions.

- Validate Input Data: Implement input validation at the beginning of your workflows to prevent malicious input from compromising your system. Validate data types, formats, and ranges.

- Monitor and Audit Access: Enable logging and monitoring for your Step Functions to track execution events, errors, and access attempts. Regularly review logs to identify and address potential security threats. Use AWS CloudTrail to audit API calls made to Step Functions and other AWS services.

- Use VPC Endpoints: If workloads running inside a Virtual Private Cloud (VPC) need to call Step Functions or other AWS services, configure VPC endpoints for those services so that traffic does not travel over the public internet.

- Regularly Update Dependencies: Keep your Lambda function dependencies and other components up-to-date to patch security vulnerabilities.
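To illustrate the least-privilege guideline, the sketch below generates the two IAM policy documents an execution role typically needs: a trust policy that lets the Step Functions service assume the role, and a permissions policy limited to invoking specific Lambda functions. The function ARNs are placeholders, and the helper names are hypothetical; real workflows usually need further statements for logging, tracing, or other integrations.

```python
import json


def trust_policy():
    """Trust policy allowing the Step Functions service to assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "states.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def invoke_only_policy(function_arns):
    """Permissions policy restricted to invoking the listed Lambda functions."""
    if not function_arns:
        raise ValueError("at least one function ARN is required")
    if any("*" in arn for arn in function_arns):
        raise ValueError("wildcards defeat the purpose of least privilege")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "lambda:InvokeFunction",
                "Resource": sorted(function_arns),
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder ARNs for illustration only.
    arns = [
        "arn:aws:lambda:us-east-1:123456789012:function:validate-order",
        "arn:aws:lambda:us-east-1:123456789012:function:process-payment",
    ]
    print(json.dumps(trust_policy(), indent=2))
    print(json.dumps(invoke_only_policy(arns), indent=2))
```

Rejecting wildcard ARNs in the helper is a small guard against the most common way such policies drift toward being overly permissive.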

Final Summary

In conclusion, serverless Step Functions offer a compelling solution for orchestrating workflows, providing a scalable, cost-effective, and manageable approach to automating complex processes. By combining state machines with integrations to other services, organizations can build robust and resilient applications that adapt to evolving business needs. Adopting Step Functions lets developers focus on building solutions rather than managing infrastructure.

Query Resolution

What are the primary benefits of using serverless Step Functions?

Step Functions offer several key benefits, including scalability, cost-effectiveness (pay-per-use model), simplified management (no server provisioning), improved reliability (built-in error handling and retries), and increased developer productivity (focus on business logic rather than infrastructure).

How does error handling work in serverless Step Functions?

Step Functions provide robust error handling capabilities, allowing you to define retry mechanisms, implement compensation logic (e.g., undoing previous actions), and handle exceptions gracefully. This ensures the resilience of your workflows and prevents failures from cascading.

Can serverless Step Functions integrate with external APIs?

Yes, Step Functions can integrate with external APIs using an HTTP Task, which is a Task state that calls an HTTPS endpoint directly, or by invoking a Lambda function that makes the call. This allows you to call third-party services, retrieve data, and incorporate external actions into your workflows, expanding the functionality of your applications.

What is the role of state machines in Step Functions?

State machines define the workflow logic in Step Functions. They specify the sequence of tasks, transitions between states, and error handling mechanisms. Each state machine represents a specific process, allowing you to visually model and manage complex workflows.