What is continuous data replication for migration? It represents a paradigm shift in how we approach data movement, transforming the often-painful process of migration into a streamlined and minimally disruptive operation. This method moves data in real-time or near real-time, ensuring data consistency and availability throughout the transition.

While traditional migration methods often involve significant downtime and risk data loss, continuous data replication (CDR) offers a more robust and efficient alternative. CDR minimizes downtime by continuously replicating data from the source to the target environment, allowing for a seamless cutover. This approach is crucial for modern migration strategies, driven by the need for uninterrupted business operations and the desire to minimize the impact on users and applications.

CDR’s advantages extend beyond mere efficiency; it also enhances data integrity and provides a more reliable path to achieving migration goals, particularly in complex environments like cloud migrations, database upgrades, and application modernizations.

Introduction to Continuous Data Replication (CDR)

Continuous Data Replication (CDR) represents a critical methodology in modern data migration, ensuring minimal downtime and data loss during the transition process. CDR involves the ongoing synchronization of data from a source system to a target system, maintaining a consistent and up-to-date copy of the data in the new environment. This real-time or near-real-time synchronization is crucial for maintaining business continuity and operational efficiency during migrations.

CDR is essential for modern migration strategies due to its ability to mitigate risks associated with traditional, batch-oriented approaches.

By continuously replicating data, CDR minimizes the impact of downtime, reduces the potential for data loss, and allows for a phased migration, reducing overall project complexity. This is particularly vital for organizations that require constant data availability and those operating in environments with stringent compliance requirements.

Core Concept of CDR in Data Migration

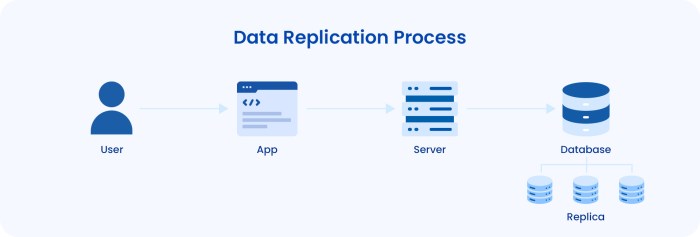

The fundamental principle of CDR revolves around capturing and transmitting data changes from a source system to a target system in a continuous manner. This process ensures that the target system always reflects the most current state of the source system’s data.

The basic mechanism can be described as: Source System Changes → Change Data Capture (CDC) → Replication Engine → Target System Updates.

The Change Data Capture (CDC) component plays a crucial role in identifying and capturing changes made to the source data. The replication engine then transmits these changes to the target system, applying them in a consistent and ordered manner. This process can occur in near real-time, with latency often measured in seconds or minutes, depending on the specific CDR implementation and network conditions.
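To make the Source → CDC → Replication Engine → Target flow concrete, here is a minimal Python sketch. The `ChangeEvent` shape, the `lsn` ordering field, and the `ReplicationEngine` class are hypothetical illustrations rather than any particular product's API; the target is a plain dictionary standing in for a database table.

```python
from dataclasses import dataclass
from typing import Any, Dict, Iterable, Optional


@dataclass(frozen=True)
class ChangeEvent:
    """One captured change, as a CDC component might emit it (hypothetical shape)."""
    lsn: int                              # position in the source change stream; defines apply order
    op: str                               # "insert", "update" or "delete"
    key: Any                              # primary key of the affected row
    row: Optional[Dict[str, Any]] = None  # new row image; None for deletes


class ReplicationEngine:
    """Applies captured changes to a target store in source commit order."""

    def __init__(self, target: Dict[Any, Dict[str, Any]]):
        self.target = target
        self.applied_lsn = 0  # highest position applied so far, used to skip replays

    def apply(self, events: Iterable[ChangeEvent]) -> None:
        for event in sorted(events, key=lambda e: e.lsn):
            if event.lsn <= self.applied_lsn:
                continue  # already applied (e.g. redelivered after a restart)
            if event.op in ("insert", "update"):
                self.target[event.key] = dict(event.row)
            elif event.op == "delete":
                self.target.pop(event.key, None)
            else:
                raise ValueError(f"unknown operation: {event.op}")
            self.applied_lsn = event.lsn


# Usage: captured changes arrive out of order and are applied in LSN order.
target: Dict[Any, Dict[str, Any]] = {}
engine = ReplicationEngine(target)
engine.apply([
    ChangeEvent(2, "update", 1, {"name": "Ada", "tier": "gold"}),
    ChangeEvent(1, "insert", 1, {"name": "Ada", "tier": "silver"}),
    ChangeEvent(3, "insert", 2, {"name": "Grace", "tier": "silver"}),
    ChangeEvent(4, "delete", 2),
])
print(target)  # {1: {'name': 'Ada', 'tier': 'gold'}}
```

Tracking the highest applied position (`applied_lsn`) lets the engine ignore events that are delivered twice, so a restart that replays part of the stream does not corrupt the target.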

Key Benefits of Using CDR Over Traditional Migration Methods

CDR offers several significant advantages over traditional data migration methods, particularly those involving bulk data transfers and downtime windows. These benefits contribute to improved business outcomes and reduced operational risks.

- Reduced Downtime: By continuously replicating data, CDR allows for a phased cutover to the target system. This minimizes the duration of downtime required for the migration, which is critical for businesses that operate 24/7. For instance, a financial institution migrating its core banking system can use CDR to minimize downtime, ensuring continuous access to critical financial data for its customers.

- Minimized Data Loss: CDR keeps the target system closely synchronized with the source data. In the event of a failure or unexpected issue during migration, the target system can be quickly brought online, minimizing data loss. For example, in healthcare, where patient data integrity is paramount, CDR helps ensure that the migrated system reflects the most recent patient records, reducing the risk of incorrect diagnoses or treatments.

- Improved Business Continuity: CDR enables organizations to maintain business operations during the migration process. The ability to fail over to the target system at any point in time ensures that critical business functions remain operational, even during the migration. A retail company, for instance, can use CDR to migrate its e-commerce platform while maintaining online sales, ensuring that customers can continue to place orders without interruption.

- Phased Migration Approach: CDR supports a phased migration strategy, allowing organizations to migrate data in manageable chunks. This approach reduces the complexity of the migration process and allows for testing and validation at each stage. For example, a large enterprise can migrate its customer relationship management (CRM) system in phases, migrating specific departments or geographical regions at a time, reducing the overall risk.

- Enhanced Data Integrity: CDR systems often incorporate mechanisms to ensure data consistency and integrity during replication. This includes features like conflict resolution and data validation, which ensure that data is accurately replicated to the target system. In a manufacturing environment, CDR can ensure that production data is consistently replicated, enabling real-time monitoring and control of manufacturing processes.

Understanding the Need for Data Migration

Data migration is a critical process in modern data management, driven by various technological advancements, business requirements, and strategic initiatives. It involves moving data from one system, storage, or format to another. Understanding the underlying reasons for data migration is crucial for effective planning, execution, and ensuring business continuity.

Common Scenarios That Necessitate Data Migration

Data migration is not a one-size-fits-all process; it is initiated by a variety of scenarios, each presenting unique challenges and requirements. These scenarios often overlap, requiring a comprehensive approach to data management.

- System Upgrades and Replacements: This is perhaps the most common driver for data migration. Organizations regularly upgrade their IT infrastructure, including databases, operating systems, and applications, to leverage newer features, improved performance, and enhanced security. The data stored in legacy systems must be migrated to the new platform. For example, an organization might migrate data from an outdated CRM system like Siebel to a modern cloud-based solution like Salesforce.

- Data Center Consolidation: Businesses may consolidate their data centers to reduce operational costs, improve efficiency, and enhance disaster recovery capabilities. This involves migrating data from multiple physical locations to a single, centralized data center or a cloud environment.

- Cloud Migration: The adoption of cloud computing has fueled a significant increase in data migration projects. Organizations migrate their data and applications to the cloud to benefit from scalability, cost savings, and increased flexibility. This could involve migrating on-premises databases to cloud-based databases such as Amazon RDS or Azure SQL Database.

- Mergers and Acquisitions (M&A): M&A activities often necessitate data migration to integrate data from different organizations. This involves merging disparate data structures, resolving data inconsistencies, and ensuring data compatibility across the combined entity.

- Application Modernization: Businesses modernize their applications to improve performance, enhance user experience, and support new business requirements. This may involve migrating data to a new database schema or a different application platform. One example is re-architecting a monolithic application into a microservices architecture.

- Data Center Relocation: Companies may choose to relocate their data centers for reasons such as lower operating costs, improved network connectivity, or disaster recovery purposes. This relocation will involve moving the data from one physical location to another.

Identifying the Business Drivers Behind Data Migration Projects

Data migration projects are often undertaken to achieve specific business objectives, and these drivers influence the scope, approach, and priorities of the migration process.

- Cost Reduction: Reducing IT infrastructure costs is a significant driver. This includes lowering hardware expenses, reducing operational overhead, and optimizing resource utilization. Migrating to cloud-based solutions can often result in substantial cost savings compared to on-premises infrastructure.

- Improved Efficiency and Productivity: Data migration can improve operational efficiency by streamlining processes, automating tasks, and eliminating manual data entry. This can lead to increased productivity and faster time-to-market.

- Enhanced Scalability and Flexibility: Modern IT environments, particularly cloud-based solutions, offer enhanced scalability and flexibility. Data migration enables organizations to scale their infrastructure up or down based on demand, ensuring optimal performance and cost-effectiveness.

- Better Data Governance and Compliance: Data migration projects can improve data governance and compliance by implementing robust data quality controls, standardizing data formats, and ensuring adherence to regulatory requirements. This is crucial for maintaining data integrity and meeting industry standards.

- Increased Agility and Innovation: Migrating to modern platforms and technologies enables organizations to be more agile and responsive to changing business needs. This allows them to innovate faster and capitalize on new opportunities.

- Improved Business Intelligence and Analytics: Consolidating data from various sources into a unified data warehouse or data lake enhances business intelligence and analytics capabilities. This provides a holistic view of the business, enabling better decision-making and improved insights.

Elaborating on the Challenges Associated with Data Migration Without CDR

Data migration without Continuous Data Replication (CDR) presents several challenges that can significantly impact the success, cost, and duration of the project. These challenges often lead to downtime, data loss, and operational disruptions.

- Downtime: Without CDR, the migration process typically involves a period of downtime during which the source system is unavailable for updates. This can be detrimental to business operations, especially for systems that require 24/7 availability. The duration of downtime depends on the size and complexity of the data being migrated.

- Data Loss and Corruption: Data migration without CDR increases the risk of data loss or corruption. If the migration process is interrupted or errors occur during the data transfer, critical data may be lost or become unusable. This can have serious consequences for business operations and decision-making.

- Complexity and Cost: Traditional data migration methods are often complex and require significant manual effort. This can lead to increased costs, longer project timelines, and a higher risk of errors. The complexity increases with the size and heterogeneity of the data.

- Testing and Validation: Thorough testing and validation are crucial to ensure data integrity and accuracy after migration. Without CDR, testing the migrated data against the source data is a time-consuming process that requires significant resources and expertise.

- Risk of Business Disruption: Without CDR, any unforeseen issues during the migration process can cause significant business disruption. This can include data inconsistencies, application downtime, and loss of critical business functions.

- Difficulty in Rollback: If the migration fails or unexpected issues arise, rolling back to the original system can be challenging and time-consuming without CDR. This can further exacerbate business disruption and data loss.

CDR Technologies and Approaches

Continuous Data Replication (CDR) technologies are essential for minimizing downtime and data loss during data migration. These technologies ensure that data is consistently synchronized between source and target systems, facilitating seamless transitions. The selection of an appropriate CDR technology depends on factors such as data volume, acceptable downtime, network bandwidth, and the complexity of the source and target environments.

CDR Technologies and Mechanisms

Various technologies implement CDR, each employing distinct mechanisms to capture, transmit, and apply data changes. These mechanisms are critical for achieving real-time or near-real-time data synchronization.

- Log-Based Replication: This approach utilizes database transaction logs to capture data changes. Changes are extracted from the logs and applied to the target system. This method offers high efficiency and minimal impact on the source system's performance because it reads logs the database already writes, rather than adding work to each transaction, and it runs asynchronously to the source workload.

- Block-Level Replication: This technology replicates data at the storage block level. It monitors changes at the storage level and replicates only the modified blocks. Block-level replication is suitable for replicating entire volumes and is often used in disaster recovery scenarios. It is frequently provided by storage arrays, although software implementations also exist, and it can be faster than log-based replication for initial synchronization.

- Trigger-Based Replication: This method uses database triggers to capture data modifications. Triggers are database objects that automatically execute a predefined set of actions in response to certain events on a table or view in a particular database. When a change occurs (e.g., an INSERT, UPDATE, or DELETE operation), the trigger captures the change and replicates it to the target system. This approach is database-specific and can introduce overhead on the source system, as each data modification requires trigger execution.

- Change Data Capture (CDC): CDC is a more general approach that identifies and tracks changes made to data in a database. It captures changes as they occur, providing a stream of modifications that can be applied to the target system. CDC can use various mechanisms, including log-based, trigger-based, or timestamp-based methods.

- File System Replication: This method replicates entire file systems. Changes made to files and directories are detected and propagated to the target system. It is often used for replicating unstructured data. It usually involves a combination of techniques, including real-time file synchronization and versioning.
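The trigger-based approach can be demonstrated end to end with Python's built-in `sqlite3` module. This is a sketch under simplifying assumptions: the `customers` and `change_log` tables, the trigger names, and the `replicate` function are illustrative, and both databases are in-memory SQLite instances rather than a real source and target.

```python
import sqlite3

# Source database: triggers copy every row change into a change_log table.
source = sqlite3.connect(":memory:")
source.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE change_log (
    seq  INTEGER PRIMARY KEY AUTOINCREMENT,  -- capture order
    op   TEXT NOT NULL,                      -- 'I', 'U' or 'D'
    id   INTEGER NOT NULL,
    name TEXT
);
CREATE TRIGGER customers_ins AFTER INSERT ON customers
BEGIN INSERT INTO change_log (op, id, name) VALUES ('I', NEW.id, NEW.name); END;
CREATE TRIGGER customers_upd AFTER UPDATE ON customers
BEGIN INSERT INTO change_log (op, id, name) VALUES ('U', NEW.id, NEW.name); END;
CREATE TRIGGER customers_del AFTER DELETE ON customers
BEGIN INSERT INTO change_log (op, id, name) VALUES ('D', OLD.id, NULL); END;
""")

target = sqlite3.connect(":memory:")
target.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")


def replicate(last_seq: int) -> int:
    """Apply change-log rows newer than last_seq to the target; return the new high-water mark."""
    rows = source.execute(
        "SELECT seq, op, id, name FROM change_log WHERE seq > ? ORDER BY seq", (last_seq,)
    ).fetchall()
    with target:  # one target transaction per batch of captured changes
        for seq, op, row_id, name in rows:
            if op in ("I", "U"):
                target.execute(
                    "INSERT OR REPLACE INTO customers (id, name) VALUES (?, ?)", (row_id, name)
                )
            else:
                target.execute("DELETE FROM customers WHERE id = ?", (row_id,))
            last_seq = seq
    return last_seq


# Usage: ordinary application writes fire the triggers; the replicator picks them up.
with source:
    source.execute("INSERT INTO customers (id, name) VALUES (1, 'Ada'), (2, 'Grace')")
    source.execute("UPDATE customers SET name = 'Ada L.' WHERE id = 1")
    source.execute("DELETE FROM customers WHERE id = 2")
position = replicate(0)
print(target.execute("SELECT id, name FROM customers").fetchall())  # [(1, 'Ada L.')]
```

Each application write also inserts a row into `change_log` inside the same transaction, which is the per-modification overhead that makes trigger-based capture heavier on the source than log-based capture.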

Comparison of CDR Approaches

The choice of CDR approach involves a trade-off between performance, data consistency, and implementation complexity. Each approach has its advantages and disadvantages, which must be carefully considered based on the specific migration requirements. The following table provides a comparative analysis.

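| Approach | Mechanism | Pros | Cons |
|---|---|---|---|
| Log-Based Replication | Uses database transaction logs to capture changes. | High efficiency; minimal impact on source performance. | Depends on database-specific log formats and on access to the logs. |
| Block-Level Replication | Replicates data at the storage block level. | Replicates entire volumes; can be fast for initial synchronization. | Not aware of database transactions; replicates every block write, whether or not it matters to the migration. |
| Trigger-Based Replication | Uses database triggers to capture data changes. | Captures each INSERT, UPDATE, and DELETE as it happens; does not require access to transaction logs. | Database-specific; adds overhead to every data modification on the source. |
| Change Data Capture (CDC) | Captures changes as they occur, using various mechanisms. | Provides a general stream of changes that can be applied to the target. | Overhead and latency depend on the underlying mechanism (log, trigger, or timestamp). |
| File System Replication | Replicates entire file systems. | Well suited to unstructured data. | Not transaction-aware, so copies of live database files may be inconsistent. |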

Preparing for CDR Implementation

Implementing Continuous Data Replication (CDR) for data migration is a complex undertaking that requires meticulous planning and execution. Successful implementation necessitates a thorough understanding of the source and target environments, accurate bandwidth and storage estimations, and a well-defined migration strategy. This section provides a structured approach to planning and executing a CDR-based migration project, ensuring a smooth and efficient data transfer process.

Organizing the Steps Involved in Planning a CDR-Based Migration Project

Planning a CDR-based migration project involves several distinct phases, each critical to the overall success. A phased approach, with clearly defined milestones and deliverables, helps mitigate risks and ensures that the migration progresses as planned.

- Assessment and Planning: This initial phase involves a comprehensive evaluation of the existing data environment, the target environment, and the specific migration requirements.

  - Data Analysis: Analyze the volume, velocity, and variety of data to be migrated. Identify critical data sets, dependencies, and any specific data transformation needs.

  - Environment Profiling: Conduct a detailed assessment of both the source and target environments, including hardware specifications, network infrastructure, operating systems, and existing applications.

  - Technology Selection: Choose the appropriate CDR technology based on the specific requirements of the migration, considering factors such as data volume, latency tolerance, and budget.

  - Migration Strategy Definition: Develop a detailed migration plan outlining the scope, timelines, resource allocation, and communication protocols.

- Infrastructure Setup: Configure the necessary hardware and software components for the CDR solution. This includes installing and configuring the CDR software, setting up network connectivity, and ensuring sufficient storage capacity.

- Data Preprocessing: Identify and address any data quality issues or inconsistencies in the source data. Perform data cleansing, transformation, and validation as needed.

- Security Configuration: Implement robust security measures to protect data during the migration process. This includes encrypting data in transit and at rest, configuring access controls, and monitoring for security threats.

- Full Copy/Seeding: Depending on the CDR technology and the data volume, this involves copying the entire dataset to the target environment. This can be a time-consuming process, especially for large datasets.

- Delta Synchronization: Once the initial data copy is complete, CDR technologies continuously replicate changes from the source to the target environment.

- Data Validation: Verify that all data has been successfully replicated to the target environment. Compare data sets between the source and target environments to identify any discrepancies.

- Performance Testing: Evaluate the performance of the CDR solution under various load conditions. Monitor metrics such as latency, throughput, and resource utilization.

- Failover Testing: Simulate a failover scenario to ensure that the target environment can seamlessly take over in the event of a source environment failure.

- Cutover Planning: Develop a detailed cutover plan outlining the steps required to switch over from the source to the target environment. This includes a rollback plan in case of issues.

- Cutover Execution: Execute the cutover plan, which may involve stopping applications on the source environment, redirecting traffic to the target environment, and verifying that all applications are functioning correctly.

- Post-Migration Monitoring: Continuously monitor the target environment to ensure optimal performance and data integrity.
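The cutover steps above (stop writes on the source, let replication catch up, validate, then redirect traffic, with a rollback path) can be sketched as follows. Everything here is hypothetical: `MigrationState`, `cut_over`, and the in-memory dictionaries stand in for real systems, and validation is a simple equality check where a real project would compare row counts or checksums.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class MigrationState:
    """Hypothetical in-memory stand-in for source, target and replication backlog."""
    source: Dict[int, str]
    target: Dict[int, str]
    backlog: List[Tuple[int, str]] = field(default_factory=list)  # captured, not yet applied
    writes_frozen: bool = False
    active: str = "source"  # where application traffic is routed

    def write(self, key: int, value: str) -> None:
        if self.writes_frozen:
            raise RuntimeError("source is read-only during cutover")
        self.source[key] = value
        self.backlog.append((key, value))  # change captured for replication

    def replicate_once(self, batch_size: int = 100) -> None:
        batch, self.backlog = self.backlog[:batch_size], self.backlog[batch_size:]
        for key, value in batch:
            self.target[key] = value


def cut_over(state: MigrationState, max_rounds: int = 1000) -> bool:
    """Freeze writes, drain the backlog, validate, then switch traffic; roll back on failure."""
    state.writes_frozen = True
    for _ in range(max_rounds):
        if not state.backlog:
            break
        state.replicate_once()
    if state.backlog or state.source != state.target:
        state.writes_frozen = False  # rollback: keep serving from the source
        return False
    state.active = "target"
    return True


# Usage: replication runs while the source stays live, then the cutover drains the rest.
state = MigrationState(source={}, target={})
for i in range(250):
    state.write(i, f"row-{i}")
state.replicate_once()
print(len(state.backlog))              # 150
print(cut_over(state), state.active)   # True target
```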

Creating a Checklist for Assessing the Source and Target Environments for Compatibility

Assessing the compatibility of the source and target environments is crucial for the success of a CDR-based migration. This checklist helps ensure that all relevant aspects of the environments are considered.

- Operating System Compatibility: Verify that the source and target operating systems are compatible with the chosen CDR technology. Consider the specific versions and any required patches or updates.

- Database Compatibility: Determine the compatibility of the source and target databases. This includes the database versions, data types, and any specific database features that are used.

- Network Connectivity: Assess the network infrastructure, including bandwidth, latency, and network security. Ensure that there is sufficient bandwidth to support the CDR process and that the network latency is acceptable.

- Storage Capacity: Verify that the target environment has sufficient storage capacity to accommodate the migrated data. Account for data growth during the migration process.

- Hardware Resources: Evaluate the hardware resources of both the source and target environments, including CPU, memory, and disk I/O. Ensure that the hardware can handle the demands of the CDR process.

- Security Considerations: Assess the security requirements of both environments. This includes implementing data encryption, access controls, and monitoring for security threats.

- Application Compatibility: Verify that the applications running on the target environment are compatible with the migrated data and the CDR technology.

- CDR Technology Compatibility: Confirm that the chosen CDR technology supports the source and target environments and meets the specific requirements of the migration.

- Data Format and Schema Compatibility: Assess whether the data formats and schemas are compatible between the source and target systems. Any required data transformations should be identified and planned for.

- Monitoring and Logging: Ensure that both environments have adequate monitoring and logging capabilities to track the progress of the migration and identify any issues.

Demonstrating How to Estimate the Bandwidth and Storage Requirements for CDR

Accurate estimation of bandwidth and storage requirements is essential for a successful CDR implementation. Underestimating these requirements can lead to performance bottlenecks and data loss.

Bandwidth Estimation: Bandwidth requirements are primarily determined by the rate of data change in the source environment. The formula below can be used to estimate the required bandwidth:

$$
\text{Bandwidth (Mbps)} = \frac{\text{Data Change Rate (GB/day)} \times 8 \times 1024}{24 \times 60 \times 60}
$$

Where:

- Data Change Rate is the average rate at which data changes in the source environment. This can be determined by monitoring data change rates over a period of time.

- 8 is the conversion factor from bytes to bits (gigabytes to gigabits).

- 24 × 60 × 60 = 86,400 is the number of seconds in a day.

- 1024 is the conversion factor from gigabits to megabits (use 1,000 for decimal units).

Example: If the data change rate is 100 GB per day, the required average bandwidth is approximately 9.5 Mbps (100 × 8 × 1024 / 86,400). In a real-world scenario, a company migrating its customer database might observe an average data change rate of 50 GB per day, which translates to roughly 4.7 Mbps. Because this figure is a daily average, it assumes changes are spread evenly over 24 hours; network overhead and spikes in change activity during peak hours mean the link should be provisioned with substantial headroom above it.
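The arithmetic can be checked with a small Python helper. The function name and the `mb_per_gb` parameter (1024 for binary units, 1000 for decimal) are illustrative; the result is an average and does not include protocol overhead, compression, or peak-hour headroom.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def required_bandwidth_mbps(change_rate_gb_per_day: float, mb_per_gb: int = 1024) -> float:
    """Average bandwidth (Mbps) needed to ship a daily change volume.

    Assumes changes are spread evenly over the day and ignores protocol
    overhead and compression.
    """
    megabits_per_day = change_rate_gb_per_day * 8 * mb_per_gb  # GB -> Gb -> Mb
    return megabits_per_day / SECONDS_PER_DAY


print(round(required_bandwidth_mbps(100), 2))  # 9.48
print(round(required_bandwidth_mbps(50), 2))   # 4.74
```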

Storage Estimation: Storage requirements are determined by the total volume of data to be migrated and the rate of data change. The formula for storage estimation is:

$$
\text{Target Storage} = \text{Source Data Volume} + (\text{Data Change Rate} \times \text{Time of Replication})
$$

Where:

- Source Data Volume is the total amount of data in the source environment.

- Data Change Rate is the average rate at which data changes in the source environment.

- Time of Replication is the duration of the CDR process.

Example: If the source data volume is 1 TB, the data change rate is 100 GB per day, and the replication process will take 30 days, the required storage on the target environment would be approximately 4 TB (1 TB + 100 GB/day × 30 days = 1 TB + 3 TB, treating 1 TB as 1,000 GB). Consider a financial institution migrating its transaction data, where the initial data volume is 5 TB and the data change rate is 200 GB per day.

If the replication period is 60 days, the target storage requirement is approximately 17 TB (5 TB + 200 GB/day × 60 days = 5 TB + 12 TB). This accounts for the initial data and the changes accumulated during the replication phase. The formula is a conservative upper bound: it assumes every change adds new data, whereas updates and deletes applied in place consume less. This highlights the importance of planning for data growth.
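A matching helper for the storage formula, again a hypothetical sketch that treats 1 TB as 1,000 GB to match the worked examples:

```python
def target_storage_tb(source_tb: float, change_gb_per_day: float, days: float,
                      gb_per_tb: int = 1000) -> float:
    """Upper-bound target storage: initial volume plus all changes accumulated
    over the replication window (assumes changes add data rather than overwrite it)."""
    return source_tb + (change_gb_per_day * days) / gb_per_tb


print(target_storage_tb(1, 100, 30))  # 4.0
print(target_storage_tb(5, 200, 60))  # 17.0
```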

Setting Up and Configuring CDR Tools

The successful implementation of Continuous Data Replication (CDR) hinges on the correct setup and configuration of the chosen tools. This phase involves several critical steps that dictate the performance, reliability, and overall effectiveness of the data migration process. Proper configuration ensures that data is accurately and efficiently replicated, minimizing downtime and data loss. The following sections detail the typical configuration steps, replication mode selection, and performance optimization strategies.

Typical Configuration Steps for Setting Up a CDR Tool

Setting up a CDR tool typically involves a series of sequential steps to ensure proper functionality. These steps, though varying slightly depending on the specific tool, generally follow a common pattern.

- Installation and Initial Setup: This involves installing the CDR tool software on both the source and target systems. The installation process often includes setting up the necessary user accounts, permissions, and network configurations to allow communication between the source and target databases. For instance, a tool might require specific operating system dependencies like particular versions of Java or .NET Framework.

- Source and Target System Configuration: This step involves configuring the CDR tool to connect to both the source and target databases. This includes providing connection details such as database server addresses, port numbers, usernames, and passwords. It also includes specifying the database schemas, tables, or individual objects to be replicated. For example, configuring a tool to replicate data from a PostgreSQL database to an Amazon RDS instance might require providing the RDS endpoint, database name, username, and password.

- Network Configuration and Security Settings: Ensuring secure and efficient data transfer across the network is crucial. This involves configuring firewalls, setting up secure communication channels (e.g., SSL/TLS encryption), and defining network bandwidth limitations. Proper network configuration prevents data breaches and ensures optimal replication speed. For example, a secure connection between the source and target systems might be established using a VPN tunnel or SSH tunneling to encrypt data in transit.

- Data Filtering and Transformation: Many CDR tools allow for filtering and transforming data during the replication process. This involves defining rules to exclude specific data, map data types, and transform data formats to align with the target system’s requirements. This step helps to optimize storage utilization and ensure data compatibility. For instance, a rule might be set to filter out specific columns or rows based on defined criteria or to convert date formats to match the target database’s expected format.

- Replication Strategy and Scheduling: Choosing the right replication strategy (e.g., real-time, periodic) and scheduling the replication tasks are crucial. This includes determining the frequency of replication, the time windows for replication, and the handling of conflicts. For example, if the replication is intended for near real-time synchronization, the replication frequency might be set to replicate every few seconds or minutes.

- Testing and Validation: Thorough testing is vital to verify that the CDR tool is functioning correctly and that data is being replicated accurately. This involves creating test data, replicating it, and comparing the data on the source and target systems. Testing helps to identify and resolve any issues before the full migration process. For instance, test cases could involve replicating a sample set of data and then comparing the checksums of the replicated data with the source data.

- Monitoring and Alerting: Implementing robust monitoring and alerting mechanisms is crucial for proactively identifying and addressing any replication issues. This involves configuring the CDR tool to monitor replication performance, detect errors, and send alerts to administrators. Monitoring helps to ensure that replication processes run smoothly and that any problems are quickly resolved. For example, the tool could be configured to monitor latency, throughput, and error rates and send email alerts if any of these metrics exceed predefined thresholds.
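Checksum-based validation, mentioned under Testing and Validation, can be sketched by hashing each row and comparing digests by primary key. The functions are hypothetical, rows are plain Python tuples with the primary key first, and the approach assumes values have been normalized to the same types on both sides (for example, decimals and timestamps), since equal values with different types would hash differently.

```python
import hashlib
from typing import Dict, Iterable, List, Tuple

Row = Tuple  # (primary_key, col1, col2, ...)


def row_digest(row: Row) -> str:
    """SHA-256 digest of one row; repr() keeps types distinct (1 vs '1')."""
    return hashlib.sha256(repr(row).encode("utf-8")).hexdigest()


def table_digests(rows: Iterable[Row]) -> Dict[object, str]:
    """Map each primary key (first column) to the digest of its full row."""
    return {row[0]: row_digest(row) for row in rows}


def compare(source_rows: Iterable[Row], target_rows: Iterable[Row]) -> Dict[str, List]:
    """Report keys missing on the target, extra on the target, or with differing content."""
    src, tgt = table_digests(source_rows), table_digests(target_rows)
    return {
        "missing_on_target": sorted(k for k in src if k not in tgt),
        "extra_on_target": sorted(k for k in tgt if k not in src),
        "mismatched": sorted(k for k in src if k in tgt and src[k] != tgt[k]),
    }


source_rows = [(1, "Ada", 100), (2, "Grace", 250), (3, "Alan", 75)]
target_rows = [(1, "Ada", 100), (2, "Grace", 200), (4, "Edsger", 10)]
print(compare(source_rows, target_rows))
# {'missing_on_target': [3], 'extra_on_target': [4], 'mismatched': [2]}
```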

Selecting the Appropriate Data Replication Mode

Choosing the correct data replication mode is critical for aligning the CDR process with specific business requirements and technical constraints. The selection process involves careful consideration of factors such as data consistency, latency tolerance, network bandwidth, and the nature of the source and target systems. The following are key replication modes and factors for consideration.

- Real-Time Replication (Synchronous or Asynchronous): This mode aims to replicate data changes immediately, typically with minimal latency. Synchronous replication ensures data consistency by waiting for confirmation from the target system before committing changes on the source. Asynchronous replication allows the source system to commit changes without waiting for the target, which can improve performance but lets the target lag behind the source; changes not yet replicated can be lost if the source fails.

The choice between synchronous and asynchronous replication often depends on the criticality of the data and the tolerance for potential data loss.

- Periodic Replication (Snapshot-based or Log-based): This mode replicates data at scheduled intervals, such as hourly, daily, or weekly. Snapshot-based replication copies the entire dataset at each interval, which can be resource-intensive. Log-based replication captures only the changes since the last replication, making it more efficient. Periodic replication is suitable for scenarios where real-time consistency is not critical and network bandwidth is limited.

- Transaction-based Replication: This mode replicates data changes based on database transactions. It ensures that entire transactions are replicated, maintaining data consistency. This mode is commonly used for replicating data between transactional systems.

- Change Data Capture (CDC): CDC captures and tracks changes made to the data in the source database. The CDR tool uses these changes to replicate data to the target system. CDC minimizes the impact on the source system by only capturing changed data, and it is often used for real-time or near real-time replication.

- Considerations for Selection:

  - Data Consistency Requirements: If data consistency is paramount, synchronous replication or transaction-based replication is generally preferred.

  - Latency Tolerance: If low latency is critical, real-time replication is the best option.

  - Network Bandwidth: If network bandwidth is limited, asynchronous or periodic replication may be more suitable.

  - Source and Target System Capabilities: The source and target systems' capabilities, such as support for CDC or transaction logging, also influence the selection of the replication mode.
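The difference between synchronous and asynchronous replication, and why asynchronous mode risks losing changes if the source fails, can be illustrated with a toy model. `Primary` and `Replica` are hypothetical classes, and the source "failure" is simulated by never calling `flush()`.

```python
from collections import deque
from typing import Deque, Dict, Tuple


class Replica:
    def __init__(self) -> None:
        self.data: Dict[str, int] = {}

    def apply(self, key: str, value: int) -> bool:
        self.data[key] = value
        return True  # acknowledgement back to the primary


class Primary:
    """Toy source system that replicates either synchronously or asynchronously."""

    def __init__(self, replica: Replica, synchronous: bool) -> None:
        self.data: Dict[str, int] = {}
        self.replica = replica
        self.synchronous = synchronous
        self.pending: Deque[Tuple[str, int]] = deque()  # async send queue

    def commit(self, key: str, value: int) -> None:
        if self.synchronous:
            # Wait for the replica's acknowledgement before committing locally.
            if not self.replica.apply(key, value):
                raise RuntimeError("replica did not acknowledge; commit aborted")
            self.data[key] = value
        else:
            # Commit locally at once; ship the change to the replica later.
            self.data[key] = value
            self.pending.append((key, value))

    def flush(self) -> None:
        while self.pending:
            self.replica.apply(*self.pending.popleft())


for mode in (True, False):
    replica = Replica()
    primary = Primary(replica, synchronous=mode)
    primary.commit("order-1", 10)
    primary.commit("order-2", 20)
    # The primary "fails" here, before any queued changes are flushed.
    print("sync" if mode else "async", sorted(replica.data))
# sync ['order-1', 'order-2']
# async []
```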

Best Practices for Optimizing the Performance of CDR Tools

Optimizing the performance of CDR tools is essential for minimizing latency, maximizing throughput, and ensuring data integrity. The following are best practices that can be implemented to enhance the efficiency and effectiveness of the CDR process.

- Network Optimization: Optimizing network performance is crucial for minimizing latency and maximizing throughput. This includes ensuring sufficient network bandwidth, minimizing network latency, and using compression techniques to reduce the amount of data transferred. For instance, using a dedicated network for data replication can help to avoid contention with other network traffic.

- Hardware Optimization: Ensure that both the source and target systems have sufficient hardware resources, such as CPU, memory, and disk I/O. Insufficient resources can lead to bottlenecks and slow down the replication process. For example, using high-performance storage systems, such as SSDs, can significantly improve replication performance.

- Data Filtering and Transformation: Applying data filtering and transformation rules can reduce the amount of data that needs to be replicated, improving performance. Filtering out unnecessary data and transforming data formats to align with the target system’s requirements can reduce the load on the replication process.

- Incremental Replication: Employing incremental replication techniques, such as CDC, can reduce the amount of data that needs to be replicated, especially in environments with frequent data changes. By replicating only the changes, the replication process becomes more efficient and faster.

- Batching and Parallelism: Implementing batching and parallelism can improve replication throughput. Batching involves grouping multiple data changes into a single replication unit, reducing the overhead of individual replication operations. Parallelism involves replicating data in parallel streams, allowing the tool to utilize multiple CPU cores and improve overall performance.

- Monitoring and Tuning: Continuously monitor the performance of the CDR tool and tune its configuration based on the observed metrics. Monitor metrics such as latency, throughput, and error rates to identify performance bottlenecks. Adjust the replication settings, such as the number of parallel threads or batch sizes, to optimize performance.

- Regular Maintenance and Updates: Keep the CDR tool and associated systems up-to-date with the latest patches and updates. Regular maintenance can help to resolve performance issues, improve stability, and ensure compatibility with other systems.
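Batching and parallelism interact with ordering, because changes to the same row must still be applied in order. The sketch below is a hypothetical design, not any particular tool's behavior. It routes each change to a lane by hashing its key, runs lanes on separate threads, and has each lane write fixed-size batches. `apply_batch`, the `(key, value)` change format, and the default lane and batch sizes are illustrative assumptions.

```python
import threading
import zlib
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Tuple

Change = Tuple[str, str]  # hypothetical minimal change record: (primary key, new value)


def partition_by_key(changes: List[Change], lanes: int) -> List[List[Change]]:
    """Send every change for a given key to the same lane so per-key order is kept."""
    buckets: List[List[Change]] = [[] for _ in range(lanes)]
    for key, value in changes:
        # crc32 is stable across processes, unlike Python's randomized str hash().
        buckets[zlib.crc32(key.encode()) % lanes].append((key, value))
    return buckets


def replicate(changes: List[Change], apply_batch: Callable[[List[Change]], None],
              lanes: int = 4, batch_size: int = 500) -> None:
    """Apply changes in parallel lanes; each lane writes its changes in ordered batches."""
    def run_lane(lane: List[Change]) -> None:
        for start in range(0, len(lane), batch_size):
            apply_batch(lane[start:start + batch_size])  # one target round trip per batch

    with ThreadPoolExecutor(max_workers=lanes) as pool:
        # list() drains the iterator so an exception in any lane is re-raised here.
        list(pool.map(run_lane, partition_by_key(changes, lanes)))


# Usage: an in-memory stand-in for the target, guarded by a lock because lanes run concurrently.
target: Dict[str, str] = {}
batch_sizes: List[int] = []
lock = threading.Lock()


def apply_batch(batch: List[Change]) -> None:
    with lock:
        batch_sizes.append(len(batch))
        for key, value in batch:
            target[key] = value


changes = [(f"k{i % 10}", str(i)) for i in range(1000)]
replicate(changes, apply_batch, lanes=4, batch_size=100)
print(target["k3"])  # 993: the last write to k3 wins because its lane preserved order
```

Partitioning by key keeps per-row order but not ordering across rows. If source transactions span several rows, a real tool must also preserve transaction boundaries, as in transaction-based replication, or accept brief cross-row inconsistency on the target.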

Data Synchronization and Initial Load

Data synchronization and the initial data load are critical phases in Continuous Data Replication (CDR) for migration. This process establishes the baseline dataset on the target environment and ensures that subsequent changes are accurately and efficiently replicated. The integrity and consistency of the initial data load are paramount for the success of the entire migration project.

Performing the Initial Data Load in CDR

The initial data load is the process of transferring the entire dataset from the source environment to the target environment before continuous replication begins. Several methods can be employed, and the optimal approach depends on factors such as data volume, network bandwidth, and the capabilities of the CDR tools.

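- Bulk Data Transfer: This method involves transferring the entire dataset in one go. It’s often the fastest approach, particularly for large datasets. However, it requires sufficient bandwidth and, unless it is paired with change capture (see Hybrid Approaches below), a downtime window or write freeze so that the copied data stays consistent. Tools like `rsync`, `robocopy` (for Windows), or database-specific utilities (e.g., `mysqldump` for MySQL, `pg_dump` for PostgreSQL) can be utilized for efficient bulk transfer. File-level tools such as `rsync` and `robocopy` suit flat files or stopped databases, not the data files of a running database. The choice of tool should consider factors such as data compression, transfer protocols, and the ability to handle large files.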

- Database-Specific Methods: Many databases offer built-in functionalities for data export and import, often optimized for performance. These methods leverage the database’s internal mechanisms for data storage and retrieval, which can lead to faster initial load times compared to generic file-based transfers. For instance, Oracle’s Data Pump or SQL Server’s Backup and Restore features are examples of this approach.

- Snapshotting: This technique creates a point-in-time snapshot of the source data, allowing the initial load to be performed from a consistent view of the data. This is particularly useful for databases with high transactional activity, as it minimizes the impact on the source system. The snapshot is then used to populate the target database, ensuring data consistency.

- Hybrid Approaches: Some implementations combine bulk transfer with change data capture (CDC) to minimize downtime. While the bulk data is transferred, the CDR tool captures ongoing changes at the source. After the bulk transfer is complete, the captured changes are applied to the target, bringing it up to date.
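The hybrid approach depends on one detail. The process must record the source log position at which the bulk copy's snapshot was taken, then apply only the changes after that position. The in-memory `SourceDB` class below is a hypothetical stand-in for a database with a change log, such as a write-ahead log or binary log. It is a sketch of the idea, not a real tool's API.

```python
from typing import Dict, List, Optional, Tuple

LogEntry = Tuple[int, str, Optional[str]]  # (log position, key, new value or None for a delete)


class SourceDB:
    """Hypothetical in-memory source that records every write in an ordered change log."""

    def __init__(self) -> None:
        self.rows: Dict[str, str] = {}
        self.log: List[LogEntry] = []

    def write(self, key: str, value: Optional[str]) -> None:
        self.log.append((len(self.log) + 1, key, value))
        if value is None:
            self.rows.pop(key, None)
        else:
            self.rows[key] = value

    def snapshot(self) -> Tuple[int, Dict[str, str]]:
        """Consistent point-in-time copy of the data plus the log position it reflects."""
        return len(self.log), dict(self.rows)

    def changes_since(self, position: int) -> List[LogEntry]:
        return [entry for entry in self.log if entry[0] > position]


def catch_up(source: SourceDB, target: Dict[str, str], from_position: int) -> int:
    """Apply every change logged after from_position; return the last position applied."""
    last = from_position
    for position, key, value in source.changes_since(from_position):
        if value is None:
            target.pop(key, None)
        else:
            target[key] = value
        last = position
    return last


# Usage: bulk-load a snapshot, let writes continue during the copy, then catch up.
source = SourceDB()
source.write("a", "1")
source.write("b", "2")
position, target = source.snapshot()  # bulk copy taken at log position 2
source.write("a", "10")               # writes that arrive while the copy is running
source.write("b", None)
position = catch_up(source, target, position)
print(position, target)  # 4 {'a': '10'}
```

In a real deployment, the source must retain its log from the recorded position until catch-up finishes. Otherwise, the captured changes will be incomplete.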

Ensuring Data Consistency During Initial Synchronization

Data consistency during the initial synchronization phase is crucial to avoid data loss or corruption. Several techniques are used to ensure that the data loaded on the target environment accurately reflects the state of the source data at the time of the initial load.

- Transaction Management: CDR tools employ transaction management to ensure that data changes are applied to the target system in a consistent and atomic manner. This involves grouping related changes into transactions, which are either fully committed or rolled back to maintain data integrity. The use of transactions prevents partial updates and ensures that the target data remains consistent.

- Data Validation: Data validation is performed to verify the integrity of the data transferred to the target system. This can involve checksum calculations, comparing row counts, or verifying data types and constraints. Validation ensures that the data on the target matches the source data, identifying any discrepancies that might arise during the transfer process.

- Conflict Resolution: In cases where changes occur on both the source and target systems during the initial load, conflict resolution mechanisms are employed. These mechanisms determine how to handle conflicting changes, such as overwriting the target data with the source data or merging the changes. The choice of conflict resolution strategy depends on the specific requirements of the migration project.

- Idempotency: Idempotency is the property of an operation that can be executed multiple times without changing the result beyond the initial application. In the context of CDR, this ensures that if a data change is applied multiple times due to network issues or other failures, the final result is the same as if the change was applied only once.
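This sketch shows one common way to make change application idempotent. It is an assumed approach, not one taken from any specific tool. Each target row stores the log position (LSN) of the change that produced it, and any change that is not newer is skipped. Deletes are kept as tombstones so that a replayed older insert cannot bring a deleted row back. The table layout and function name are hypothetical.

```python
from typing import Dict, Optional, Tuple

# Target rows keyed by primary key: (current value or None for a tombstone, LSN that produced it).
TargetTable = Dict[str, Tuple[Optional[str], int]]


def apply_idempotent(table: TargetTable, key: str, value: Optional[str], lsn: int) -> bool:
    """Apply a change only if it is newer than the row's stored LSN; return True if applied."""
    _, current_lsn = table.get(key, (None, 0))
    if lsn <= current_lsn:
        return False  # already applied or superseded, so replaying it changes nothing
    table[key] = (value, lsn)  # value None records a delete as a tombstone
    return True


# Usage: the same three changes are delivered twice, as after a retry.
table: TargetTable = {}
changes = [("c1", "alice", 1), ("c1", "alice@new", 2), ("c1", None, 3)]
for change in changes + changes:
    apply_idempotent(table, *change)
print(table)  # {'c1': (None, 3)}
```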

Data Synchronization Process: Step-by-Step

Step 1: Pre-load Preparation: Prepare the target environment, including setting up the database schema and configuring the CDR tool. Verify network connectivity and sufficient storage capacity on the target system.

Step 2: Data Extraction: Select the appropriate data extraction method (bulk transfer, database-specific methods, or snapshotting) based on the data volume and downtime requirements. Extract the data from the source system.

Step 3: Data Transformation (if applicable): Transform the data to match the target schema if necessary. This might involve data type conversions, field mappings, or other data cleansing operations. The transformation process is typically performed before loading data to the target environment.

Step 4: Data Loading: Load the extracted and transformed data into the target environment. Ensure the load process is efficient and optimized for performance. Consider the use of parallel loading techniques to speed up the process.

Step 5: Validation and Verification: Validate the loaded data to ensure its integrity and consistency. Compare row counts, checksums, or other data metrics between the source and target systems. Address any data discrepancies that are identified.

Step 6: Enable Change Data Capture (CDC): Configure the CDR tool to capture changes on the source system. CDC mechanisms continuously monitor the source database for data modifications, ensuring that the target environment is kept up-to-date. Capture must begin from a log position recorded no later than the start of extraction in Step 2. Otherwise, changes made while the data was being extracted and loaded are lost. Many CDR tools record this position automatically when they perform the initial load.

Step 7: Initial Synchronization and Delta Application: Apply the changes captured since that starting position. This brings the target system into synchronization with the source, after which continuous replication of ongoing changes begins.

Step 8: Verification and Testing: Perform thorough testing to verify that the data migration was successful and that the target environment is functioning correctly. This includes data integrity checks, performance testing, and application testing.

Monitoring and Managing CDR Processes

Effective monitoring and management are critical for ensuring the reliability, performance, and data integrity of continuous data replication (CDR) processes during migration. A proactive approach, incorporating comprehensive metrics, robust issue handling strategies, and automated alerting, minimizes downtime, data loss, and performance bottlenecks. The continuous observation of these processes allows for timely intervention and ensures the successful completion of data migration projects.

Crucial Metrics for Monitoring CDR Operations

Monitoring CDR operations necessitates tracking various key performance indicators (KPIs) to assess the system’s health and identify potential issues. These metrics, when analyzed collectively, provide a comprehensive view of the replication process.

- Latency: This metric represents the time delay between data changes occurring at the source and their reflection at the target. High latency can indicate network bottlenecks, processing delays, or resource constraints. For instance, a consistently high latency exceeding several seconds, observed in a database migration involving a large volume of transactions, could signal a need for infrastructure upgrades or optimization of the replication configuration.

- Throughput: Throughput measures the rate at which data is replicated, typically expressed in data volume per unit of time (e.g., megabytes per second or transactions per minute). Low throughput suggests that the replication process is not keeping pace with the rate of data changes at the source. A drop in throughput during peak business hours could indicate insufficient network bandwidth or CPU resources allocated to the replication process.

- Replication Lag: This represents the difference between the source and target data states. A growing replication lag indicates that the target is falling behind the source, potentially leading to data inconsistencies. The replication lag, measured in terms of the number of transactions or the volume of data, is a critical metric. A significant lag might necessitate adjusting the replication schedule or increasing the resources dedicated to the replication process.

- Error Rates: Monitoring error rates is essential for identifying and addressing issues that can disrupt the replication process. Errors can arise from various sources, including network connectivity problems, data corruption, or conflicts. Tracking the types and frequency of errors enables the identification of underlying causes and the implementation of appropriate corrective measures.

- Resource Utilization: Monitoring resource utilization, such as CPU, memory, disk I/O, and network bandwidth, on both source and target systems is crucial for identifying performance bottlenecks. High resource utilization can degrade replication performance and impact overall system performance. Analyzing resource utilization patterns helps in optimizing the replication process and scaling resources as needed.

- Data Integrity Checks: Regular data integrity checks verify that the data on the target system matches the data on the source system. These checks involve comparing checksums, row counts, or other data attributes to ensure data consistency. The frequency of data integrity checks should be based on the criticality of the data and the risk tolerance. For instance, in a financial services environment, data integrity checks might be performed more frequently than in a less critical application.
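Latency, throughput, and replication lag can be derived from per-change timestamps and log positions. The sketch below assumes a hypothetical `AppliedChange` record and dense, sequential log positions. It also assumes that source and target clocks are kept in sync, for example with NTP. With unsynchronized clocks, the latency figures are unreliable.

```python
from dataclasses import dataclass
from statistics import median
from typing import Dict, List


@dataclass
class AppliedChange:
    # Hypothetical per-change record; real tools expose similar data through their own interfaces.
    lsn: int             # source log position, assumed here to be a dense sequence number
    committed_at: float  # commit time on the source (epoch seconds)
    applied_at: float    # apply time on the target (epoch seconds)
    size_bytes: int


def replication_metrics(window: List[AppliedChange], window_s: float,
                        source_head_lsn: int, target_applied_lsn: int) -> Dict[str, float]:
    """Summarize one observation window of a replication stream."""
    latencies = [c.applied_at - c.committed_at for c in window]
    return {
        "median_latency_s": median(latencies) if latencies else 0.0,
        "max_latency_s": max(latencies, default=0.0),
        "throughput_changes_per_s": len(window) / window_s,
        "throughput_mb_per_s": sum(c.size_bytes for c in window) / window_s / 1_000_000,
        # Lag: changes committed on the source that the target has not applied yet.
        "lag_changes": float(source_head_lsn - target_applied_lsn),
    }


window = [AppliedChange(101, 0.0, 0.4, 2_000),
          AppliedChange(102, 0.5, 1.5, 3_000),
          AppliedChange(103, 1.0, 1.2, 5_000)]
metrics = replication_metrics(window, window_s=2.0, source_head_lsn=110, target_applied_lsn=103)
print(metrics["lag_changes"], metrics["throughput_changes_per_s"])  # 7.0 1.5
```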

Strategies for Handling Potential Issues During the Replication Process

Addressing potential issues proactively is paramount to maintaining the integrity and performance of CDR. Implementing robust strategies for issue detection, diagnosis, and resolution minimizes downtime and data loss.

- Proactive Monitoring and Alerting: Implementing comprehensive monitoring tools that track critical metrics and trigger alerts based on predefined thresholds is fundamental. For example, setting up alerts for replication lag exceeding a specific threshold or for error rates exceeding a certain percentage enables timely intervention.

- Error Handling and Resolution: Defining clear procedures for handling various types of errors is crucial. This includes logging errors, identifying the root cause, and implementing corrective actions. For instance, if a network connectivity error occurs, the system should automatically retry the replication or notify the administrator.

- Data Conflict Resolution: Implementing mechanisms to resolve data conflicts that may arise during replication is essential. This might involve using conflict resolution policies or manually resolving conflicts based on predefined rules. For example, if a data conflict occurs during a data migration between two systems, the system could be configured to prioritize updates from the source system or to automatically merge the changes.

- Failover and Recovery Mechanisms: Designing failover and recovery mechanisms is critical for business continuity. If the primary replica or a replication component fails, the system should automatically switch to a standby replica or backup and resume replication from the last successfully applied position.

- Performance Optimization: Regularly reviewing and optimizing the replication process to improve performance is essential. This might involve optimizing network configurations, increasing resources, or adjusting replication parameters. For example, optimizing the network configuration can involve using dedicated network links or optimizing the data transfer protocols.
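Transient errors, such as the network connectivity failures mentioned above, are usually handled with automatic retries. These retries are typically paired with exponential backoff so that a struggling network or target is not flooded with repeated requests. This is a minimal, generic sketch. The retryable exception types, attempt limit, and delays are assumptions to be tuned for a given tool.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry_with_backoff(operation: Callable[[], T],
                       retryable: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
                       max_attempts: int = 5,
                       base_delay_s: float = 0.5,
                       max_delay_s: float = 30.0,
                       sleep: Callable[[float], None] = time.sleep) -> T:
    """Run operation, retrying transient failures with capped exponential backoff and jitter.

    Non-retryable exceptions propagate immediately; after max_attempts the last
    retryable error is re-raised so the caller can alert an administrator.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 1
    while True:
        try:
            return operation()
        except retryable:
            if attempt >= max_attempts:
                raise  # give up: let the caller log the error and notify an administrator
            delay = min(max_delay_s, base_delay_s * 2 ** (attempt - 1))
            sleep(delay * random.uniform(0.5, 1.0))  # jitter keeps clients from retrying in lockstep
            attempt += 1


# Usage: an operation that fails twice with a network error, then succeeds.
calls = {"n": 0}


def flaky_send() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("network blip")
    return "batch acknowledged"


print(retry_with_backoff(flaky_send, sleep=lambda s: None))  # batch acknowledged
```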

Techniques for Automating Monitoring and Alerting Mechanisms

Automation plays a pivotal role in the efficient monitoring and management of CDR processes. Automating monitoring and alerting mechanisms reduces the need for manual intervention and enables proactive issue resolution.

- Using Monitoring Tools: Utilizing specialized monitoring tools, such as Prometheus, Grafana, Nagios, or Zabbix, to collect, analyze, and visualize key metrics. These tools provide dashboards, alerting capabilities, and reporting features. For example, Prometheus can be used to collect metrics from various sources, and Grafana can be used to visualize the metrics in a customizable dashboard.

- Setting Thresholds and Alerts: Defining appropriate thresholds for key metrics and configuring alerts to trigger when these thresholds are breached. For instance, setting a threshold for replication lag and configuring an alert to notify administrators when the lag exceeds a certain time or data volume.

- Automated Notification Systems: Implementing automated notification systems, such as email, SMS, or messaging platforms, to alert administrators of critical events. These systems should provide relevant information about the issue, including the metric that triggered the alert and the time the alert was generated.

- Scripting and Automation: Employing scripting languages, such as Python or Bash, to automate monitoring tasks, such as checking the status of the replication process, verifying data integrity, and generating reports. For example, a script can be used to automatically check the status of the replication process and send an email notification if the process fails.

- Integration with Orchestration Tools: Integrating monitoring and alerting systems with orchestration tools, such as Ansible or Terraform, to automate remediation actions. This allows for automatic scaling of resources, failover, and other corrective measures. For example, if the CPU utilization on a server exceeds a certain threshold, the orchestration tool could automatically provision additional resources.
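As a small example of scripted alerting, the following sketch raises an alert only when a metric breaches its threshold for several consecutive checks. This avoids paging on momentary spikes and is similar in spirit to the `for` clause in Prometheus alerting rules. `ThresholdRule`, the metric name, and the limits are hypothetical. Delivering the message by email, SMS, or chat is left to the notification system.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Dict, List


@dataclass
class ThresholdRule:
    """Hypothetical rule: alert when `metric` exceeds `limit` for `for_samples` checks in a row."""
    metric: str
    limit: float
    for_samples: int = 3

    def __post_init__(self) -> None:
        # Keep only the most recent breach/no-breach results.
        self._recent: Deque[bool] = deque(maxlen=self.for_samples)

    def evaluate(self, value: float) -> bool:
        self._recent.append(value > self.limit)
        return len(self._recent) == self.for_samples and all(self._recent)


def check(rules: List[ThresholdRule], sample: Dict[str, float]) -> List[str]:
    """Evaluate one round of metric samples and return messages for sustained breaches."""
    alerts = []
    for rule in rules:
        value = sample.get(rule.metric)
        if value is not None and rule.evaluate(value):
            alerts.append(f"{rule.metric}={value} above {rule.limit} "
                          f"for {rule.for_samples} consecutive checks")
    return alerts


# Usage: replication lag in seconds, checked once per polling interval.
rules = [ThresholdRule("replication_lag_s", limit=60.0)]
for lag in [75, 90, 30, 80, 85, 95]:
    for message in check(rules, {"replication_lag_s": lag}):
        print(message)  # fires once, on the final sample
```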

Testing and Validation

Rigorous testing and validation are critical phases in a continuous data replication (CDR) migration to ensure data integrity, performance, and business continuity. These activities confirm that the migrated system functions as intended and can withstand potential disruptions. The following sections detail the procedures for validating data integrity, conducting performance testing, and simulating failover scenarios.

Validating Data Integrity After Migration

Data integrity validation confirms that data has been accurately replicated (and, where applicable, transformed) to the target system without loss or corruption. This process is crucial to ensure the migrated system’s reliability. To validate data integrity:

- Data Comparison: Implement data comparison techniques to verify the accuracy of the data migration.

- Row-by-Row Comparison: Compare individual rows between the source and target databases. This method offers the highest level of accuracy but can be resource-intensive, especially for large datasets. Per-row checksums or built-in database comparison utilities can reduce the cost.

- Summary-Level Comparison: Compare aggregated data, such as sums, counts, and averages, to quickly identify discrepancies. This approach is faster than row-by-row comparison but might not pinpoint specific data errors.

- Data Profiling: Data profiling analyzes the characteristics of the data to identify anomalies, inconsistencies, and data quality issues.

- Statistical Analysis: Analyze data distributions, identify outliers, and validate data ranges to ensure data accuracy and consistency.

- Data Quality Rules: Apply predefined data quality rules to check for completeness, validity, and consistency.

- Test Data Selection: Use a combination of test data selection methodologies to validate data integrity effectively.

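- Random Sampling: Select a random subset of records for detailed validation. A sample gives a statistically representative check without comparing every row, but it can miss rare errors, so combine it with full row counts or checksums.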

- Boundary Testing: Test the data at the boundaries of the expected ranges to identify potential issues.

- Edge Case Testing: Test with extreme or unusual data values to validate the system’s handling of exceptional scenarios.

- Data Validation Tools: Employ data validation tools to automate the data comparison and profiling processes. These tools can significantly improve the efficiency and accuracy of data validation. Examples include:

- Database Comparison Tools: Tools like DB Comparer or Oracle SQL Developer provide functionalities to compare data between databases.

- Data Quality Tools: Tools such as Informatica Data Quality or IBM InfoSphere Information Analyzer offer comprehensive data profiling and validation capabilities.

- Reconciliation Reports: Generate detailed reconciliation reports that highlight any data discrepancies or errors. These reports should include information about the source and target data, the comparison method used, and the identified discrepancies.

- Automated Testing: Automate the data validation process to ensure that data integrity is consistently maintained. Implement automated tests that run regularly and generate alerts if any discrepancies are found.
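Summary-level and row-by-row comparison can be combined. Compare cheap fingerprints (row count plus a hash) first, and fall back to a keyed row-by-row diff only when they disagree. The sketch uses two in-memory SQLite databases as stand-ins for source and target, and the table and column names are hypothetical. Hashing `repr(row)` assumes both sides return identical Python values. A heterogeneous migration would therefore need to normalize types (dates, decimals, character sets) before hashing.

```python
import hashlib
import sqlite3
from typing import Any, Dict, List, Tuple


def table_fingerprint(conn: sqlite3.Connection, table: str, key: str) -> Tuple[int, str]:
    """Summary-level check: row count plus a SHA-256 over all rows in primary-key order."""
    digest = hashlib.sha256()
    count = 0
    # Identifiers are interpolated into SQL, so they must come from trusted configuration.
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key}"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def rows_by_key(conn: sqlite3.Connection, table: str, key: str) -> Dict[Any, tuple]:
    cur = conn.execute(f"SELECT * FROM {table}")
    key_index = [column[0] for column in cur.description].index(key)
    return {row[key_index]: row for row in cur}


def diff_rows(src: sqlite3.Connection, dst: sqlite3.Connection,
              table: str, key: str) -> Dict[str, List[Any]]:
    """Row-by-row check keyed on the primary key; precise but costly on large tables."""
    a, b = rows_by_key(src, table, key), rows_by_key(dst, table, key)
    return {
        "missing_on_target": sorted(a.keys() - b.keys()),
        "extra_on_target": sorted(b.keys() - a.keys()),
        "mismatched": sorted(k for k in a.keys() & b.keys() if a[k] != b[k]),
    }


# Usage: the target is missing row 3 and has a case change in row 2.
src = sqlite3.connect(":memory:")
dst = sqlite3.connect(":memory:")
for db in (src, dst):
    db.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT)")
src.executemany("INSERT INTO customers VALUES (?, ?)",
                [(1, "a@x.com"), (2, "b@x.com"), (3, "c@x.com")])
dst.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "a@x.com"), (2, "B@x.com")])

if table_fingerprint(src, "customers", "id") != table_fingerprint(dst, "customers", "id"):
    print(diff_rows(src, dst, "customers", "id"))
    # {'missing_on_target': [3], 'extra_on_target': [], 'mismatched': [2]}
```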

Conducting Performance Testing of the Migrated System

Performance testing assesses whether the migrated system can handle the expected workload and identifies potential bottlenecks. This testing is essential to ensure that the migrated system meets performance requirements. To conduct performance testing:

- Define Performance Metrics: Establish clear performance metrics to measure the system’s performance. These metrics should align with business requirements.

- Response Time: The time it takes for the system to respond to user requests.

- Throughput: The number of transactions processed per unit of time.

- Resource Utilization: The utilization of system resources such as CPU, memory, and disk I/O.

- Error Rate: The rate at which errors occur during processing.

- Create Test Scenarios: Develop realistic test scenarios that simulate the expected user load and system operations.

- Load Testing: Test the system under expected and peak workloads to verify that it meets its performance targets.

- Stress Testing: Push the system beyond its expected load to find its breaking point and assess its stability, resilience, and recovery.

- Endurance Testing: Test the system over an extended period to evaluate its long-term performance.

- Use Performance Testing Tools: Utilize performance testing tools to simulate user activity, measure performance metrics, and identify bottlenecks.

- LoadRunner: A widely used performance testing tool for simulating user load and measuring performance.

- JMeter: An open-source performance testing tool for testing various applications and protocols.

- Gatling: A modern, open-source load testing tool that provides performance metrics and real-time monitoring.

- Monitor System Resources: Monitor system resources during performance testing to identify potential bottlenecks. Track CPU usage, memory consumption, disk I/O, and network traffic.

- Analyze Test Results: Analyze the test results to identify performance bottlenecks and areas for optimization.

- Identify Bottlenecks: Determine the components or processes that are causing performance issues.

- Optimize Performance: Implement optimizations to improve performance, such as database tuning, code optimization, and hardware upgrades.

- Document Test Results: Document the performance testing results, including the test scenarios, performance metrics, and any identified issues. This documentation provides a basis for future performance testing.
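Dedicated tools such as JMeter or Gatling are the right choice for real load tests, but a quick smoke check of response time and throughput can also be scripted. This sketch assumes `operation` is a callable that issues one request against the migrated system. That callable is hypothetical here, and the example uses a sleep instead. The script reports throughput and p50 and p95 latency computed with `statistics.quantiles`.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import quantiles
from typing import Callable, Dict


def measure(operation: Callable[[], object], requests: int = 200,
            concurrency: int = 10) -> Dict[str, float]:
    """Run `operation` `requests` times across `concurrency` threads and summarize timings."""
    if requests < 2:
        raise ValueError("need at least two requests to compute percentiles")

    def timed_call(_: int) -> float:
        start = time.perf_counter()
        operation()
        return time.perf_counter() - start

    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(timed_call, range(requests)))
    wall_s = time.perf_counter() - wall_start

    cuts = quantiles(latencies, n=100)  # 99 cut points: index 49 is p50, index 94 is p95
    return {
        "throughput_rps": requests / wall_s,
        "p50_ms": cuts[49] * 1000,
        "p95_ms": cuts[94] * 1000,
        "max_ms": max(latencies) * 1000,
    }


# Usage with a stand-in request that takes about 10 ms.
stats = measure(lambda: time.sleep(0.01), requests=100, concurrency=10)
print({name: round(value, 1) for name, value in stats.items()})
```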

Simulating Failover Scenarios to Ensure Business Continuity

Simulating failover scenarios is crucial to ensure business continuity and to confirm that the migrated system can recover from failures. This testing validates the effectiveness of the failover mechanisms and confirms that downtime stays within acceptable limits. To simulate failover scenarios:

- Define Failover Scenarios: Identify potential failure scenarios that could impact the system.

- Hardware Failure: Simulate the failure of a server or storage device.

- Network Outage: Simulate a network connectivity issue.

- Software Failure: Simulate the failure of an application or database service.

- Data Center Outage: Simulate a complete data center outage.

- Implement Failover Mechanisms: Implement failover mechanisms to ensure that the system can automatically switch to a backup system or replica in the event of a failure.

- Database Replication: Implement database replication to create a standby database that can take over in case of a primary database failure.

- Load Balancing: Use load balancers to distribute traffic across multiple servers and automatically redirect traffic to healthy servers in case of a failure.

- High Availability Clusters: Utilize high-availability clusters to provide redundancy and automatic failover for critical services.

- Test Failover Procedures: Test the failover procedures to ensure that the system can successfully switch to the backup system or replica.

- Manual Failover: Manually trigger a failover to test the failover procedures.

- Automated Failover: Simulate a failure and verify that the system automatically fails over to the backup system.

- Failback Testing: Test the process of failing back to the primary system after the original issue is resolved.

- Measure Downtime: Measure the downtime during the failover and failback processes to ensure that the system meets the required recovery time objectives (RTOs).

- Document Failover Procedures: Document the failover procedures, including the steps to trigger a failover, the expected downtime, and the steps to verify the system’s functionality after the failover.

- Regularly Test Failover Scenarios: Conduct regular failover tests to ensure that the failover mechanisms are functioning correctly and that the system can recover from failures.
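One simple way to measure downtime during a failover drill is to probe the service at a fixed interval and compute the longest run of failed probes. The sketch below works on recorded `(timestamp, ok)` samples, and the probe data and the 30-second RTO are hypothetical. Its resolution is limited by the probe interval, so probe more often than the RTO you need to verify.

```python
from typing import List, Optional, Tuple

Probe = Tuple[float, bool]  # (seconds since the drill started, did the service respond?)


def observed_downtime(probes: List[Probe]) -> float:
    """Longest span from a failed probe to the next successful one, in seconds."""
    longest = 0.0
    outage_start: Optional[float] = None
    ordered = sorted(probes)
    for ts, ok in ordered:
        if not ok and outage_start is None:
            outage_start = ts             # first failure of a new outage
        elif ok and outage_start is not None:
            longest = max(longest, ts - outage_start)
            outage_start = None           # service is back
    if outage_start is not None:
        # Outage still in progress when the drill ended: count up to the last probe.
        longest = max(longest, ordered[-1][0] - outage_start)
    return longest


RTO_SECONDS = 30.0  # hypothetical recovery time objective
drill = [(0, True), (5, True), (10, False), (15, False), (20, False), (25, True), (30, True)]
downtime = observed_downtime(drill)
print(f"downtime {downtime:.0f}s, within RTO: {downtime <= RTO_SECONDS}")
# downtime 15s, within RTO: True
```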

CDR in Different Migration Scenarios

Continuous Data Replication (CDR) is a versatile technique, applicable across various migration scenarios to minimize downtime, reduce risk, and ensure data integrity. Its adaptability stems from its ability to synchronize data changes in near real-time, making it a critical component in modern data migration strategies. This section explores the application of CDR in cloud migrations, database migrations, and application modernization projects.

CDR in Cloud Migrations

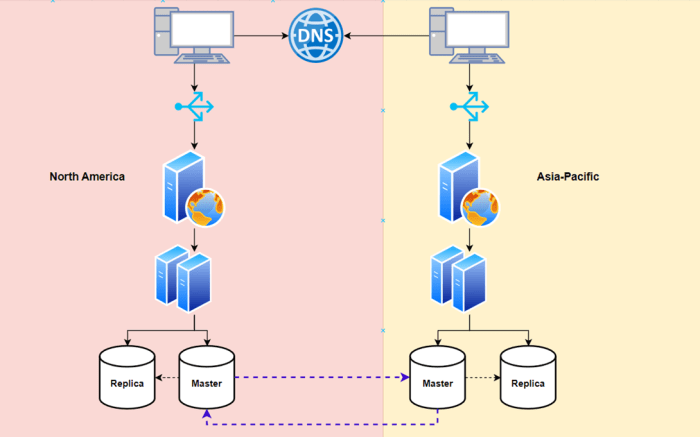

Cloud migrations often involve transferring large datasets and complex applications from on-premises infrastructure to cloud environments. CDR facilitates a smooth transition by continuously replicating data to the cloud, enabling businesses to maintain operations during the migration process. The implementation of CDR in cloud migration typically involves these steps:

- Initial Data Synchronization: An initial full data load transfers the entire dataset from the source environment to the target cloud environment. This establishes the baseline for replication.

- Ongoing Replication: After the initial load, CDR tools continuously monitor and replicate data changes, including inserts, updates, and deletes, from the source to the cloud. This ensures data consistency between the on-premises and cloud environments.

- Cutover Planning: Once the data in the cloud environment is synchronized with the source environment, a cutover plan is executed. This plan involves switching application workloads to the cloud environment, minimizing downtime.

- Testing and Validation: Before cutover, rigorous testing and validation are performed to ensure data integrity, application functionality, and performance in the cloud environment.

A practical example is a retail company migrating its customer relationship management (CRM) system to a cloud-based platform. By employing CDR, the company can replicate customer data, including contact information, purchase history, and interactions, to the cloud. This allows the company to maintain access to the CRM system throughout the migration, avoiding service disruptions and ensuring business continuity. This also provides the opportunity to perform phased migrations, where some applications or services can be migrated while others remain on-premises.
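A cutover like the one described above is often sequenced in four steps. First, stop writes on the source. Next, wait for replication lag to drain to zero. Then validate, and finally redirect traffic. If anything fails before the switch, source writes are re-enabled. The sketch below encodes that sequence with injected callables (`freeze_writes`, `replication_lag`, and so on). All of these are hypothetical hooks into whatever tooling a project uses.

```python
import time
from typing import Callable


def cut_over(freeze_writes: Callable[[], None],
             unfreeze_writes: Callable[[], None],
             replication_lag: Callable[[], int],
             validate: Callable[[], bool],
             switch_traffic: Callable[[], None],
             drain_timeout_s: float = 300.0,
             poll_s: float = 1.0) -> bool:
    """Freeze source writes, drain replication, validate, then switch traffic.

    Returns False (with source writes re-enabled) if the target does not catch
    up before the timeout or if validation fails.
    """
    freeze_writes()
    deadline = time.monotonic() + drain_timeout_s
    while replication_lag() > 0:  # changes committed on the source but not yet applied
        if time.monotonic() >= deadline:
            unfreeze_writes()
            return False
        time.sleep(poll_s)
    if not validate():
        unfreeze_writes()
        return False
    switch_traffic()  # applications now use the cloud target
    return True


# Usage with stand-in hooks: lag drains from 3 to 0, and validation passes.
log = []
lag_readings = iter([3, 1, 0])
ok = cut_over(freeze_writes=lambda: log.append("freeze"),
              unfreeze_writes=lambda: log.append("unfreeze"),
              replication_lag=lambda: next(lag_readings),
              validate=lambda: True,
              switch_traffic=lambda: log.append("switch"),
              poll_s=0.0)
print(ok, log)  # True ['freeze', 'switch']
```

After a successful switch, the source intentionally stays read-only because the cloud target is now the system of record.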

CDR in Database Migrations

Database migrations, whether moving to a new database system or upgrading an existing one, benefit significantly from CDR. Because changes are replicated continuously to the target database, both downtime and the risk of data loss are minimized. The use of CDR in database migrations can be explained through these points:

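- Heterogeneous Database Migrations: CDR can facilitate migrations between different database platforms (e.g., Oracle to PostgreSQL, SQL Server to Amazon Aurora). CDR tools typically convert data types as changes are replicated. However, converting the schema itself (tables, indexes, stored procedures) often requires a separate tool or manual work.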

- Homogeneous Database Migrations: For migrations within the same database system (e.g., upgrading an Oracle database), CDR replicates data to the new database version, minimizing downtime.

- Downtime Reduction: CDR significantly reduces downtime by continuously replicating data to the target database. During the cutover, only the delta changes need to be applied, resulting in a shorter downtime window.

- Data Integrity: CDR ensures data integrity by replicating changes in a consistent manner. Data validation checks can be implemented to confirm that data is correctly transferred to the target database.

For example, consider a financial institution migrating its core banking system from an older database to a newer, more scalable database. CDR would replicate transaction data, account information, and other critical data in near real-time to the new database. During the cutover, the institution would only need to apply the final delta changes, allowing for a seamless and minimal-downtime migration.

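Because the source database remains in service and up to date until cutover, it also provides a fallback. If problems arise during the migration, the cutover can be postponed without losing data.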

CDR in Application Modernization Projects

Application modernization projects often involve migrating data alongside application code, making CDR a vital component. CDR helps ensure that data remains synchronized between the legacy application and the modernized application. The integration of CDR into application modernization projects has these key aspects:

- Data Synchronization: CDR ensures that data is synchronized between the legacy and modernized applications, enabling the modernized application to access the latest data.

- Phased Rollout: CDR supports a phased rollout approach, where a portion of the application functionality can be modernized while the rest remains on the legacy system.

- Data Transformation: CDR can incorporate data transformation capabilities to handle changes in data structures and formats between the legacy and modernized applications.

- Risk Mitigation: CDR mitigates the risk of data loss or inconsistencies during application modernization.

An example is an e-commerce company modernizing its legacy order management system. CDR replicates order data, customer information, and inventory details from the legacy system to the new, modernized system. This enables the company to gradually migrate its users to the new system without interrupting order processing or customer service. The continuous data synchronization provided by CDR ensures that the modernized system has access to the latest data, minimizing disruptions and ensuring business continuity.
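In the order management example above, the data transformation step might convert each replicated legacy row into the new schema. The legacy column names (`ORD_NO`, `STAT_CD`, and so on), formats, and status codes below are hypothetical. The transform is written as a pure function applied to every replicated change, so the initial load and ongoing replication produce identical results.

```python
from datetime import datetime
from typing import Any, Dict

# Hypothetical mapping from legacy single-letter status codes to the new schema's values.
STATUS_MAP = {"O": "open", "S": "shipped", "C": "cancelled"}


def transform_order(legacy: Dict[str, Any]) -> Dict[str, Any]:
    """Convert one legacy order row (hypothetical layout) into the modernized schema."""
    return {
        "order_id": int(legacy["ORD_NO"]),        # zero-padded text to integer
        "customer_id": int(legacy["CUST_NO"]),
        # Legacy dates are YYYYMMDD strings; the new schema uses ISO 8601 dates.
        "ordered_on": datetime.strptime(legacy["ORD_DT"], "%Y%m%d").date().isoformat(),
        # Legacy codes are fixed-width and may carry trailing spaces.
        "status": STATUS_MAP[legacy["STAT_CD"].strip()],
        # Amounts are kept as integer cents to avoid floating-point rounding.
        "total_cents": int(legacy["AMT"]),
    }


row = {"ORD_NO": "00042", "CUST_NO": "7", "ORD_DT": "20240315", "STAT_CD": "S ", "AMT": "000012345"}
print(transform_order(row))
# {'order_id': 42, 'customer_id': 7, 'ordered_on': '2024-03-15', 'status': 'shipped', 'total_cents': 12345}
```

Unknown status codes raise a `KeyError` on purpose. Failing loudly on unexpected legacy data is usually safer than silently writing a guess into the new system.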

Security Considerations for CDR

Continuous Data Replication (CDR) inherently introduces security challenges due to the movement and storage of sensitive data across different environments. Protecting data integrity, confidentiality, and availability during migration is paramount. A robust security strategy is crucial to mitigate risks associated with unauthorized access, data breaches, and service disruptions. Implementing appropriate security measures throughout the CDR process is vital to ensure a secure and successful data migration.

Security Implications of CDR

Data replication introduces several security implications that must be carefully addressed. The process involves transporting data, potentially across networks, from a source system to a target system. This transit presents vulnerabilities that malicious actors can exploit. The target environment must also be secured, as it will hold a copy of the sensitive data.

Data breaches are a significant concern. If the replication process or the target environment is compromised, unauthorized individuals could gain access to sensitive data.

Furthermore, data loss or corruption can occur due to unauthorized modifications or replication errors. Compliance with data privacy regulations, such as GDPR or HIPAA, is also essential, necessitating rigorous security controls to protect personal information. Finally, ensuring the availability of the replication service is critical; a denial-of-service (DoS) attack could disrupt the replication process and prevent data from being migrated.

Securing Data During Replication

Securing data during replication requires a layered approach that combines encryption, access controls, and monitoring to protect the confidentiality and integrity of data both in transit and at rest.

Encryption is the foundation of this approach. Use strong encryption algorithms, such as AES-256, to encrypt data before transmission and when it is stored on the target system.

Implement secure communication channels, such as TLS/SSL, to encrypt data during transit. Access controls are also critical. Implement robust authentication and authorization mechanisms to restrict access to the replication tools and data. Use role-based access control (RBAC) to grant users only the necessary privileges. Regularly review and update access controls to maintain security.

Monitoring and auditing are crucial to detect and respond to security incidents. Implement comprehensive logging and monitoring to track replication activity, access attempts, and system events. Regularly review logs and audit trails to identify suspicious activity.
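For encryption in transit, the replication client should verify the server certificate and refuse outdated protocol versions. A minimal sketch with Python's standard `ssl` module follows. The host, port, and optional private CA file are assumptions. Encryption at rest (for example, AES-256 at the storage layer) is configured separately on the target and is not shown.

```python
import socket
import ssl
from typing import Optional


def replication_tls_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Client-side TLS settings for a replication channel.

    create_default_context() already enables certificate and hostname
    verification; this additionally refuses anything older than TLS 1.2.
    """
    ctx = ssl.create_default_context(cafile=ca_file)  # ca_file: optional private CA bundle
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def open_secure_channel(host: str, port: int, ca_file: Optional[str] = None) -> ssl.SSLSocket:
    """Connect to a (hypothetical) replication endpoint and complete the TLS handshake."""
    ctx = replication_tls_context(ca_file)
    raw = socket.create_connection((host, port), timeout=10)
    try:
        return ctx.wrap_socket(raw, server_hostname=host)  # fails if the certificate does not verify
    except Exception:
        raw.close()
        raise
```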

Security Best Practices for CDR

Implementing a robust security posture requires adherence to security best practices. The following table summarizes essential security practices for CDR.

| Area | Best Practice | Description | Rationale |
|---|---|---|---|
| Encryption | Encrypt Data in Transit and at Rest | Employ strong encryption algorithms (AES-256) for data both during transmission and when stored on the target system. Use TLS/SSL for secure communication channels. | Protects data confidentiality and integrity against unauthorized access or modification. |
| Access Control | Implement Robust Authentication and Authorization | Use strong passwords, multi-factor authentication (MFA), and role-based access control (RBAC) to restrict access to replication tools and data. Regularly review and update access controls. | Prevents unauthorized access to data and replication processes. |
| Network Security | Secure Network Infrastructure | Use firewalls, intrusion detection/prevention systems (IDS/IPS), and network segmentation to protect the replication environment. Regularly patch and update network devices. | Protects the replication environment from network-based attacks. |
| Monitoring and Auditing | Implement Comprehensive Logging and Monitoring | Enable detailed logging of replication activities, access attempts, and system events. Regularly review logs and audit trails for suspicious activity. Implement real-time monitoring and alerting. | Enables early detection of security incidents and facilitates incident response. |
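The encryption row pairs confidentiality with integrity. One standard way to make tampering with individual change records detectable is a keyed hash (HMAC) attached at the source and verified at the target. The sketch below uses HMAC-SHA256 from Python's standard library; it is an integrity check, not encryption, and complements rather than replaces AES-256 or TLS. The record layout, helper names, and the hard-coded key are placeholders; a real deployment would load the key from a secrets manager.

```python
import hashlib
import hmac
import json


def _canonical(record):
    """Serialize deterministically so sender and receiver hash identical bytes."""
    return json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_record(record, key):
    """Wrap a change record with an HMAC-SHA256 tag computed at the source."""
    tag = hmac.new(key, _canonical(record), hashlib.sha256).hexdigest()
    return {"payload": record, "hmac": tag}


def verify_record(envelope, key):
    """Recompute the tag at the target and compare in constant time."""
    expected = hmac.new(key, _canonical(envelope["payload"]), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, envelope["hmac"])


key = b"example-key-loaded-from-a-secrets-manager"  # placeholder only
envelope = sign_record({"op": "update", "id": 42, "balance": 100}, key)
print(verify_record(envelope, key))  # → True
```

If any field of the payload is changed in transit or in intermediate storage, the recomputed tag no longer matches and `verify_record` returns `False`.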

Future Trends in Continuous Data Replication

Continuous Data Replication (CDR) is poised for significant evolution, driven by advancements in cloud computing, big data analytics, and the ever-increasing demand for real-time data access and availability. These trends are reshaping how organizations approach data migration, disaster recovery, and overall data management strategies. The future of CDR will be characterized by greater automation, enhanced performance, and broader applicability across diverse data environments.

Emerging Trends in CDR Technologies

Several key technological advancements are shaping the future of CDR. These trends are not isolated but rather interact and influence each other, leading to a more sophisticated and integrated approach to data replication.

- Cloud-Native CDR Solutions: The rise of cloud computing has led to the development of CDR solutions specifically designed for cloud environments. These solutions leverage cloud-native services, such as object storage, serverless computing, and managed databases, to provide scalable, cost-effective, and highly available data replication. Examples include solutions that integrate directly with AWS S3, Azure Blob Storage, or Google Cloud Storage.

- AI and Machine Learning Integration: Artificial intelligence (AI) and machine learning (ML) are being incorporated into CDR processes to automate tasks, optimize performance, and proactively identify potential issues. For instance, ML algorithms can analyze data replication patterns to predict bandwidth requirements, detect anomalies in data streams, and automatically adjust replication parameters for optimal performance.

- Edge Computing and CDR: With the proliferation of edge computing, CDR is extending beyond traditional data centers and cloud environments to edge devices and remote locations. This enables real-time data replication and processing at the edge, reducing latency and enabling applications such as autonomous vehicles, industrial IoT, and distributed healthcare systems.

- Serverless CDR Architectures: Serverless computing is transforming CDR by providing a more flexible and scalable infrastructure. Serverless CDR solutions eliminate the need for managing servers, allowing organizations to focus on the replication process itself. This approach can significantly reduce operational overhead and costs.

- Enhanced Security and Compliance: Security and compliance are paramount concerns in data replication. Future CDR technologies will incorporate advanced security features, such as end-to-end encryption, data masking, and granular access controls, to protect sensitive data during replication. Compliance with regulations such as GDPR, HIPAA, and CCPA will be a key focus.
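The anomaly-detection idea in the AI and ML bullet can be made concrete with a much simpler stand-in. The sketch below is not machine learning: it flags replication-lag samples that sit more than a few standard deviations from a trailing window (a rolling z-score), the kind of baseline an ML-based detector would aim to improve on. The lag series, window size, and threshold are illustrative assumptions.

```python
from statistics import mean, pstdev


def lag_anomalies(lag_seconds, window=10, threshold=3.0):
    """Return indices of samples whose z-score against the preceding `window`
    samples exceeds `threshold`.

    A rolling z-score: a simple statistical baseline, not a machine-learning model.
    """
    anomalies = []
    for i in range(window, len(lag_seconds)):
        history = lag_seconds[i - window:i]
        mu = mean(history)
        sigma = pstdev(history)
        if sigma == 0:
            # Perfectly flat history: any deviation at all counts as anomalous.
            if lag_seconds[i] != mu:
                anomalies.append(i)
        elif abs(lag_seconds[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Illustrative replication-lag samples in seconds, with one spike at index 11.
samples = [1.0, 1.2, 0.9, 1.1, 1.0, 0.8, 1.1, 1.0, 0.9, 1.2, 1.1, 9.5, 1.0]
print(lag_anomalies(samples))  # → [11]
```

Note that the spike inflates the standard deviation of later windows, so the sample right after it is not flagged; adaptive or learned detectors try to handle effects like this better.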

Predictions About the Evolution of CDR in the Future

The trajectory of CDR is anticipated to be one of continuous improvement and expansion. These predictions are based on current trends and the expected evolution of related technologies.

- Increased Automation: Automation will become even more pervasive, streamlining the entire CDR lifecycle from initial setup to ongoing monitoring and maintenance. AI-powered tools will automate tasks such as conflict resolution, performance tuning, and failover management, reducing the need for manual intervention.

- Hybrid and Multi-Cloud Support: Organizations will increasingly adopt hybrid and multi-cloud strategies. CDR solutions will evolve to seamlessly support data replication across various cloud providers and on-premises environments, enabling greater flexibility and portability.

- Real-Time Data Streaming: The demand for real-time data access will continue to grow. CDR technologies will increasingly focus on enabling real-time data streaming, allowing organizations to process and analyze data as it is generated, driving faster insights and decision-making.

- Simplified Management and Monitoring: CDR solutions will become easier to manage and monitor, with intuitive dashboards and automated alerting systems. This will empower organizations to proactively identify and address potential issues, ensuring data integrity and availability.

- Wider Adoption Across Industries: CDR will be adopted by a broader range of industries, including healthcare, finance, and retail, to meet the increasing demand for data availability, disaster recovery, and real-time analytics.

Potential Impact of New Technologies on CDR

The integration of new technologies will have a profound impact on the capabilities and applications of CDR.

- Quantum Computing: While still in its early stages, quantum computing could eventually speed up some of the data processing and analysis that surrounds CDR. Replication itself, however, is bound mainly by network and storage I/O, so claims of dramatically faster replication remain speculative. A more immediate concern is security: sufficiently powerful quantum computers could break widely used public-key algorithms, which raises the need for quantum-resistant encryption.

- Blockchain Technology: Blockchain technology could be used to enhance the security and integrity of CDR processes. Recording replication events in an append-only, hash-linked ledger would make the replication history tamper-evident, providing a high level of trust and auditability. This is particularly relevant in industries such as finance and supply chain management.

- 5G and Beyond: The rollout of 5G and future generations of mobile networks will enable faster and more reliable data transfer, which will benefit CDR, especially in edge computing and remote environments. This will facilitate real-time data replication for applications that require low latency and high bandwidth.

- Advancements in Data Compression: Continued advancements in data compression algorithms will improve the efficiency of data replication, reducing bandwidth consumption and storage costs. This will be particularly important for replicating large datasets and in environments with limited network resources.

- Decentralized Data Management: The rise of decentralized data management systems could influence CDR by providing a new paradigm for data replication. Instead of relying on centralized servers, data could be replicated across a distributed network of nodes, improving data availability and resilience.
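To make the data-compression point concrete, the sketch below compresses a batch of change records with zlib (DEFLATE) from the Python standard library before it would be sent to the target. The JSON batch format and the `encode_batch`/`decode_batch` helpers are assumptions for illustration; real CDR tools use their own wire formats and codecs.

```python
import json
import zlib


def encode_batch(changes, level=6):
    """Serialize a batch of change records to compact JSON and compress it."""
    raw = json.dumps(changes, separators=(",", ":")).encode("utf-8")
    return zlib.compress(raw, level)


def decode_batch(blob):
    """Reverse encode_batch on the target side."""
    return json.loads(zlib.decompress(blob).decode("utf-8"))


# Illustrative batch: 1,000 similar update records.
changes = [{"op": "update", "table": "orders", "id": i, "status": "shipped"} for i in range(1000)]
raw_size = len(json.dumps(changes, separators=(",", ":")).encode("utf-8"))
blob = encode_batch(changes)
print(raw_size, len(blob))
```

Change records are highly repetitive (the same keys, and often the same values, on every row), which is why general-purpose compression tends to pay off here. The actual ratio depends on the data, and higher `level` values trade CPU time for smaller output.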

Closing Notes

In conclusion, continuous data replication for migration emerges as a pivotal technology for modern data management strategies. From understanding its core principles and the various technological approaches to mastering implementation and management, CDR offers a comprehensive solution to the challenges inherent in data migration. By embracing CDR, organizations can navigate complex migration scenarios with confidence, ensuring data integrity, minimizing downtime, and maintaining business continuity.

The future of data migration is closely tied to the continued evolution and adoption of CDR technologies, which promise even greater efficiency and resilience in the years to come.

FAQ Insights

What are the primary benefits of using CDR over traditional migration methods?

CDR minimizes downtime, reduces the risk of data loss, ensures data consistency, and allows for a seamless cutover, making it significantly more efficient and reliable than traditional methods.

What types of data are typically replicated using CDR?

CDR can replicate various types of data, including databases, files, and application data. The specific data types depend on the CDR tool and the migration scenario.

How does CDR handle data conflicts during the replication process?

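CDR tools often employ conflict resolution mechanisms, such as last-write-wins or user-defined rules, to handle data conflicts. Conflicts typically arise only when both sides accept writes, for example in bidirectional replication or when the target is modified before cutover; the available resolution methods depend on the specific tool.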
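A minimal sketch of last-write-wins follows, assuming each version of a row carries a write timestamp and the name of the site that produced it. The `Version` type and its field names are hypothetical, not taken from any specific tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Version:
    value: object
    updated_at: float  # write timestamp; assumed comparable across sites
    origin: str        # site that made the write; used only to break exact ties


def last_write_wins(a, b):
    """Keep the version with the later timestamp. Exact ties are broken by origin
    name so every replica resolves the same conflict the same way."""
    return max(a, b, key=lambda v: (v.updated_at, v.origin))


source_version = Version("shipped", 100.0, "source")
target_version = Version("cancelled", 105.0, "target")
print(last_write_wins(source_version, target_version).value)  # → cancelled
```

Last-write-wins is only as reliable as its timestamps: clock skew between sites can let an older write win, and the losing write is discarded without notice, which is one reason tools also offer user-defined rules.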

What are the key considerations for selecting a CDR tool?

Key considerations include the tool’s compatibility with the source and target environments, performance capabilities, security features, ease of use, and cost.