Understanding the nuances between monitoring and observability is crucial for modern software systems. Monitoring focuses on the immediate health of a system, while observability provides a deeper understanding of its behavior and allows for proactive problem-solving. This exploration dives into the key distinctions, techniques, and practical applications of both concepts.

Monitoring often tracks performance metrics and identifies immediate issues, but without a complete picture of system behavior. Observability, on the other hand, encompasses a broader range of data points, allowing for more thorough analysis and the identification of underlying causes. This comprehensive approach facilitates proactive maintenance and optimized system performance.

Defining Monitoring and Observability

Monitoring and observability are crucial for understanding and managing modern applications. They represent distinct approaches to system understanding: monitoring focuses on the current state, while observability aims for a deeper understanding of the system’s behavior. Understanding the nuances between these approaches is essential for building robust and resilient systems. Observability builds upon the insights provided by monitoring, offering a more comprehensive view of the system.

This allows for proactive issue resolution and optimization. This expanded view is key to developing applications that are adaptable to fluctuating demands and maintain high performance levels.

Defining Monitoring

Monitoring focuses on the current state of a system. It tracks key metrics, such as CPU utilization, memory usage, and network traffic, to identify immediate issues and enable quick responses to anomalies. The primary goal is to detect and alert on problems in real time.

Defining Observability

Observability, on the other hand, goes beyond just tracking metrics. It encompasses the ability to understand the system’s behavior and performance over time, enabling a comprehensive view of the system. This means having the information needed to diagnose complex issues, predict future problems, and optimize performance. Observability provides the context and insights required to understand why a system is behaving in a certain way.

Key Differences Between Monitoring and Observability

The following table highlights the key differences between monitoring and observability:

| Feature | Monitoring | Observability |
|---|---|---|
| Focus | Current system state; detecting immediate problems | System behavior and performance over time; understanding why the system behaves as it does |
| Goal | Alerting on problems, identifying anomalies | Diagnosing complex issues, predicting future problems, optimizing performance |
| Data Type | Metrics (CPU, memory, network); logs | Metrics, logs, traces, events |
| Depth of Understanding | Limited to the current state | Comprehensive understanding of the system’s behavior |

Relationship Between Monitoring and Observability

Monitoring and observability are not mutually exclusive; rather, they are complementary. Monitoring provides the foundation for observability. Effective observability relies on the data gathered through monitoring, enabling a deeper understanding of system behavior. Monitoring often serves as the first line of defense in identifying potential problems, which can then be further investigated using observability techniques.

Comparison: Monitoring vs. Observability

An analogy makes the difference between monitoring and observability concrete:

- Monitoring is like checking the temperature of an oven. You know the current temperature, but you don’t know why it’s that temperature or how to adjust it to get a different result. Monitoring tells you if the oven is working now.

- Observability is like understanding how the oven works. You know the temperature, the settings, the gas flow, and the insulation, allowing you to predict how changes to these factors will impact the final temperature. Observability tells you *why* the oven is at that temperature and how to make it better.

Monitoring Techniques

Monitoring plays a crucial role in ensuring the health and performance of software systems. Effective monitoring enables proactive identification of potential issues, allowing for timely intervention and preventing service disruptions. By systematically collecting and analyzing data from various sources, monitoring provides valuable insights into the system’s behavior. This understanding is fundamental for maintaining optimal system performance and user experience.

Common Monitoring Techniques

Various techniques are employed for monitoring software systems, each with its strengths and weaknesses. Understanding these techniques and their limitations is essential for selecting the most appropriate approach for a given system.

- Application Performance Monitoring (APM): APM focuses on tracking the performance of individual applications and their components. This includes metrics such as response times, error rates, and resource utilization. APM tools provide insights into the flow of requests through the application, helping pinpoint bottlenecks and areas for optimization.

- Infrastructure Monitoring: This technique involves tracking the health and performance of the underlying infrastructure, such as servers, networks, and databases. Metrics monitored include CPU utilization, memory usage, disk I/O, network traffic, and database query response times. Infrastructure monitoring is crucial for ensuring the availability and stability of the system.

- Log Analysis: Log analysis involves collecting and analyzing system logs to identify errors, warnings, and other significant events. This can include logs from applications, servers, and databases. By examining patterns and trends in the logs, potential issues can be detected early. This method is particularly valuable for understanding the root cause of failures and identifying recurring problems.

- Metrics-Based Monitoring: This technique involves collecting and analyzing various metrics, such as request rates, error rates, and resource utilization, to detect deviations from expected behavior. It provides a comprehensive view of system performance and can be used to proactively identify potential issues. Often integrated with APM and infrastructure monitoring, it delivers a more holistic understanding of the system’s health.
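To make metrics-based monitoring concrete, here is a minimal Python sketch that turns raw request samples into the request rate, error rate, and p95 latency mentioned above. The `RequestSample` record shape and the rule that only 5xx statuses count as errors are assumptions for illustration, not a standard.

```python
from dataclasses import dataclass
from statistics import quantiles
from typing import Dict, List


@dataclass
class RequestSample:
    """One observed request (hypothetical record shape)."""
    timestamp: float  # seconds since the epoch
    latency_ms: float
    status: int       # HTTP status code


def summarize(samples: List[RequestSample], window_s: float) -> Dict[str, float]:
    """Compute request rate, error rate, and p95 latency for one time window."""
    if not samples:
        return {"request_rate": 0.0, "error_rate": 0.0, "p95_latency_ms": 0.0}
    # Assumption: only server-side failures (5xx) count as errors.
    errors = sum(1 for s in samples if s.status >= 500)
    latencies = [s.latency_ms for s in samples]
    if len(latencies) == 1:
        p95 = latencies[0]
    else:
        # n=20 yields 19 cut points; the last one is the 95th percentile.
        p95 = quantiles(latencies, n=20, method="inclusive")[-1]
    return {
        "request_rate": len(samples) / window_s,
        "error_rate": errors / len(samples),
        "p95_latency_ms": p95,
    }


# Ten requests over a 10-second window, one of which failed.
window = [RequestSample(float(t), 10.0 * (t + 1), 500 if t == 3 else 200) for t in range(10)]
print(summarize(window, window_s=10.0))
```

The `inclusive` quantile method keeps the estimate within the observed range, which is usually what you want for latency summaries over a small window.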

Metrics Monitored in Each Technique

The choice of metrics monitored depends heavily on the specific monitoring technique and the goals of the monitoring process. A comprehensive approach typically involves a combination of metrics from different techniques.

| Monitoring Technique | Key Metrics |
|---|---|
| Application Performance Monitoring (APM) | Request latency, response time, error rate, throughput, resource utilization (CPU, memory, network), transaction duration |
| Infrastructure Monitoring | CPU utilization, memory usage, disk I/O, network traffic, server uptime, database query response time, connection rates, load averages |
| Log Analysis | Error messages, warnings, critical events, user activity, system events, exceptions |
| Metrics-Based Monitoring | Application metrics (e.g., request rate, error rate, success rate), infrastructure metrics (e.g., CPU utilization, disk space), user metrics (e.g., session duration, user engagement) |

Limitations of Monitoring Techniques

Each monitoring technique has limitations that need careful consideration. These limitations often include the inability to predict future problems or provide context for observed issues. It’s crucial to understand these limitations to avoid relying solely on monitoring and to use it in conjunction with other approaches.

- Application Performance Monitoring (APM): APM can be complex to implement and may not capture all aspects of application performance, especially in distributed systems.

- Infrastructure Monitoring: Infrastructure monitoring may not always provide the root cause of performance issues if the problem originates in the application layer.

- Log Analysis: Log analysis requires significant effort to process and analyze large volumes of log data. It can also be difficult to identify patterns in complex systems with many interdependent components.

- Metrics-Based Monitoring: Metrics-based monitoring may not always provide sufficient context for understanding observed anomalies. It may not capture the impact of issues on end-users or the overall system behavior.

Monitoring Tools

Various tools are available for implementing each monitoring technique. Choosing the right tools depends on the specific needs of the system and the resources available.

- Application Performance Monitoring (APM): Datadog, Dynatrace, New Relic

- Infrastructure Monitoring: Prometheus, Grafana, Nagios, Zabbix

- Log Analysis: Splunk, Elasticsearch, Logstash, Kibana (ELK stack)

- Metrics-Based Monitoring: Prometheus, Grafana
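Prometheus, listed above for both infrastructure and metrics-based monitoring, collects metrics by scraping an HTTP endpoint that serves its plain-text exposition format. The sketch below hand-renders one counter in that format to show what a scrape returns. The metric name and label values are hypothetical, and in practice an official client library would generate this text for you.

```python
from typing import Dict, Tuple


def escape_label_value(value: str) -> str:
    """Escape backslash, double quote, and newline, as the text format requires."""
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def format_value(value: float) -> str:
    """Render integral values without a trailing '.0' for readability."""
    value = float(value)
    return str(int(value)) if value.is_integer() else repr(value)


def render_counter(name: str, help_text: str,
                   samples: Dict[Tuple[Tuple[str, str], ...], float]) -> str:
    """Render one counter family: HELP and TYPE lines, then one line per label set."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} counter"]
    for labels, value in samples.items():
        label_str = ",".join(f'{key}="{escape_label_value(val)}"' for key, val in labels)
        lines.append(f"{name}{{{label_str}}} {format_value(value)}")
    return "\n".join(lines) + "\n"


text = render_counter(
    "http_requests_total",
    "Total HTTP requests handled.",
    {
        (("method", "GET"), ("status", "200")): 1027,
        (("method", "POST"), ("status", "500")): 3,
    },
)
print(text, end="")
```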

Observability Principles

Observability, unlike monitoring, goes beyond simply tracking system metrics. It focuses on understanding the underlying reasons behind system behavior, enabling proactive issue resolution and improved performance. It emphasizes the ability to infer the internal state of a system from observable external behavior. This shift in focus provides a deeper level of insight compared to the reactive approach of monitoring.

Core Principles of Observability

Observability is built upon four core principles:

- Telemetry: Collecting comprehensive data about the system, including metrics, logs, and traces. This encompasses all relevant information, ensuring a holistic view of system performance and behavior. Proper telemetry ensures that sufficient data is collected for thorough analysis and understanding.

- Tracing: Understanding the flow of requests and events through the system. This allows for the identification of bottlenecks and dependencies, enabling precise diagnosis of issues. Tracing provides a clear path for requests through the system, allowing for pinpointing issues and improving efficiency.

- Metrics: Collecting quantitative data about system performance, resource utilization, and error rates. This provides a foundation for understanding trends and anomalies. Metrics provide a measurable basis for evaluating system health and performance over time, allowing for identifying patterns and potential problems.

- Logs: Recording events and activities within the system. This provides contextual information and detailed descriptions of system behavior. Logs offer a narrative account of the system’s actions, enabling the reconstruction of events for problem analysis.
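The tracing principle is easiest to see in code. The following Python sketch records nested spans that share a trace ID and point to their parent span, which is the basic shape that tracing systems build on. The `Tracer` and `Span` classes are hypothetical stand-ins written for this example; a real system would also propagate the trace ID across service boundaries and export finished spans to a backend.

```python
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass
from typing import Iterator, List, Optional


@dataclass
class Span:
    trace_id: str
    span_id: str
    parent_id: Optional[str]  # None marks the root span
    name: str
    start: float
    end: float = 0.0

    @property
    def duration_ms(self) -> float:
        return (self.end - self.start) * 1000


class Tracer:
    """Hypothetical in-process tracer that records the spans of a single trace."""

    def __init__(self) -> None:
        self.trace_id = uuid.uuid4().hex
        self.finished: List[Span] = []
        self._active: List[Span] = []  # stack of currently open spans

    @contextmanager
    def span(self, name: str) -> Iterator[Span]:
        parent_id = self._active[-1].span_id if self._active else None
        current = Span(self.trace_id, uuid.uuid4().hex[:16], parent_id, name, time.perf_counter())
        self._active.append(current)
        try:
            yield current
        finally:
            current.end = time.perf_counter()
            self._active.pop()
            self.finished.append(current)


tracer = Tracer()
with tracer.span("GET /checkout"):
    with tracer.span("db.query"):
        time.sleep(0.01)  # stand-in for real work

for s in tracer.finished:
    print(s.name, "parent:", s.parent_id, f"{s.duration_ms:.1f} ms")
```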

Observability vs. Monitoring

Monitoring primarily focuses on detecting anomalies and alerting on deviations from predefined thresholds. Observability, in contrast, aims to understand the underlying causes of those anomalies, allowing for proactive issue resolution and continuous improvement. Monitoring provides a snapshot of current system health, while observability offers a deeper understanding of why the system is behaving in a particular way. This proactive approach is vital for building resilient and performant systems.

Components of an Observability Platform

An observability platform integrates tools and technologies for collecting, processing, and analyzing data from various sources. A robust observability platform provides a unified view of the system’s health, enabling swift problem identification and resolution. Key components include:

- Agent-based monitoring: Agents collect telemetry data from various parts of the system. This enables efficient data gathering and consistent reporting, crucial for providing an accurate picture of system performance.

- Data ingestion: A system to ingest and store collected data from various sources, ensuring data consistency and accessibility. Data ingestion is a critical component for processing and analyzing data, providing a foundation for generating actionable insights.

- Data processing: Tools and techniques for transforming and enriching collected data, enabling insights and efficient analysis. This involves processing the collected data to make it useful and actionable, allowing for identification of trends and patterns.

- Visualization and analysis: Tools for visualizing data and identifying trends, anomalies, and root causes. This facilitates a comprehensive understanding of the system’s behavior and enables proactive issue resolution.

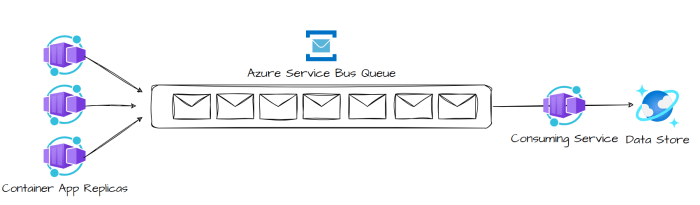

System Architecture for Observability

A distributed system architecture is well-suited for implementing observability. This design facilitates the collection and processing of data from multiple services, ensuring a holistic view of the entire system. The architecture comprises interconnected components:

- Data collection layer: Collects data from various sources, including applications, services, and infrastructure. This layer acts as the first point of contact for gathering information, ensuring that all relevant data is captured.

- Data processing layer: Transforms and enriches collected data, making it more useful for analysis. This layer is critical for preparing data for analysis, enabling efficient extraction of valuable insights.

- Data storage layer: Stores collected and processed data for long-term analysis and reporting. This layer is crucial for preserving data for later analysis, supporting trend identification and historical data review.

- Visualization and analysis layer: Provides tools for visualizing data and identifying patterns and anomalies. This layer is critical for extracting meaningful information from the data, supporting proactive issue resolution and performance improvement.
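The four layers can be illustrated with a small in-memory pipeline. This is a sketch under simplifying assumptions: agents are represented as lists of dictionaries with hypothetical `metric`, `labels`, `ts`, and `value` keys, and `MemoryTSDB` is a toy stand-in for a real time-series database.

```python
from collections import defaultdict
from dataclasses import dataclass
from itertools import chain
from typing import Dict, Iterable, Iterator, List, Optional, Tuple

Labels = Tuple[Tuple[str, str], ...]
REQUIRED_KEYS = ("metric", "ts", "value")


@dataclass(frozen=True)
class Point:
    metric: str
    labels: Labels
    ts: int
    value: float


def collect(*agent_batches: List[dict]) -> Iterator[dict]:
    """Collection layer: merge the raw events reported by several agents."""
    return chain.from_iterable(agent_batches)


def process(events: Iterable[dict], static_labels: Dict[str, str]) -> Iterator[Point]:
    """Processing layer: drop malformed events and enrich the rest with static labels."""
    for event in events:
        if not all(key in event for key in REQUIRED_KEYS):
            continue  # skip malformed input instead of failing the whole batch
        labels = {**event.get("labels", {}), **static_labels}
        yield Point(event["metric"], tuple(sorted(labels.items())),
                    event["ts"], float(event["value"]))


class MemoryTSDB:
    """Storage layer: a toy stand-in for a time-series database."""

    def __init__(self) -> None:
        # One list of (timestamp, value) pairs per (metric, label set) series.
        self.series: Dict[Tuple[str, Labels], List[Tuple[int, float]]] = defaultdict(list)

    def write(self, points: Iterable[Point]) -> None:
        for p in points:
            self.series[(p.metric, p.labels)].append((p.ts, p.value))

    def avg(self, metric: str, start: int, end: int) -> Optional[float]:
        """Query for the analysis layer: mean of a metric across its series in [start, end]."""
        values = [v for (name, _), points in self.series.items() if name == metric
                  for ts, v in points if start <= ts <= end]
        return sum(values) / len(values) if values else None


host_a = [{"metric": "cpu_pct", "labels": {"host": "a"}, "ts": 1, "value": 40}]
host_b = [{"metric": "cpu_pct", "labels": {"host": "b"}, "ts": 2, "value": 60},
          {"oops": True}]  # malformed event from a misbehaving agent
db = MemoryTSDB()
db.write(process(collect(host_a, host_b), {"env": "prod"}))
print(db.avg("cpu_pct", 0, 10))
```

Keeping each layer as a separate function or class mirrors the architecture above: any one of them could be swapped for a real component without changing the others.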

Components and Functions

| Component | Function |
|---|---|
| Data Collection Agents | Gather telemetry data from applications and infrastructure. |
| Data Ingestion Pipeline | Collect, process, and store telemetry data. |
| Data Storage (e.g., Time Series Databases) | Store and manage collected data for long-term analysis. |
| Analysis and Visualization Tools | Visualize data, identify trends, and provide insights. |

Data Collection and Analysis

Data collection and analysis are crucial steps in both monitoring and observability. Effective monitoring systems must accurately capture relevant data to identify performance issues. Observability systems go further, aiming to understand the underlying reasons for those issues and the complete system behavior. This necessitates different data collection approaches and more sophisticated analytical techniques. The methods used to gather data, and how that data is processed and interpreted, directly impact the effectiveness of both monitoring and observability initiatives.

Proper analysis allows for proactive issue resolution and optimized system performance.

Different Data Collection Methods

Data collection methods in monitoring and observability systems vary widely. Monitoring often relies on simpler, more direct methods to gather data, while observability demands a more comprehensive approach.

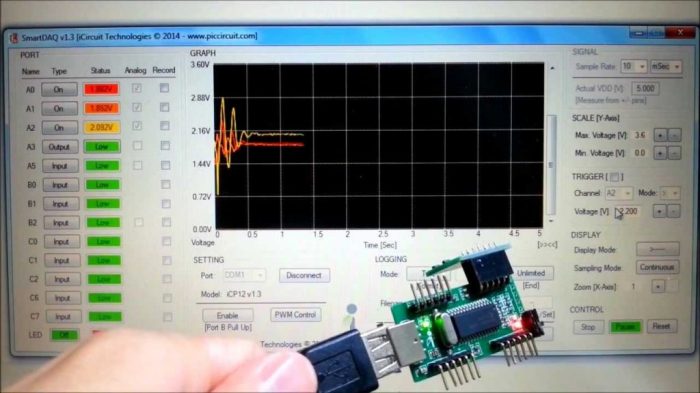

- Metrics: Metrics provide numerical measurements of system performance, such as CPU utilization, response times, or error rates. These are often collected using specialized agents or instrumentation within the application code. Metrics are essential for identifying trends and performance bottlenecks.

- Logs: Logs record events that occur within a system, such as user actions, errors, or warnings. They offer a detailed record of system activity and are critical for troubleshooting specific issues and understanding the context surrounding events.

- Traces: Traces capture the sequence of events that lead to a specific outcome, providing a complete picture of how different components of a system interact. They are invaluable for understanding complex transactions and pinpointing performance bottlenecks within a distributed system.

- Agent-Based Monitoring: This involves using agents to collect data directly from the system components. This method can provide granular, real-time insights into the system’s health. It’s commonly used for monitoring server performance, application behavior, and network traffic.

- API-Based Monitoring: In this approach, monitoring tools access system data through application programming interfaces (APIs). This is often used to collect data from cloud services, databases, and other external systems. Because it does not require installing an agent on each component, it suits managed services where agents cannot be deployed, although it is limited to the data the API exposes.

Comparison of Data Collection Methods

Monitoring primarily focuses on collecting data about system performance and identifying anomalies. Observability, on the other hand, seeks a more comprehensive view of the system’s behavior by gathering logs, traces, and metrics.

| Feature | Monitoring | Observability |
|---|---|---|
| Primary Goal | Identify performance issues and anomalies. | Understand system behavior and root cause analysis. |
| Data Types | Metrics, basic logs. | Metrics, logs, traces, and other relevant data. |
| Analysis Focus | Identifying and reacting to problems. | Understanding system interactions and predicting future issues. |

Data Analysis in Monitoring and Observability

The analysis of collected data differs significantly between monitoring and observability.

- Monitoring Analysis: Monitoring often uses simple analysis techniques, such as threshold-based alerts, to identify deviations from expected performance. These systems frequently rely on dashboards and alerts to flag potential problems.

- Observability Analysis: Observability employs sophisticated analysis techniques, including statistical modeling, machine learning, and pattern recognition, to correlate data points and uncover the root cause of issues. This deeper analysis enables a more proactive and comprehensive understanding of the system’s behavior.
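The difference between the two analysis styles shows up even in a few lines of Python. The sketch contrasts a fixed-threshold check with a z-score test against a recent baseline, which is one of the simplest forms of statistical modeling. The 200 ms limit and the z = 3 cutoff are arbitrary values chosen for illustration, and practical anomaly detection is usually more elaborate.

```python
from statistics import mean, stdev
from typing import Sequence


def threshold_alert(value: float, limit: float) -> bool:
    """Monitoring-style rule: fire when a fixed limit is exceeded."""
    return value > limit


def zscore_anomaly(history: Sequence[float], value: float, z: float = 3.0) -> bool:
    """Statistical rule: fire when value lies more than z standard deviations from the baseline."""
    if len(history) < 2:
        return False  # not enough data to estimate a baseline
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > z


baseline_ms = [100, 102, 98, 101, 99, 100]  # recent p95 latencies
latest_ms = 130
print(threshold_alert(latest_ms, limit=200))   # False: still under the fixed limit
print(zscore_anomaly(baseline_ms, latest_ms))  # True: far outside normal variation
```

The fixed rule stays silent because 130 ms is well below its limit, while the baseline-relative rule notices that the value is unusual for this particular service.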

Logs, Metrics, and Traces in Observability

Logs, metrics, and traces play a critical role in observability, enabling a comprehensive understanding of the system.

- Logs: Provide context and detail surrounding events. Logs capture events, errors, and warnings, providing a historical record of system activities.

- Metrics: Offer quantifiable measurements of system performance, enabling trend analysis and the identification of performance bottlenecks. Metrics help determine system health and identify deviations from expected behavior.

- Traces: Capture the sequence of events across different components, revealing interactions between various parts of a distributed system. Traces enable a holistic view of the system’s behavior, which is essential for understanding complex transactions and pinpointing performance bottlenecks.
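Much of the value of the three signals comes from joining them. One common approach is to stamp every log record with the trace ID of the request that produced it, so the slowest traces can be pulled up together with their error logs. The record shapes below are hypothetical and stand in for whatever a tracing backend and log store would export.

```python
from collections import defaultdict
from typing import Dict, List


def slowest_with_errors(spans: List[dict], logs: List[dict], top_n: int = 1) -> List[dict]:
    """Return the top_n slowest traces (by root-span duration) with their ERROR log messages."""
    roots = [s for s in spans if s["parent"] is None]
    logs_by_trace: Dict[str, List[dict]] = defaultdict(list)
    for record in logs:
        logs_by_trace[record["trace_id"]].append(record)
    slowest = sorted(roots, key=lambda s: s["duration_ms"], reverse=True)[:top_n]
    return [
        {
            "trace_id": root["trace_id"],
            "endpoint": root["name"],
            "duration_ms": root["duration_ms"],
            "errors": [r["msg"] for r in logs_by_trace[root["trace_id"]] if r["level"] == "ERROR"],
        }
        for root in slowest
    ]


spans = [
    {"trace_id": "t1", "span_id": "a1", "parent": None, "name": "GET /cart", "duration_ms": 45},
    {"trace_id": "t2", "span_id": "b1", "parent": None, "name": "POST /checkout", "duration_ms": 2300},
    {"trace_id": "t2", "span_id": "b2", "parent": "b1", "name": "payments.charge", "duration_ms": 2200},
]
logs = [
    {"trace_id": "t1", "level": "INFO", "msg": "cart loaded"},
    {"trace_id": "t2", "level": "WARN", "msg": "payment gateway slow"},
    {"trace_id": "t2", "level": "ERROR", "msg": "payment retry limit reached"},
]
print(slowest_with_errors(spans, logs))
```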

Using Data Analysis for Issue Identification and System Improvement

Analyzing collected data allows for the identification of issues and the implementation of improvements.

- Issue Identification: By analyzing logs, metrics, and traces, teams can pinpoint the root cause of performance problems or errors. Identifying the root cause allows for more targeted and effective solutions.

- System Improvement: Analyzing data reveals patterns and trends that can be used to optimize system design, improve performance, and prevent future issues. Data analysis supports the development of more robust and reliable systems.

Problem Diagnosis and Resolution

Effective problem diagnosis and resolution in a system rely heavily on the quality and type of data available. Traditional monitoring often provides limited insight, while observability offers a more comprehensive view, enabling deeper understanding and faster resolution. This section delves into the distinct approaches and methodologies employed for diagnosing and resolving issues within systems using both monitoring and observability data. The ability to pinpoint the root cause of a problem is critical for efficient issue resolution.

Monitoring provides a baseline for identifying anomalies, while observability provides the context needed to understand the intricacies of system behavior, ultimately leading to a more accurate diagnosis. By leveraging both approaches, a more holistic view of the problem is achieved, allowing for quicker resolution and preventing future occurrences.

Diagnosing Issues Using Monitoring Data

Monitoring systems primarily focus on metrics and logs. Anomalies in these data streams can signal potential problems. For instance, a sudden spike in CPU utilization might indicate a performance bottleneck, while an increase in error logs might signify a software issue. Detailed monitoring dashboards provide a visual representation of these metrics, facilitating the identification of unusual patterns.

Manual review and automated alerting systems are often employed to detect these deviations from the expected behavior.
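Automated alerting usually avoids paging on a single noisy sample. A common refinement is to fire only when a condition has held for several consecutive checks, which the Python sketch below illustrates. The class name, the 90% CPU threshold, and the three-sample window are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class SustainedAlert:
    """Fire only after the threshold has been exceeded for `for_samples` consecutive samples."""
    threshold: float
    for_samples: int
    _consecutive: int = 0

    def observe(self, value: float) -> bool:
        # Reset the streak whenever a sample is back at or under the threshold.
        self._consecutive = self._consecutive + 1 if value > self.threshold else 0
        return self._consecutive >= self.for_samples


cpu_alert = SustainedAlert(threshold=90.0, for_samples=3)
readings = [95, 97, 80, 92, 93, 94]
fired = [cpu_alert.observe(r) for r in readings]
print(fired)  # [False, False, False, False, False, True]
```

The brief spike at the start never fires because it is interrupted by the 80% reading; only the later run of three high readings does.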

Diagnosing Issues Using Observability Data

Observability data encompasses a broader range of information, including traces, logs, and metrics. This comprehensive data set allows for a more detailed understanding of the system’s behavior, enabling a deeper dive into the root cause of a problem. For example, a slow response time can be analyzed using traces to identify bottlenecks in specific components or services. Logs can provide context, like error messages or user actions, leading to a more complete picture of the problem.
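A common way to find the bottleneck in a trace is to compute each span's self time, meaning its duration minus the time spent in its child spans. The Python sketch below assumes a hypothetical span record with `id`, `parent`, `name`, and `duration_ms` fields. It also assumes child spans run one after another; overlapping children are simply clamped to zero self time rather than handled precisely.

```python
from collections import defaultdict
from typing import List, Tuple


def self_times(spans: List[dict]) -> List[Tuple[str, float]]:
    """Return (span name, self time in ms) pairs, largest first."""
    child_total = defaultdict(float)
    for s in spans:
        if s["parent"] is not None:
            child_total[s["parent"]] += s["duration_ms"]
    result = [(s["name"], max(0.0, s["duration_ms"] - child_total[s["id"]])) for s in spans]
    return sorted(result, key=lambda pair: pair[1], reverse=True)


trace = [
    {"id": "a", "parent": None, "name": "GET /orders", "duration_ms": 480},
    {"id": "b", "parent": "a", "name": "auth.verify", "duration_ms": 20},
    {"id": "c", "parent": "a", "name": "orders-service", "duration_ms": 440},
    {"id": "d", "parent": "c", "name": "db.query", "duration_ms": 400},
]
print(self_times(trace)[0])  # ('db.query', 400.0)
```

Although the root span is the longest at 480 ms, almost all of that time is spent in the database call, which is exactly the kind of conclusion a CPU graph alone would not reveal.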

Comparing and Contrasting Problem-Solving Approaches

Monitoring focuses on detecting deviations from predefined baselines, whereas observability focuses on understanding the system’s behavior in real-time. Monitoring often relies on predefined thresholds and alerts, while observability enables a more dynamic understanding of system interactions. A simple example: Monitoring might alert on high CPU usage, while observability would reveal that a specific database query is causing the bottleneck, allowing for more targeted resolution.

Root Cause Analysis in Monitoring and Observability

Root cause analysis (RCA) in both monitoring and observability involves identifying the underlying cause of a problem. In monitoring, RCA often focuses on isolating the metric exhibiting the anomaly. For example, if a database query latency increases, monitoring data might pinpoint the specific query causing the problem. In observability, RCA often involves analyzing traces, logs, and metrics to understand the system’s behavior.

For example, tracing the request flow through the system reveals the bottleneck or error point.

Issue Resolution Process Using Observability Data

A structured approach to issue resolution using observability data involves the following steps:

- Data Collection and Aggregation: Gather relevant traces, logs, and metrics from the affected system.

- Pattern Recognition: Identify patterns and anomalies in the collected data, using tools for visualization and analysis.

- Root Cause Analysis: Utilize the data to pinpoint the exact cause of the issue, examining dependencies and interactions between system components.

- Solution Design: Develop a solution based on the identified root cause, considering potential impact on other system components.

- Implementation and Validation: Implement the solution and validate its effectiveness by monitoring system performance.
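The pattern-recognition step often starts with something as simple as grouping log messages that differ only in their variable parts. The sketch below replaces UUIDs and numbers with placeholders and counts the resulting signatures. The regular expressions are simplified assumptions, and dedicated log-clustering tools use more robust methods.

```python
import re
from collections import Counter
from typing import Iterable, List, Tuple

# Order matters: replace UUIDs before bare numbers, since UUIDs contain digits.
_VARIABLE_PARTS = [
    (re.compile(r"[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}"), "<uuid>"),
    (re.compile(r"\d+(?:\.\d+)?"), "<num>"),
]


def signature(message: str) -> str:
    """Collapse the variable parts of a log message into placeholders."""
    for pattern, placeholder in _VARIABLE_PARTS:
        message = pattern.sub(placeholder, message)
    return message


def top_signatures(messages: Iterable[str], n: int = 3) -> List[Tuple[str, int]]:
    """Most frequent message signatures with their counts."""
    return Counter(signature(m) for m in messages).most_common(n)


errors = [
    "timeout after 3000ms calling orders-db",
    "user 42 not found",
    "timeout after 2875ms calling orders-db",
    "request 1b4e28ba-2fa1-11d2-883f-0016d3cca427 rejected",
    "timeout after 3100ms calling orders-db",
]
print(top_signatures(errors, n=1))  # [('timeout after <num>ms calling orders-db', 3)]
```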

System Performance and Stability

Maintaining optimal system performance and ensuring stability are crucial for any application. Effective monitoring and observability strategies play a significant role in achieving these goals. This section explores how these techniques contribute to uptime and resilience, and how they can be used to proactively address potential issues.

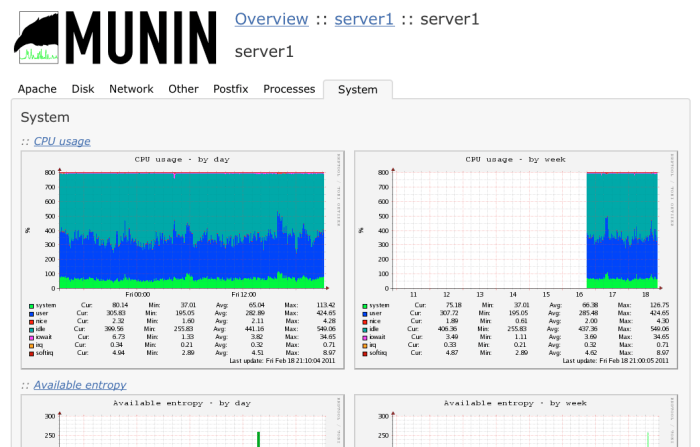

Monitoring for System Performance

Monitoring provides a real-time view of system metrics, allowing for proactive identification of performance bottlenecks. By continuously tracking key indicators such as CPU utilization, memory consumption, network throughput, and disk I/O, monitoring tools can flag potential issues before they impact user experience or lead to service disruptions.

- Identifying Performance Bottlenecks: Monitoring tools generate detailed reports that highlight resource-intensive processes and components. This information enables developers to pinpoint areas needing optimization. For example, high CPU usage on a specific server might indicate a poorly performing query in a database application, or a memory leak in a critical application module.

- Proactive Performance Tuning: Monitoring data allows for proactive adjustments to system configurations. Identifying trends in resource usage, such as increasing CPU load during peak hours, allows for anticipatory scaling of resources, preventing performance degradation. This is crucial for maintaining service levels under high demand.

- Real-time Performance Diagnostics: Monitoring systems often offer real-time dashboards and alerts, enabling immediate response to sudden performance drops or spikes. A real-time alert about a database connection time-out allows immediate intervention to resolve the issue and avoid impacting user interactions.
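Anticipatory scaling based on a trend can be as simple as fitting a straight line to recent utilization and extrapolating when it will cross a limit. The following least-squares sketch assumes hourly CPU samples, a linear trend, and an 80% scaling threshold. Real load often follows daily or weekly cycles, so treat this as an illustration rather than a forecasting method.

```python
from typing import Optional, Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit; returns (slope, intercept). Needs two or more distinct xs."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def hours_until(hours: Sequence[float], cpu_pct: Sequence[float],
                threshold: float = 80.0) -> Optional[float]:
    """Hours after the last sample until the fitted trend reaches the threshold; None if flat or falling."""
    slope, intercept = fit_line(hours, cpu_pct)
    if slope <= 0:
        return None
    crossing = (threshold - intercept) / slope
    return max(0.0, crossing - hours[-1])


print(hours_until([0, 1, 2, 3], [50, 55, 60, 65]))  # 3.0
```

With CPU rising 5 points per hour from 50%, the fitted line reaches 80% at hour 6, three hours after the last sample, which is the window available to add capacity.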

Observability for System Stability

Observability goes beyond monitoring by providing a holistic understanding of the system’s behavior. It enables not only the identification of current problems but also the prediction and prevention of future failures. This deeper insight into system interactions allows for more informed decisions regarding maintenance and upgrades.

- Predictive Failure Detection: By analyzing patterns in system logs, metrics, and traces, observability tools can identify anomalies and potential failure points. For instance, if a specific code path consistently produces high error rates, observability can flag this as a potential failure point and suggest corrective actions.

- Root Cause Analysis: Observability provides context around system events, allowing for deeper root cause analysis. By tracing requests through the entire system, observability tools can identify bottlenecks and pinpoint the exact cause of errors. This detailed analysis enables more effective and targeted solutions.

- Improved Fault Tolerance: Understanding system dependencies and interactions allows for better fault tolerance design. Observability provides visibility into how different components of the system interact and influence each other, enabling proactive adjustments to ensure robustness in the face of potential failures.

Impact on System Uptime

Both monitoring and observability contribute to increased system uptime. Monitoring primarily focuses on reacting to existing problems, while observability strives to prevent them. The combination of these two approaches yields a significant improvement in overall system availability.

- Monitoring’s Role: Monitoring helps maintain uptime by promptly identifying and resolving issues. By alerting on critical thresholds and providing detailed diagnostics, monitoring ensures that problems don’t escalate into widespread outages.

- Observability’s Role: Observability, through proactive identification of potential failures, can prevent outages before they occur. By understanding system behavior, observability allows for preventative measures such as scaling resources or implementing safeguards against predicted failures.
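Uptime is usually quantified as availability, the fraction of time (or of health checks) during which the service was up. The sketch below computes availability from evenly spaced probe results and converts an availability target into the downtime it permits over a 30-day window. The one-minute probe interval and the 99.9% target are example values, not recommendations.

```python
from typing import Sequence


def availability(probe_ok: Sequence[bool]) -> float:
    """Fraction of successful probes (assumes probes are evenly spaced in time)."""
    return sum(probe_ok) / len(probe_ok)


def allowed_downtime_minutes(target: float, days: int = 30) -> float:
    """Downtime permitted by an availability target over the given number of days."""
    return (1 - target) * days * 24 * 60


# One probe per minute for a day, with three failed probes.
day = [True] * 1437 + [False] * 3
print(f"{availability(day):.4%}")                    # 99.7917%
print(f"{allowed_downtime_minutes(0.999):.1f} min")  # 43.2 min
```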

Monitoring and Performance Bottlenecks

Monitoring is instrumental in pinpointing performance bottlenecks within a system. By identifying components or processes consuming excessive resources, monitoring facilitates targeted optimization efforts.

- Identifying Resource Consumption: Monitoring tools track metrics like CPU utilization, memory usage, network bandwidth, and disk I/O. Analyzing these metrics reveals processes or services that are consuming excessive resources, thus hindering overall performance.

- Tracking Resource Trends: Monitoring allows for the observation of resource trends over time. This trend analysis helps in identifying patterns and anomalies that point to potential performance bottlenecks, enabling proactive optimization strategies.

Observability and System Failure Prevention

Observability empowers prediction and prevention of system failures. By providing a comprehensive view of the system’s interactions, observability tools enable proactive mitigation of potential issues.

- Identifying Anomalous Behavior: Observability tools analyze system logs, metrics, and traces to identify deviations from normal behavior. Such deviations can be indicative of emerging problems that, if left unaddressed, could lead to failures.

- Predicting System Failures: By detecting and analyzing patterns in data, observability can predict potential failures before they occur. This predictive capability allows for proactive measures, such as resource scaling or code adjustments, to prevent service disruptions.

Scalability and Maintainability

Monitoring and observability play crucial roles in ensuring the long-term health and success of any system. A system’s ability to handle increasing workloads and adapt to evolving needs is paramount, while efficient maintenance minimizes downtime and ensures continuous operation. Effective monitoring and observability strategies directly impact both scalability and maintainability.

Impact of Monitoring on System Scalability

Monitoring provides essential visibility into system performance under various loads. This visibility allows for proactive adjustments to infrastructure resources, such as scaling up server capacity or adding caching layers. By tracking key metrics like CPU utilization, memory consumption, and network traffic, administrators can identify potential bottlenecks and prevent performance degradation as the system grows. This proactive approach enables the system to handle increasing demands without significant performance hiccups.

- Resource Allocation and Optimization: Monitoring tools provide real-time data on resource usage, enabling administrators to optimize resource allocation. For instance, if a specific database query is identified as a bottleneck, the database server can be scaled up or the query can be optimized. This proactive adjustment prevents performance degradation under increasing loads.

- Early Detection of Bottlenecks: Monitoring systems can flag performance degradation long before it becomes a critical issue. By detecting subtle trends and anomalies, proactive intervention is possible, allowing for timely adjustments to prevent service disruptions.

- Capacity Planning: Historical monitoring data enables accurate capacity planning. Understanding the system’s performance under different load conditions enables administrators to estimate future resource requirements. This forecasting helps in avoiding sudden capacity crises and ensures that the system can handle anticipated growth without compromising performance.
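Capacity planning from historical data often comes down to simple arithmetic. As a sketch, assume monitoring shows a peak of 1,200 requests per second, load tests show one instance handles about 250 requests per second, 50% growth is expected, and 30% headroom should stay free. All of these figures are hypothetical.

```python
import math


def instances_needed(peak_rps: float, rps_per_instance: float,
                     expected_growth: float, headroom: float = 0.3) -> int:
    """Instances required to serve the forecast peak while keeping `headroom` unused."""
    forecast_rps = peak_rps * (1 + expected_growth)
    usable_rps_per_instance = rps_per_instance * (1 - headroom)
    return math.ceil(forecast_rps / usable_rps_per_instance)


print(instances_needed(1200, 250, expected_growth=0.5))  # 11
```

Here the forecast peak is 1,800 requests per second and each instance is planned at 175, so 1,800 / 175 ≈ 10.3, which rounds up to 11 instances.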

Observability’s Enhancement of System Maintainability

Observability empowers developers and system administrators to understand the internal workings of a system. This understanding allows for easier debugging, troubleshooting, and faster resolution of issues. By providing detailed insights into system behavior, observability enables a more efficient maintenance process.

- Improved Debugging and Troubleshooting: Observability provides a holistic view of system behavior, enabling the identification of the root cause of problems faster. Detailed logs, metrics, and traces enable developers to understand how different components interact and pinpoint the exact location of a fault. This leads to faster resolution of issues and reduces downtime.

- Enhanced Problem Diagnosis: Observability data provides a complete picture of the system’s state, including details about user interactions, data flows, and component interactions. This complete picture allows for more effective root cause analysis and quicker resolution of problems.

- Facilitating Faster Rollouts: Observability tools help validate new deployments and configuration changes, allowing for a faster and more confident release process. The detailed insights enable rapid identification and resolution of issues in the new configuration. This allows for iterative improvements without disrupting service.
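One common way to make logs useful for this kind of debugging is to attach a shared request or trace identifier to every log line, so that all events from one request can be pulled together. The sketch below assumes structured JSON logs and a hypothetical `trace_id` field, using only the standard library; it is not tied to any particular observability product.

```python
import contextvars
import json
import logging
import uuid

# Holds the trace ID of the request currently being handled ("trace_id" is a hypothetical field name).
current_trace_id = contextvars.ContextVar("current_trace_id", default=None)


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object that carries the active trace ID."""

    def format(self, record):
        return json.dumps({
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
            "trace_id": current_trace_id.get(),
        })


def build_logger(name, stream=None):
    # stream=None makes StreamHandler write to sys.stderr.
    logger = logging.getLogger(name)
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


def handle_request(logger, trace_id=None):
    # Reuse a trace ID propagated from upstream if present; otherwise start a new one.
    token = current_trace_id.set(trace_id or uuid.uuid4().hex)
    try:
        logger.info("checkout started")
        logger.info("payment authorized")
    finally:
        current_trace_id.reset(token)
```

Searching the log store for a single `trace_id` value then returns every event from that request, across whichever components log with the same convention.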

Comparison of Monitoring and Observability Benefits

| Feature | Monitoring | Observability |
|---|---|---|
| Focus | System performance metrics | System behavior and internal state |
| Goal | Detect and alert on problems | Understand and diagnose problems |
| Scalability Impact | Enables proactive scaling and resource optimization | Supports informed decisions about scaling and architecture |
| Maintainability Impact | Reduces downtime by alerting on issues | Enables faster and more effective troubleshooting |

Monitoring primarily focuses on identifying and alerting on issues, while observability focuses on understanding the system’s behavior and internal state. Observability, therefore, provides a deeper level of understanding and facilitates more proactive and informed decisions for scaling and maintenance.

Security Considerations

Monitoring and observability systems collect and process sensitive data about applications and infrastructure. Therefore, robust security measures are paramount to protect this data and prevent unauthorized access or manipulation. This section details the critical security considerations inherent in these systems. Effective security practices are essential for both monitoring and observability systems to ensure the confidentiality, integrity, and availability of the data they handle. This includes protecting the data pipeline, securing the systems themselves, and establishing appropriate access controls.

Security Implications of Monitoring Systems

Monitoring systems collect data about system performance, resource utilization, and application behavior. This data can expose vulnerabilities if not properly secured. Unauthorized access to monitoring logs could reveal sensitive information about the system’s configuration, internal workings, or user activity. Improperly configured alerts can inadvertently leak information about potential security incidents.
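One way to limit what leaked logs can reveal is to redact sensitive values before log lines leave the host. The sketch below applies a few regular-expression rules to each line; the patterns and placeholder tokens are hypothetical examples and would need to be matched to the data a real system actually logs.

```python
import re

# Hypothetical redaction rules: (compiled pattern, replacement).
REDACTION_RULES = [
    # Email addresses.
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"), "[EMAIL]"),
    # 13 to 16 digit card-like numbers, optionally separated by spaces or dashes.
    (re.compile(r"\b\d(?:[ -]?\d){12,15}\b"), "[CARD]"),
    # key=value credentials; keep the key name, hide the value.
    (re.compile(r"(?i)(authorization|api[_-]?key|password)=\S+"), r"\1=[REDACTED]"),
]


def redact(line: str) -> str:
    """Apply every redaction rule to a single log line."""
    for pattern, replacement in REDACTION_RULES:
        line = pattern.sub(replacement, line)
    return line
```

Pattern-based redaction is a safety net rather than a guarantee; avoiding logging sensitive fields in the first place is generally more reliable.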

Security Implications of Observability Systems

Observability systems go beyond monitoring by providing a comprehensive view of the system’s behavior. They often collect and analyze more detailed data, including logs, metrics, and traces. Compromising these systems can expose even more sensitive information, such as stack traces, request payloads, database queries, or detailed service interaction patterns. This level of detail presents an elevated risk compared to basic monitoring systems.

Securing Monitoring and Observability Data

Protecting the data collected by monitoring and observability systems is critical. Implementing encryption throughout the data pipeline, from collection to storage, is a fundamental security measure. Access controls, including role-based access control (RBAC), should restrict data access to only authorized personnel. Regular security audits and penetration testing help identify and mitigate potential vulnerabilities.
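Role-based access control can be pictured as a mapping from roles to permissions, with access denied unless one of a user’s roles explicitly grants it. The roles and permission strings below are hypothetical; in practice these rules usually live in the monitoring platform’s or identity provider’s own RBAC configuration.

```python
from dataclasses import dataclass

# Hypothetical roles and the permissions each one grants.
ROLE_PERMISSIONS = {
    "viewer": {"metrics:read", "dashboards:read"},
    "sre": {"metrics:read", "dashboards:read", "logs:read", "traces:read", "alerts:write"},
    "security-auditor": {"logs:read", "audit:read"},
}


@dataclass(frozen=True)
class User:
    name: str
    roles: tuple


def is_allowed(user: User, permission: str) -> bool:
    """Deny by default: allow only if one of the user's roles lists the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user.roles)
```

Unknown roles grant nothing, so a misconfigured role name fails closed rather than open.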

Securing the Data Pipeline

The data pipeline in monitoring and observability systems is a crucial target for attackers. Data should be encrypted in transit and at rest. Implement strong authentication and authorization mechanisms to control access to data sources and destinations. Regularly review and update security configurations to adapt to evolving threats. A robust logging and auditing system is essential to detect and respond to security incidents promptly.

Implement a zero-trust security model for the entire monitoring and observability infrastructure. This approach assumes no implicit trust and requires verification for every access attempt.
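One small piece of a zero-trust setup is verifying every request to a data endpoint on its own merits, regardless of which network it came from. The sketch assumes a hypothetical metrics ingestion endpoint where each agent signs its payload and a timestamp with a shared secret using HMAC-SHA256; a full zero-trust design would also cover identity (for example, mutual TLS), authorization, and key rotation.

```python
import hashlib
import hmac
import time

MAX_SKEW_SECONDS = 300  # reject requests whose timestamp is more than 5 minutes off


def sign(secret: bytes, timestamp: int, body: bytes) -> str:
    """Compute the HMAC-SHA256 signature an agent would send with its payload."""
    message = str(timestamp).encode() + b"." + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify(secret: bytes, timestamp: int, body: bytes, signature: str, now=None) -> bool:
    """Check each request individually; nothing is trusted based on its origin."""
    now = time.time() if now is None else now
    if abs(now - timestamp) > MAX_SKEW_SECONDS:
        return False  # stale or replayed request
    expected = sign(secret, timestamp, body)
    # Constant-time comparison avoids leaking signature prefixes through timing.
    return hmac.compare_digest(expected, signature)
```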

Importance of Security in Monitoring and Observability Systems

Security is not an add-on but an integral part of monitoring and observability systems. Breaches in these systems can lead to significant damage, including data breaches, service disruptions, and financial losses. A proactive approach to security, incorporating regular security assessments and incident response plans, is essential for maintaining system integrity and preventing unwanted consequences.

Tools and Technologies

Choosing the right monitoring and observability tools is crucial for effective system management. These tools provide the insights needed to identify and resolve issues, optimize performance, and ensure system stability. Selecting the appropriate tools depends on the specific needs of the system and the resources available. A well-chosen set of tools empowers teams to proactively address problems, minimizing downtime and maximizing efficiency.

Monitoring Tools

A variety of tools are available for monitoring system performance and health. These tools provide real-time visibility into key metrics and allow for proactive identification of potential issues. Effective monitoring enables rapid response to problems, preventing them from escalating into significant outages.

- Prometheus: An open-source systems monitoring and alerting toolkit. It collects time-series metrics by scraping HTTP endpoints, stores them, and evaluates alerting rules against them; dashboards are commonly built on top of it with Grafana. Prometheus’s strong community support and extensibility through its exporter system make it adaptable to diverse environments.

- Datadog: A comprehensive platform offering monitoring, logging, and tracing capabilities. Datadog’s strong integration capabilities and pre-built dashboards simplify the process of collecting and visualizing data from multiple systems.

- Grafana: A popular open-source visualization tool for monitoring and observability. Grafana allows for creating custom dashboards and visualizations from diverse data sources, making it a valuable tool for customized monitoring needs.

- Nagios: A robust open-source monitoring tool for infrastructure and applications. Nagios excels at detecting and reporting issues, providing alerts to system administrators.
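To make Prometheus’s collection model concrete: a monitored service exposes its current metric values as plain text over HTTP (conventionally at `/metrics`), and Prometheus scrapes that endpoint periodically. The sketch below renders a few values in the Prometheus text exposition format using only the standard library; in practice an official client library would generate this output, and the metric names here are hypothetical.

```python
def _escape_label(value) -> str:
    # Label values must escape backslash, double quote, and newline.
    return str(value).replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_prometheus(metrics):
    """Render metrics in the Prometheus text exposition format.

    `metrics` maps a metric name to (type, help_text, samples), where samples
    is a list of (labels_dict, value) pairs. HELP text escaping is omitted.
    """
    lines = []
    for name, (metric_type, help_text, samples) in metrics.items():
        lines.append(f"# HELP {name} {help_text}")
        lines.append(f"# TYPE {name} {metric_type}")
        for labels, value in samples:
            if labels:
                label_str = ",".join(
                    f'{k}="{_escape_label(v)}"' for k, v in sorted(labels.items())
                )
                lines.append(f"{name}{{{label_str}}} {value}")
            else:
                lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"
```

Serving this string from an HTTP handler is enough for a Prometheus server to scrape it, though the official clients also handle details such as histogram buckets and content negotiation.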

Observability Tools

Observability tools provide a deeper understanding of system behavior, enabling more comprehensive problem diagnosis and proactive issue resolution. These tools provide insights into the relationships between various components, allowing for the identification of root causes and the prediction of potential problems.

- Jaeger: An open-source distributed tracing system. Jaeger provides detailed information about the flow of requests through multiple services, enabling the identification of bottlenecks and performance issues.

- Zipkin: A distributed tracing system focused on tracing requests across microservices. It provides insights into the latency and performance of individual services and their interactions within the system.

- New Relic: A comprehensive platform offering application performance monitoring, application security, and distributed tracing. New Relic’s breadth of capabilities makes it suitable for monitoring and observability in complex applications.
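Distributed tracers such as Jaeger and Zipkin are built around spans: timed operations that share a trace ID and point to a parent span, so a request’s path through services can be reassembled as a tree. The toy in-process sketch below illustrates that data model only; it is not the API of either tool, and real tracers propagate the trace context across process boundaries (for example, via HTTP headers) and export spans to a collector.

```python
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Span:
    name: str
    trace_id: str
    span_id: str
    parent_id: Optional[str]
    start: float = 0.0
    end: float = 0.0

    @property
    def duration(self) -> float:
        return self.end - self.start


@dataclass
class Tracer:
    """Single-threaded toy tracer: nested spans inherit the trace ID of their parent."""
    finished: list = field(default_factory=list)
    _stack: list = field(default_factory=list)

    @contextmanager
    def span(self, name):
        parent = self._stack[-1] if self._stack else None
        s = Span(
            name=name,
            trace_id=parent.trace_id if parent else uuid.uuid4().hex,
            span_id=uuid.uuid4().hex[:16],
            parent_id=parent.span_id if parent else None,
            start=time.perf_counter(),
        )
        self._stack.append(s)
        try:
            yield s
        finally:
            s.end = time.perf_counter()
            self._stack.pop()
            self.finished.append(s)
```

Comparing child span durations under one root span is the basic question a trace view answers: which downstream call made this request slow.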

Key Features of Monitoring and Observability Tools

Monitoring and observability tools should possess specific features to be effective. These features enable efficient data collection, analysis, and problem resolution. Critical considerations include ease of integration, scalability, and reporting capabilities.

- Real-time data collection and analysis: Tools should capture data in real-time to provide immediate insights into system performance and health. Real-time dashboards and alerts are crucial for timely issue resolution.

- Customizable dashboards and alerts: The ability to tailor dashboards and alerts to specific needs is essential for effective monitoring and observability. Custom visualizations and tailored alerts improve the identification and resolution of issues.

- Integration with other systems: Integration with existing systems and platforms is essential for seamless data collection and analysis. This enables a unified view of system health and performance.

- Scalability: Tools must be able to handle increasing data volumes and system complexity as the system grows. Scalability ensures the tool remains effective as the system evolves.

Choosing the Right Tools

Selecting the appropriate tools for monitoring and observability depends on various factors, including the type of system being monitored, the existing infrastructure, and the budget. Carefully consider these factors when making decisions about tools.

| Category | Tool | Key Features |
|---|---|---|
| Monitoring | Prometheus | Open-source, metric collection, alerting |
| Monitoring | Datadog | Comprehensive platform, monitoring, logging, tracing, integrations |
| Observability | Jaeger | Distributed tracing, microservices, request flow analysis |
| Observability | Zipkin | Distributed tracing, latency analysis, service interaction visualization |

Real-World Examples

Monitoring and observability, while conceptually distinct, are often implemented together in real-world scenarios. Successful deployments leverage the strengths of both approaches to provide a comprehensive view of system health and performance. These examples demonstrate how organizations are using monitoring and observability to gain valuable insights and improve their systems.

Successful Monitoring Implementation

A retail company experienced significant transaction delays during peak shopping seasons. They implemented a comprehensive monitoring system that tracked key metrics such as database response times, network latency, and server CPU utilization. Real-time alerts were configured to notify administrators of any deviations from predefined thresholds. By proactively identifying and addressing performance bottlenecks, the company significantly reduced transaction delays, improved customer satisfaction, and avoided revenue losses during crucial periods.
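Threshold alerts like the ones described here are often defined on a latency percentile over a recent window, and fire only after the threshold has been breached for several consecutive checks, to avoid paging on a single spike. The sketch below is a hypothetical rule of that kind, using a nearest-rank 95th percentile over a fixed-size sliding window; the window size and breach count are illustrative assumptions.

```python
import math
from collections import deque


class LatencyAlert:
    """Fire when p95 latency over a sliding window exceeds a threshold
    for `required_breaches` consecutive evaluations (hypothetical rule)."""

    def __init__(self, threshold_ms, window_size=100, required_breaches=3):
        self.threshold_ms = threshold_ms
        self.samples = deque(maxlen=window_size)  # oldest samples drop off automatically
        self.required_breaches = required_breaches
        self.consecutive_breaches = 0

    def observe(self, latency_ms):
        self.samples.append(latency_ms)

    def p95(self):
        ordered = sorted(self.samples)
        # Nearest-rank percentile: smallest value covering at least 95% of samples.
        rank = math.ceil(95 * len(ordered) / 100)
        return ordered[rank - 1]

    def evaluate(self):
        """Return True if the alert should fire on this evaluation."""
        if not self.samples:
            return False
        if self.p95() > self.threshold_ms:
            self.consecutive_breaches += 1
        else:
            self.consecutive_breaches = 0
        return self.consecutive_breaches >= self.required_breaches
```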

Successful Observability Implementation

A large e-commerce platform noticed inconsistent user experience across different regions. Observability tools allowed them to correlate user behavior with system performance data, such as network latency, API response times, and server resource consumption. This deeper understanding of system interactions enabled the identification of regional network issues impacting user experience. By analyzing this correlated data, the platform was able to isolate and resolve the problem, ensuring consistent performance for all users.
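The kind of correlation described here can start very simply: group user-facing request latency by region and compare each region against the others. The sketch below assumes request records with hypothetical `region` and `latency_ms` fields and flags regions whose median latency exceeds a chosen multiple of the typical region’s median.

```python
from collections import defaultdict
from statistics import median


def latency_by_region(requests):
    """Group request latencies (ms) by region and return the median per region."""
    grouped = defaultdict(list)
    for req in requests:
        grouped[req["region"]].append(req["latency_ms"])
    return {region: median(values) for region, values in grouped.items()}


def outlier_regions(requests, factor=2.0):
    """Regions whose median latency exceeds `factor` times the median of all region medians."""
    medians = latency_by_region(requests)
    baseline = median(medians.values())
    return sorted(region for region, m in medians.items() if m > factor * baseline)
```

A flagged region is a lead rather than a diagnosis; the next step would be drilling into that region’s traces and network metrics.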

Case Study: Benefits of Monitoring and Observability

A cloud-based SaaS provider experienced a surge in user traffic. Monitoring systems tracked resource utilization and identified increasing CPU load on specific servers. Observability tools allowed them to pinpoint the root cause to a particular API call that was inefficient. They implemented caching mechanisms to improve the API’s performance and optimized the database queries. This combined approach allowed them to handle the increased load without impacting user experience, highlighting the value of proactively identifying and resolving performance bottlenecks using both monitoring and observability.
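Caching an expensive API call can be sketched as a decorator that remembers results per argument tuple for a fixed time-to-live. This is a hypothetical, minimal version: the standard library’s `functools.lru_cache` has no expiry, so one is added here; the store is unbounded and not thread-safe, which a production cache would need to address.

```python
import time
from functools import wraps


def ttl_cache(ttl_seconds, clock=time.monotonic):
    """Cache a function's results per positional-argument tuple for `ttl_seconds`."""
    def decorator(func):
        store = {}  # args -> (timestamp, value)

        @wraps(func)
        def wrapper(*args):
            now = clock()
            hit = store.get(args)
            if hit is not None and now - hit[0] < ttl_seconds:
                return hit[1]  # fresh cached value
            value = func(*args)
            store[args] = (now, value)
            return value

        return wrapper
    return decorator
```

The injectable `clock` parameter makes expiry easy to test without waiting in real time.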

Case Study: Comparing Monitoring and Observability Results

A software development team implemented both monitoring and observability to track the performance of a new mobile application. Monitoring provided insights into application crashes and average response times. Observability, however, uncovered the root cause of these issues by identifying slow database queries and network bottlenecks. This analysis enabled the team to optimize the application’s architecture, leading to a significant improvement in both crash rates and user experience compared to previous approaches relying solely on monitoring.

Comparison: Monitoring and Observability for a Business Problem

A financial institution needed to ensure the reliability of its online banking platform. Monitoring tools tracked transaction throughput and error rates. Observability provided insights into the impact of specific user actions on the platform, allowing them to identify and mitigate risks related to unusual transaction patterns. This combination enabled the bank to maintain high reliability, protect customer data, and prevent fraudulent activities.
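As a very simple illustration of spotting unusual transaction patterns, the sketch below flags amounts that lie more than a chosen number of standard deviations from a historical mean (a z-score test). Real fraud and risk detection uses far richer features and models; the threshold and data here are hypothetical.

```python
from statistics import mean, pstdev


def flag_unusual(history, new_amounts, z_threshold=3.0):
    """Flag amounts more than `z_threshold` standard deviations from the historical mean."""
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        # No variation in history: anything different from it is unusual.
        return [a for a in new_amounts if a != mu]
    return [a for a in new_amounts if abs(a - mu) / sigma > z_threshold]
```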

Monitoring focused on identifying issues, while observability enabled understanding the "why" behind those issues, resulting in more effective solutions.

Future Trends

The landscape of monitoring and observability is constantly evolving, driven by advancements in technology and the increasing complexity of modern systems. Predicting the future involves examining current trends and anticipating how they will shape the field. The future of these practices will be characterized by increased automation, deeper integration with AI/ML, and a greater emphasis on proactive rather than reactive approaches.

Future of Monitoring Technologies

Monitoring technologies are poised for significant advancements, driven by the need for faster, more comprehensive, and automated data collection and analysis. Cloud-native monitoring solutions will continue to gain traction, offering optimized performance for distributed systems. The rise of serverless computing necessitates new monitoring approaches focused on function-level visibility.

Future of Observability Technologies

Observability technologies will continue to evolve towards a more holistic view of system behavior. Emphasis will shift from simply identifying problems to understanding why problems occur, enabling more effective root cause analysis. This includes a greater emphasis on contextual understanding, leveraging AI and machine learning to automatically correlate events and pinpoint anomalies.

Emerging Trends in Monitoring and Observability

Several key trends are shaping the future of monitoring and observability. These include:

- Increased Automation: Tools and platforms will increasingly automate the monitoring and analysis process, reducing human intervention and enabling faster response times to incidents.

- AI/ML Integration: Artificial intelligence and machine learning will play a more prominent role in identifying patterns, predicting failures, and providing insights into system behavior, enabling proactive maintenance.

- Contextual Observability: Systems will be monitored not just in isolation, but in the context of their environment, including dependencies and external factors. This holistic view will enable a deeper understanding of the root causes of issues.

- Decentralized Monitoring: Distributed systems require monitoring solutions that can scale and adapt to the decentralized nature of these architectures. This necessitates a distributed approach to data collection and analysis.

- Real-time Observability: The ability to observe and respond to events in real-time will be crucial for maintaining system stability and user experience. This demands sophisticated monitoring tools capable of handling high volumes of data.

Evolution of Monitoring and Observability

Monitoring and observability will evolve from reactive, problem-solving approaches to proactive, predictive models. The emphasis will shift from merely detecting issues to understanding the underlying reasons and anticipating potential problems before they impact users. This shift requires a deeper understanding of the system’s behavior, leveraging contextual information, and utilizing machine learning for predictive analysis.

Future of Monitoring and Observability in Specific Technologies

The evolution of monitoring and observability is intricately linked to advancements in specific technologies.

- Cloud-native environments: Monitoring solutions will need to seamlessly integrate with cloud platforms, providing granular visibility into containerized applications, microservices, and serverless functions. For example, Prometheus and Grafana are already widely used to monitor containerized workloads such as applications running on Kubernetes, supporting observability throughout the lifecycle of cloud-based applications.

- Serverless architectures: Monitoring and observability for serverless functions will focus on function-level metrics and insights, rather than relying on traditional server-centric monitoring approaches. Tools capable of analyzing function invocation, execution time, and resource consumption will be critical.

- AI/ML-driven systems: Observability will be crucial for understanding the behavior of complex AI/ML models. Monitoring and observability solutions will need to capture the data required to interpret model behavior, identify anomalies, and ensure model performance and integrity.
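Function-level visibility for serverless code can be illustrated with a decorator that records invocation count, error count, and execution time per function. The sketch below keeps these in memory and wraps a hypothetical handler shaped like AWS Lambda’s `(event, context)` signature; actual platforms collect and export such metrics themselves, so this only shows which signals are meant.

```python
import time
from collections import defaultdict
from functools import wraps

# Hypothetical in-memory metric stores keyed by function name.
invocations = defaultdict(int)
errors = defaultdict(int)
durations_ms = defaultdict(list)


def instrumented(func):
    """Record invocation count, error count, and execution time for each call."""
    @wraps(func)
    def wrapper(*args, **kwargs):
        name = func.__name__
        invocations[name] += 1
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        except Exception:
            errors[name] += 1
            raise  # record the failure but let the platform see the error
        finally:
            durations_ms[name].append((time.perf_counter() - start) * 1000)
    return wrapper


@instrumented
def resize_image(event, context=None):
    """Hypothetical serverless handler used to exercise the decorator."""
    if event.get("bad"):
        raise ValueError("unsupported format")
    return {"status": 200}
```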

Ultimate Conclusion

In conclusion, while monitoring provides a snapshot of system health, observability offers a more holistic view of system behavior. By combining both approaches, organizations can build robust and resilient systems capable of adapting to dynamic environments. This deeper understanding leads to improved performance, reduced downtime, and ultimately, a more efficient and effective software infrastructure.

FAQ Resource

What are some common metrics monitored in software systems?

Common metrics include CPU utilization, memory usage, network traffic, response times, error rates, and transaction counts. These metrics provide insights into the system’s current state and performance.

How does observability differ from monitoring in terms of data analysis?

Monitoring typically analyzes metrics for immediate issues, whereas observability uses a broader range of data (logs, metrics, and traces) to understand the underlying reasons for system behavior. This allows for root cause analysis and predictive maintenance.

What are some examples of observability tools?

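Observability tools covered above include Jaeger, Zipkin, and New Relic. Prometheus, Grafana, and Datadog, described above as monitoring tools, are also commonly used as part of an observability stack, each offering different functionalities and strengths in data collection and analysis.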

What is the role of logs in observability?

Logs provide valuable context and details about events that occur within a system. They are essential for understanding the sequence of actions that led to a particular problem or behavior.