Choosing the right database is a critical decision in modern application development, and the selection often boils down to a choice between relational and NoSQL paradigms. Aurora Serverless and DynamoDB, both powerful offerings from AWS, represent these distinct approaches. Understanding the nuances of when to use Aurora Serverless vs DynamoDB is essential for optimizing performance, scalability, and cost-efficiency in your applications.

This analysis delves into the core functionalities, architectural differences, and suitability of each database for various use cases, providing a comprehensive guide to making informed decisions.

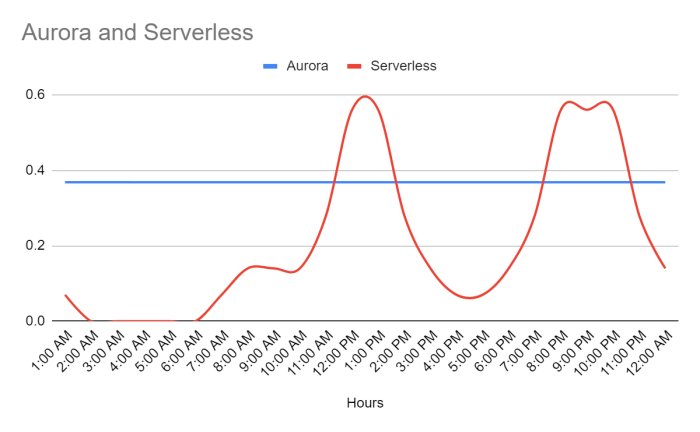

Aurora Serverless, a relational database, offers the flexibility of scaling compute resources based on demand, making it ideal for unpredictable workloads. In contrast, DynamoDB, a NoSQL database, excels in handling high-volume, low-latency operations with its inherent scalability and key-value or document-oriented data models. This comparative study explores the advantages and disadvantages of each, helping you navigate the complexities of data storage and access patterns, performance characteristics, cost considerations, and application requirements to determine the optimal solution for your specific needs.

Overview of Aurora Serverless and DynamoDB

This section provides a comparative overview of Amazon Aurora Serverless and Amazon DynamoDB, two database services offered by Amazon Web Services (AWS). The focus will be on their core functionalities, key characteristics, and architectural differences, providing a foundational understanding for informed decision-making when selecting a database solution.

Core Functionalities of Aurora Serverless

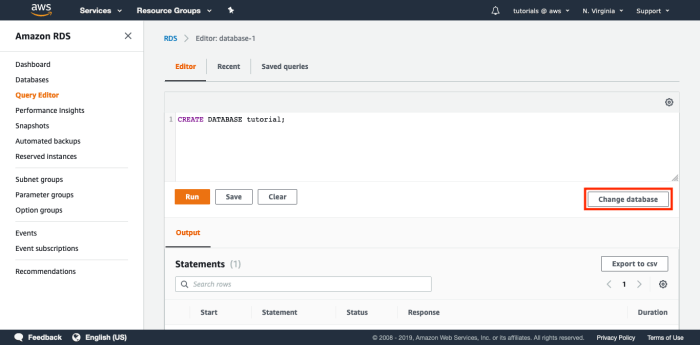

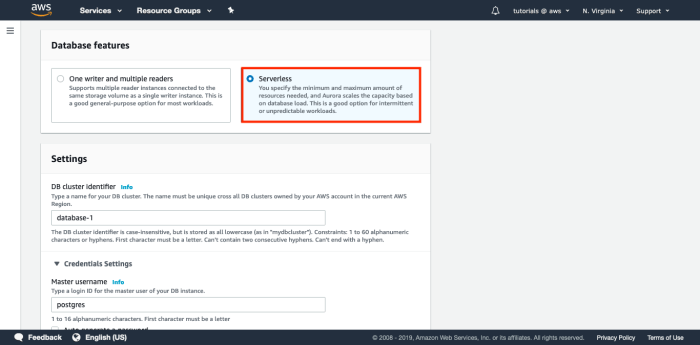

Aurora Serverless is a version of Amazon Aurora that automatically starts up, shuts down, and scales capacity up or down based on an application’s needs. It offers a cost-effective solution for infrequently used applications or workloads with unpredictable demand.

- Automatic Scaling: Aurora Serverless automatically adjusts database capacity based on the workload. This eliminates the need for manual capacity provisioning and management. Scaling occurs in increments, allowing the database to handle fluctuations in demand efficiently.

- Pay-per-use Pricing: Users are charged only for the database capacity consumed. This pricing model is advantageous for workloads with intermittent or variable usage patterns, reducing operational costs compared to provisioned database instances.

- Compatibility: Aurora Serverless supports MySQL and PostgreSQL database engines, allowing users to leverage their existing SQL skills and applications with minimal modifications.

- High Availability: Aurora Serverless benefits from the inherent high availability features of Aurora, including automated failover and data replication across multiple Availability Zones.

- Ease of Use: Setting up and managing Aurora Serverless is simplified, with AWS handling tasks such as capacity management and patching. This allows developers to focus on application development rather than database administration.

Key Characteristics of DynamoDB

DynamoDB is a fully managed NoSQL database service that provides high performance, scalability, and availability. It is designed for applications that require low latency and can handle large volumes of data.

- NoSQL Data Model: DynamoDB uses a key-value and document data model, which provides flexibility and scalability for storing and retrieving data. This contrasts with the relational model used by Aurora.

- Scalability: DynamoDB can automatically scale to handle virtually any workload, providing consistent performance even under heavy load. It achieves this through a distributed architecture.

- Performance: DynamoDB offers predictable, low-latency performance, enabling fast data access and retrieval. It is designed for applications requiring millisecond response times.

- Fully Managed: AWS manages all the underlying infrastructure, including hardware provisioning, software patching, and backups. This simplifies database administration and reduces operational overhead.

- Pay-per-request Pricing: Users are charged based on the number of read and write requests made to the database, making it cost-effective for applications with varying traffic patterns.

- Transactions: DynamoDB supports ACID (Atomicity, Consistency, Isolation, Durability) transactions, ensuring data integrity in applications that require complex operations.

Architectural Differences Between Aurora Serverless and DynamoDB

The architectural differences between Aurora Serverless and DynamoDB reflect their different design goals and use cases. These differences are crucial in determining which database is best suited for a particular application.

- Data Model: Aurora Serverless is a relational database that uses SQL, while DynamoDB is a NoSQL database that uses a key-value and document data model. This difference impacts how data is structured and accessed.

- Scaling Approach: Aurora Serverless scales compute and memory resources independently, allowing for fine-grained adjustments based on workload demands. DynamoDB scales storage and throughput automatically, adapting to changes in request rates.

- Storage: Aurora Serverless uses a distributed, fault-tolerant storage system, offering high durability and availability. DynamoDB stores data on SSD storage and replicates it across multiple Availability Zones.

- Querying: Aurora Serverless supports complex SQL queries, enabling sophisticated data analysis. DynamoDB offers flexible querying capabilities through its APIs and secondary indexes, optimized for fast data retrieval.

- Consistency: Aurora Serverless provides strong consistency by default. DynamoDB offers configurable consistency options, allowing users to choose between strong consistency and eventual consistency to optimize for performance and cost.

- Transactions: Aurora Serverless supports full ACID transactions. DynamoDB supports ACID transactions for operations within a single table or across multiple tables using the DynamoDB Transactions feature.

Data Storage and Access Patterns

Understanding the distinct data storage and access patterns of Aurora Serverless and DynamoDB is crucial for selecting the database that best aligns with an application’s requirements. These patterns dictate how data is structured, retrieved, and managed, directly impacting performance, scalability, and overall system design.

Aurora Serverless Relational Data Storage

Aurora Serverless, being a relational database service, stores data using the structured principles of SQL databases. This model is built upon tables, composed of rows and columns, enforcing data integrity through predefined schemas and relationships.Data is organized within tables, with each table representing a specific entity or object, such as “Customers” or “Orders.” Each row within a table represents a single instance of that entity, and columns define the attributes or properties of the entity.

Relationships between tables are established using primary and foreign keys, ensuring data consistency and enabling complex queries that span multiple tables. Indexing, a critical performance optimization technique, is employed to accelerate data retrieval by creating sorted pointers to specific columns within the tables. This allows for faster lookups and filtering operations. The underlying storage engine for Aurora Serverless is often a variant of MySQL or PostgreSQL, depending on the chosen compatibility.

DynamoDB NoSQL Data Model

DynamoDB, a NoSQL database, adopts a flexible data model centered around key-value and document structures, diverging from the rigid schema of relational databases. This approach offers significant advantages in terms of scalability and agility, particularly for applications with evolving data requirements or high read/write throughput demands.Data in DynamoDB is stored as items, which are collections of attributes. Each item must have a primary key, a unique identifier that allows for efficient retrieval.

Attributes can be simple data types like strings, numbers, and booleans, or more complex structures like lists and maps, allowing for the representation of nested data. DynamoDB’s schema-less design enables the addition of new attributes to items without requiring schema migrations, providing greater flexibility. Secondary indexes are used to facilitate queries based on attributes other than the primary key. These indexes create alternative lookup paths, improving query performance for non-primary key-based searches.

DynamoDB uses a partition-based storage system, where data is distributed across multiple partitions to achieve horizontal scalability. The system automatically manages data distribution, ensuring high availability and performance under heavy load.

Scenario Suitability

The suitability of Aurora Serverless and DynamoDB varies based on application characteristics. Here are examples of scenarios where each database type excels:

- Aurora Serverless:

- Applications requiring complex queries involving joins, aggregations, and transactions.

- Systems where data integrity and consistency are paramount, such as financial applications or e-commerce platforms.

- Projects with well-defined data schemas and predictable data relationships.

- Web applications with a moderate and variable traffic load, benefiting from the pay-per-use model. For example, a blog platform where content structure and relationships between posts, authors, and comments are critical, and the traffic fluctuates.

- DynamoDB:

- Applications with high read/write throughput requirements, such as social media platforms or gaming services.

- Systems where data schema is flexible and evolves frequently, like content management systems or IoT applications.

- Use cases demanding horizontal scalability and high availability, with the ability to handle massive amounts of data.

- Applications with a need for real-time data processing and low latency, like user profile management or session management. For example, a mobile game where player data (scores, inventory, progress) needs to be quickly accessed and updated, and the database needs to scale to handle a large and growing player base.

Scalability and Performance Characteristics

Understanding the scalability and performance characteristics of Aurora Serverless and DynamoDB is crucial for selecting the right database for a specific application. These characteristics dictate how well a database can handle increasing workloads, the speed at which it processes requests, and the associated costs. Both databases offer distinct approaches to scaling and performance optimization, which will be examined in detail.

Scaling Mechanisms of Aurora Serverless

Aurora Serverless employs an on-demand scaling mechanism, automatically adjusting resources based on the current workload. This mechanism allows for efficient resource allocation and cost optimization.

- Capacity Units: Aurora Serverless operates on the concept of Aurora Capacity Units (ACUs). An ACU represents a combination of processing power and memory capacity. The database automatically scales up or down the number of ACUs based on the workload demands.

- Scaling Triggers: Scaling is triggered by several factors, including CPU utilization, connection count, and memory usage. When these metrics exceed predefined thresholds, Aurora Serverless initiates scaling operations.

- Scaling Time: Scaling up is typically faster than scaling down. Scaling up can occur within seconds, while scaling down might take longer to ensure minimal disruption to ongoing operations.

- Capacity Planning: While Aurora Serverless handles scaling automatically, it’s still beneficial to understand the application’s expected load and the typical resource requirements to configure the initial minimum and maximum ACU settings.

DynamoDB’s Auto-Scaling Capabilities

DynamoDB’s auto-scaling capabilities are integral to its design, offering a highly scalable and efficient solution for managing varying workloads. This auto-scaling functionality is designed to provide seamless performance and cost optimization.

- Provisioned Capacity: DynamoDB’s auto-scaling relies on the concept of provisioned capacity, where users define the read and write capacity units (RCUs and WCUs) required for their tables.

- Target Utilization: Users can configure auto-scaling to maintain a target utilization level for read and write capacity. DynamoDB adjusts the provisioned capacity based on actual traffic and the target utilization.

- Scaling Policies: Auto-scaling policies define how DynamoDB responds to changes in workload. These policies specify the minimum and maximum capacity units, as well as the rate at which capacity should be adjusted.

- Adaptive Capacity: DynamoDB also incorporates adaptive capacity, which automatically adjusts capacity in response to sudden bursts of traffic or uneven key access patterns. This feature helps to prevent throttling and maintain consistent performance.

Performance Comparison

The performance of Aurora Serverless and DynamoDB varies depending on the workload characteristics, data access patterns, and specific configurations. The following table presents a comparative overview of key performance metrics. It is essential to remember that actual performance can vary based on the application, data volume, and specific configurations.

| Metric | Aurora Serverless | DynamoDB | Notes |

|---|---|---|---|

| Read Throughput | Can scale rapidly, but initial cold start can impact read performance. | High read throughput with provisioned capacity; auto-scaling adapts to traffic. | Read throughput is highly dependent on the number of ACUs (Aurora) or RCUs (DynamoDB) provisioned. |

| Write Throughput | Write performance can be affected during scaling operations. | High write throughput with provisioned capacity; auto-scaling adapts to traffic. | Write throughput is dependent on the number of ACUs (Aurora) or WCUs (DynamoDB) provisioned. |

| Latency | Variable latency, especially during scaling events. Cold starts can impact initial query response times. | Low and predictable latency, especially with provisioned capacity and appropriate data modeling. | Latency is a critical factor, and DynamoDB generally offers lower latency, especially with provisioned capacity. |

| Cost | Pay-per-use model based on ACUs consumed, storage, and data transfer. | Pay-per-use model based on provisioned capacity, storage, data transfer, and optional features (e.g., on-demand backup). | Cost depends on workload and resource consumption. DynamoDB can be more cost-effective for highly variable workloads with appropriate auto-scaling configuration. Aurora Serverless can be cost-effective for workloads with infrequent traffic. |

Cost Considerations

Understanding the cost implications of Aurora Serverless and DynamoDB is crucial for making informed decisions about database selection. The optimal choice often hinges on a careful evaluation of pricing models, considering factors such as storage, compute, data transfer, and the overall total cost of ownership (TCO). This section provides a detailed analysis of the cost structures associated with each database, enabling a comparative assessment.

Pricing Models for Aurora Serverless

Aurora Serverless utilizes a pay-per-use model, where users are charged based on the database capacity consumed and the storage used. The compute capacity is measured in Aurora Capacity Units (ACUs), which represent a combination of CPU and memory.

- Compute Costs: Aurora Serverless charges based on the number of ACUs used per hour. The pricing varies depending on the AWS region and the database engine (e.g., MySQL, PostgreSQL). The database automatically scales the ACUs based on workload demands, meaning you only pay for the resources utilized. For example, if a database is configured to use 1 ACU and remains idle for an hour, you are charged for 1 ACU-hour.

If the database scales up to 4 ACUs for half an hour and then back to 1 ACU for the other half, you are charged for (4 ACUs

– 0.5 hours) + (1 ACU

– 0.5 hours) = 2.5 ACU-hours. - Storage Costs: Storage costs are calculated based on the amount of data stored in the database per month. The pricing per GB-month also varies depending on the AWS region. Aurora Serverless automatically manages the storage capacity, and you are billed for the actual storage used.

- Data Transfer Costs: Data transfer charges apply for data transferred out of the AWS region. Data transfer within the same Availability Zone is typically free. Data transfer to other AWS regions or to the internet incurs charges based on the volume of data transferred.

Cost Structure of DynamoDB

DynamoDB’s pricing is based on a consumption-based model that primarily involves provisioned or on-demand capacity, storage, and data transfer.

- Provisioned Capacity: With provisioned capacity, you specify the read and write capacity units (RCUs and WCUs) required for your application. You are charged for the provisioned capacity, regardless of actual usage. If your workload exceeds the provisioned capacity, DynamoDB throttles requests.

- On-Demand Capacity: On-demand capacity offers a pay-per-request pricing model, where you pay only for the reads and writes that your application performs. This model is suitable for unpredictable workloads or applications with infrequent database access.

- Storage Costs: DynamoDB charges for the storage used by your tables, based on the amount of data stored per GB-month.

- Data Transfer Costs: Similar to Aurora Serverless, data transfer charges apply for data transferred out of the AWS region. Data transfer within the same Availability Zone is free.

Total Cost of Ownership (TCO) Comparison

Comparing the TCO of Aurora Serverless and DynamoDB requires considering storage, compute, and data transfer costs. The following table illustrates a simplified comparison, using hypothetical scenarios and approximate pricing, to highlight the differences. Actual costs may vary based on the specific region, data volume, and workload characteristics.

| Cost Component | Aurora Serverless | DynamoDB (Provisioned) | DynamoDB (On-Demand) |

|---|---|---|---|

| Storage Cost (per GB-month) | $0.10 (example) | $0.25 (example) | $0.25 (example) |

| Compute Cost (per ACU-hour / RCU/WCU) | $0.06 (example) | Based on Provisioned RCUs/WCUs (example) | Based on Read/Write Requests (example) |

| Data Transfer Cost (per GB) | $0.09 (example) | $0.09 (example) | $0.09 (example) |

| Total Cost (Monthly, estimated for 100GB Storage, moderate traffic) | Based on ACU usage, approximately $150 | Based on Provisioned RCUs/WCUs, approximately $200 | Highly variable based on request volume, approximately $250 |

The example above shows that for a hypothetical moderate traffic scenario, Aurora Serverless could be more cost-effective than provisioned DynamoDB due to its auto-scaling capabilities. However, with DynamoDB’s on-demand model, for applications with unpredictable or spiky workloads, it might be more cost-effective than both Aurora Serverless and provisioned DynamoDB, as you only pay for the resources consumed.

It is important to perform a thorough cost analysis, considering your specific workload characteristics and usage patterns, to determine the most cost-effective solution. Tools like the AWS Pricing Calculator can assist in estimating costs for both Aurora Serverless and DynamoDB.

Use Cases for Aurora Serverless

Aurora Serverless is particularly well-suited for applications characterized by fluctuating workloads, unpredictable traffic patterns, and scenarios where cost optimization is a primary concern. Its on-demand scaling capabilities allow it to efficiently handle varying demands without requiring constant resource provisioning. This makes it a compelling choice for a range of applications, from development and testing environments to production workloads.

Applications Benefiting from Aurora Serverless

Aurora Serverless excels in situations where database usage is sporadic or highly variable. Several application types can significantly benefit from its architecture.

- Development and Testing Environments: These environments often require databases that are only actively used during specific phases of the development lifecycle. The ability to automatically start, stop, and scale resources based on demand provides substantial cost savings compared to continuously running instances.

- Web and Mobile Applications with Unpredictable Traffic: Applications that experience fluctuating user traffic, such as e-commerce sites during sales events or news websites during breaking news, can leverage Aurora Serverless to automatically scale resources to handle peak loads without over-provisioning.

- Applications with Infrequent or Periodic Use: Applications accessed infrequently, such as internal reporting tools or infrequently used data analysis platforms, can minimize costs by only consuming resources when active. This is especially beneficial for applications with seasonal usage patterns.

- Low-Traffic Applications: For applications with a small user base or relatively low traffic volume, Aurora Serverless offers a cost-effective solution. The pay-per-use model aligns with the actual resource consumption, making it more economical than provisioning a fixed-size database instance.

- Multi-Tenant Applications: Aurora Serverless can be used to provide individual databases for each tenant in a multi-tenant application. This allows for efficient resource allocation and isolation, ensuring each tenant’s database resources are scaled based on their specific needs.

Optimal Scenario for Aurora Serverless

A scenario that highlights the optimal use of Aurora Serverless involves a web application that provides a platform for online courses. The application experiences significant traffic spikes during the launch of new courses and promotional periods. These spikes are unpredictable and vary in intensity.

Example Scenario: A fictional company, “EduSphere,” launches an online learning platform. They use Aurora Serverless to manage their course catalog, user accounts, and course enrollment data. During the initial launch, they anticipate a surge in traffic. Aurora Serverless automatically scales the database resources to handle the increased load. Once the initial launch period subsides, the database automatically scales down, reducing operational costs.

EduSphere regularly runs promotional campaigns that result in temporary traffic increases. Aurora Serverless efficiently handles these spikes without requiring manual intervention or over-provisioning. Furthermore, the development team uses Aurora Serverless for their staging environment. They can spin up a database instance only when needed, and quickly test new features. The staging environment database scales automatically according to testing requirements.

EduSphere’s database costs are significantly lower than they would be if they used a fixed-size database instance.

Use Cases for DynamoDB

DynamoDB, a fully managed NoSQL database service, excels in scenarios demanding high performance, scalability, and availability. Its schema-less design and key-value/document data model make it particularly well-suited for specific application types. Understanding these use cases is crucial for effectively leveraging DynamoDB’s capabilities.

Applications Benefiting from DynamoDB’s Features

DynamoDB’s architectural advantages translate to significant benefits in various application domains. The following list Artikels applications that typically gain the most from DynamoDB’s characteristics.

- Mobile Applications: DynamoDB’s ability to handle large volumes of read/write operations, coupled with its low latency, makes it ideal for storing and retrieving user data, session information, and application-specific data in mobile applications. The automatic scaling ensures that the database can accommodate sudden spikes in user activity, such as during the launch of a new feature or a promotional campaign.

- Gaming: Real-time gaming applications require a database that can quickly update player profiles, game state, and leaderboards. DynamoDB’s speed and scalability are essential for providing a smooth gaming experience. For instance, in a massively multiplayer online role-playing game (MMORPG), DynamoDB can efficiently manage millions of player profiles, inventory data, and game world information, ensuring quick data access and update speeds.

- E-commerce: E-commerce platforms benefit from DynamoDB’s ability to handle high traffic volumes and manage product catalogs, user profiles, and shopping carts. The service’s high availability is crucial for ensuring that users can always access the platform and make purchases. For example, during a flash sale, DynamoDB can handle a massive increase in concurrent users and transactions without performance degradation.

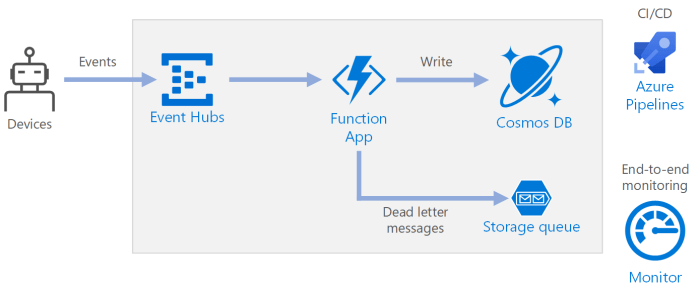

- Internet of Things (IoT): IoT applications generate a massive amount of data from connected devices. DynamoDB’s ability to ingest and store this data at scale is essential for processing sensor readings, device status updates, and other IoT-related information. This data can be used for real-time analytics, device monitoring, and predictive maintenance.

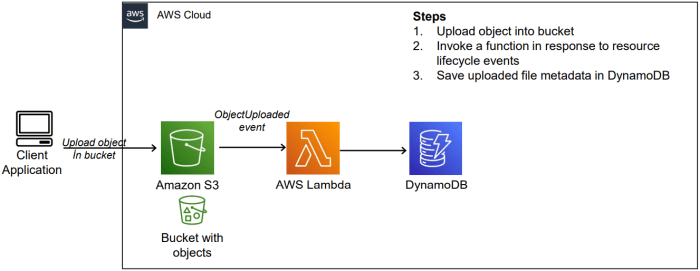

- Serverless Applications: DynamoDB integrates seamlessly with serverless computing platforms like AWS Lambda. This combination allows developers to build highly scalable and cost-effective applications without managing servers. For instance, a serverless application that processes user uploads can use DynamoDB to store metadata about the uploaded files, such as file names, sizes, and locations.

Scenario: DynamoDB as the Optimal Choice

Consider a social media platform with millions of users, each generating a constant stream of posts, comments, likes, and other interactions. The platform requires a database that can handle these high-volume, write-intensive workloads while maintaining low latency for a responsive user experience. The platform also needs to be highly available to ensure continuous operation.

Example Scenario for DynamoDB

A social media platform leverages DynamoDB to store user data, posts, comments, and relationships. The platform uses DynamoDB’s key-value and document data model to store user profiles and post content, respectively. The high read/write throughput capabilities of DynamoDB are crucial for quickly displaying user feeds, updating user profiles, and managing real-time interactions. Auto-scaling ensures that the database can handle sudden spikes in user activity, such as during major news events or viral trends. The platform’s data is stored in multiple Availability Zones to ensure high availability and data durability, mitigating the risk of data loss. DynamoDB’s eventual consistency model, while providing high performance, is carefully managed to avoid inconsistencies.

Application Requirements and Data Models

The selection of either Aurora Serverless or DynamoDB is profoundly influenced by the specific requirements of an application and the nature of its data. Understanding these factors is crucial for making an informed decision that optimizes performance, cost, and scalability. The data model, in particular, dictates how data is structured and accessed, playing a pivotal role in determining the suitability of each database.

Factors Influencing Database Selection

Several key factors must be considered when choosing between Aurora Serverless and DynamoDB. These factors collectively shape the application’s architecture and the database’s role within it.

- Data Volume and Growth Rate: The anticipated amount of data and its projected growth rate are critical. High-volume, rapidly growing datasets may favor DynamoDB’s scalability.

- Read/Write Patterns: Analyzing the balance between read and write operations helps determine the database’s ability to handle the workload. DynamoDB excels in read-heavy scenarios, while Aurora Serverless can be optimized for more balanced workloads.

- Data Consistency Requirements: Applications with stringent consistency needs, such as financial transactions, may benefit from Aurora Serverless’s ACID properties. DynamoDB offers eventual consistency by default, with options for strong consistency.

- Query Complexity: The complexity of queries, including joins and aggregations, influences the choice. Aurora Serverless supports complex SQL queries, whereas DynamoDB’s query capabilities are more limited, typically involving primary key lookups and secondary indexes.

- Application Development Expertise: The development team’s familiarity with SQL databases (Aurora Serverless) or NoSQL databases (DynamoDB) can affect development speed and efficiency.

- Cost Constraints: Budgetary considerations play a crucial role. Aurora Serverless offers pay-per-use pricing, which can be advantageous for fluctuating workloads. DynamoDB also has flexible pricing options, including on-demand and provisioned capacity.

- Compliance and Security: Regulatory requirements and security policies may influence the choice. Both databases offer robust security features, but the specific needs of the application must be considered.

Impact of Data Models on Database Selection

The structure of the data significantly impacts the performance and efficiency of both Aurora Serverless and DynamoDB. The chosen data model must align with the application’s access patterns and the database’s capabilities.

- Relational Data Models (Aurora Serverless): Aurora Serverless excels with relational data models, which organize data into tables with defined relationships. This model supports complex queries, joins, and transactions, making it suitable for applications with structured data and complex relationships.

- Document Data Models (DynamoDB): DynamoDB is well-suited for document data models, where data is stored in flexible, schema-less documents. This model is ideal for applications with semi-structured data and evolving schemas, allowing for easier adaptation to changing requirements.

- Key-Value Data Models (DynamoDB): DynamoDB’s key-value store allows for fast retrieval of data based on a primary key. This model is efficient for simple lookups and applications that require high throughput.

- Graph Data Models (Considerations): While not directly supported, graph-like relationships can be modeled in DynamoDB using techniques such as adjacency lists. Aurora Serverless, with its SQL capabilities, can handle graph-like data using recursive queries.

Examples: Data Structure Driving Database Choice

The following examples illustrate how data structure influences the choice between Aurora Serverless and DynamoDB.

- E-commerce Platform: An e-commerce platform that manages product catalogs, user profiles, and order history. The product catalog, with its structured data (e.g., product name, description, price, categories) and complex relationships (e.g., products belonging to categories), may be best suited for Aurora Serverless. User profiles, with less structured data (e.g., address, purchase history) and high read/write throughput, might be more suitable for DynamoDB.

Order history could potentially be managed in DynamoDB for scalability or in Aurora Serverless for complex reporting and data integrity.

- Social Media Application: A social media application managing user posts, comments, and relationships (e.g., followers). The relationships between users and their posts, as well as the need for flexible data structures for user profiles and posts, make DynamoDB a strong candidate. Aurora Serverless could be used for specific reporting or analytics tasks that require complex SQL queries on aggregated social data.

- Financial Transaction Processing: A financial application processing transactions, where data integrity and consistency are paramount. Aurora Serverless, with its ACID properties, would be a better choice for ensuring the reliability of transactions. The structured nature of financial data and the need for complex reporting also support the use of Aurora Serverless.

- IoT Device Data Storage: An IoT application collecting sensor data from numerous devices, where high throughput and scalability are essential. DynamoDB’s ability to handle high volumes of write operations and its flexibility in storing time-series data make it an ideal choice. The schema-less nature of the data and the need for real-time analysis support the use of DynamoDB.

Migration Considerations

Migrating between database systems, especially when considering the transition to cloud-native solutions like Aurora Serverless and DynamoDB, demands careful planning and execution. The process involves assessing existing data models, understanding the nuances of each database’s architecture, and choosing the appropriate migration strategy to minimize downtime and data loss. Successful migration hinges on a clear understanding of the target database’s capabilities and limitations, alongside a robust testing and validation plan.

Migrating from a Traditional Database to Aurora Serverless

Migrating to Aurora Serverless typically involves a multi-step process, aiming to minimize disruption while ensuring data integrity. This process frequently leverages the existing familiarity with relational database concepts, offering a smoother transition compared to NoSQL databases like DynamoDB.The migration process often includes the following steps:

- Assessment and Planning: This phase involves a thorough analysis of the existing database schema, data volume, and application requirements. The assessment should identify any potential compatibility issues, such as unsupported data types or features in Aurora Serverless. Performance benchmarks are established to measure the before-and-after performance.

- Schema Conversion: The existing database schema must be converted to be compatible with Aurora Serverless. This might involve data type mapping, index adjustments, and potentially, schema modifications to optimize performance and resource utilization. Tools like AWS Schema Conversion Tool (SCT) can automate parts of this process.

- Data Migration: The data migration process typically involves extracting data from the source database, transforming it as needed, and loading it into Aurora Serverless. The method chosen depends on the data volume and downtime tolerance. Options include:

- Full Load: Suitable for smaller datasets or periods with less strict downtime requirements. This involves copying the entire dataset at once.

- Incremental Load: This approach utilizes change data capture (CDC) mechanisms to capture changes in the source database and apply them to Aurora Serverless in near real-time. It minimizes downtime and is suitable for large datasets.

- Testing and Validation: Rigorous testing is crucial to ensure data integrity and application functionality after migration. This includes data validation, performance testing, and application testing to verify that the application operates as expected with the new database.

- Cutover: Once testing is complete and successful, the application is switched to use Aurora Serverless. This can be a phased approach, migrating some application components initially, or a complete cutover, depending on the application architecture.

Migrating Data from a Traditional Database to DynamoDB

Migrating to DynamoDB represents a more significant architectural shift due to its NoSQL nature. This transition requires a deeper understanding of data modeling for a key-value store and a rethinking of how data is accessed.The migration process includes:

- Data Modeling: The first step is to design a data model optimized for DynamoDB. This involves defining primary keys, attributes, and indexing strategies to facilitate efficient data retrieval. The design should consider access patterns and query requirements.

- Schema Transformation: The relational schema needs to be transformed into a suitable DynamoDB schema. This frequently involves denormalization, embedding related data within items to minimize the need for joins, and careful selection of primary keys.

- Data Migration: Data migration methods for DynamoDB include:

- AWS Data Migration Service (DMS): DMS can be used to migrate data from relational databases to DynamoDB. It supports both full and incremental loads, providing flexibility in managing downtime.

- Custom Scripts: For complex transformations or specific data requirements, custom scripts might be necessary. These scripts typically involve reading data from the source database, transforming it, and writing it to DynamoDB.

- AWS Glue: AWS Glue can also be utilized for ETL (Extract, Transform, Load) processes, enabling data transformation and migration to DynamoDB.

- Testing and Validation: Testing focuses on data integrity, performance, and application functionality. Validation ensures that the migrated data conforms to the new data model and that queries are optimized for DynamoDB. Performance testing is crucial to evaluate the scalability and performance of the application with the new data store.

- Application Code Changes: The application code must be modified to interact with DynamoDB. This includes updating database connection details, rewriting queries to use DynamoDB’s API, and adjusting data access patterns.

Challenges and Best Practices for Migrating Between Database Types

Migrating between database types presents unique challenges, necessitating the adoption of best practices to ensure a successful transition. These challenges vary depending on the source and target database, and the migration strategy employed.The challenges include:

- Data Model Differences: The most significant challenge is the difference in data models between relational databases and NoSQL databases like DynamoDB. Relational databases use a schema-based approach, while NoSQL databases are schema-less or have flexible schemas. This requires careful planning and data transformation to ensure that the data is structured efficiently for the target database.

- Data Volume and Downtime: Large datasets require careful consideration of data migration methods to minimize downtime. Incremental migration strategies, such as using CDC, are crucial for minimizing disruption.

- Performance Tuning: Both Aurora Serverless and DynamoDB require performance tuning. This involves optimizing query performance, choosing appropriate indexing strategies, and configuring resources to handle the expected load.

- Application Code Changes: Significant changes to application code are often required to adapt to the new database. This can be time-consuming and require careful testing to ensure that the application functions correctly.

- Cost Optimization: Cloud database services offer various pricing models. Migrating to a new database requires a thorough cost analysis to optimize resource utilization and minimize costs.

Best practices include:

- Thorough Planning: Develop a detailed migration plan, including timelines, resource allocation, and risk mitigation strategies.

- Pilot Projects: Conduct pilot projects with a subset of data or application components to test the migration process and identify potential issues before migrating the entire system.

- Automated Tools: Utilize automated tools, such as AWS SCT and DMS, to streamline the migration process and reduce manual effort.

- Data Validation: Implement robust data validation processes to ensure data integrity after migration.

- Performance Testing: Conduct comprehensive performance testing to optimize query performance and ensure that the application can handle the expected load.

- Monitoring and Optimization: Implement monitoring and alerting to track performance and identify any potential issues after migration. Continuously optimize the database configuration and application code to improve performance and reduce costs.

Security and Compliance

Database security and compliance are critical considerations when selecting a database solution. Both Aurora Serverless and DynamoDB offer robust security features and support various compliance standards, but their approaches and capabilities differ. Understanding these differences is crucial for aligning database choices with specific security requirements and regulatory frameworks.

Security Features in Aurora Serverless

Aurora Serverless leverages the security features inherent in the underlying Amazon Relational Database Service (RDS) infrastructure. These features are designed to protect data both in transit and at rest.

- Encryption: Aurora Serverless supports encryption at rest using AWS Key Management Service (KMS) managed keys. Data is automatically encrypted when stored on disk. Additionally, data in transit is encrypted using Secure Sockets Layer (SSL) or Transport Layer Security (TLS) protocols. This ensures that data remains confidential during communication between the client and the database.

- Network Isolation: Aurora Serverless can be deployed within a Virtual Private Cloud (VPC). This allows for network isolation, providing a secure environment where only authorized resources can access the database. By controlling network access through security groups and network access control lists (ACLs), organizations can limit exposure to potential threats.

- Access Control: Aurora Serverless integrates with AWS Identity and Access Management (IAM) for user authentication and authorization. IAM policies can be defined to control access to database resources, such as creating, modifying, or deleting database instances. This granular control allows for implementing the principle of least privilege.

- Auditing and Monitoring: Aurora Serverless provides auditing capabilities through database logs, such as the general query log, slow query log, and error log. These logs can be used to monitor database activity and identify potential security incidents. Furthermore, Aurora Serverless integrates with Amazon CloudWatch for monitoring performance metrics and setting up alarms for anomalous behavior.

- Data Masking: Data masking features are available, enabling the obfuscation of sensitive data within the database. This allows developers and other authorized users to work with the database without revealing the original data.

Security Measures in DynamoDB

DynamoDB, as a NoSQL database service, provides a different set of security features tailored to its architecture and operational model. These features are designed to ensure data confidentiality, integrity, and availability within a highly scalable and distributed environment.

- Encryption: DynamoDB offers encryption at rest using KMS managed keys, similar to Aurora Serverless. This encryption is enabled by default for new tables. DynamoDB also supports encryption in transit via HTTPS.

- Access Control: DynamoDB integrates with IAM for authentication and authorization. IAM policies control access to DynamoDB tables and their data. Fine-grained access control is possible, allowing permissions to be granted at the table, item, or attribute level.

- Network Isolation: DynamoDB is accessed through the AWS network. While direct VPC integration isn’t available in the same way as Aurora Serverless, security best practices recommend utilizing VPC endpoints for DynamoDB to improve network security and reduce data transfer costs.

- Auditing and Monitoring: DynamoDB integrates with AWS CloudTrail for auditing API calls made to DynamoDB. CloudTrail logs all actions performed on DynamoDB resources, providing a comprehensive audit trail. Additionally, DynamoDB integrates with CloudWatch for monitoring performance metrics, setting alarms, and analyzing logs.

- Data Backup and Restore: DynamoDB offers point-in-time recovery (PITR) and on-demand backups for data protection. PITR allows for restoring tables to a specific point in time, while on-demand backups provide a way to create consistent backups for data recovery and archival purposes.

Compliance Certifications and Standards Supported

Both Aurora Serverless and DynamoDB are designed to meet various compliance requirements. The specific certifications and standards supported can influence database selection based on the regulatory environment.

- Aurora Serverless: Aurora Serverless, being built on RDS, inherits many of the compliance certifications supported by RDS. These typically include:

- SOC 1, SOC 2, and SOC 3

- PCI DSS

- HIPAA

- ISO 27001

- FedRAMP

- DynamoDB: DynamoDB also supports a comprehensive set of compliance certifications:

- SOC 1, SOC 2, and SOC 3

- PCI DSS

- HIPAA

- ISO 27001

- FedRAMP

The specific applicability of these certifications depends on the AWS region and the specific configuration of the database. It is essential to consult the AWS documentation and compliance reports for the most up-to-date information on supported standards and certifications. Organizations should also conduct their own due diligence to ensure that the chosen database solution meets their specific compliance needs.

Monitoring and Management

Effective monitoring and management are crucial for the optimal performance, reliability, and cost-efficiency of any database system. This section will detail the monitoring tools and techniques available for Aurora Serverless and DynamoDB, along with their backup and disaster recovery capabilities. Understanding these aspects is vital for maintaining data integrity and ensuring application availability.

Monitoring Aurora Serverless

Aurora Serverless provides a comprehensive suite of monitoring tools to track performance, identify bottlenecks, and optimize resource utilization.

- Amazon CloudWatch Integration: Aurora Serverless integrates seamlessly with Amazon CloudWatch, offering a centralized platform for monitoring. This integration allows for the collection of various metrics, including:

- CPU utilization: Tracks the percentage of CPU resources being used by the database instance.

- Memory utilization: Monitors the amount of memory consumed by the database.

- Database connections: Displays the number of active connections to the database.

- Read/Write operations: Measures the number of read and write operations per second.

- Latency metrics: Provides insight into the time taken for database operations.

These metrics can be visualized through CloudWatch dashboards, enabling users to create custom views that highlight key performance indicators (KPIs). Alarms can be set based on these metrics to trigger notifications or automated actions when predefined thresholds are exceeded, such as scaling up the database capacity or notifying administrators of potential issues.

- Performance Insights: Aurora provides Performance Insights, a database performance tuning and monitoring tool that helps users analyze database performance. It offers:

- Performance dashboards: Visualizes database load, wait events, and SQL statements.

- SQL query analysis: Identifies the top SQL queries that consume the most resources.

- Performance recommendations: Suggests improvements based on identified performance bottlenecks.

Performance Insights allows users to drill down into specific time periods to diagnose performance issues, providing a granular view of database activity. The tool leverages advanced analytics to identify the root causes of performance problems, enabling users to optimize their database queries and configurations.

- Enhanced Monitoring: Users can enable enhanced monitoring to gain more detailed insights into the operating system and database instance. This feature provides access to additional metrics, such as:

- Disk I/O: Monitors disk read/write operations.

- Network traffic: Tracks network input/output.

- Process-level metrics: Provides detailed information about running processes.

Enhanced monitoring data can be integrated with CloudWatch to provide a more comprehensive view of the database instance’s performance.

Monitoring DynamoDB

DynamoDB offers a robust set of monitoring tools to track performance, usage, and operational health.

- Amazon CloudWatch Integration: DynamoDB is deeply integrated with Amazon CloudWatch, providing comprehensive monitoring capabilities. CloudWatch collects and displays various metrics, including:

- Consumed read capacity units: Measures the number of read capacity units consumed.

- Consumed write capacity units: Measures the number of write capacity units consumed.

- Throttled requests: Tracks the number of requests that were throttled due to insufficient capacity.

- Successful requests: Monitors the number of successful requests.

- Latency: Provides information about the time taken for requests.

These metrics are crucial for understanding table performance and capacity planning. CloudWatch dashboards can be customized to visualize these metrics, allowing users to monitor key performance indicators (KPIs) at a glance.

- DynamoDB Metrics: DynamoDB provides a range of built-in metrics that are automatically collected and reported to CloudWatch. These metrics are available at the table, index, and operation levels. Key metrics include:

- Capacity utilization: Tracks the percentage of provisioned capacity being used.

- Item count: Monitors the number of items stored in a table.

- Conditional check failed requests: Tracks the number of failed conditional check requests.

These metrics provide detailed insights into the behavior of DynamoDB tables, helping users to identify potential issues and optimize their applications.

- DynamoDB Streams: DynamoDB Streams captures a time-ordered sequence of item-level modifications made to a DynamoDB table. This feature is essential for:

- Data replication: Replicating data to other tables or databases.

- Event-driven architectures: Triggering actions based on data changes.

- Auditing: Tracking changes to data over time.

Streams can be used to monitor data changes in real-time, enabling users to react to events and maintain data consistency.

- AWS Management Console: The AWS Management Console provides a user-friendly interface for monitoring DynamoDB tables. The console displays key metrics, such as:

- Capacity utilization.

- Request metrics.

- Error rates.

The console also provides tools for analyzing query performance and identifying potential bottlenecks.

Backup and Disaster Recovery for Aurora Serverless

Aurora Serverless offers built-in features for backup and disaster recovery, ensuring data durability and availability.

- Automated Backups: Aurora Serverless automatically creates and stores backups of database clusters. These backups are:

- Point-in-time recovery: Enables restoration of a database to a specific point in time within the backup retention period.

- Retention period: Configurable to meet compliance and business requirements.

- Storage: Backups are stored in Amazon S3, providing durability and cost-effectiveness.

Automated backups simplify the process of data recovery and minimize the risk of data loss.

- Manual Snapshots: Users can create manual snapshots of their Aurora Serverless clusters. These snapshots:

- Provide a consistent point-in-time copy of the database.

- Are useful for long-term data retention and archival purposes.

- Can be used to create new database clusters.

Manual snapshots offer greater control over the backup process.

- Cross-Region Replication: Aurora Serverless supports cross-region replication, enabling disaster recovery across different AWS regions. This feature:

- Creates a read replica in a different region.

- Provides high availability and fault tolerance.

- Enables rapid failover in the event of a regional outage.

Cross-region replication is essential for ensuring business continuity and minimizing downtime.

- Disaster Recovery Strategies:

- Active-Passive: Deploying Aurora Serverless in two regions, with one region serving as the primary and the other as a standby. In case of a primary region failure, the standby region can be promoted to become the primary. This strategy minimizes data loss and downtime.

- Active-Active: Using cross-region read replicas to distribute read traffic across multiple regions, providing high availability and improved performance. Write operations are typically directed to a single primary region.

The choice of strategy depends on the specific requirements of the application, including Recovery Time Objective (RTO) and Recovery Point Objective (RPO).

Backup and Disaster Recovery for DynamoDB

DynamoDB offers several mechanisms for data protection and disaster recovery, designed to ensure data durability and application resilience.

- Point-in-Time Recovery (PITR): DynamoDB provides Point-in-Time Recovery, which allows users to restore a table to any point in time within a retention period of up to 35 days. PITR:

- Protects against accidental data modifications or deletions.

- Simplifies data recovery.

- Is enabled by default.

PITR is a critical feature for data protection and minimizing data loss.

- On-Demand Backups: Users can create on-demand backups of their DynamoDB tables. These backups:

- Provide a consistent copy of the data at a specific point in time.

- Can be used for long-term data retention and archival purposes.

- Are stored in Amazon S3.

On-demand backups offer greater control over the backup process.

- DynamoDB Global Tables: DynamoDB Global Tables provide a fully managed, multi-region, multi-active database that replicates data automatically across AWS regions. Global Tables:

- Enable applications to access data with low latency from multiple regions.

- Provide high availability and fault tolerance.

- Simplify disaster recovery by automatically replicating data to other regions.

Global Tables are a powerful solution for applications that require global reach and high availability.

- Disaster Recovery Strategies:

- Using Global Tables: The simplest approach, where DynamoDB handles data replication across regions. Applications can automatically failover to a different region in case of an outage. This strategy offers the lowest RTO and RPO.

- Using On-Demand Backups and Cross-Region Restore: Regularly creating on-demand backups and restoring them to a different region. This strategy provides more control but has a higher RTO.

- Custom Replication with DynamoDB Streams: Building a custom solution using DynamoDB Streams to replicate data to another DynamoDB table in a different region or another database. This offers the most flexibility but requires significant development effort.

The choice of strategy depends on the specific requirements of the application, including RTO and RPO.

Closing Summary

In conclusion, the choice between Aurora Serverless and DynamoDB hinges on a careful evaluation of your application’s requirements. Aurora Serverless shines when dealing with relational data, predictable workloads, and the need for automatic scaling of compute resources. DynamoDB is the superior choice for applications that require high throughput, low latency, and a flexible NoSQL data model. By considering factors such as data storage, access patterns, scalability, performance, and cost, developers can strategically select the database that best aligns with their project goals, leading to optimized application performance and cost-effectiveness.

FAQ Summary

What is the primary difference in data models between Aurora Serverless and DynamoDB?

Aurora Serverless uses a relational data model with tables, rows, and columns, enforcing schema and relationships. DynamoDB uses a NoSQL data model, supporting key-value, document, and wide-column stores, offering schema flexibility but requiring careful design for data access.

When should I choose Aurora Serverless over DynamoDB for a WordPress site?

Aurora Serverless is generally preferable if your WordPress site has complex relational data requirements (e.g., intricate plugin data) or requires full SQL support. DynamoDB is better suited for high-traffic sites with simpler data structures, primarily content retrieval, and need for extreme scalability.

How does the cost structure differ between Aurora Serverless and DynamoDB?

Aurora Serverless pricing is based on compute (database capacity units) and storage, with compute scaling automatically. DynamoDB pricing is based on provisioned or on-demand read/write capacity units, storage, and data transfer. Aurora’s cost can be more predictable with stable workloads, while DynamoDB is often more cost-effective for highly variable traffic.

What are the main security considerations for each database?

Both offer robust security features, including encryption at rest and in transit. Aurora Serverless leverages standard relational database security features like IAM roles and network access controls. DynamoDB provides fine-grained access control using IAM and supports features like data masking and encryption with customer-managed keys.